AWS for M&E Blog

Guidance for a media lake on AWS: Accelerate media operations with agentic AI

Many media organizations face complexity in their content operations. Teams can spend weeks coordinating asset management, content analysis, and distribution across disconnected systems. Manual handoffs between creative, operations, and distribution teams create bottlenecks that delay progress and limit business agility. A single media workflow can involve multiple teams and dozens of tools, and can take weeks when every step depends on a manual process.

These challenges compound as content volumes grow and teams look for more efficient ways to produce and manage media. Media companies need intelligent automation that goes beyond simple workflow orchestration. They need solutions that can understand context, make decisions, and coordinate complex multi-step processes through natural language interactions.

Introducing agentic AI for a media lake

In July of this year, we announced a Guidance for a media lake on Amazon Web Services (AWS). It helps customers achieve unified content management, semantic search across media libraries, and automated metadata enrichment and discovery.

We are updating the guidance with agentic AI capabilities through Amazon Bedrock AgentCore (Preview), transforming how media teams interact with their media and workflows. Agentic AI abilities in a media lake allow for multiple agents to complete workflows, increasing business agility. Instead of navigating multiple interfaces and manually coordinating between systems, teams can now execute multi-step workflows through natural language requests.

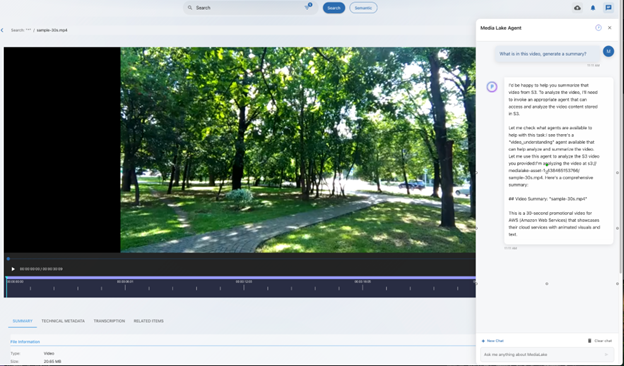

Consider these scenarios: A producer can request, “Send the final cut to our post-production partner with 4K masters and proxy files,” or a marketer can ask, “What is in this video? Generate a summary.” The guidance understands the context, identifies the required assets, coordinates the necessary services, and executes the complete workflow, all from a single conversational interface.

Architecture and framework

The guidance implements a three-tier agent architecture that balances specialization with coordination, providing both efficiency and flexibility in handling diverse media operations.

Deployment and infrastructure

During deployment, AWS Cloud Development Kit (AWS CDK) orchestrates the creation of the agent infrastructure. AWS CodeBuild packages each agent as a Docker container and stores it in Amazon Elastic Container Registry (Amazon ECR). Each deployment automatically checks for new agent versions. If updates are available, CodeBuild builds and deploys the updated agent containers so that the guidance uses the latest capabilities. AWS Lambda functions then instantiate Bedrock AgentCore runtimes for each agent, while Amazon DynamoDB maintains the agent registry, which tracks available agents, their capabilities, and their operational status.
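To make the version check concrete, here is a minimal sketch of how a deployment step might decide which agent containers need rebuilding. It is not the guidance's implementation: the `AgentRecord` fields, the agent names, and the `agents_needing_rebuild` helper are all hypothetical, and an in-memory list stands in for the agent registry.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    """One row in a hypothetical agent registry (field names are assumptions)."""
    name: str
    deployed_version: str
    status: str = "ACTIVE"


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically, not as text."""
    return tuple(int(part) for part in version.split("."))


def agents_needing_rebuild(registry: list[AgentRecord], latest: dict[str, str]) -> list[str]:
    """Return the names of agents whose latest available version is newer than the deployed one."""
    stale = []
    for record in registry:
        available = latest.get(record.name)
        if available and parse_version(available) > parse_version(record.deployed_version):
            stale.append(record.name)
    return stale


# Illustrative data only: these agents and versions are made up for the example.
registry = [
    AgentRecord("coordinator", "1.2.0"),
    AgentRecord("video-understanding", "1.9.0"),
    AgentRecord("asset-distribution", "2.0.1"),
]
latest_versions = {
    "coordinator": "1.2.0",
    "video-understanding": "1.10.0",
    "asset-distribution": "2.0.1",
}

print(agents_needing_rebuild(registry, latest_versions))  # ['video-understanding']
```

Comparing parsed tuples rather than raw strings matters here: as text, "1.10.0" sorts before "1.9.0", which would hide the update.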

Tier 1: Coordinator agent

The coordinator agent serves as the primary interface, interpreting user requests and orchestrating the appropriate sequence of specialized agents. It maintains broad knowledge of media operations and understands how to decompose complex requests into actionable tasks.

Tier 2: Specialized domain agents

Secondary agents handle specific domains such as video understanding, asset management, or content distribution. Each agent possesses deep expertise in its domain and knows which tools and services can accomplish specific tasks. For example, a video understanding agent can generate metadata, identify key moments, create highlight reels, and search for specific content within videos.

Tier 3: Service and partner integration agents

The third tier consists of agents that interface directly with AWS services and Partner solutions. These agents translate high-level requests into specific API calls and service interactions, handling authentication, parameter formatting, and error management.
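The three tiers can be pictured as nested dispatch. The sketch below is illustrative only and assumes the request has already been decomposed into an ordered plan (in the guidance, the coordinator agent does that interpretation from natural language). Every function name, action name, and task field is hypothetical, and the integration agents are stubs rather than real service calls.

```python
from typing import Callable, Dict, List


# Tier 3: integration agents translate a task into a concrete service call (stubbed here).
def storage_integration(task: dict) -> dict:
    return {"service": "storage", "action": task["action"], "asset": task["asset"]}


def transfer_integration(task: dict) -> dict:
    return {"service": "transfer", "action": task["action"], "asset": task["asset"]}


# Tier 2: domain agents know which integration can accomplish a task in their domain.
def asset_management_agent(task: dict) -> dict:
    return storage_integration(task)


def distribution_agent(task: dict) -> dict:
    return transfer_integration(task)


# Hypothetical mapping from an action to the domain agent that owns it.
DOMAIN_AGENTS: Dict[str, Callable[[dict], dict]] = {
    "locate": asset_management_agent,
    "send": distribution_agent,
}


# Tier 1: the coordinator runs the plan in order, routing each task to a domain agent.
def coordinator(plan: List[dict]) -> List[dict]:
    results = []
    for task in plan:
        agent = DOMAIN_AGENTS.get(task["action"])
        if agent is None:
            raise ValueError(f"no agent registered for action {task['action']!r}")
        results.append(agent(task))
    return results


plan = [
    {"action": "locate", "asset": "final_cut.mov"},
    {"action": "send", "asset": "final_cut.mov"},
]
for result in coordinator(plan):
    print(result)
```

Keeping the tiers separate means a new Partner integration only touches the third tier, while the coordinator and domain agents stay unchanged.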

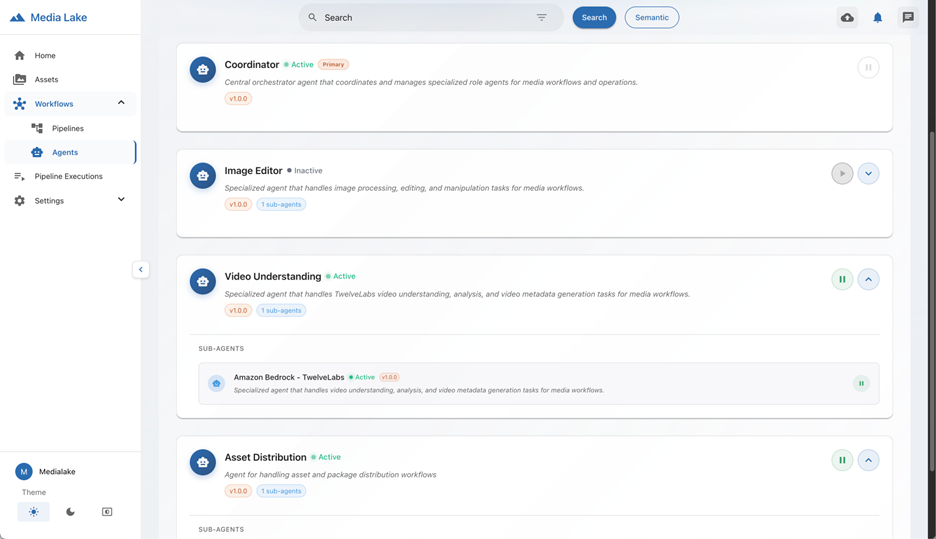

Agent management and control

The guidance provides control over agents and agent capabilities through a centralized management interface. Administrators can enable or disable individual agents based on organizational needs, controlling which services and operations are accessible to users. Through the agent registry, teams can activate specific agents, choose which services or tools those agents can access, and disable agents that are no longer needed.

This flexibility helps organizations maintain full governance over their automated workflows while adapting the guidance to changing operational requirements. For example, a team might enable video analysis agents during the production phases, but disable distribution agents until final approval processes are complete.
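As a rough illustration of this kind of governance, the sketch below models a registry that gates every tool call on two checks: whether the agent is enabled and whether the tool is on its allow list. The `AgentRegistry` class, its methods, and the agent and tool names are assumptions made for the example, not the guidance's actual interface.

```python
from dataclasses import dataclass, field


@dataclass
class AgentEntry:
    """Hypothetical registry entry: an on/off switch plus an allow list of tools."""
    enabled: bool = True
    allowed_tools: set = field(default_factory=set)


class AgentRegistry:
    def __init__(self) -> None:
        self._agents = {}

    def register(self, name: str, tools: set) -> None:
        self._agents[name] = AgentEntry(allowed_tools=set(tools))

    def set_enabled(self, name: str, enabled: bool) -> None:
        self._agents[name].enabled = enabled

    def can_use(self, name: str, tool: str) -> bool:
        """An agent may call a tool only if it is registered, enabled, and allowed that tool."""
        entry = self._agents.get(name)
        return entry is not None and entry.enabled and tool in entry.allowed_tools


agents = AgentRegistry()
agents.register("video-analysis", {"generate_metadata"})
agents.register("distribution", {"send_package"})

# During production: analysis is available, distribution is held back until approval.
agents.set_enabled("distribution", False)
print(agents.can_use("video-analysis", "generate_metadata"))  # True
print(agents.can_use("distribution", "send_package"))         # False

# After final approval, distribution is switched back on.
agents.set_enabled("distribution", True)
print(agents.can_use("distribution", "send_package"))         # True
```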

Integration examples

The value of this guidance’s architecture becomes clear through real-world integrations with AWS Partner Network (APN) partners and AWS services, which are being demonstrated at IBC 2025.

Nomad Media: Intelligent content management

Nomad Media demonstrates how Partners can leverage the guidance’s conversational interface and integrations without building custom connections. When a Nomad Media user requests, “Send these assets to Robert Raver,” Nomad Media calls the guidance’s media lake agent framework to orchestrate the complete workflow:

- The coordinator agent interprets the request from Nomad Media and identifies required actions

- The asset management agent accesses the specified assets that Nomad Media has made available to the media lake

- The integration agent determines the appropriate distribution method for delivering assets to the recipient

- The media lake distribution agents handle the transfer using their integrated services (such as MASV or other distribution solutions)

- The system confirms successful delivery and updates the transfer status

Through this integration, the Nomad Media platform gains immediate access to the media lake integrations with other AWS services, Partners, and solutions for distribution and processing services. Instead of building individual integrations with multiple vendors, Nomad Media can focus on their core expertise while the guidance handles the complex orchestration of asset distribution across various platforms and services. Nomad Media users can leverage sophisticated distribution workflows through streamlined natural language commands within their familiar Nomad Media interface.

MASV: Automated file transfer and distribution

MASV demonstrates how complex distribution workflows become streamlined conversational requests. MASV specializes in accelerated large file transfers, a critical capability for media organizations distributing content globally.

Through integration with the guidance, the MASV API and Amazon Simple Storage Service (Amazon S3) bucket access, users can execute sophisticated distribution workflows. They can use natural language commands such as, “Package and provide a download link so I can send this master to our post-production team via MASV.”

The guidance agent framework decomposes this request into coordinated actions:

- The asset management agent identifies the specified content based on the user’s current context and selection.

- The asset distribution agent orchestrates multiple API calls to create delivery-ready packages—gathering required assets from S3 and organizing them according to destination requirements.

- The distribution agent generates secure download links and assembles the complete package.

- The agent calls the MASV API directly, passing the S3 bucket location, authentication credentials, destination endpoints, and transfer parameters.

- MASV leverages parallel TCP connections, dynamic routing, and TLS 1.2 encryption on AWS infrastructure to maximize global transfer speeds for terabyte-scale media workflows.

Throughout the process, the guidance’s agents monitor progress through API status checks and handle exceptions. If issues occur, the agent framework coordinates retry logic through the MASV API and notifies relevant stakeholders. Upon transfer completion, the agents update asset status in the media lake catalog, provide the download link to the user, and can trigger any dependent workflows.
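The monitoring and retry behavior described above can be sketched as a polling loop with exponential backoff. This is an assumption-laden illustration, not MASV's API or the guidance's code: `run_transfer_with_retry`, the status strings, and the `FakeTransferService` stand-in are all hypothetical, and a real integration would replace the two callables with actual API calls.

```python
import time
from typing import Callable


def run_transfer_with_retry(
    start_transfer: Callable[[], str],
    get_status: Callable[[str], str],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    poll_interval: float = 1.0,
) -> str:
    """Start a transfer, poll until it finishes, and retry failed attempts with backoff."""
    for attempt in range(1, max_attempts + 1):
        transfer_id = start_transfer()
        status = get_status(transfer_id)
        while status == "IN_PROGRESS":
            time.sleep(poll_interval)
            status = get_status(transfer_id)
        if status == "COMPLETE":
            return transfer_id
        if attempt < max_attempts:
            # Wait longer after each failure: base_delay, 2x, 4x, ...
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"transfer failed after {max_attempts} attempts")


class FakeTransferService:
    """Stand-in for a transfer API: the first transfer fails, later ones complete."""

    def __init__(self) -> None:
        self.started = 0

    def start(self) -> str:
        self.started += 1
        return f"transfer-{self.started}"

    def status(self, transfer_id: str) -> str:
        return "FAILED" if transfer_id == "transfer-1" else "COMPLETE"


service = FakeTransferService()
completed = run_transfer_with_retry(service.start, service.status, base_delay=0, poll_interval=0)
print(completed)  # transfer-2
```

The delays are set to zero here only so the example runs instantly. In practice, the point where the function raises is where stakeholder notification would hook in.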

This integration eliminates manual coordination between asset preparation and distribution teams, while giving MASV access to the full set of Partners and services integrated with the guidance’s media lake.

TwelveLabs: Advanced video understanding

TwelveLabs brings video analysis and understanding capabilities to the guidance. Through the Pegasus model on Amazon Bedrock, users can request complex video understanding tasks using natural language commands.

When a user requests, “Find all scenes with penguins in Antarctica” or “Find the best scenes for a highlight reel,” the media lake agent framework orchestrates the analysis workflow:

- The coordinator agent interprets the request and routes it to the video understanding agent

- The video understanding agent analyzes the content to identify relevant scenes based on the specified criteria

- The agent invokes the TwelveLabs Pegasus model on Amazon Bedrock to perform deep video analysis

- The system processes results including scene identification, context analysis, and content relevance

- The agent compiles the findings and returns timestamped segments along with the generated metadata

The Amazon Bedrock TwelveLabs sub-agent handles the Pegasus model invocations and video processing operations, while the coordinator confirms results flow into subsequent workflow steps. This integration helps teams extract insights from video libraries, automate content discovery, and accelerate workflows. Conversational commands replace tasks that would traditionally require manual review of hours of footage.
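As an illustration of the final compile step, the sketch below filters scene matches by relevance and formats them as timestamped segments. The `Segment` shape, the relevance score, and the threshold are assumptions made for the example. The source does not describe the actual model output format, so the input data here is made up.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Segment:
    """Hypothetical scene match: times in seconds plus a relevance score from 0 to 1."""
    start: float
    end: float
    label: str
    score: float


def format_timestamp(seconds: float) -> str:
    """Format whole seconds as HH:MM:SS."""
    total = int(seconds)
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


def compile_segments(segments: List[Segment], min_score: float = 0.5) -> List[Dict[str, str]]:
    """Drop low-relevance matches and return the rest in playback order."""
    kept = sorted((s for s in segments if s.score >= min_score), key=lambda s: s.start)
    return [
        {"start": format_timestamp(s.start), "end": format_timestamp(s.end), "label": s.label}
        for s in kept
    ]


# Illustrative matches for a request such as "Find all scenes with penguins in Antarctica".
matches = [
    Segment(95.0, 110.5, "penguins on ice", 0.91),
    Segment(12.0, 20.0, "title card", 0.20),
    Segment(30.0, 42.0, "penguin colony", 0.78),
]

for row in compile_segments(matches):
    print(row)
```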

Conclusion

Agentic AI in media operations represents more than automation. It augments human creativity and decision-making with intelligent systems that understand context and intent.

The integration of agentic AI into media operations through the Guidance for a media lake on AWS represents a shift in how media organizations manage complex workflows. By combining AWS services with Partner solutions through an intelligent, context-aware conversational interface, organizations can accelerate media operations and reduce operational complexity while maintaining precise control over their processes.

The three-tier agent architecture demonstrates how specialized AI agents can work in concert to interpret natural language requests, coordinate multiple services, and execute workflows. As media workflows continue to grow in complexity, this agentic AI framework provides a flexible foundation that can evolve alongside changing industry needs and technological capabilities.

Get started today

Ready to transform your media operations with agentic AI? Here are two ways to begin:

- Connect with experts: Contact our AWS Media & Entertainment specialists to discuss how Guidance for a media lake on Amazon Web Services (AWS) can address your specific workflow challenges.

- Come see us at IBC 2025: Visit our AWS at IBC 2025 page to learn about the live demonstrations of the agentic AI capabilities in Guidance for a media lake on AWS at the AWS booth. Our experts can walk you through real-world implementations with AWS Partners such as Nomad Media, MASV, and TwelveLabs. You can also book a meeting while you are at the show to learn how AWS can accelerate your business.

The future of media operations is intelligent, adaptive, and accessible to every member of your organization. Start your journey today.