AWS for M&E Blog

Integrating AI-based audio dubbing into live streaming with AWS Media Services and CAMB.AI

As demand for live video streams grows, broadcasters and producers face significant complexity and cost in making content available to global, multilingual audiences. Live dubbing of an audio track into another spoken language has traditionally been a highly manual process, which adds to that cost and complexity. Thanks to recent advances in AI dubbing, and the scalability and flexibility of the Amazon Web Services (AWS) cloud, new solutions can address these legacy pain points. AI audio dubbing lets users distribute a live stream in multiple languages, with the dubbed audio automatically generated and embedded in the original video transport stream.

Traditional dubbing is expensive because it requires professional voice actors, sound engineers, and complex production workflows. CAMB.AI’s AI-powered platform automates the dubbing process, significantly lowering costs by eliminating the need for extensive human resources and studio setups. Furthermore, managing live stream latency and synchronization across multiple dubbed audio tracks is challenging.

In this post, we introduce AWS Partner CAMB.AI, which offers an AI dubbing solution that plugs into an AWS Media Services architecture. We explain how AI dubbing can integrate into a resilient and redundant live streaming architecture to deliver live sports, news, and events to audiences worldwide. We also preview the steps needed to get started.

About CAMB.AI

CAMB.AI, an AWS Partner, is an advanced AI speech synthesis and translation company. CAMB.AI’s Dub Stream platform provides AI-powered, real-time dubbing into over 140 languages while preserving speaker tone and emotional nuance. Adding alternate dubbed audio tracks to a live stream gives global audiences more options to access it. CAMB.AI is a good fit for AWS users because it integrates with AWS Elemental Media Services, and users can embed one or multiple dubbed language tracks per stream. CAMB.AI connects to a live streaming workflow through AWS Elemental MediaConnect, which supports low-latency, secure delivery of transport streams.

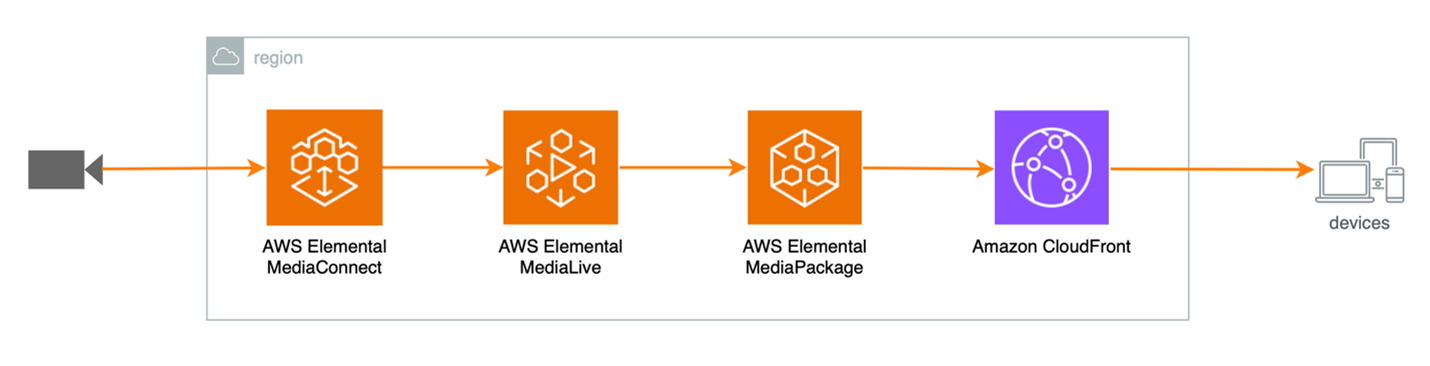

Solution overview: live streaming architecture with audio dubbing

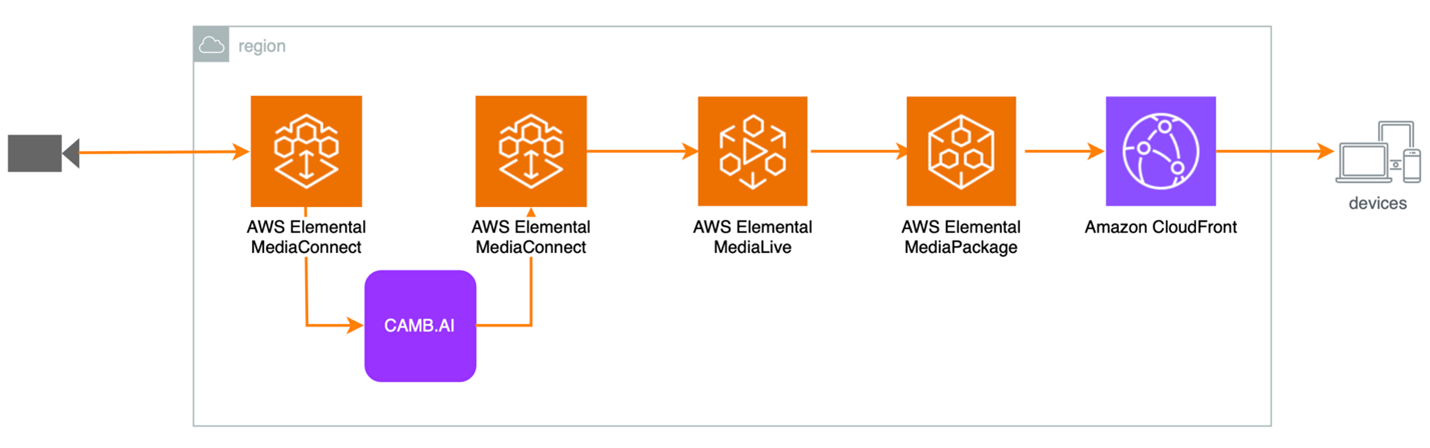

The following figure shows a live streaming workflow using AWS Media Services: MediaConnect, AWS Elemental MediaLive, AWS Elemental MediaPackage, and Amazon CloudFront. MediaConnect provides secure transport of video from on-premises sources to the cloud. MediaLive transcodes the video into multiple adaptive bitrate streams. MediaPackage serves as the origin server and packager, and CloudFront is the content delivery network (CDN) that distributes the live stream to viewers. Amending a live stream after the transcoding stage is complex, so we recommend integrating the dubbed languages early in the flow, before the stream is transcoded by a service like MediaLive.

CAMB.AI’s Dub System accepts Secure Reliable Transport (SRT) protocol streams for ingest and egress, and MediaConnect natively supports SRT. A recommended flow is shown in the following figure: a contribution video encoder streams to a MediaConnect endpoint, CAMB.AI picks up that stream, and then passes it back to MediaConnect before it flows to MediaLive. Users then configure MediaLive to ingest the added dubbed audio tracks from the incoming SRT stream and transcode them to the appropriate output format for delivery. This enables seamless integration with an industry-standard, highly scalable and available streaming architecture using MediaLive, MediaPackage, and CloudFront. Modern HTML5 video players on client devices also typically support alternate-language audio tracks natively and make them selectable to the end user through the player GUI.
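As a rough illustration of the ingest side of this flow, the following sketch builds the parameters for a MediaConnect flow with an SRT listener source (for the contribution encoder) and an SRT listener output (for the dubbing system to pull from). The flow name, ports, and CIDR ranges are hypothetical placeholders, and whether listener or caller mode suits each hop depends on your network setup, so treat this as a starting point to check against the MediaConnect documentation rather than a definitive configuration.

```python
def build_flow_request(flow_name, ingest_port, encoder_cidr, output_port, dub_cidr):
    """Return keyword arguments shaped for the MediaConnect CreateFlow API.

    All values passed in are illustrative assumptions, not values
    prescribed by AWS or CAMB.AI.
    """
    return {
        "Name": flow_name,
        # Source: the contribution encoder connects to this SRT listener.
        "Source": {
            "Name": f"{flow_name}-encoder",
            "Protocol": "srt-listener",
            "IngestPort": ingest_port,
            "WhitelistCidr": encoder_cidr,
        },
        # Output: the dubbing system connects to this SRT listener to pull the stream.
        "Outputs": [
            {
                "Name": f"{flow_name}-to-dubbing",
                "Protocol": "srt-listener",
                "Port": output_port,
                "CidrAllowList": [dub_cidr],
            }
        ],
    }


# Hypothetical values using documentation-reserved IP ranges.
request = build_flow_request(
    "live-event", 5000, "198.51.100.0/24", 5001, "203.0.113.10/32"
)
```

With boto3 installed and AWS credentials configured, these parameters could be passed as `boto3.client("mediaconnect").create_flow(**request)`. A second flow, built the same way, would receive the dubbed stream on its way to MediaLive.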

Getting started with CAMB.AI

Once you have an account and access credentials from CAMB.AI, you can set up the AI dubbing integration.

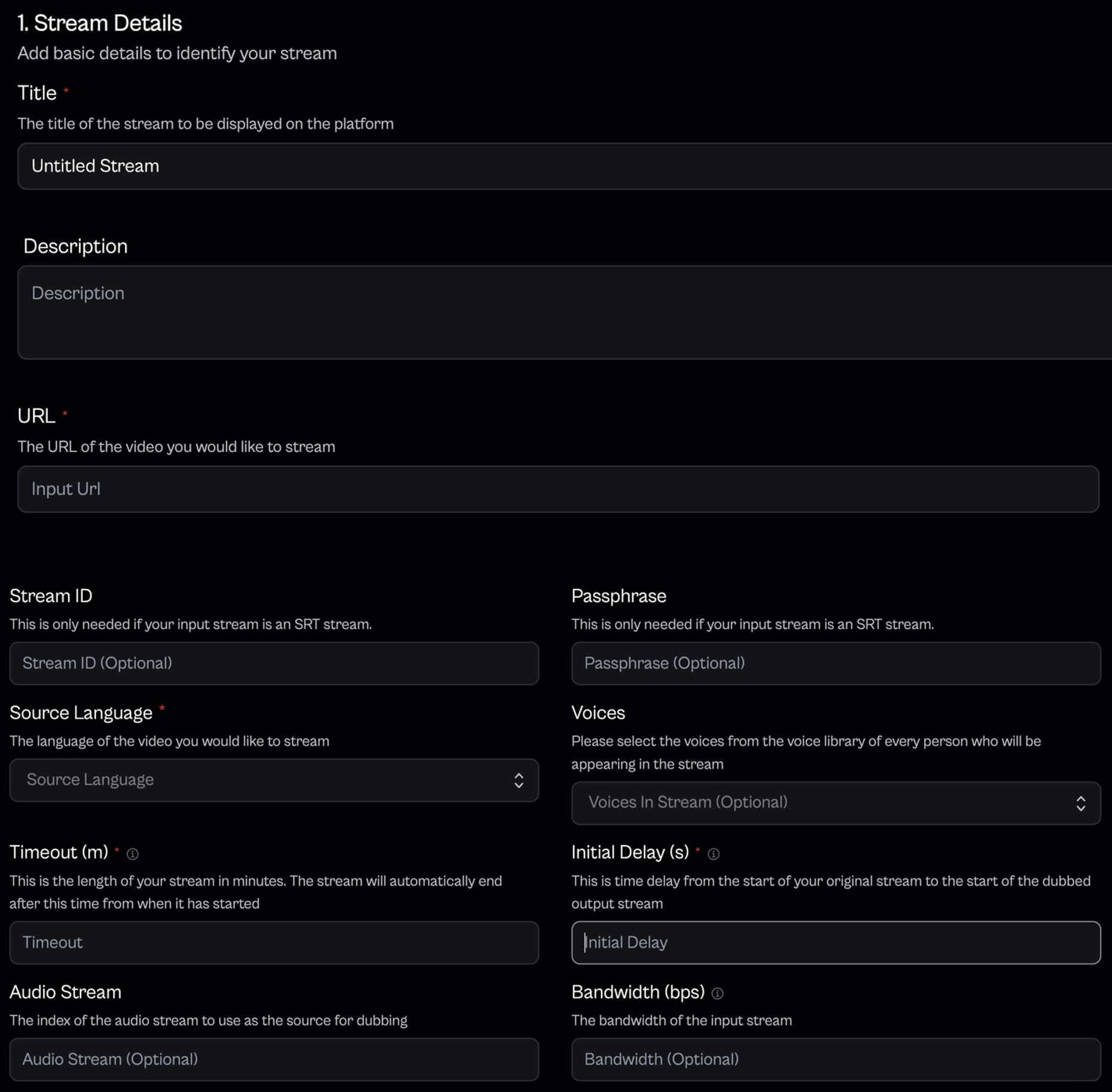

The following figure shows the user interface for configuring the stream input to Dub System. The URL field is where you enter the SRT address, for example srt://<ip address>:<port number>; the Stream ID and Passphrase fields are optional. Refer to the service documentation for specific details about configuring an SRT listener or caller within MediaConnect.
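Before pasting an address into the URL field, it can help to sanity-check it. The following is a minimal sketch, not part of the CAMB.AI product, that checks an address against the srt://<ip address>:<port number> form and checks a passphrase against the 10 to 79 character range that the SRT protocol allows. The function names and sample address are hypothetical.

```python
from urllib.parse import urlsplit


def parse_srt_url(url):
    """Split an srt://host:port address into (host, port), or raise ValueError."""
    parts = urlsplit(url)
    if parts.scheme != "srt":
        raise ValueError(f"expected an srt:// address, got: {url!r}")
    # parts.port itself raises ValueError for a non-numeric or out-of-range port.
    if not parts.hostname or parts.port is None:
        raise ValueError(f"address must include a host and a port: {url!r}")
    return parts.hostname, parts.port


def is_valid_srt_passphrase(passphrase):
    """SRT passphrases must be between 10 and 79 characters long."""
    return 10 <= len(passphrase) <= 79


# Hypothetical address using a documentation-reserved IP range.
host, port = parse_srt_url("srt://203.0.113.10:5000")
```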

The Source Language dropdown allows you to choose the language of the original audio. The Dub System also allows users to choose a specific voice through the Voices dropdown menu.

After the stream input is configured, the next step is to configure the AI-dubbed audio output. CAMB.AI does not modify your original video and audio. Instead, the solution adds the AI-generated audio as additional audio channels within the SRT stream.

The following figure shows the options for configuring an output stream in Dub System. Choose the appropriate protocol in the Stream Type field, and choose the target languages for dubbing or translation in the Languages field.

After configuring the input and output parameters, initiate your stream by choosing Start Stream. At this point, your stream is augmented with AI-generated dubbed audio and integrated into your live streaming workflow. To configure MediaLive to use the right tracks, refer to the Audio selectors topic under Input settings in the MediaLive user guide.
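On the MediaLive side, each dubbed track needs its own audio selector on the input attachment. The following sketch shows one way to build the selector list, assuming the dubbed tracks can be identified by PID in the incoming transport stream. The PID values and selector names are hypothetical; inspect your actual stream to find the real PIDs, or consider language-based selection if the tracks carry language codes.

```python
def build_audio_selectors(track_pids):
    """Build MediaLive AudioSelectors entries from a {label: pid} mapping.

    The labels and PIDs are assumptions for illustration; they are not
    values documented by CAMB.AI or AWS.
    """
    return [
        {
            "Name": f"audio-{label}",
            "SelectorSettings": {"AudioPidSelection": {"Pid": pid}},
        }
        for label, pid in track_pids.items()
    ]


# Hypothetical PIDs: the original English track plus one dubbed Spanish track.
selectors = build_audio_selectors({"eng": 482, "spa": 483})
input_settings = {"AudioSelectors": selectors}
```

The resulting `input_settings` dictionary has the shape that the `InputSettings` field of a MediaLive input attachment expects. Each selector name is then referenced from an audio description in the channel's encoder settings.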

Conclusion

This post described how to incorporate AI dubbing technology, such as CAMB.AI, into a live streaming workflow. This integration enables users to deliver AI-dubbed, localized streams to global audiences. To learn more about creating a live event using AWS, refer to our Live Streaming on AWS solution, which can help with the architecture. If you’re interested in getting started with AWS Media Services, visit the product page. For CAMB.AI’s audio dubbing solution, visit CAMB.AI.

Further reading

For those looking beyond audio dubbing and wanting to integrate captions or subtitles into their live stream, the architecture differs based on specific requirements and use cases. For more information, refer to the following post: