AWS for M&E Blog

Glide GAIA powers responsible newsroom AI with Amazon Bedrock Guardrails

In the ever-competitive market of news publishing, editorial efficiency has become key to gaining an advantage. Generative AI has emerged as a powerful tool, allowing editors and writers to offload repetitive tasks so they can concentrate on keeping readers better informed. However, adoption of this technology in newsrooms has been cautious, as publishers rightfully prioritize maintaining editorial standards, reader trust, and copyright compliance.

Enter Glide Publishing Platform (Glide), which has taken a bold step forward in addressing these concerns. By integrating Amazon Bedrock Guardrails (Guardrails) into their Content Management System (CMS), Glide is enabling newsrooms to harness the power of AI while maintaining rigorous control over content quality and ethical standards.

Glide’s AI journey: from GAIA to Guardrails

Glide introduced its suite of generative AI tools, known as Glide AI Assistant (GAIA), in 2023, using a range of best-fit large language models (LLMs) available through Amazon Bedrock. Amazon Bedrock is a fully managed service from Amazon Web Services (AWS) that offers a choice of high-performing foundation models from leading AI companies and Amazon.

From the outset, GAIA was designed with a core focus on maintaining editorial control and content quality—a critical requirement for news publishers. Building on this foundation, Glide has now doubled down on its commitment to content integrity by incorporating Amazon Bedrock Guardrails into the platform.

Guardrails: a safety net for AI-powered journalism

Amazon Bedrock Guardrails provides configurable safeguards to help safely build generative AI applications at scale with a consistent and standard approach used across a wide range of foundation models. For news organizations, this is particularly crucial, as even a single AI-generated headline could potentially damage hard-earned trust or lead to legal complications.

GAIA uses different foundation models for different use cases. Each model has its own tendency toward hallucinations or factual errors, along with its own built-in protections and vulnerabilities. Guardrails gives customers a unified, consistent safety and compliance layer, whichever models are in use.

Key features of Amazon Bedrock Guardrails include:

- Granular content filtering against threats, hate speech, and profanity

- Topic and subject filtering based on customizable parameters

- Personal and sensitive data protection, including Personally Identifiable Information (PII) filtering

- Safeguards against prompt manipulation and malicious attacks

- Mitigation of AI model hallucinations in generated responses
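The function below is a hedged sketch of how these features map onto configuration. It assembles keyword arguments for the `create_guardrail` operation of the boto3 `bedrock` (control-plane) client. The policy field names follow the CreateGuardrail API. Every strength, PII entity, threshold, and blocked-message string is a hypothetical newsroom choice, and topic filters are left out for brevity.

```python
from typing import Any, Dict


def build_newsroom_guardrail_request(name: str) -> Dict[str, Any]:
    """Return kwargs for bedrock.create_guardrail (hypothetical newsroom settings)."""
    return {
        "name": name,
        "description": "Illustrative newsroom guardrail (hypothetical settings)",
        # Content filters: detection strength on prompts (input) and responses (output).
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": "HATE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                {"type": "INSULTS", "inputStrength": "MEDIUM", "outputStrength": "HIGH"},
                {"type": "VIOLENCE", "inputStrength": "LOW", "outputStrength": "MEDIUM"},
                # Prompt-attack filtering applies to inputs only, so output must be NONE.
                {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
            ]
        },
        # Profanity handled through the AWS-managed word list.
        "wordPolicyConfig": {"managedWordListsConfig": [{"type": "PROFANITY"}]},
        # PII: mask contact details, block anything resembling a US Social Security number.
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},
                {"type": "PHONE", "action": "ANONYMIZE"},
                {"type": "US_SOCIAL_SECURITY_NUMBER", "action": "BLOCK"},
            ]
        },
        # Contextual grounding: responses scoring below these thresholds are blocked.
        "contextualGroundingPolicyConfig": {
            "filtersConfig": [
                {"type": "GROUNDING", "threshold": 0.8},
                {"type": "RELEVANCE", "threshold": 0.7},
            ]
        },
        "blockedInputMessaging": "This request conflicts with our editorial policy.",
        "blockedOutputsMessaging": "The generated text was withheld by editorial guardrails.",
    }
```

Passing the result to `boto3.client("bedrock").create_guardrail(**request)` creates a working draft. A follow-up `create_guardrail_version` call then snapshots a numbered version that applications can pin.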

Glide’s implementation: architecture and use cases

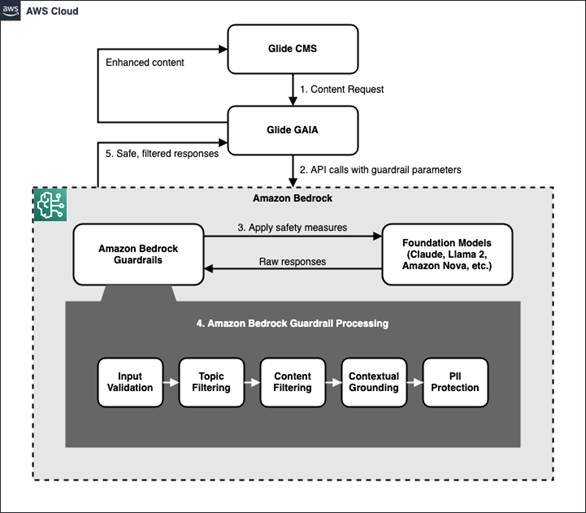

Figure 1 illustrates how Amazon Bedrock Guardrails integrates with Glide’s existing infrastructure.

How it works:

- Content requests originate in the Glide CMS

- GAIA processes these requests, making API calls to Amazon Bedrock with specified Guardrails parameters

- Amazon Bedrock applies safety measures through its Guardrails feature, interfacing with foundation models such as Anthropic’s Claude or Meta’s Llama 2

- The Guardrails processing pipeline performs:

  - Input validation: Screens incoming prompts and content against the configured policies before they reach the model, reducing errors and maintaining quality

  - Topic filtering: Blocks unauthorized or sensitive topics from appearing in articles, supporting compliance with editorial policies and article guidelines

  - Content filtering: Automatically identifies and flags potentially harmful or offensive language in articles, helping to preserve brand integrity

  - Contextual grounding: Checks that AI-generated news content is grounded in the supplied source material and relevant to the request, reducing the risk of publishing misinformation

  - PII protection: Detects and redacts personally identifiable information in news articles, protecting privacy and supporting regulatory compliance

- Safe, filtered responses are returned to GAIA and ultimately to Glide CMS

This architecture checks all AI-generated content against predefined standards before it reaches human editors.
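The final pipeline steps, filtering a draft and returning a safe response, can also be run as a standalone check. Below is a minimal sketch that uses the ApplyGuardrail operation of a boto3 `bedrock-runtime` client, which evaluates text against a guardrail without invoking a model. The `check_draft` helper and `GuardrailResult` type are hypothetical names. The sketch assumes the guardrail has a contextual grounding policy, which is why the source document and editor request are passed with the `grounding_source` and `query` qualifiers.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class GuardrailResult:
    intervened: bool
    text: str  # the original draft, or the guardrail's output if it intervened


def check_draft(client: Any, guardrail_id: str, guardrail_version: str,
                source_doc: str, request: str, draft: str) -> GuardrailResult:
    """Evaluate a generated draft with ApplyGuardrail before it goes back to the CMS.

    `client` is assumed to be a boto3 "bedrock-runtime" client, or any object
    exposing a compatible apply_guardrail method (such as a test double).
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source="OUTPUT",  # treat the text as model output, not a user prompt
        content=[
            # Reference material the draft should be grounded in.
            {"text": {"text": source_doc, "qualifiers": ["grounding_source"]}},
            # The editor's request, used by the relevance check.
            {"text": {"text": request, "qualifiers": ["query"]}},
            # The draft itself: the content being evaluated.
            {"text": {"text": draft, "qualifiers": ["guard_content"]}},
        ],
    )
    if response["action"] == "GUARDRAIL_INTERVENED":
        # Outputs carry the masked text or the configured blocked message.
        filtered = "".join(o.get("text", "") for o in response.get("outputs", []))
        return GuardrailResult(intervened=True, text=filtered)
    return GuardrailResult(intervened=False, text=draft)
```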

Example use cases:

- Legal reporting: A publisher using AI to summarize court documents can set rules to prevent verbatim reproduction of sensitive testimony, while still providing accurate summaries.

- Financial news: Guardrails can be configured to help ensure AI-generated content doesn’t inadvertently provide financial advice, supporting compliance with regulatory standards.

- User-generated content: Publishers can implement robust filters to automatically screen user submissions for inappropriate content or personal information.

- Fact-checking: The contextual grounding check helps catch hallucinated responses that the source material does not support, adding a layer of accuracy for news content.
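The financial-news case maps naturally onto a denied topic: a plain-language definition plus a few sample phrases. Below is a hedged sketch that builds one entry for the `topicsConfig` list of a guardrail's `topicPolicyConfig`. The topic name, definition, and examples are hypothetical, and the length limits are the documented values at the time of writing, so they are worth re-checking against the current API reference.

```python
from typing import Dict, List

# Denied-topic limits as documented at the time of writing (verify before relying on them).
MAX_DEFINITION_CHARS = 200
MAX_EXAMPLES = 5
MAX_EXAMPLE_CHARS = 100


def denied_topic(name: str, definition: str, examples: List[str]) -> Dict:
    """Build one topicsConfig entry for a topic the guardrail should deny."""
    if len(definition) > MAX_DEFINITION_CHARS:
        raise ValueError("topic definition is too long")
    if len(examples) > MAX_EXAMPLES or any(len(e) > MAX_EXAMPLE_CHARS for e in examples):
        raise ValueError("too many or too long sample phrases")
    return {"name": name, "definition": definition, "examples": examples, "type": "DENY"}


# Hypothetical policy for a financial news desk.
financial_advice = denied_topic(
    name="Personalized financial advice",
    definition=("Recommendations telling a reader to buy, sell, or hold specific "
                "securities, or how to allocate their personal savings or investments."),
    examples=[
        "You should buy shares in this company now.",
        "Move your retirement savings into this fund.",
    ],
)

# Passed as topicPolicyConfig to create_guardrail or update_guardrail.
topic_policy = {"topicsConfig": [financial_advice]}
```

Editors would refine the definition and examples over time, because the topic filter relies on them to tell reporting *about* markets apart from advice *to* readers.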

Implementation

The code to implement Amazon Bedrock Guardrails in Python is straightforward: each model request carries a guardrail identifier and version. The integration code also allows Guardrails to be toggled, providing flexibility in how and when these safety measures are applied.
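Below is a minimal sketch of such a toggle. It assumes the boto3 `bedrock-runtime` client and the Converse API, which is not necessarily what GAIA itself calls. `build_converse_request` and `generate` are hypothetical helper names. The guardrail is attached through Converse's `guardrailConfig` parameter, and the `guardrail_intervened` stop reason signals that the returned text came from the guardrail rather than the model.

```python
from typing import Any, Dict, Optional, Tuple


def build_converse_request(model_id: str, prompt: str,
                           guardrail_id: Optional[str] = None,
                           guardrail_version: str = "DRAFT",
                           use_guardrails: bool = True) -> Dict[str, Any]:
    """Assemble Converse kwargs, attaching a guardrail only when enabled."""
    request: Dict[str, Any] = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
    }
    if use_guardrails and guardrail_id:
        request["guardrailConfig"] = {
            "guardrailIdentifier": guardrail_id,
            # "DRAFT" is the working copy; pin a numbered version in production.
            "guardrailVersion": guardrail_version,
            "trace": "enabled",  # include the guardrail assessment in the response
        }
    return request


def generate(client: Any, **kwargs: Any) -> Tuple[str, bool]:
    """Call Converse; return the text and whether the guardrail intervened."""
    response = client.converse(**build_converse_request(**kwargs))
    text = response["output"]["message"]["content"][0]["text"]
    intervened = response.get("stopReason") == "guardrail_intervened"
    return text, intervened
```

In use, `client` would be `boto3.client("bedrock-runtime")`, and `model_id` would be any Bedrock model ID the account can access. Because the same `guardrailConfig` works across models, switching models does not change the safety policy.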

The real effort is in developing rules that will meet your editorial standards. What threshold levels should you set for content filtering? What definitions and sample phrases should you provide for topic filters? This is where your editors must apply their expertise to achieve the right content standards.

News is particularly challenging, since you often need to report on protests, armed conflicts, and crimes, which AI systems might flag as inappropriate. You will need to develop different sets of guardrails for different topics. The guardrails for a travel section of a news product, for example, will probably be quite different from the rules you establish for world affairs.
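One way to express both the threshold question and the per-section idea is to give each section its own guardrail, built from its own filter strengths, and have the CMS look up the right one per request. Below is a hedged sketch of that lookup. The section names, guardrail IDs, versions, and strength choices are all hypothetical, and only two content-filter categories are shown.

```python
from typing import Dict, List, Tuple

STRENGTHS = ("NONE", "LOW", "MEDIUM", "HIGH")


def content_filters(violence: str, hate: str = "HIGH") -> List[Dict[str, str]]:
    """Content-filter list for one section; only VIOLENCE and HATE vary in this sketch."""
    for s in (violence, hate):
        if s not in STRENGTHS:
            raise ValueError(f"unknown strength {s!r}")
    return [
        {"type": "VIOLENCE", "inputStrength": violence, "outputStrength": violence},
        {"type": "HATE", "inputStrength": hate, "outputStrength": hate},
    ]


# Hypothetical editorial choices: world affairs must be able to describe armed
# conflict, so its violence filter is looser than the travel section's.
SECTION_FILTERS = {
    "world-affairs": content_filters(violence="LOW"),
    "travel": content_filters(violence="HIGH"),
}

# Hypothetical (guardrailIdentifier, guardrailVersion) pairs, one guardrail per
# section, each assumed to be created from that section's filters.
SECTION_GUARDRAILS: Dict[str, Tuple[str, str]] = {
    "world-affairs": ("gr-world-affairs-example", "3"),
    "travel": ("gr-travel-example", "1"),
}
DEFAULT_GUARDRAIL = ("gr-newsroom-default-example", "2")


def guardrail_for(section: str) -> Tuple[str, str]:
    """Pick the guardrail for a CMS section, falling back to a newsroom default."""
    return SECTION_GUARDRAILS.get(section, DEFAULT_GUARDRAIL)
```

Keeping a default guardrail means a new section is never published without any safeguards while editors work out its specific rules.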

Conclusion: the future of AI in newsrooms

As the media landscape continues to evolve, tools like Glide CMS, with Amazon Bedrock Guardrails, are paving the way for a future where AI and human expertise work in harmony to deliver high-quality, trustworthy news content at scale.

For newsrooms, the integration of Amazon Bedrock Guardrails into Glide Publishing Platform represents a significant step forward in making AI a safe and trusted tool. By providing granular control over AI-generated content, Glide is empowering publishers to increase efficiency without compromising on the quality and integrity that readers expect.

For news organizations looking to explore the possibilities of AI while maintaining editorial standards, Glide Publishing Platform offers a compelling solution. We encourage you to learn more about how Glide and Amazon Bedrock Guardrails can benefit your newsroom. Contact an AWS Representative to find out how we can help accelerate your business, or contact the Glide Sales Team to learn more about Glide CMS and schedule a demonstration.

Further reading

- Use AWS IAM Policies to enforce Amazon Bedrock Guardrails

- Learn how to use the new Amazon Bedrock Guardrails safeguard tiers to tailor AI safety controls to each application’s needs

- Applying Amazon Bedrock Guardrails when deploying Retrieval Augmented Generation (RAG) with Amazon Bedrock Knowledge Base

- Read more about how Glide Publishing Platform adopted Amazon Bedrock Guardrails