AWS for M&E Blog

Automated highlights and short-form solution with DIGIBASE

This blog post was co-authored by Thomas Kramer, DIGIBASE.

As video consumption habits evolve, audiences now engage with content across various platforms and formats, especially on social media. To reach more viewers, media companies and content owners are providing near real-time highlights of live events on social platforms to maximize ad profits, as well as preparing short-form videos to drive traffic to their main platforms. This requires the ability to quickly generate multiple versions from a single video.

However, manually reviewing and editing highlights is inefficient and costly—especially as the amount of content grows. Increasing video volume often means hiring more staff, which can become a significant burden for companies.

This post gives a high-level overview of a solution that Amazon Web Services (AWS) and AWS Partner DIGIBASE built to address this challenge: a natural language-based solution that automates short-form video creation. By using AWS services (Amazon Transcribe, Amazon Rekognition, and Amazon Bedrock), it offers a customizable platform that can flexibly address the distinct needs of customers who want to create highlights and short-form videos from any video footage, at scale, using generative AI technology.

Customer considerations

Repetitive tasks and content reviews can be delegated to AI, but an editor still needs to make decisions, such as giving the AI instructions or having it repeat certain tasks, to ensure the quality of the content.

Additionally, since each company has different requirements, the solution allows specific features to be customized through pre-service consultations. For example, when videos are submitted, the backend can automatically remove L-bar advertisements or exclude videos containing certain keywords from the final output.
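As an illustration of the keyword exclusion mentioned above, here is a minimal sketch. The post does not describe how the filter is implemented, so the `Segment` structure, the function name, and the plain substring match are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A candidate clip with its transcript text (hypothetical structure)."""
    start_s: float
    end_s: float
    transcript: str


def exclude_by_keywords(segments, blocked_keywords):
    """Return only the segments whose transcript contains no blocked keyword."""
    blocked = [keyword.casefold() for keyword in blocked_keywords]
    return [
        seg for seg in segments
        if not any(keyword in seg.transcript.casefold() for keyword in blocked)
    ]


candidates = [
    Segment(0.0, 12.5, "What a goal in the final minute!"),
    Segment(30.0, 41.0, "This segment is brought to you by our sponsor."),
]
# Only the first candidate survives, because the second mentions "sponsor".
kept = exclude_by_keywords(candidates, ["sponsor"])
```

A production filter would likely need language-aware matching rather than a substring test, which is one reason this kind of feature is agreed on per customer.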

Solution overview

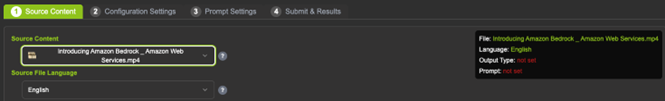

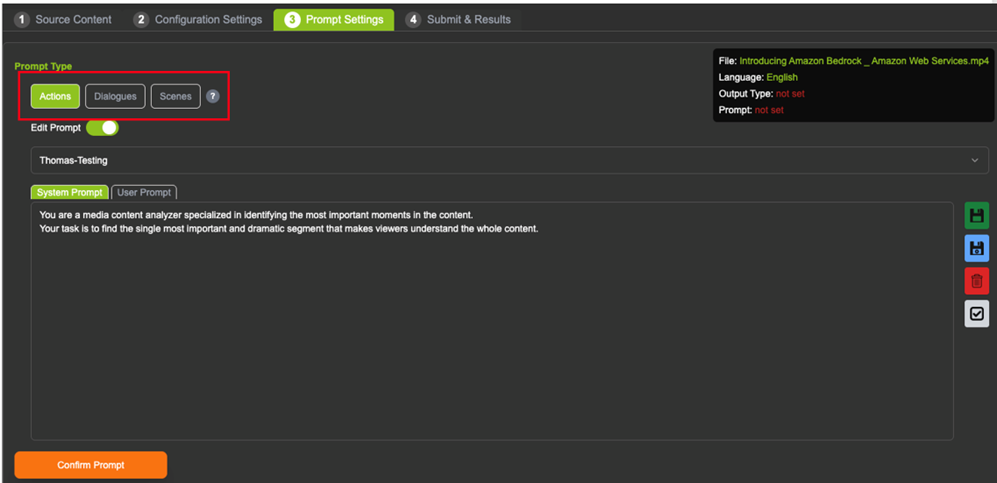

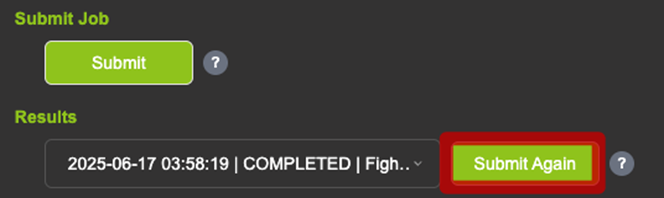

The process for using the solution is very straightforward for users, as the backend operates asynchronously. Figures 1-3 illustrate the basic workflow and usage of the solution. UI and additional features are customizable.

Explanation of each step

- Source Content: Select the video and language to process.

- Configuration Settings: Choose the desired video output (for example, 9:16 ratio, 1080p-720p).

- Prompt Settings: Select a saved default prompt type in the UI. These prompts are managed separately for each team or user through Amazon Bedrock Prompt Management. Non-technical users can click preconfigured buttons (such as Action, Dialogue, Scenes), and they can always edit the prompt directly.

- Submit the job. When processing is complete, the AWS Step Functions workflow sends a notification, and you can then access the results.

- To regenerate content from existing pre-generated content, users can click Submit Again. The solution then runs only the essential steps (such as Amazon Bedrock and AWS Elemental MediaConvert) and skips the unnecessary ones, which reduces costs and shortens processing time.
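The resubmission step above can be pictured as a small planning function. This is only a sketch: the step names and the choice of which steps are reusable are assumptions for illustration, not the solution's actual stage list.

```python
# Hypothetical step names; the real pipeline's stages may differ.
FULL_PIPELINE = [
    "transcode_proxy",    # initial AWS Elemental MediaConvert pass
    "detect_segments",    # Amazon Rekognition
    "transcribe_audio",   # Amazon Transcribe
    "select_highlights",  # Amazon Bedrock prompt
    "encode_output",      # final AWS Elemental MediaConvert pass
]

# Assumed to depend only on the source video, so their results can be reused.
CACHEABLE = {"transcode_proxy", "detect_segments", "transcribe_audio"}


def plan_steps(cached_results, resubmit):
    """Return the steps to run, skipping cached analysis on a resubmission."""
    if not resubmit:
        return list(FULL_PIPELINE)
    return [
        step for step in FULL_PIPELINE
        if step not in CACHEABLE or step not in cached_results
    ]


first_run = plan_steps(cached_results=set(), resubmit=False)
second_run = plan_steps(cached_results=CACHEABLE, resubmit=True)
```

With every analysis result cached, the second run is left with only the prompt-driven selection and the final encode, which is where the cost and time savings come from.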

Technical overview

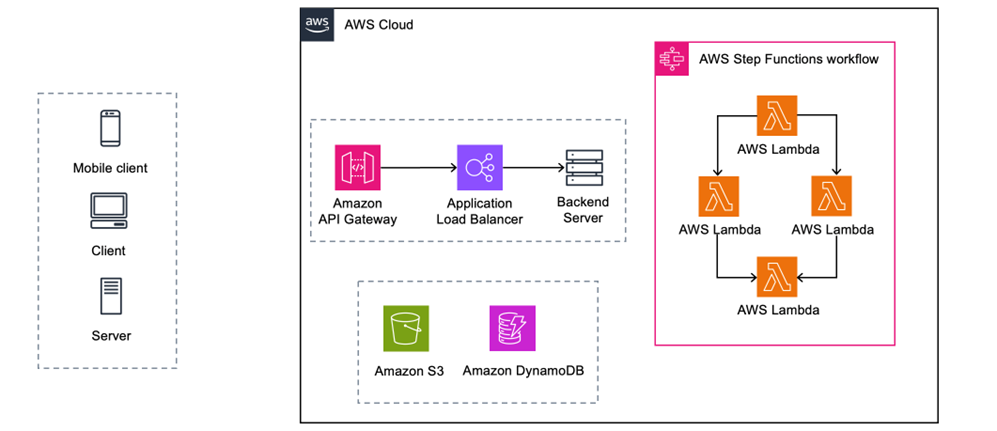

The overall concept of the solution is one-source, multi-use. Customers can quickly and repeatedly generate content from a single source while optimizing infrastructure costs. Users interact with the solution through a graphical interface (see previous Figures 1-3) or REST API. When a user submits a job, the solution divides processing into multiple independent tasks using a modular design.

Once a job is submitted, the backend handles job scheduling and queue management, and starts an AWS Step Functions workflow, known as a state machine. Each stage uses a different managed service, such as AWS Elemental MediaConvert, Amazon Transcribe, or Amazon Bedrock.

Event-driven notifications decouple long-running tasks, minimizing idle compute resources and reducing infrastructure costs. The workflow is fully asynchronous, and users are notified when processing is complete. This architecture supports efficient, high-volume batch processing and delivers a seamless user experience.

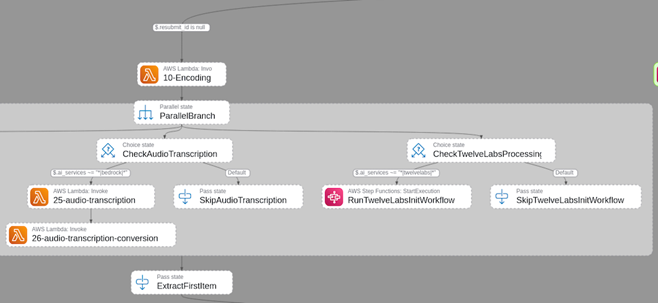

State machine workflow

The main advantage of using a state machine in AWS Step Functions is the ability to split a job into smaller, independent tasks that can be executed separately. The workflow defines the initial step and its input parameters. It then passes the output of each task as the input to the next. This workflow can be simple (a linear sequence of tasks) or complex (with parallel execution and branching based on output variables, asynchronous notifications, and automated default or failure states).

Separating tasks into individually executable steps makes the overall solution highly flexible and maintainable. Each step is typically implemented as an AWS Lambda function, so tasks can be updated or versioned independently without affecting the entire application.

At runtime, AWS Step Functions automatically allocates compute resources for one or many parallel workflow executions. Additionally, the AWS Step Functions console offers a graphical view of each execution, making it effortless to monitor and troubleshoot workflows.

Steps in a state machine are typically small, short-running tasks that each perform a specific part of the overall job. If tasks are independent, they can run in parallel branches, which reduces the total processing time.

For longer-running activities (such as video encoding or audio transcription), Step Functions tasks submit jobs to the appropriate managed service and immediately return, helping to reduce compute costs. Figure 6 illustrates part of the workflow for processing a video using the AWS Step Functions workflow.
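The submit-and-return pattern described above might look like the following sketch for audio transcription. The function name, the event fields (`job_id`, `media_uri`, `language_code`), and the job naming scheme are assumptions. `start_transcription_job` is the boto3 Amazon Transcribe call, and in AWS Lambda the client would typically be created with `boto3.client("transcribe")`. A stand-in client is used here so the example runs offline.

```python
def submit_transcription(transcribe_client, event):
    """Start a transcription job and return at once without waiting for it."""
    job_name = f"highlights-{event['job_id']}"  # hypothetical naming scheme
    transcribe_client.start_transcription_job(
        TranscriptionJobName=job_name,
        Media={"MediaFileUri": event["media_uri"]},
        LanguageCode=event["language_code"],
    )
    # Hand the job name back so a later workflow step can look up the result.
    return {**event, "transcription_job_name": job_name, "status": "SUBMITTED"}


class RecordingClient:
    """Stand-in for the real Transcribe client; it only records the calls."""

    def __init__(self):
        self.calls = []

    def start_transcription_job(self, **kwargs):
        self.calls.append(kwargs)
        return {"TranscriptionJob": {"TranscriptionJobStatus": "IN_PROGRESS"}}


client = RecordingClient()
result = submit_transcription(
    client,
    {
        "job_id": "42",
        "media_uri": "s3://example-bucket/source.mp4",  # placeholder URI
        "language_code": "en-US",
    },
)
```

The task itself finishes in well under a second, and no compute is billed while the managed service does the long-running work. How the workflow learns that the job has finished (for example, through an event notification) is outside this sketch.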

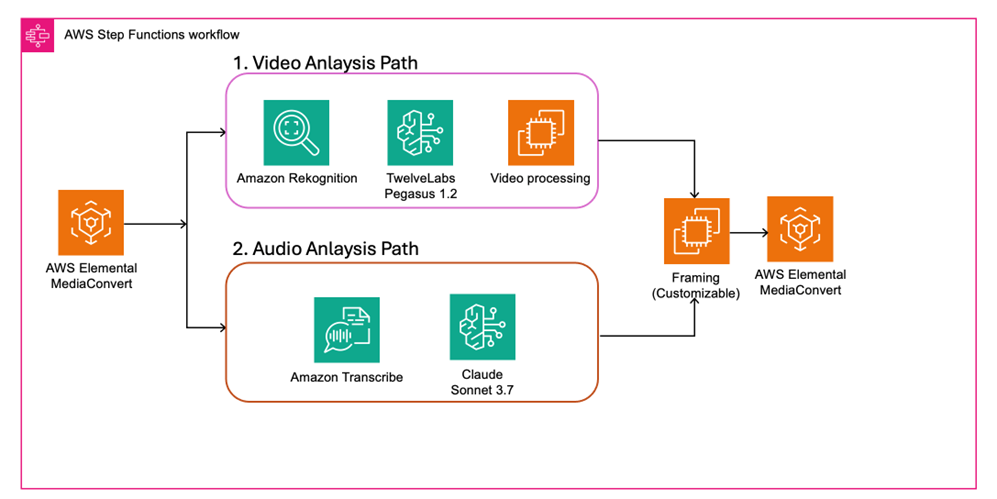

The AWS Step Functions workflow begins with AWS Elemental MediaConvert as the entry point.

From there, the process splits into two main parallel branches:

- Video analysis path

  - Amazon Rekognition starts segment detection to detect shots and technical cues.

  - TwelveLabs Pegasus 1.2 on Amazon Bedrock runs for advanced video understanding.

  - The results go through a video processing step for refinement or transformation.

- Audio analysis path

  - Amazon Transcribe converts audio from the video into text.

  - The transcribed text is processed by Anthropic Claude Sonnet 3.5 for further analysis.

After processing, the data is sent to Claude Sonnet 3.7 to finalize the segments. The finalized content is then returned to AWS Elemental MediaConvert for final encoding and delivery.
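The shape of this workflow can be sketched as an Amazon States Language definition, built here as a Python dictionary. This is not the solution's actual state machine: the state names, the Lambda function names, and the account and Region in the ARNs are placeholders, and running the video-path steps one after another inside their branch is an assumption.

```python
import json


def lambda_task(function_name, next_state=None):
    """Build a Task state that invokes a (hypothetical) Lambda function."""
    state = {
        "Type": "Task",
        # Placeholder account ID and Region.
        "Resource": f"arn:aws:lambda:us-east-1:123456789012:function:{function_name}",
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


video_branch = {
    "StartAt": "DetectSegments",
    "States": {
        "DetectSegments": lambda_task("detect-segments", "UnderstandVideo"),
        "UnderstandVideo": lambda_task("understand-video", "ProcessVideo"),
        "ProcessVideo": lambda_task("process-video"),
    },
}

audio_branch = {
    "StartAt": "TranscribeAudio",
    "States": {
        "TranscribeAudio": lambda_task("transcribe-audio", "AnalyzeTranscript"),
        "AnalyzeTranscript": lambda_task("analyze-transcript"),
    },
}

definition = {
    "Comment": "Illustrative highlight workflow with two parallel branches",
    "StartAt": "PrepareMedia",
    "States": {
        "PrepareMedia": lambda_task("prepare-media", "Analyze"),
        "Analyze": {
            "Type": "Parallel",
            "Branches": [video_branch, audio_branch],
            "Next": "FinalizeSegments",
        },
        "FinalizeSegments": lambda_task("finalize-segments", "EncodeOutput"),
        "EncodeOutput": lambda_task("encode-output"),
    },
}

# Serialize to the JSON form that a state machine definition uses.
asl_json = json.dumps(definition, indent=2)
```

A `Parallel` state waits for all of its branches and passes their outputs on as an array, so the finalizing step would receive the video and audio results together.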

Expected outcome

Customers in different industries have different needs, but some key features matter to anyone creating short-form videos. The solution provides these features alongside the short-form video output.

The following are some examples of customized features the solution provides, which are applied in the Video Processing and Framing (customizable) instances shown in Figure 6:

- Thumbnails at specific timestamps

- Timecode delivery for particular scenes or situations

- Machine learning-based ad removal (linear, L-bar)

- Selectable transition effects

- Subtitle formats

- Batch jobs option

These features are often complicated and time-consuming to develop on your own, but the solution makes them straightforward to access and manage.

Summary

Creating short-form videos and highlights in the media industry is often a time-consuming, repetitive task that demands substantial human effort—especially at scale. Many customers have shared that scaling this workflow is a challenge, both in terms of cost and operational efficiency.

To address these challenges, AWS with DIGIBASE built a serverless, AI-powered solution that automates the creation of shorts and highlights—dramatically reducing manual work and enabling faster, more reliable production.

This solution can support media companies that are ready to produce more content, faster and at a lower cost, while maintaining quality and consistency. If you’d like to learn more about how AWS-powered AI can transform your video production workflow, contact an AWS Representative for details.

Further reading

- Optimize costs with tiered pricing in AWS Elemental MediaConvert

- Video semantic search with AI on AWS

- Optimize multimodal search using the TwelveLabs Embed API and Amazon OpenSearch Service

- Streamlining AWS Serverless workflows: From AWS Lambda orchestration to AWS Step Functions

- How to decide between Amazon Rekognition image and video API for video moderation