AWS for M&E Blog

5 ways Prime Video improves the viewing experience with generative AI on AWS

When it comes to streaming, audiences have a wide selection at their fingertips. With so many options available, the user experience is often a key differentiator when customers decide where to watch. Prime Video continuously strives to provide the best streaming experience possible, from helping customers find the perfect movie (or the next binge-worthy series) to watching the big game (even when you’re late). Generative AI powers many of these improvements.

Using Amazon Bedrock, a fully managed service from Amazon Web Services (AWS) for building generative AI applications, Prime Video delivers more value and bespoke insights to viewers. Generative AI has already improved the streaming experience in a range of areas, and Prime Video is only scratching the surface of what’s possible.

Here are five ways Prime Video uses generative AI from AWS to deliver premium viewing experiences for customers:

1. Efficient, personalized content recommendations

Between the range of must-watch Amazon MGM Studios Original films and series, licensed content, and even add-on subscriptions (such as Apple TV+, HBO Max, and Crunchyroll), Prime Video offers customers a vast array of premium programming.

As the amount of content available on Prime Video increases, so too does the importance of search and recommendation tools to help customers spend more time watching and less time searching. That’s why Prime Video is using AI to make it easier to serve up, search for, and find the entertainment experiences customers want.

For example, Amazon Bedrock is helping power personalized content recommendations directly within the “Movies” and “TV Shows” landing pages of Prime Video. Viewers will see “movies we think you’ll like” and “TV shows we think you’ll like” collections on each page that are curated based on their interests and viewing history.

2. Stay caught up with X-Ray Recaps

The X-Ray Recaps feature on Prime Video helps viewers get up to speed on whatever they’re watching, without risking spoilers. X-Ray Recaps creates brief, easy-to-digest summaries of full seasons of TV shows, single episodes, and even pieces of episodes.

Whether viewers are a few minutes into a new episode, halfway through a season, or returning to a series after a break and needing a refresher, X-Ray Recaps delivers short text snippets. The snippets describe key cliffhangers, character-driven plot points, and other details, and viewers can access them at any point in the viewing experience.

X-Ray Recaps is powered by a combination of foundation models managed in Amazon Bedrock and custom AI models trained using Amazon SageMaker. It works by analyzing various video segments and combining them with subtitles or dialogue to generate detailed descriptions of key events, places, times, and conversations. Amazon Bedrock Guardrails are applied to help ensure that summaries remain spoiler-free.
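The spoiler-free idea can be illustrated with a simple timestamp cutoff. The sketch below is hypothetical and is not how X-Ray Recaps or Amazon Bedrock Guardrails work internally: it assumes key events have already been extracted as timestamped descriptions (the `SceneEvent` type, the `spoiler_free_events` function, and the sample plot points are invented for illustration) and keeps only the most recent events the viewer has already watched.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneEvent:
    """Hypothetical timestamped description of a key moment in a series."""
    episode: int
    offset_s: float  # seconds from the start of the episode
    summary: str


def spoiler_free_events(events, current_episode, current_offset_s, max_items=3):
    """Return up to max_items of the latest events at or before the viewer's position."""
    position = (current_episode, current_offset_s)
    # Tuple comparison orders by episode first, then by offset within the episode.
    seen = [e for e in events if (e.episode, e.offset_s) <= position]
    seen.sort(key=lambda e: (e.episode, e.offset_s))
    # Guard against max_items == 0, since seen[-0:] would return the whole list.
    return seen[-max_items:] if max_items > 0 else []


# Made-up events for illustration only.
events = [
    SceneEvent(1, 100.0, "A stranger arrives in town."),
    SceneEvent(1, 900.0, "The sheriff finds the map."),
    SceneEvent(2, 50.0, "The map leads to the mine."),
    SceneEvent(2, 600.0, "The mine collapses."),  # not yet watched
]
recap = spoiler_free_events(events, current_episode=2, current_offset_s=300.0, max_items=2)
print([e.summary for e in recap])
# ['The sheriff finds the map.', 'The map leads to the mine.']
```

A hard cutoff like this is only a first line of defense; generated summaries can still leak later plot points, which is why a separate check such as a guardrail is useful.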

X-Ray Recaps builds upon the existing X-Ray features on Prime Video, which help viewers dive deeper into what they’re watching by offering trivia and information about the cast, soundtrack, and more.

3. Bringing deeper insights to Thursday Night Football and NASCAR

The most recent seasons of exclusive NFL Thursday Night Football (TNF) and NASCAR events on Prime Video marked the debut of several new Prime Insights: AI-powered broadcast enhancements designed to bring fans closer to the action. They were built through a unique collaboration among Prime Sports producers, engineers, on-air analysts, and AI and computer vision experts, working with an AWS team and using Amazon Bedrock. These Prime Insights illuminate key performance angles and storylines like never before.

Prime Insights reveal hidden aspects of the game and forecast pivotal moments before they happen. For instance, the “Defensive Vulnerability” feature on TNF is powered by a proprietary machine learning model. It uses thousands of data points to analyze defensive and offensive formations and highlight where the offense will, or should, attempt to attack. The “Burn Bar” in Prime Video’s NASCAR coverage combines an AI model on Amazon Bedrock with live tracking data and in-car telemetry signals to analyze fuel consumption and fuel efficiency for every car in the field. It identifies which drivers are conserving fuel and which are burning it fast in their push to reach the finish line and capture the checkered flag.
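To make the Burn Bar idea concrete, here is a toy heuristic, not the model Prime Video runs on Amazon Bedrock. It assumes per-car telemetry has already been reduced to fuel used (in gallons) and laps completed, and it labels each car relative to the field’s median burn rate. The function name, inputs, and 3% tolerance are all hypothetical.

```python
from statistics import median


def classify_fuel_usage(fuel_used_gal, laps_completed, tolerance=0.03):
    """Label each car relative to the field's median fuel burn per lap.

    fuel_used_gal: car number -> gallons used so far (hypothetical telemetry)
    laps_completed: car number -> laps completed so far
    tolerance: fractional band around the median treated as "on pace"
    """
    # Burn rate in gallons per lap; cars with no completed laps are skipped.
    rates = {
        car: fuel_used_gal[car] / laps_completed[car]
        for car in fuel_used_gal
        if laps_completed.get(car)
    }
    if not rates:
        return {}
    field_median = median(rates.values())
    labels = {}
    for car, rate in rates.items():
        if rate < field_median * (1 - tolerance):
            labels[car] = "conserving"
        elif rate > field_median * (1 + tolerance):
            labels[car] = "burning"
        else:
            labels[car] = "on pace"
    return labels


print(classify_fuel_usage({1: 10.0, 2: 12.0, 3: 11.0}, {1: 20, 2: 20, 3: 20}))
# {1: 'conserving', 2: 'burning', 3: 'on pace'}
```

Comparing against the field median rather than a fixed target keeps the labels meaningful as track conditions change during a race.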

Rapid Recap, which is also powered by AWS using Amazon Bedrock, is a feature that helps fans catch up on the action quickly after joining an event that is already in progress. Rapid Recap automatically compiles a full recap of highlights, up to two minutes in length, then drops fans into the live feed.
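Fitting a recap into two minutes can be framed as a budgeted selection problem. The sketch below assumes each highlight already carries a hypothetical importance score. It greedily picks the highest-scoring clips that fit within a 120-second budget, then plays them in the order they happened. It is an illustration only, not Rapid Recap’s actual selection logic, and the `Highlight` type and `build_recap` function are invented names.

```python
from typing import NamedTuple


class Highlight(NamedTuple):
    start_s: float     # when the play happened in the event timeline
    duration_s: float  # clip length in seconds
    score: float       # hypothetical importance score from an upstream model


def build_recap(highlights, budget_s=120.0):
    """Greedily pick the highest-scoring clips that fit the budget, in playback order."""
    chosen, used = [], 0.0
    for h in sorted(highlights, key=lambda h: h.score, reverse=True):
        if used + h.duration_s <= budget_s:
            chosen.append(h)
            used += h.duration_s
    # Present the selected plays chronologically before handing off to the live feed.
    return sorted(chosen, key=lambda h: h.start_s)


# Made-up clips for illustration only.
clips = [
    Highlight(10.0, 50.0, 0.90),
    Highlight(300.0, 60.0, 0.80),
    Highlight(500.0, 30.0, 0.70),
    Highlight(700.0, 20.0, 0.95),
]
recap = build_recap(clips)
print([h.start_s for h in recap], sum(h.duration_s for h in recap))
# [10.0, 500.0, 700.0] 100.0
```

A greedy pass is simple and fast enough for a live event, but it can leave unused time in the budget; an exact knapsack solver would fill the two minutes more tightly at a higher computational cost.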

4. Making content more accessible

The first capability of its kind, Dialogue Boost analyzes the original audio in a movie or series and uses AI to intelligently identify points where dialogue may be hard to hear above background music and effects. Then, the feature isolates speech patterns and enhances audio to make the dialogue clearer. This AI-based approach delivers a targeted enhancement to portions of spoken dialogue, instead of a general amplification at the center channel in a home theater system.
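The difference between targeted enhancement and general amplification can be sketched as a per-frame gain rule. This minimal example assumes dialogue has already been separated from music and effects and that per-frame levels in dB are available. The function name and the 6 dB and 9 dB thresholds are hypothetical, and this is not Dialogue Boost’s actual algorithm. Frames without speech, or where dialogue already stands out, are left untouched, whereas a center-channel volume boost would raise every frame equally.

```python
def targeted_dialogue_gain(speech_db, background_db, min_snr_db=6.0, max_boost_db=9.0):
    """Return a per-frame gain (dB) to apply to an already-isolated dialogue stem.

    speech_db: per-frame dialogue level in dB, or None where no speech is detected
    background_db: per-frame level of music and effects in dB
    min_snr_db: hypothetical target margin of dialogue over background
    max_boost_db: hypothetical cap on the boost for any single frame
    """
    gains = []
    for speech, background in zip(speech_db, background_db):
        if speech is None:
            gains.append(0.0)  # no dialogue in this frame: leave the mix alone
            continue
        shortfall = min_snr_db - (speech - background)
        # Boost only by the amount dialogue falls short of the target, up to the cap.
        gains.append(min(max(shortfall, 0.0), max_boost_db))
    return gains


print(targeted_dialogue_gain([None, -20.0, -30.0, -40.0], [-25.0, -30.0, -28.0, -25.0]))
# [0.0, 0.0, 8.0, 9.0]
```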

To help power Dialogue Boost, Prime Video uses a variety of AWS services, including AWS Batch with Amazon Elastic Container Registry (Amazon ECR), Amazon Elastic Container Service (Amazon ECS), AWS Fargate, Amazon Simple Storage Service (Amazon S3), Amazon DynamoDB, and Amazon CloudWatch. Initially launched in English, Dialogue Boost now supports six additional languages: French, Italian, German, Spanish, Portuguese, and Hindi.

5. Enhanced video understanding

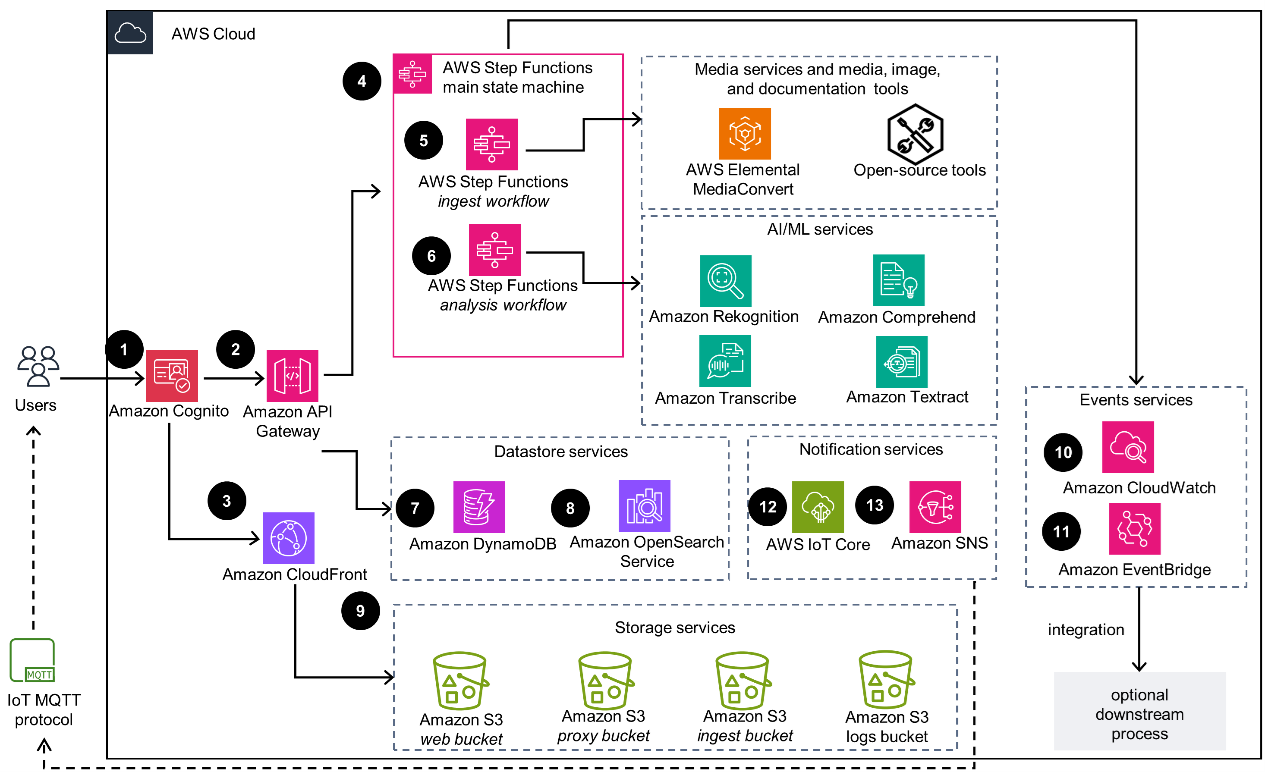

Using generative AI, media and entertainment companies can now better understand their media assets by extracting metadata and adding vector embeddings, a process known as video understanding. Prime Video’s marketing assets are stored across disparate systems, sometimes with insufficient metadata. This makes it difficult for teams to discover, track rights, verify quality control, analyze, and monetize their content effectively.

To address this, Prime Video started using the Media2Cloud on AWS guidance, which delivers comprehensive media analysis at the frame, shot, scene, and audio levels. The guidance helps enrich assets’ metadata (such as celebrity recognition, text on screen, moderation, mood detection, and transcription). Powered by Amazon Bedrock, Amazon Nova, Amazon Rekognition, and Amazon Transcribe, Media2Cloud enables faster, more accurate video understanding for enhanced content management, search capabilities, and audience engagement.

Metadata from Prime Video’s media assets is automatically fed into Iconik, a media asset management (MAM) solution from an AWS Partner. As a result, Prime Video has enriched hundreds of thousands of assets and improved discoverability in its marketing archive.
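Vector embeddings support this kind of discovery by letting teams search assets by meaning rather than by exact metadata matches. The sketch below assumes embeddings have already been produced by some model; the three-dimensional vectors and asset IDs are made up for illustration (real embeddings have hundreds or thousands of dimensions), and `search_assets` is a hypothetical helper, not part of Media2Cloud or Iconik. It ranks assets by cosine similarity to a query embedding.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_assets(query_vec, asset_index, top_k=2):
    """Return the IDs of the top_k assets most similar to the query embedding."""
    scored = [(asset_id, cosine_similarity(query_vec, vec)) for asset_id, vec in asset_index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [asset_id for asset_id, _ in scored[:top_k]]


# Made-up 3-dimensional embeddings for illustration only.
asset_index = {
    "trailer_beach": [0.9, 0.1, 0.0],
    "poster_city": [0.0, 0.2, 0.9],
    "clip_surf": [0.8, 0.3, 0.1],
}
print(search_assets([1.0, 0.2, 0.0], asset_index))
# ['trailer_beach', 'clip_surf']
```

A linear scan like this is fine for a handful of assets; at the scale of hundreds of thousands of assets, a vector index with approximate nearest-neighbor search would typically replace it.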

Whether for personalization, catching up viewers, driving deeper insights, making content more accessible, or better understanding media assets, generative AI on AWS is essential for helping Prime Video deliver standout viewing experiences.

Learn more about how Prime Video is using generative AI on AWS to improve the streaming experience and find out how other companies are benefitting from Amazon Bedrock.

Contact an AWS Representative to learn how we can help accelerate your business.