Artificial Intelligence

Category: Thought Leadership

Advanced fine-tuning techniques for multi-agent orchestration: Patterns from Amazon at scale

In this post, we show you how fine-tuning enabled a 33% reduction in dangerous medication errors (Amazon Pharmacy), an 80% reduction in human effort on engineering work (Amazon Global Engineering Services), and an improvement in content quality assessment accuracy from 77% to 96% (Amazon A+). This post details the techniques behind these outcomes: from foundational methods like Supervised Fine-Tuning (SFT, or instruction tuning) and Proximal Policy Optimization (PPO), to Direct Preference Optimization (DPO) for human alignment, to cutting-edge reasoning optimizations such as Group Relative Policy Optimization (GRPO), Decoupled Clip and Dynamic Sampling Policy Optimization (DAPO), and Group Sequence Policy Optimization (GSPO), purpose-built for agentic systems.

Exploring the zero operator access design of Mantle

In this post, we explore how Mantle, Amazon’s next-generation inference engine for Amazon Bedrock, implements a zero operator access (ZOA) design that eliminates any technical means for AWS operators to access customer data.

Governance by design: The essential guide for successful AI scaling

Picture this: Your enterprise has just deployed its first generative AI application. The initial results are promising, but as you plan to scale across departments, critical questions emerge. How will you enforce consistent security, prevent model bias, and maintain control as AI applications multiply?

Beyond the technology: Workforce changes for AI

In this post, we explore three essential strategies for successfully integrating AI into your organization: addressing organizational debt before it compounds, embracing distributed decision-making through the “octopus organization” model, and redefining management roles to align with AI-powered workflows. Organizations must invest in both technology and workforce preparation, focusing on streamlining processes, empowering teams with autonomous decision-making within defined parameters, and evolving each management layer from traditional oversight to mentorship, quality assurance, and strategic vision-setting.

Practical implementation considerations to close the AI value gap

The AWS Customer Success Center of Excellence (CS COE) helps customers get tangible value from their AWS investments. We’ve seen a pattern: customers who build AI strategies that address people, process, and technology together succeed more often. In this post, we share practical considerations that can help close the AI value gap.

Physical AI in practice: Technical foundations that fuel human-machine interactions

In this post, we explore the complete development lifecycle of physical AI—from data collection and model training to edge deployment—and examine how these intelligent systems learn to understand, reason, and interact with the physical world through continuous feedback loops. We illustrate this workflow through Diligent Robotics’ Moxi, a mobile manipulation robot that has completed over 1.2 million deliveries in hospitals, saving nearly 600,000 hours for clinical staff while transforming healthcare logistics and returning valuable time to patient care.

Accelerate enterprise solutions with agentic AI-powered consulting: Introducing AWS Professional Service Agents

I’m excited to announce that AWS Professional Services now offers specialized AI agents, including the AWS Professional Services Delivery Agent. This transforms the consulting experience by embedding intelligent agents throughout the consulting lifecycle to deliver better value for customers.

Custom Intelligence: Building AI that matches your business DNA

In 2024, we launched the Custom Model Program within the AWS Generative AI Innovation Center to provide comprehensive support throughout every stage of model customization and optimization. Over the past two years, this program has delivered exceptional results by partnering with global enterprises and startups across diverse industries—including legal, financial services, healthcare and life sciences, […]

Beyond pilots: A proven framework for scaling AI to production

In this post, we explore the Five V’s Framework—a field-tested methodology that has helped 65% of AWS Generative AI Innovation Center customer projects successfully transition from concept to production, with some launching in just 45 days. The framework provides a structured approach through Value, Visualize, Validate, Verify, and Venture phases, shifting focus from “What can AI do?” to “What do we need AI to do?” while ensuring solutions deliver measurable business outcomes and sustainable operational excellence.

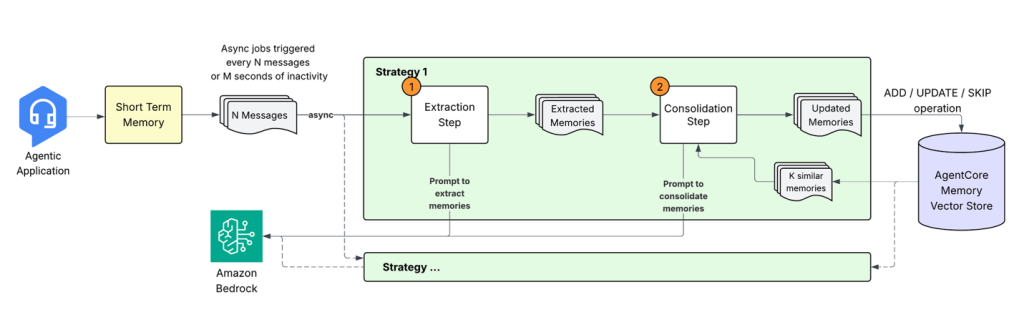

Building smarter AI agents: AgentCore long-term memory deep dive

In this post, we explore how Amazon Bedrock AgentCore Memory transforms raw conversational data into persistent, actionable knowledge through sophisticated extraction, consolidation, and retrieval mechanisms that mirror human cognitive processes. The system tackles the complex challenge of building AI agents that don’t just store conversations but extract meaningful insights, merge related information across time, and maintain coherent memory stores that enable truly context-aware interactions.