Artificial Intelligence

Category: Generative AI

TII Falcon-H1 models now available on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart

We are excited to announce the availability of the Technology Innovation Institute (TII)’s Falcon-H1 models on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart. With this launch, developers and data scientists can now use six instruction-tuned Falcon-H1 models (0.5B, 1.5B, 1.5B-Deep, 3B, 7B, and 34B) on AWS, and have access to a comprehensive suite of hybrid architecture models that combine traditional attention mechanisms with State Space Models (SSMs) to deliver exceptional performance with unprecedented efficiency.

Powering innovation at scale: How AWS is tackling AI infrastructure challenges

As generative AI continues to transform how enterprises operate—and develop net new innovations—the infrastructure demands for training and deploying AI models have grown exponentially. Traditional infrastructure approaches are struggling to keep pace with today’s computational requirements, network demands, and resilience needs of modern AI workloads. At AWS, we’re also seeing a transformation across the technology […]

Accelerate your model training with managed tiered checkpointing on Amazon SageMaker HyperPod

AWS announced managed tiered checkpointing in Amazon SageMaker HyperPod, a purpose-built infrastructure to scale and accelerate generative AI model development across thousands of AI accelerators. Managed tiered checkpointing uses CPU memory for high-performance checkpoint storage with automatic data replication across adjacent compute nodes for enhanced reliability. In this post, we dive deep into these concepts and show how to use the managed tiered checkpointing feature.
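To make the idea of in-memory checkpoints replicated to adjacent nodes concrete, here is a toy, single-process simulation. It is not the HyperPod API: the `Node` and `InMemoryCheckpointRing` names, the ring-shaped neighbor choice, and the `save`/`fail`/`restore` methods are all assumptions made for illustration.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical compute node; `memory` stands in for its CPU-memory tier."""
    rank: int
    memory: Dict[str, dict] = field(default_factory=dict)
    alive: bool = True


class InMemoryCheckpointRing:
    """Toy model: each node keeps its own checkpoint plus a copy on the next node."""

    def __init__(self, num_nodes: int) -> None:
        if num_nodes < 2:
            raise ValueError("replication needs at least two nodes")
        self.nodes: List[Node] = [Node(rank) for rank in range(num_nodes)]

    def _neighbor(self, rank: int) -> Node:
        # Assumption: "adjacent" means the next rank in a ring.
        return self.nodes[(rank + 1) % len(self.nodes)]

    def save(self, rank: int, step: int, state: dict) -> None:
        key = f"rank{rank}"
        snapshot = {"step": step, "state": copy.deepcopy(state)}
        self.nodes[rank].memory[key] = snapshot                      # local copy
        self._neighbor(rank).memory[key] = copy.deepcopy(snapshot)   # replica

    def fail(self, rank: int) -> None:
        # Simulate losing a node together with everything held in its memory.
        self.nodes[rank].alive = False
        self.nodes[rank].memory.clear()

    def restore(self, rank: int) -> Optional[dict]:
        key = f"rank{rank}"
        # Prefer the local copy, then fall back to the neighbor's replica.
        for node in (self.nodes[rank], self._neighbor(rank)):
            if node.alive and key in node.memory:
                return copy.deepcopy(node.memory[key])
        return None


ring = InMemoryCheckpointRing(num_nodes=4)
ring.save(rank=1, step=100, state={"weights": [0.1, 0.2]})
ring.fail(rank=1)
restored = ring.restore(rank=1)  # served from node 2's replica
```

In this sketch a checkpoint survives the loss of a single node because its neighbor still holds a replica. A real system would also need a durable storage tier for the case where both copies are lost, which the simulation leaves out.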

Maximize HyperPod Cluster utilization with HyperPod task governance fine-grained quota allocation

We are excited to announce the general availability of fine-grained compute and memory quota allocation with HyperPod task governance. With this capability, customers can optimize Amazon SageMaker HyperPod cluster utilization on Amazon Elastic Kubernetes Service (Amazon EKS), promote fair usage, and support efficient resource allocation across different teams or projects. For more information, see HyperPod task governance best […]

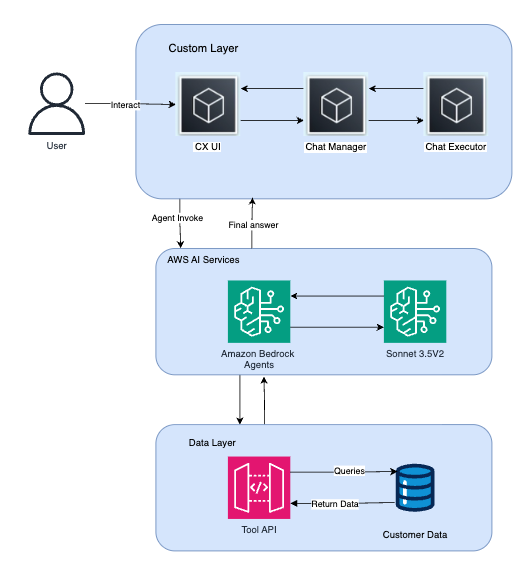

Skai uses Amazon Bedrock Agents to significantly improve customer insights by revolutionizing data access and analysis

Skai (formerly Kenshoo) is an AI-driven omnichannel advertising and analytics platform designed for brands and agencies to plan, launch, optimize, and measure paid media across search, social, retail media marketplaces, and other “walled-garden” channels from a single interface. In this post, we share how Skai used Amazon Bedrock Agents to improve data access and analysis and, in turn, customer insights.

Exploring the Real-Time Race Track with Amazon Nova

This post explores the Real-Time Race Track (RTRT), an interactive experience built using Amazon Nova in Amazon Bedrock, that lets fans design, customize, and share their own racing circuits. We highlight how generative AI capabilities come together to deliver strategic racing insights such as pit timing and tire choices, and interactive features like an AI voice assistant and a retro-style racing poster.

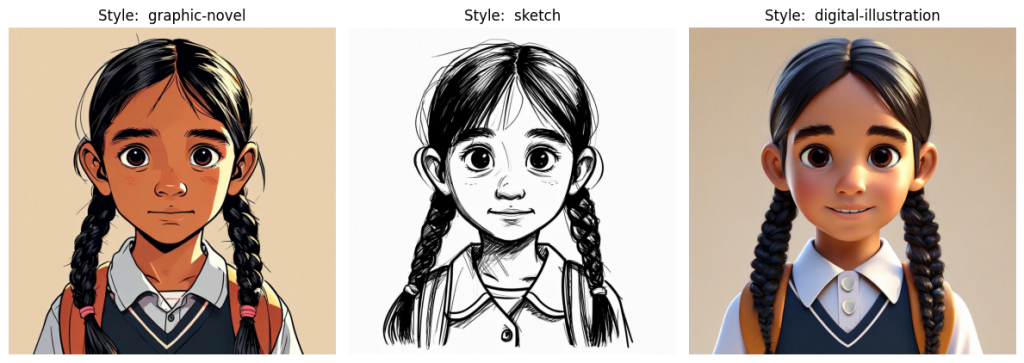

Build character-consistent storyboards using Amazon Nova in Amazon Bedrock – Part 1

The art of storyboarding stands as the cornerstone of modern content creation, weaving its essential role through filmmaking, animation, advertising, and UX design. Though creators have traditionally relied on hand-drawn sequential illustrations to map their narratives, today’s AI foundation models (FMs) are transforming this landscape. FMs like Amazon Nova Canvas and Amazon Nova Reel offer […]

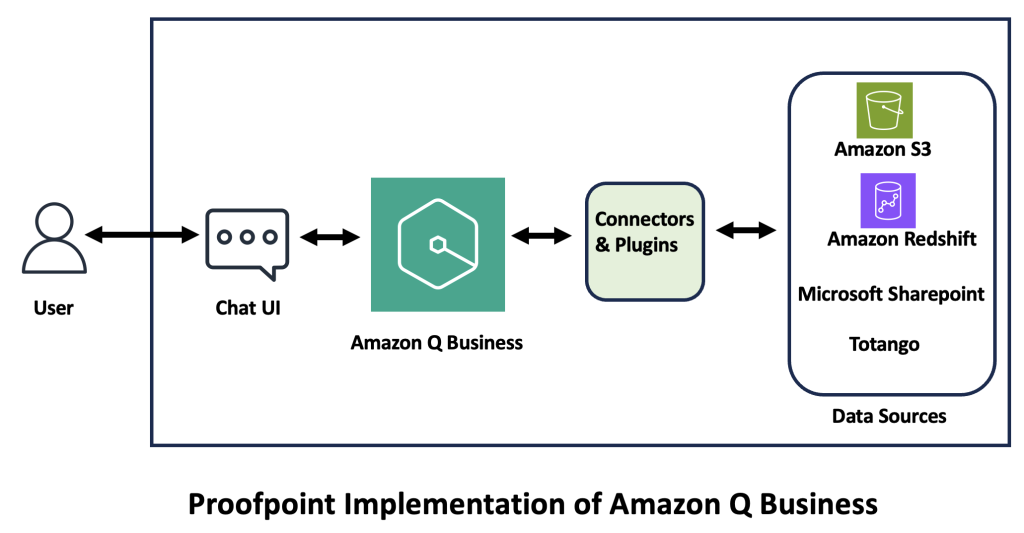

Unlocking the future of professional services: How Proofpoint uses Amazon Q Business

Proofpoint has redefined its professional services by integrating Amazon Q Business, a fully managed, generative AI-powered assistant that you can configure to answer questions, provide summaries, generate content, and complete tasks based on your enterprise data. In this post, we explore how Amazon Q Business transformed Proofpoint’s professional services, detailing its deployment, functionality, and future roadmap.

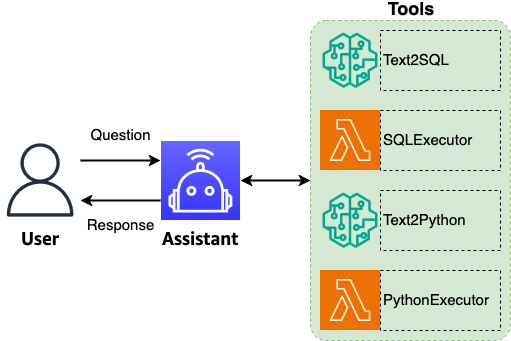

Natural language-based database analytics with Amazon Nova

In this post, we explore how natural language database analytics can revolutionize the way organizations interact with their structured data through the power of large language model (LLM) agents. Natural language interfaces to databases have long been a goal in data management. Agents enhance database analytics by breaking down complex queries into explicit, verifiable reasoning steps and enabling self-correction through validation loops that can catch errors, analyze failures, and refine queries until they accurately match user intent and schema requirements.
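The validation loop described above can be sketched in a few lines. This is a minimal illustration, not the post's implementation: it uses SQLite's `EXPLAIN` to check a candidate query against the schema, and `stub_propose_sql` is a hypothetical stand-in for the LLM call (a real agent would send the question, schema, and error feedback to a model such as Amazon Nova).

```python
import sqlite3
from typing import Callable, List, Optional, Tuple


def validate_sql(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Return None if the query compiles against the schema, else the error text."""
    try:
        # EXPLAIN compiles the statement and returns its plan instead of its rows.
        conn.execute(f"EXPLAIN {sql}")
        return None
    except sqlite3.Error as exc:
        return str(exc)


def answer_with_retries(
    conn: sqlite3.Connection,
    question: str,
    propose_sql: Callable[[str, Optional[str]], str],
    max_attempts: int = 3,
) -> Tuple[str, List[tuple], int]:
    """Ask for a query, validate it, and feed errors back until one passes."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        sql = propose_sql(question, feedback)
        error = validate_sql(conn, sql)
        if error is None:
            return sql, conn.execute(sql).fetchall(), attempt
        feedback = f"Query {sql!r} failed validation: {error}"
    raise RuntimeError(f"No valid query after {max_attempts} attempts: {feedback}")


def stub_propose_sql(question: str, feedback: Optional[str]) -> str:
    """Hypothetical LLM stand-in: wrong column first, corrected after feedback."""
    if feedback is None:
        return "SELECT SUM(amount) FROM orders WHERE region = 'EMEA'"
    return "SELECT SUM(total) FROM orders WHERE region = 'EMEA'"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (region, total) VALUES (?, ?)",
    [("EMEA", 120.0), ("EMEA", 80.0), ("APAC", 50.0)],
)

sql, rows, attempts = answer_with_retries(
    conn, "What is total EMEA revenue?", stub_propose_sql
)
```

Here the first candidate references a column that does not exist, the validator reports the error, and the second candidate passes. Schema validation only catches structural mistakes; checking that a query matches user intent would need additional steps not shown here.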

Deploy Amazon Bedrock Knowledge Bases using Terraform for RAG-based generative AI applications

In this post, we demonstrate how to automate the deployment of Amazon Bedrock Knowledge Bases for RAG applications using Terraform.