AWS Storage Blog

Enhancing FSx for Windows security: AI-powered anomaly detection

In today’s rapidly evolving threat landscape, Security Operations Center (SOC) teams face significant challenges in efficiently analyzing audit logs to identify potential security breaches in cloud file systems. Amazon FSx for Windows File Server generates comprehensive audit logs capturing detailed user activities, but traditional manual analysis of these logs is time-consuming, resource-intensive, and often ineffective at scale. As organizations’ cloud footprints expand, the volume of audit logs grows rapidly, making it difficult for security analysts to identify suspicious patterns using conventional methods.

Consider this scenario: a large enterprise processes more than 10 million FSx audit log entries daily across its cloud infrastructure. With traditional methods, reviewing a single day’s logs takes weeks, creating a significant security blind spot. This manual process is not only slow but also costly. The key question is: how can we analyze these massive volumes of audit log entries in real time while maintaining accuracy and reducing costs?

In this post, we show you how to implement an anomaly detection solution for FSx for Windows File Server audit logs using AWS generative artificial intelligence (AI) services. The solution is particularly valuable for enterprises managing large-scale FSx for Windows File Server deployments. Built on Amazon Bedrock, AWS Lambda, and Amazon CloudWatch, it lets security analysts query logs in natural language and receive AI-powered insights that highlight potential security threats. This approach automates log analysis, identifies suspicious user activity patterns, and provides actionable insights, reducing investigation time and cost while improving threat detection.

The generative AI solution: automating log analysis

Security analysts can now ask questions in plain language and get immediate, actionable insights from their audit logs, thanks to AWS generative AI services. We demonstrate how these services can automate log analysis and uncover suspicious user activity patterns that might otherwise remain hidden in vast amounts of log data.

Integrating natural language processing models into security workflows means analysts no longer have to write log queries by hand: the AI analyzes the data, identifies anomalies, and provides actionable insights. For example, an analyst might ask, “Show me all activities performed by <username>” or “Identify who deleted the file named confidential.txt” without needing to know the specific event IDs or log formats.

The AI-powered system automatically does the following:

- Query translation and parsing:

  - Translates natural language queries into structured CloudWatch Logs Insights queries.

  - Parses the XML-formatted FSx for Windows File Server logs to extract relevant information.

- Anomaly detection:

  - Analyzes patterns in user behavior to identify potential anomalies.

  - Highlights suspicious activities that might indicate security breaches.

  - Exposes potential data exfiltration attempts through abnormal file access volumes.

  - Reveals unauthorized modification or deletion of sensitive files.

- Insight provision:

  - Provides contextual insights about security events in human-readable format.
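To make the parsing step concrete, the following Python sketch flattens one audit event into a dictionary. It is an illustration, not part of the solution: it assumes each CloudWatch log event holds a single Windows security event in the standard event XML schema (namespace `http://schemas.microsoft.com/win/2004/08/events/event`), and `parse_audit_event`, `is_delete_attempt`, and the sample event are hypothetical. Event IDs 4660, 4663, and 4670 are the standard Windows IDs for object deletion, object access attempts, and permission changes, and `0x10000` is the Windows `DELETE` access right.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows event XML (assumed to match FSx audit events).
NS = {"e": "http://schemas.microsoft.com/win/2004/08/events/event"}

# Standard Windows security event IDs relevant to file auditing.
EVENT_NAMES = {
    "4660": "object deleted",
    "4663": "object access attempt",
    "4670": "permissions changed",
}

DELETE_ACCESS = 0x10000  # Windows DELETE access right


def parse_audit_event(xml_text: str) -> dict:
    """Flatten one audit event into a dict of System fields plus EventData values."""
    root = ET.fromstring(xml_text)
    event_id = root.findtext("e:System/e:EventID", default="", namespaces=NS)
    created = root.find("e:System/e:TimeCreated", NS)
    record = {
        "event_id": event_id,
        "event": EVENT_NAMES.get(event_id, "other"),
        "time": created.get("SystemTime") if created is not None else None,
    }
    # EventData holds <Data Name="...">value</Data> pairs such as SubjectUserName.
    for data in root.findall("e:EventData/e:Data", NS):
        name = data.get("Name")
        if name:
            record[name] = data.text or ""
    return record


def is_delete_attempt(record: dict) -> bool:
    """True for an object access event (4663) whose access mask includes DELETE."""
    mask = int(record.get("AccessMask", "0x0"), 16)
    return record["event_id"] == "4663" and (mask & DELETE_ACCESS) != 0


# Trimmed, hypothetical event for illustration only.
SAMPLE_EVENT = (
    "<Event xmlns='http://schemas.microsoft.com/win/2004/08/events/event'>"
    "<System><EventID>4663</EventID>"
    "<TimeCreated SystemTime='2024-05-01T12:00:00.000Z'/></System>"
    "<EventData>"
    "<Data Name='SubjectUserName'>alice</Data>"
    "<Data Name='ObjectName'>\\share\\confidential.txt</Data>"
    "<Data Name='AccessMask'>0x10000</Data>"
    "</EventData></Event>"
)

if __name__ == "__main__":
    record = parse_audit_event(SAMPLE_EVENT)
    print(record["SubjectUserName"], record["ObjectName"], is_delete_attempt(record))
```

In the solution itself, this kind of field extraction happens inside the Logs Insights query and the LLM's reading of the results; the sketch only shows which fields carry the "who, what, and when" of an event.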

This approach greatly reduces the time and expertise needed to analyze FSx for Windows File Server audit logs, so security teams can focus on addressing potential threats rather than parsing log data. By surfacing anomalies that would otherwise stay buried in massive log datasets, the solution turns raw log data into actionable security intelligence.

Solution overview

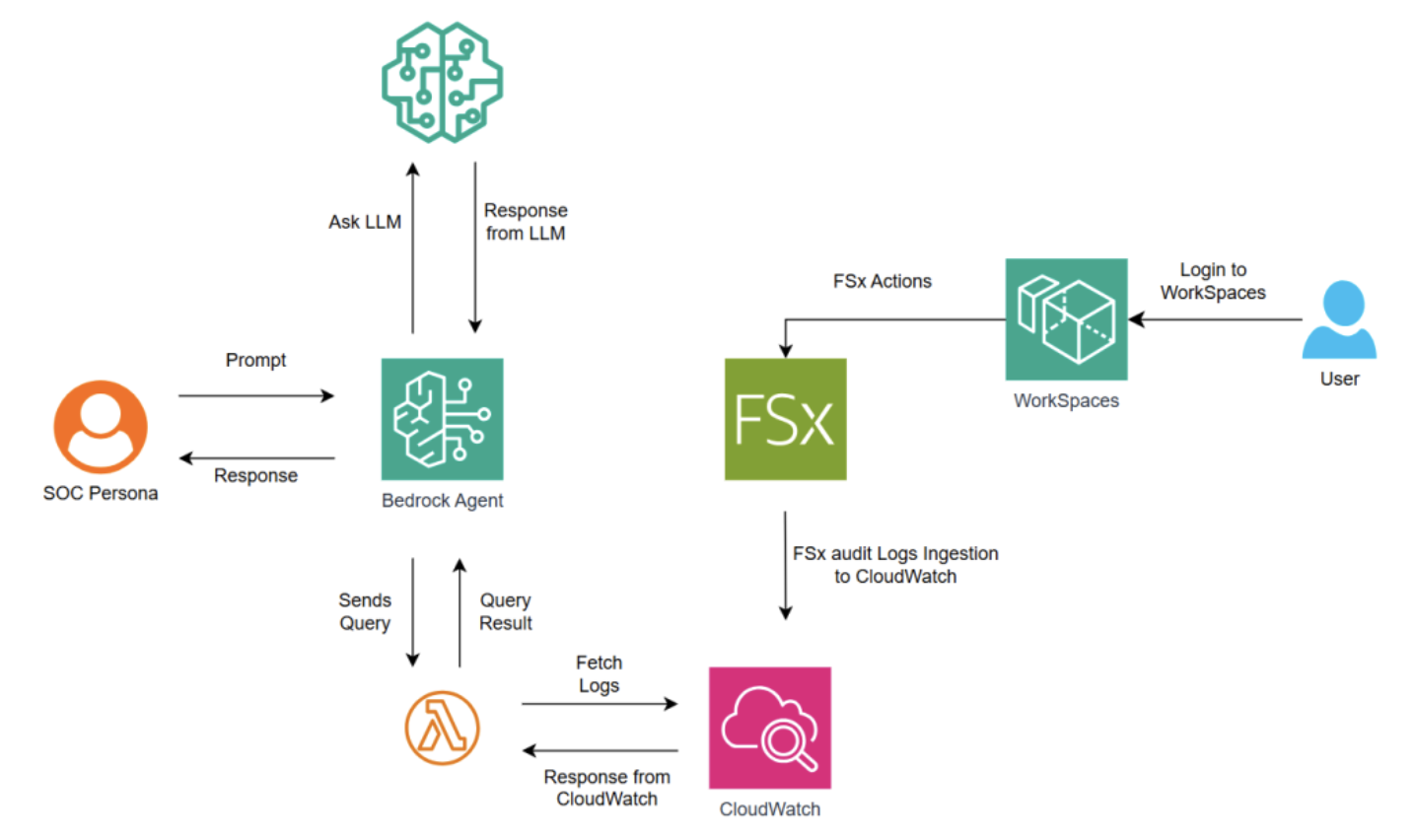

In this post, we walk you through setting up an anomaly detection system for Amazon FSx for Windows File Server audit logs, as depicted in Figure 1. The framework in this solution can also be extended to other log sources to quickly detect anomalous behavior and support compliance investigations and policy enforcement.

Figure 1: Architecture overview

The solution has the following components:

User activity and log generation: Users access their workstations through Amazon WorkSpaces, which provides secure virtual desktop infrastructure. During their regular activities (such as file access, system modifications, or security-relevant actions), FSx for Windows File Server automatically generates detailed audit logs. This represents the initial data generation phase of this solution, where every relevant user action is captured and logged.

Log collection in CloudWatch: FSx for Windows File Server publishes the audit logs to a log group in Amazon CloudWatch Logs, a centralized logging and monitoring service. Events are delivered in near real time, so user activities are captured and stored in a centralized, searchable format. This log group is the primary data source for subsequent analysis, providing a secure and scalable repository for the audit logs.

SOC team interface: SOC teams interact with the system through an Amazon Bedrock Agent interface. This web-based interface lets security analysts ask questions in natural language instead of writing complex log queries. For example, an analyst might type “Show me who changed permissions on the file named payroll.pdf” instead of writing a specific CloudWatch Logs Insights query.

Query processing through Amazon Bedrock Agents: When a SOC analyst enters a natural language question, it’s sent to an Amazon Bedrock Agent. The agent serves as an intelligent intermediary that:

- Analyzes the natural language question to understand the intent

- Processes the question to determine what information needs to be retrieved

- Converts the natural language question into a structured CloudWatch Logs Insights query

- Invokes a Lambda function with the formulated query
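The agent writes these queries itself, but it can help to see what a structured query for the sample questions might look like, for example when writing the agent instructions or checking what the agent produced. The following Python sketch builds CloudWatch Logs Insights query strings. The helper names are hypothetical, and the `parse` patterns assume the audit events store fields as `<Data Name='...'>` elements with single-quoted attributes, so adjust them to match what you see in your log group.

```python
# Hypothetical helpers that build CloudWatch Logs Insights queries for FSx audit events.


def _extract(field: str, alias: str) -> str:
    """Regex parse of one <Data Name='field'> value (single-quoted attributes assumed)."""
    return f"| parse @message /<Data Name='{field}'>(?<{alias}>[^<]*)/"


def _literal(value: str) -> str:
    """Quote user input, dropping double quotes so it cannot end the literal early."""
    return '"' + value.replace('"', "") + '"'


def file_event_query(event_id: str, file_name: str, limit: int = 50) -> str:
    """Events of one type (4663 access, 4670 permission change) that mention a file."""
    parses = [_extract("SubjectUserName", "user"), _extract("ObjectName", "object")]
    shown = "@timestamp, user, object"
    if event_id == "4663":
        # Access attempts carry an AccessMask; 0x10000 is the DELETE right.
        parses.append(_extract("AccessMask", "mask"))
        shown += ", mask"
    return "\n".join([
        f'filter @message like "<EventID>{event_id}</EventID>"'
        f" and @message like {_literal(file_name)}",
        *parses,
        f"| fields {shown}",
        "| sort @timestamp desc",
        f"| limit {int(limit)}",
    ])


def top_accessors_query(limit: int = 20) -> str:
    """Access counts per user; an outlier may point at bulk copying (exfiltration)."""
    return "\n".join([
        'filter @message like "<EventID>4663</EventID>"',
        _extract("SubjectUserName", "user"),
        "| stats count(*) as accesses by user",
        "| sort accesses desc",
        f"| limit {int(limit)}",
    ])


if __name__ == "__main__":
    # "Identify who deleted the file named confidential.txt"
    print(file_event_query("4663", "confidential.txt"))
    # "Show me who changed permissions on the file named payroll.pdf"
    print(file_event_query("4670", "payroll.pdf"))
```

Filtering on the raw `<EventID>` text first keeps each query cheap, because the regex `parse` commands then run only on the matching events.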

CloudWatch Logs retrieval: After receiving the structured query from the Amazon Bedrock Agent, the Lambda function runs the query against CloudWatch Logs, retrieves the relevant log entries, formats the results, and returns the formatted log data to the Amazon Bedrock Agent.

Enhanced analysis: The Amazon Bedrock Agent then analyzes the results as follows:

- Takes the retrieved log data as context.

- Sends both the original question and the log data to a large language model (LLM) through Amazon Bedrock.

- Has the LLM analyze the logs for anomalies or patterns relevant to the original question.

- Generates insights and explanations from that analysis, enriching the raw log data with AI-powered interpretation.

Response delivery: The Amazon Bedrock Agent formats the LLM’s analysis and sends it back to the SOC analyst in a clear, readable format.

Prerequisites

The following prerequisites are necessary to deploy the solution:

- An AWS account with an AWS Identity and Access Management (IAM) role and user with permissions to create and manage the necessary resources and components for the application. If you don’t have an AWS account, then go to the post How do I create and activate a new Amazon Web Services account?

- If you prefer to use WorkSpaces for testing this solution, follow the steps in Amazon WorkSpaces quick setup to set up WorkSpaces, and then follow Using Amazon FSx for Windows File Server with Amazon WorkSpaces to mount your Amazon FSx file system in them.

- Before you can use a foundation model in Amazon Bedrock, you must request access to it. For instructions, see Add or remove access to Amazon Bedrock foundation models.

- Our solution needs an FSx for Windows File Server file system with file access auditing enabled. You may follow the steps outlined in the post File Access Auditing Is Now Available for Amazon FSx for Windows File Server to set up your file system, or refer to the file access auditing documentation.

Walkthrough

In the following steps, we demonstrate how to search the audit logs generated by FSx for Windows File Server for anomalies using Amazon Bedrock Agents.

Step 1: Set up Lambda function

Create a Lambda function that queries CloudWatch Logs. To do this:

- Open the Lambda console.

- Choose Create function.

- Configure the following settings:

  - Function name: Enter a name for the function.

  - Runtime: Choose Python 3.13 or later.

- Choose Create function.

- The console creates a Lambda function with a single source file named lambda_function.py. In the built-in code editor, edit this file to add the function code, and then choose Deploy to save your changes.

Next, adjust the following settings on the Lambda function:

- Set the function timeout to 15 minutes.

- Add an environment variable with the key “CW_LOG_GROUP_NAME”, and set its value to the name of the CloudWatch log group that stores the Amazon FSx audit logs.

- Set the QUERY_DAYS value in the Lambda code to the number of days of logs to query. For example, to query one week of data, set QUERY_DAYS = 7. Regardless of this value, the function returns no more than 20 KB of log data.

- Grant the Lambda execution role permission to query the CloudWatch log group that stores the FSx audit logs.
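If you prefer to script these settings, the following Python sketch applies them with boto3. The function name, role name, account ID, Region, log group, and the policy name `fsx-audit-logs-insights-query` are placeholders, and the sketch assumes the function reads `QUERY_DAYS` from its environment. The inline policy grants only the two CloudWatch Logs actions a Logs Insights query needs, `logs:StartQuery` and `logs:GetQueryResults`; the resource scoping shown is an assumption worth checking against the IAM service authorization reference.

```python
import json


def logs_query_policy(region: str, account_id: str, log_group: str) -> dict:
    """Inline policy allowing Logs Insights queries against one log group."""
    group_arn = f"arn:aws:logs:{region}:{account_id}:log-group:{log_group}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["logs:StartQuery"],
                # Both ARN forms are listed because log group ARNs appear with and without ":*".
                "Resource": [group_arn, f"{group_arn}:*"],
            },
            {
                "Effect": "Allow",
                # Assumption: GetQueryResults is not scoped to a log group, so "*" is used.
                "Action": ["logs:GetQueryResults"],
                "Resource": "*",
            },
        ],
    }


def configure_function(lambda_client, iam_client, *, function_name: str, role_name: str,
                       region: str, account_id: str, log_group: str,
                       query_days: int = 7) -> None:
    """Apply the timeout, environment variables, and log permissions from this step."""
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=900,  # 15 minutes, the Lambda maximum
        # Note: this replaces any environment variables already set on the function.
        Environment={"Variables": {"CW_LOG_GROUP_NAME": log_group,
                                   "QUERY_DAYS": str(query_days)}},
    )
    iam_client.put_role_policy(
        RoleName=role_name,  # the function's execution role
        PolicyName="fsx-audit-logs-insights-query",  # hypothetical policy name
        PolicyDocument=json.dumps(logs_query_policy(region, account_id, log_group)),
    )
```

To apply it, pass `boto3.client("lambda")` and `boto3.client("iam")` along with your own values as keyword arguments.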

Step 2: Set up Amazon Bedrock Agent

To create an Amazon Bedrock Agent:

- Sign in to the AWS Management Console using an IAM role with Amazon Bedrock permissions, and open the Amazon Bedrock console.

- Choose Agents from the left navigation pane under Builder tools.

- In the Agents section, choose Create Agent.

- (Optional) Change the automatically generated Name for the agent and provide an optional Description for it.

- Choose Create. Your agent is created and you’re taken to the Agent builder for your newly created agent, where you can configure your agent.

- For the Agent resource role, choose Create and use a new service role. Let Amazon Bedrock create the service role and set up the necessary permissions on your behalf.

- For Select model, choose a foundation model (FM) for your agent to invoke during orchestration. This solution uses Anthropic’s Claude 3.5 Sonnet v2.

- In Instructions for the Agent, enter details to tell the agent what it should do and how it should interact with users.

- Choose Save.

- Leave Additional Settings as default.

- In the Action groups section, choose Add to add an action group to your agent.

- (Optional) In the Action group details section, change the automatically generated Name and provide an optional Description for your action group.

- In the Action group type section, choose Define with function details.

- In the Action group invocation section, choose Select an existing Lambda function, and then choose the Lambda function you created in Step 1 and the function version to use.

- For the action group function, provide a Name and an optional (but recommended) Description.

- For Enable confirmation of action group function, choose Disabled.

- In the Parameters subsection, choose Add parameter and define the following fields:

  - Name: query

  - Description: query to extract logs

  - Type: String

  - Required: True

- When you’re done creating the action group, choose Create.

- Choose Prepare to apply the changes you made to the agent before testing it.
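The action group part of this step can also be scripted. The following Python sketch uses the boto3 `bedrock-agent` client to add an action group whose function schema matches the console settings in this step (a single required string parameter named `query`) and then prepares the agent. It assumes the agent already exists; the agent ID, Lambda ARN, action group name, and function name `run_logs_query` are placeholders.

```python
def query_function_schema() -> dict:
    """Function schema with one required string parameter named "query"."""
    return {
        "functions": [
            {
                "name": "run_logs_query",  # hypothetical function name
                "description": "Run a CloudWatch Logs Insights query against FSx audit logs",
                "parameters": {
                    "query": {
                        "description": "query to extract logs",
                        "type": "string",
                        "required": True,
                    }
                },
            }
        ]
    }


def add_action_group(agent_client, agent_id: str, lambda_arn: str) -> None:
    """Attach the Lambda-backed action group to the working draft, then prepare the agent."""
    agent_client.create_agent_action_group(
        agentId=agent_id,
        agentVersion="DRAFT",  # action groups are added to the working draft
        actionGroupName="fsx-audit-query",  # hypothetical action group name
        actionGroupExecutor={"lambda": lambda_arn},
        functionSchema=query_function_schema(),
    )
    # Same effect as choosing Prepare in the console.
    agent_client.prepare_agent(agentId=agent_id)
```

Call it with `boto3.client("bedrock-agent")`, your agent ID, and the ARN of the Lambda function from Step 1.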

Step 3: Set up permission for Amazon Bedrock Agent to invoke Lambda

- Open the Lambda function in the Lambda console.

- Choose the Configuration tab.

- Choose Permissions.

- Scroll down to Resource-based policy statements and choose Add permissions.

- Choose the AWS service radio button.

- Choose Other from the Service dropdown.

- Enter a unique statement ID for Statement ID.

- Enter bedrock.amazonaws.com for the Principal.

- Enter your Amazon Bedrock Agent’s ARN as the Source ARN.

- Choose lambda:InvokeFunction as the Action.

- Choose Save.
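The same resource-based policy statement can be added with the Lambda `add_permission` API, as in the following Python sketch. The function name, statement ID, and agent ID are placeholders, and the agent ARN format built by the hypothetical `agent_arn` helper is an assumption; copy the ARN from the agent’s details page if in doubt. Passing `SourceAccount` alongside `SourceArn` further restricts who can invoke the function.

```python
def agent_arn(region: str, account_id: str, agent_id: str) -> str:
    """Build an agent ARN (format assumed; the console shows the exact value)."""
    return f"arn:aws:bedrock:{region}:{account_id}:agent/{agent_id}"


def allow_agent_invoke(lambda_client, function_name: str, source_arn: str,
                       account_id: str,
                       statement_id: str = "AllowBedrockAgentInvoke") -> None:
    """Allow only the given Amazon Bedrock agent to invoke the function."""
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=statement_id,  # must be unique within the function's policy
        Action="lambda:InvokeFunction",
        Principal="bedrock.amazonaws.com",
        SourceArn=source_arn,
        SourceAccount=account_id,
    )
```

Call it with `boto3.client("lambda")`, the function name, and your agent’s ARN.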

Testing and user experience workflow

When you’ve created your agent, you can test it directly in the Amazon Bedrock console by following the steps in Test and troubleshoot agent behavior. After confirming that your agent’s configuration is satisfactory, you can create a version (a snapshot of your agent) and an alias that points to that version. Although our example tests the agent from the Amazon Bedrock console, you can also integrate the agent into your own application. For detailed deployment and integration instructions, refer to Deploy and integrate an Amazon Bedrock agent into your application.

- Query input: The analyst enters a natural language question in the prompt field, such as “Identify any potential suspicious patterns or anomalies” or “What are the files deleted by user?”

- AI-powered analysis: Upon submission, the system:

  - Interprets the natural language query

  - Generates an appropriate CloudWatch Logs Insights query based on the agent’s instructions

  - Executes the query against the CloudWatch log group

  - Retrieves relevant log data

- Intelligent summarization: An LLM processes the retrieved data to provide a concise, relevant summary of findings.

- Results presentation: The analysis is displayed in a straightforward format that includes the following:

  - Key findings and potential security issues

  - Relevant details such as usernames, file names, and timestamps

  - Recommendations for further investigation

Example queries and screenshots

Key benefits

This generative AI-powered approach to FSx for Windows File Server security offers several significant advantages for security operations teams:

- Efficiency: AI models can rapidly analyze vast volumes of log data, freeing up valuable time and resources for security analysts, thereby reducing mean time to detect (MTTD) security events.

- Automated pattern detection: AI excels at uncovering patterns, anomalies, correlations, and complex relationships within large log data sets, a task that would be extremely challenging and time-consuming for humans to perform manually.

- Faster and more consistent auditing: When compared to manual methods, this solution enables security teams to prioritize and address the highest-risk audit findings with greater efficiency and consistency.

- Accessibility: Natural language querying makes log analysis accessible to security team members with varying technical expertise, minimizing the need for specialized knowledge of log formats or query languages.

- Scalability: Because it is built on managed services (Amazon Bedrock, AWS Lambda, and Amazon CloudWatch), the solution grows with your FSx for Windows File Server environment without additional infrastructure to manage.

- Proactive security: Because analysts can quickly probe logs for suspicious patterns, the system helps identify potential security threats before they escalate into major incidents.

Cleaning up

If you’re experimenting using the steps in this post, then delete the resources created to avoid incurring costs. To do this:

- Delete the Lambda function you created:

  - Open the Lambda console.

  - Choose the function created for testing, choose Actions, and choose Delete. Confirm deletion by typing “delete” in the confirmation field and choosing Delete.

- Delete the Amazon Bedrock Agent you created.

- If you created an FSx for Windows File Server file system to test this solution, delete it.

- If you created WorkSpaces to test this solution, delete them.

Conclusion

By combining AWS services such as Amazon Bedrock, AWS Lambda, Amazon CloudWatch, and Amazon FSx for Windows File Server with generative AI capabilities, this solution changes how organizations approach file system security. Security teams can identify potential threats more quickly, investigate incidents more thoroughly, and maintain stronger security postures with less manual effort.

The implementation detailed in this post demonstrates how to:

- Configure FSx for Windows File Server audit logging to capture comprehensive security events.

- Process XML-formatted logs using CloudWatch Logs Insights.

- Create a Lambda function to execute and format query results.

- Configure an Amazon Bedrock Agent to translate natural language to structured queries.

This approach not only enhances security for FSx for Windows File Server environments but also demonstrates the broader potential of generative AI in cloud security operations. The framework established here can be extended to other log sources, creating a comprehensive security monitoring system that helps teams keep pace with evolving threats.

As cloud adoption continues to accelerate, solutions like this will become increasingly important for organizations that want to maintain robust security postures while managing their resources efficiently. By automating audit log analysis and giving security teams a natural language interface, organizations can reduce the time and expertise required to identify and respond to potential security incidents.

For more information on AWS security best practices, check out the AWS Security Documentation and the AWS Security Blog. To learn more about file storage access patterns and insights using FSx for Windows File Server, refer to the related AWS Storage posts that explore more approaches to forensic analysis of file audit records.

Thank you for reading this post. Try out the solution in this post, and leave your feedback and questions in the comments section.