Artificial Intelligence

Category: Responsible AI

Governance by design: The essential guide for successful AI scaling

Picture this: Your enterprise has just deployed its first generative AI application. The initial results are promising, but as you plan to scale across departments, critical questions emerge. How will you enforce consistent security, prevent model bias, and maintain control as AI applications multiply?

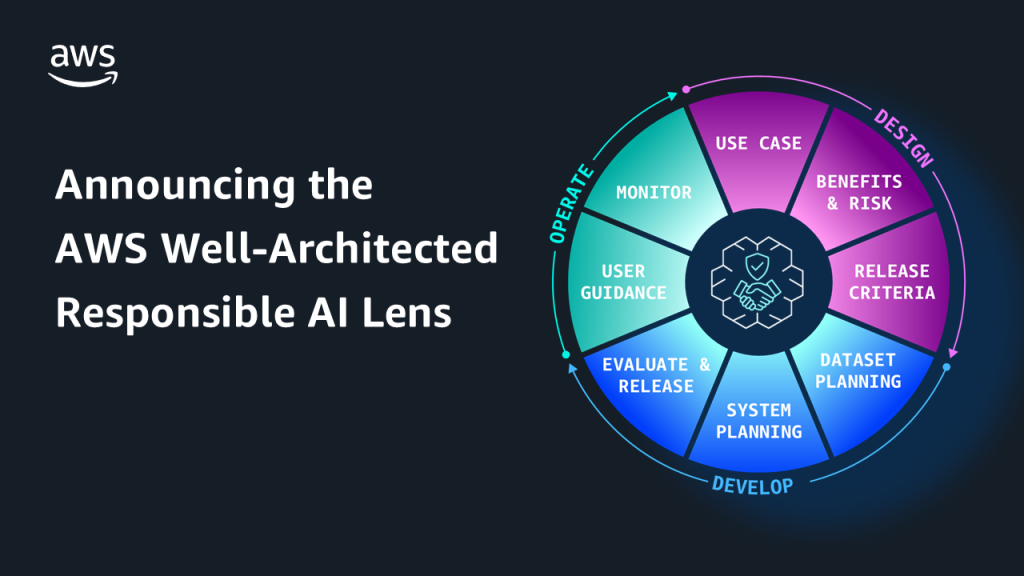

Announcing the AWS Well-Architected Responsible AI Lens

Today, we’re announcing the AWS Well-Architected Responsible AI Lens, a set of questions and corresponding best practices that help builders address responsible AI concerns throughout development and operation.

Responsible AI design in healthcare and life sciences

In this post, we explore the critical design considerations for building responsible AI systems in healthcare and life sciences, focusing on establishing governance mechanisms, transparency artifacts, and security measures that ensure safe and effective generative AI applications. The discussion covers essential policies for mitigating risks like confabulation and bias while promoting trust, accountability, and patient safety throughout the AI development lifecycle.

Incorporating responsible AI into generative AI project prioritization

In this post, we explore how companies can systematically incorporate responsible AI practices into their generative AI project prioritization methodology to better evaluate business value against costs while addressing novel risks like hallucination and regulatory non-compliance. Through a practical example, the post demonstrates how conducting upfront responsible AI risk assessments can significantly change project rankings by revealing substantial mitigation work that affects overall project complexity and timeline.

Responsible AI for the payments industry – Part 1

This post explores the unique challenges facing the payments industry in scaling AI adoption, the regulatory considerations that shape implementation decisions, and practical approaches to applying responsible AI principles. In Part 2, we provide practical implementation strategies to operationalize responsible AI within your payment systems.

Responsible AI for the payments industry – Part 2

In Part 1 of our series, we explored the foundational concepts of responsible AI in the payments industry. In this post, we discuss the practical implementation of responsible AI frameworks.

How Rocket streamlines the home buying experience with Amazon Bedrock Agents

Rocket AI Agent is more than a digital assistant. It’s a reimagined approach to client engagement, powered by agentic AI. By combining Amazon Bedrock Agents with Rocket’s proprietary data and backend systems, Rocket has created a smarter, more scalable, and more human experience available 24/7, without the wait. This post explores how Rocket brought that vision to life using Amazon Bedrock Agents, powering a new era of AI-driven support that is consistently available, deeply personalized, and built to take action.

Tailor responsible AI with new safeguard tiers in Amazon Bedrock Guardrails

In this post, we introduce the new safeguard tiers available in Amazon Bedrock Guardrails, explain their benefits and use cases, and provide guidance on how to implement and evaluate them in your AI applications.

Building trust in AI: The AWS approach to the EU AI Act

The EU AI Act establishes comprehensive regulations for AI development and deployment within the EU. AWS is committed to building trust in AI through various initiatives, including being among the first signatories of the EU’s AI Pact, providing AI Service Cards and guardrails, and offering educational resources, while helping customers understand their responsibilities under the new regulatory framework.

Build generative AI solutions with Amazon Bedrock

In this post, we show you how to build generative AI applications on Amazon Web Services (AWS) using the capabilities of Amazon Bedrock, highlighting how Amazon Bedrock can be used at each step of your generative AI journey. This guide is valuable for both experienced AI engineers and newcomers to the generative AI space, helping you use Amazon Bedrock to its fullest potential.