Artificial Intelligence

Category: How-To

Drive organizational growth with Amazon Lex multi-developer CI/CD pipeline

In this post, we walk through a multi-developer CI/CD pipeline for Amazon Lex that enables isolated development environments, automated testing, and streamlined deployments. We show you how to set up the solution and share real-world results from teams using this approach.

Unlock powerful call center analytics with Amazon Nova foundation models

In this post, we discuss how Amazon Nova demonstrates capabilities in conversational analytics, call classification, and other use cases often relevant to contact center solutions. We examine these capabilities for both single-call and multi-call analytics use cases.

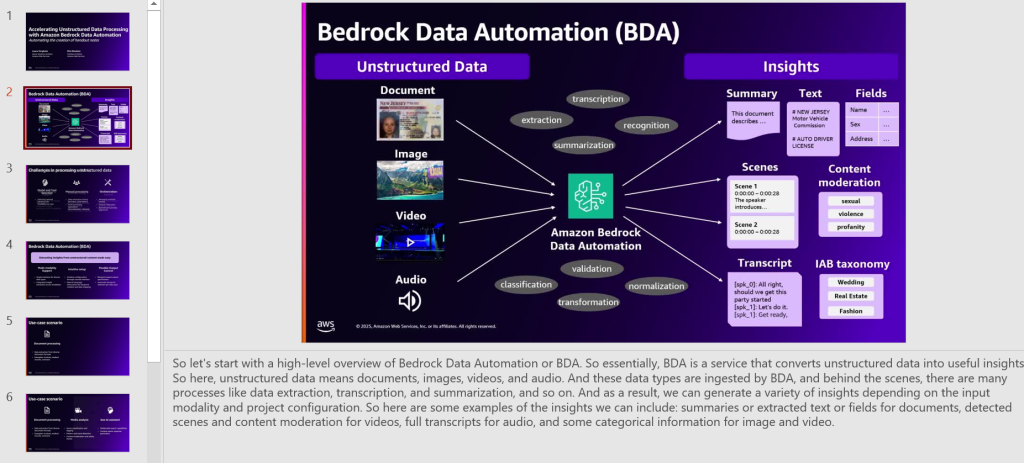

Automate the creation of handout notes using Amazon Bedrock Data Automation

In this post, we show how you can build an automated, serverless solution to transform webinar recordings into comprehensive handouts using Amazon Bedrock Data Automation for video analysis. We walk you through the implementation of Amazon Bedrock Data Automation to transcribe and detect slide changes, as well as the use of Amazon Bedrock foundation models (FMs) for transcription refinement, combined with custom AWS Lambda functions orchestrated by AWS Step Functions.

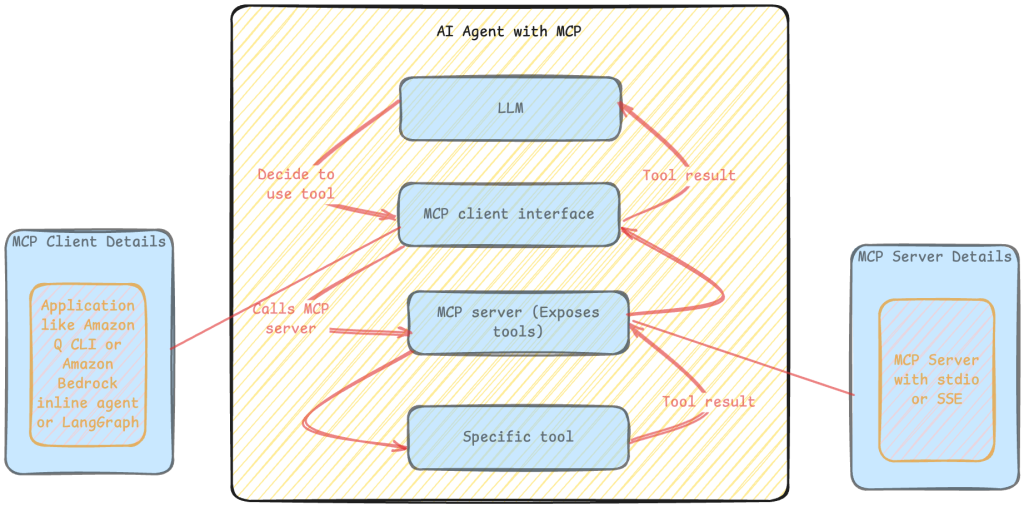

Streamline GitHub workflows with generative AI using Amazon Bedrock and MCP

This blog post explores how to create powerful agentic applications using Amazon Bedrock FMs, LangGraph, and the Model Context Protocol (MCP), with a practical scenario of handling a GitHub workflow of issue analysis, code fixes, and pull request generation.

A generative AI prototype with Amazon Bedrock transforms life sciences and the genome analysis process

This post explores deploying a text-to-SQL pipeline using generative AI models and Amazon Bedrock to ask natural language questions of a genomics database. We demonstrate how to implement an AI assistant web interface with AWS Amplify and explain the prompt engineering strategies adopted to generate the SQL queries. Finally, we present instructions to deploy the service in your own AWS account.

From fridge to table: Use Amazon Rekognition and Amazon Bedrock to generate recipes and combat food waste

In this post, we walk through how to build the FoodSavr solution (fictitious name used for the purposes of this post), which uses Amazon Rekognition Custom Labels to detect ingredients and Anthropic’s Claude 3.0 on Amazon Bedrock to generate personalized recipes. We demonstrate an end-to-end architecture where a user can upload an image of their fridge, and using the ingredients found there (detected by Amazon Rekognition), the solution will give them a list of recipes (generated by Amazon Bedrock). The architecture also recognizes missing ingredients and provides the user with a list of nearby grocery stores.

Build a multi-tenant generative AI environment for your enterprise on AWS

While organizations continue to discover the powerful applications of generative AI, adoption is often slowed down by team silos and bespoke workflows. To move faster, enterprises need robust operating models and a holistic approach that simplifies the generative AI lifecycle. In the first part of the series, we showed how AI administrators can build a […]

Index your Atlassian Confluence Cloud contents using the Amazon Q Confluence Cloud connector for Amazon Q Business

In this post, we provide an overview of the Amazon Q Business Confluence Cloud connector and how you can use it to integrate generative AI assistance with your Confluence Cloud content.

Use the ApplyGuardrail API with long-context inputs and streaming outputs in Amazon Bedrock

As generative artificial intelligence (AI) applications become more prevalent, maintaining responsible AI principles becomes essential. Without proper safeguards, large language models (LLMs) can potentially generate harmful, biased, or inappropriate content, posing risks to individuals and organizations. Applying guardrails helps mitigate these risks by enforcing policies and guidelines that align with ethical principles and legal requirements. Amazon […]

Improve RAG accuracy with fine-tuned embedding models on Amazon SageMaker

This post demonstrates how to use Amazon SageMaker to fine-tune a Sentence Transformer embedding model and deploy it with an Amazon SageMaker endpoint. The code from this post and more examples are available in the GitHub repo.