Artificial Intelligence

Category: Amazon Machine Learning

Iterate faster with Amazon Bedrock AgentCore Runtime direct code deployment

Amazon Bedrock AgentCore is an agentic platform for building, deploying, and operating effective agents securely at scale. Amazon Bedrock AgentCore Runtime is a fully managed service of Bedrock AgentCore, which provides low-latency serverless environments to deploy agents and tools. It provides session isolation, supports multiple agent frameworks including popular open-source frameworks, and handles multimodal […]

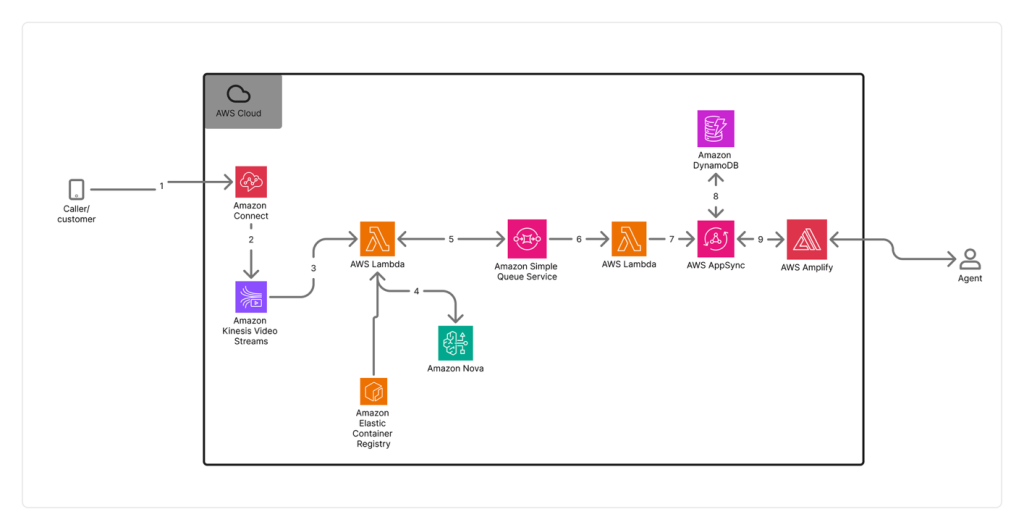

How Switchboard, MD automates real-time call transcription in clinical contact centers with Amazon Nova Sonic

In this post, we examine the specific challenges Switchboard, MD faced with scaling transcription accuracy and cost-effectiveness in clinical environments, their evaluation process for selecting the right transcription solution, and the technical architecture they implemented using Amazon Connect and Amazon Kinesis Video Streams. The post also covers the results they achieved and shows how they used this foundation to automate EMR matching and give healthcare staff more time to focus on patient care.

Build reliable AI systems with Automated Reasoning on Amazon Bedrock – Part 1

Enterprises in regulated industries often need mathematical certainty that every AI response complies with established policies and domain knowledge. Regulated industries can’t use traditional quality assurance methods that test only a statistical sample of AI outputs and make probabilistic assertions about compliance. When we launched Automated Reasoning checks in Amazon Bedrock Guardrails in preview at […]

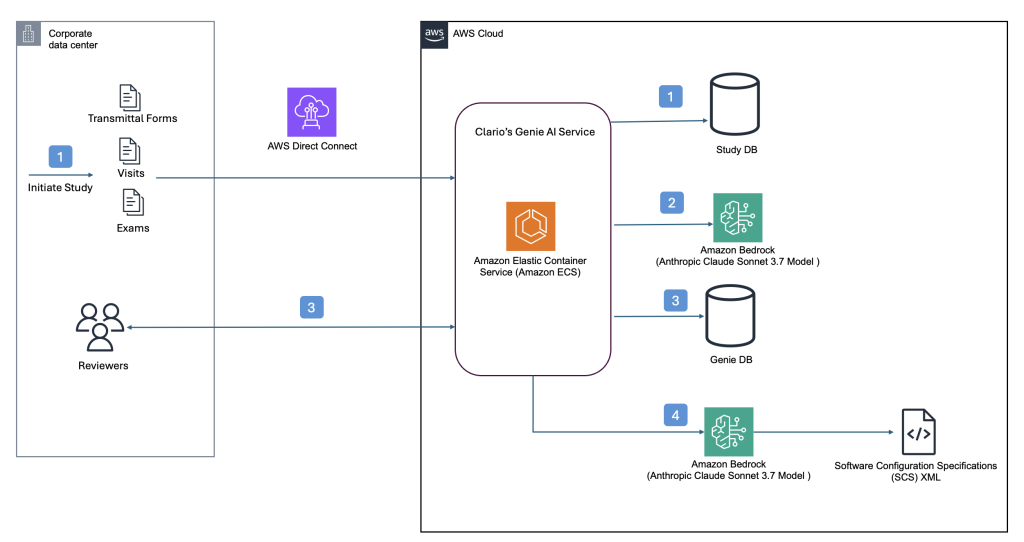

Clario streamlines clinical trial software configurations using Amazon Bedrock

This post builds upon our previous post discussing how Clario developed an AI solution powered by Amazon Bedrock to accelerate clinical trials. Since then, Clario has further enhanced their AI capabilities, focusing on innovative solutions that streamline the generation of software configurations and artifacts for clinical trials while delivering high-quality clinical evidence.

Introducing Amazon Bedrock cross-Region inference for Claude Sonnet 4.5 and Haiku 4.5 in Japan and Australia

こんにちは, G’day. The recent launch of Anthropic’s Claude Sonnet 4.5 and Claude Haiku 4.5, now available on Amazon Bedrock, marks a significant leap forward in generative AI models. These state-of-the-art models excel at complex agentic tasks, coding, and enterprise workloads, offering enhanced capabilities to developers. Along with the new models, we are thrilled to announce that […]

Reduce CAPTCHAs for AI agents browsing the web with Web Bot Auth (Preview) in Amazon Bedrock AgentCore Browser

AI agents need to browse the web on your behalf. When your agent visits a website to gather information, complete a form, or verify data, it encounters the same defenses designed to stop unwanted bots: CAPTCHAs, rate limits, and outright blocks. Today, we are excited to share that AWS has a solution. Amazon Bedrock AgentCore […]

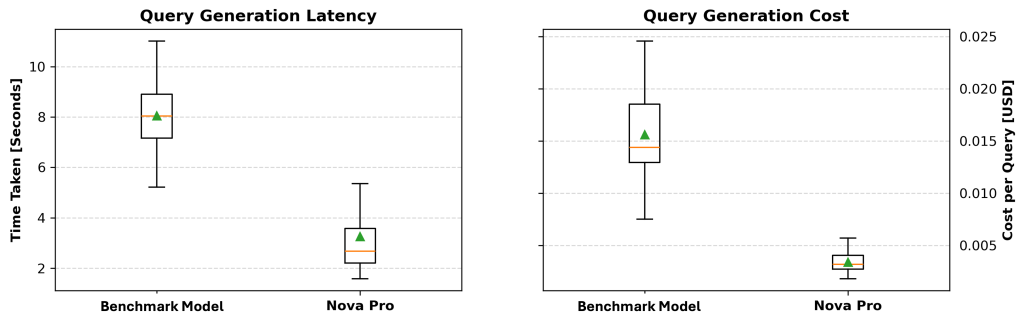

Generate Gremlin queries using Amazon Bedrock models

In this post, we explore an innovative approach that converts natural language to Gremlin queries using Amazon Bedrock models such as Amazon Nova Pro, helping business analysts and data scientists access graph databases without requiring deep technical expertise. The methodology involves three key steps: extracting graph knowledge, structuring the graph similar to text-to-SQL processing, and generating executable Gremlin queries through an iterative refinement process that achieved 74.17% overall accuracy in testing.
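The iterative refinement step mentioned above can be pictured as a generate-validate-retry loop. The following is a minimal sketch under stated assumptions, not the method from the post: `refine_query`, `generate`, and `validate` are hypothetical names, the generator stands in for a model call, and the validator stands in for whatever syntax or execution check a real pipeline would use.

```python
from typing import Callable, Optional


def refine_query(
    question: str,
    generate: Callable[[str, Optional[str]], str],
    validate: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Return the first candidate query that passes validation, or None.

    `generate` (hypothetical) maps a question plus optional error feedback
    to a candidate query string. `validate` (hypothetical) returns None when
    the query is acceptable, otherwise an error message to feed back.
    """
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        query = generate(question, feedback)
        error = validate(query)
        if error is None:
            return query
        # Pass the validator's message into the next generation attempt.
        feedback = error
    return None


# Stub generator: first attempt is malformed, the retry is corrected.
def stub_generate(question: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "g.V().hasLabel('person'"
    return "g.V().hasLabel('person')"


# Stub validator: only checks that parentheses are balanced in count.
def stub_validate(query: str) -> Optional[str]:
    if query.count("(") != query.count(")"):
        return "unbalanced parentheses"
    return None
```

Calling `refine_query("list all people", stub_generate, stub_validate)` with these stubs retries once and returns the corrected query string.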

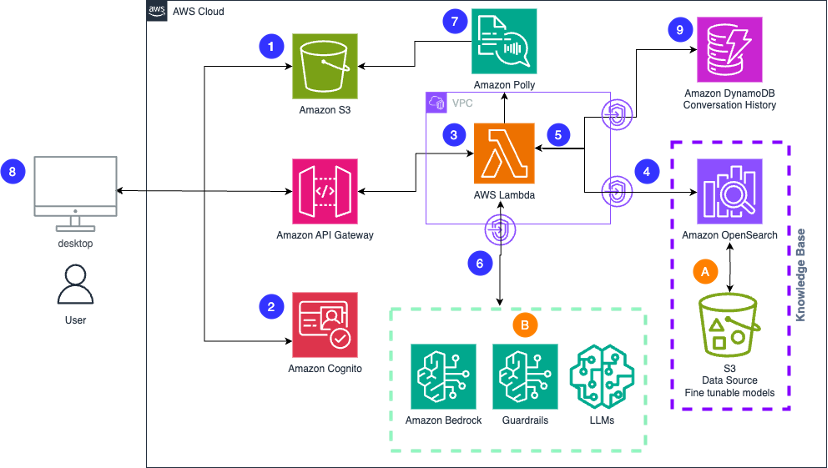

Build scalable creative solutions for product teams with Amazon Bedrock

In this post, we explore how product teams can use Amazon Bedrock and AWS services to transform their creative workflows through generative AI, enabling rapid content iteration across multiple formats while maintaining brand consistency and compliance. The solution demonstrates how teams can deploy a scalable generative AI application that accelerates everything from product descriptions and marketing copy to visual concepts and video content, significantly reducing time to market while enhancing creative quality.

Build a proactive AI cost management system for Amazon Bedrock – Part 2

In this post, we explore advanced cost monitoring strategies for Amazon Bedrock deployments, introducing granular custom tagging approaches for precise cost allocation and comprehensive reporting mechanisms that build upon the proactive cost management foundation established in Part 1. The solution demonstrates how to implement invocation-level tagging, application inference profiles, and integration with AWS Cost Explorer to create a 360-degree view of generative AI usage and expenses.

Build a proactive AI cost management system for Amazon Bedrock – Part 1

In this post, we introduce a comprehensive solution for proactively managing Amazon Bedrock inference costs through a cost sentry mechanism designed to establish and enforce token usage limits, providing organizations with a robust framework for controlling generative AI expenses. The solution uses serverless workflows and native Amazon Bedrock integration to deliver a predictable, cost-effective approach that aligns with organizational financial constraints while preventing runaway costs through leading indicators and real-time budget enforcement.
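To make the idea of enforcing token usage limits concrete, here is a minimal sketch of a budget check placed in front of a model call. It is an illustration under stated assumptions rather than the solution from the post: `TokenBudget` and `guarded_invoke` are hypothetical names, the budget lives in memory instead of a serverless workflow, and `invoke` stands in for an actual inference call.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class TokenBudget:
    """In-memory token budget (hypothetical; a real system would persist this)."""
    limit: int
    used: int = 0

    def allows(self, estimated_tokens: int) -> bool:
        # Leading indicator: check the estimate before spending anything.
        return self.used + estimated_tokens <= self.limit

    def record(self, actual_tokens: int) -> None:
        self.used += actual_tokens


def guarded_invoke(
    budget: TokenBudget,
    estimated_tokens: int,
    invoke: Callable[[], Tuple[str, int]],
) -> Optional[str]:
    """Run `invoke` only if the estimate fits the remaining budget.

    `invoke` (hypothetical) returns the response text and the number of
    tokens actually consumed. Returns None when the call is blocked.
    """
    if not budget.allows(estimated_tokens):
        return None
    text, actual_tokens = invoke()
    budget.record(actual_tokens)
    return text
```

With a budget of 100 tokens, a first call estimated at 60 tokens goes through, and a second call of the same size is blocked because it would exceed the limit.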