Artificial Intelligence

Category: Amazon Machine Learning

Fine-tune and deploy Meta Llama 3.2 Vision for generative AI-powered web automation using AWS DLCs, Amazon EKS, and Amazon Bedrock

In this post, we present a complete solution for fine-tuning and deploying the Llama-3.2-11B-Vision-Instruct model for web automation tasks. We demonstrate how to build a secure, scalable, and efficient infrastructure using AWS Deep Learning Containers (DLCs) on Amazon Elastic Kubernetes Service (Amazon EKS).

How Nippon India Mutual Fund improved the accuracy of AI assistant responses using advanced RAG methods on Amazon Bedrock

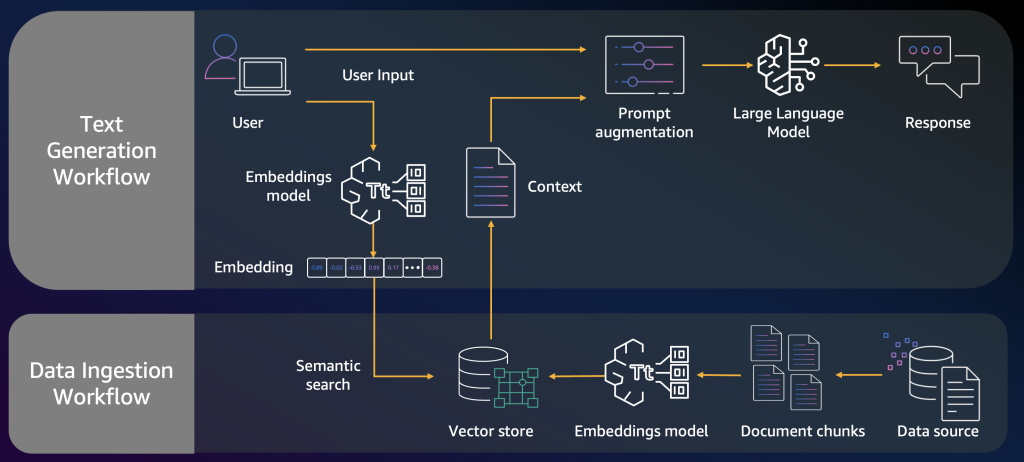

In this post, we examine a solution adopted by Nippon Life India Asset Management Limited that improves response accuracy over a regular (naive) RAG approach by rewriting user queries and then aggregating and reranking the responses. The proposed solution uses enhanced RAG methods such as reranking to improve overall accuracy.

Build a drug discovery research assistant using Strands Agents and Amazon Bedrock

In this post, we demonstrate how to create a powerful research assistant for drug discovery using Strands Agents and Amazon Bedrock. This AI assistant can search multiple scientific databases simultaneously using the Model Context Protocol (MCP), synthesize its findings, and generate comprehensive reports on drug targets, disease mechanisms, and therapeutic areas.

Amazon Nova Act SDK (preview): Path to production for browser automation agents

In this post, we’ll walk through what makes Nova Act SDK unique, how it works, and how teams across industries are already using it to automate browser-based workflows at scale.

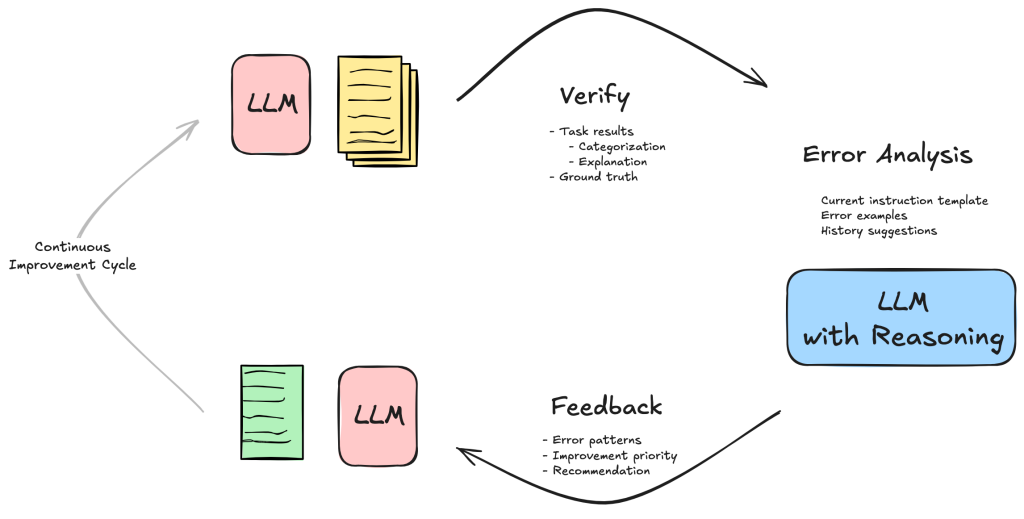

Optimizing enterprise AI assistants: How Crypto.com uses LLM reasoning and feedback for enhanced efficiency

In this post, we explore how Crypto.com used user and system feedback to continuously improve and optimize our instruction prompts. This feedback-driven approach has enabled us to create more effective prompts that adapt to various subsystems while maintaining high performance across different use cases.

Build an intelligent eDiscovery solution using Amazon Bedrock Agents

In this post, we demonstrate how to build an intelligent eDiscovery solution using Amazon Bedrock Agents for real-time document analysis. We show how to deploy specialized agents for document classification, contract analysis, email review, and legal document processing, all working together through a multi-agent architecture. We walk through the implementation details, deployment steps, and best practices to create an extensible foundation that organizations can adapt to their specific eDiscovery requirements.

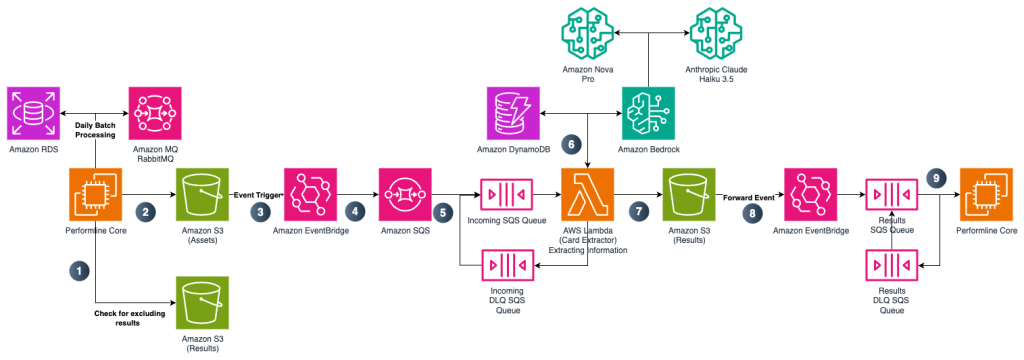

How PerformLine uses prompt engineering on Amazon Bedrock to detect compliance violations

PerformLine operates within the marketing compliance industry, a specialized subset of the broader compliance software market, which includes compliance solutions such as anti-money laundering (AML) and know your customer (KYC), among others. In this post, PerformLine and AWS explore how PerformLine used Amazon Bedrock to accelerate compliance processes, generate actionable insights, and provide contextual data, delivering the speed and accuracy essential for large-scale oversight.

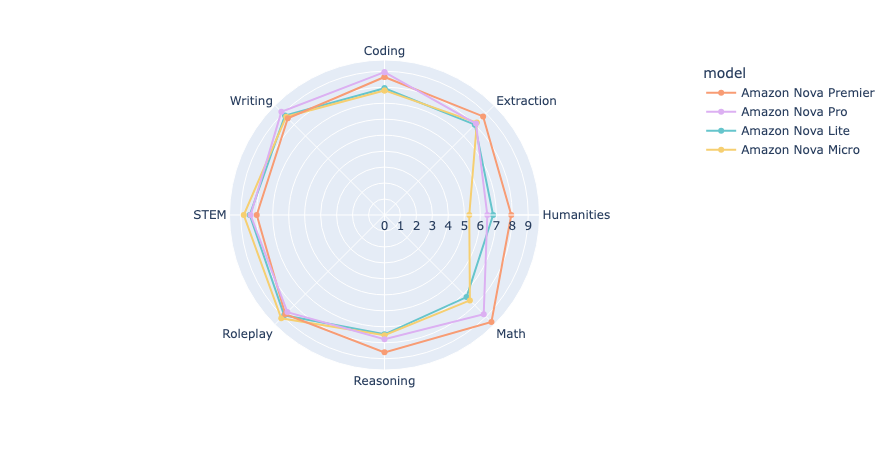

Benchmarking Amazon Nova: A comprehensive analysis through MT-Bench and Arena-Hard-Auto

The repositories for MT-Bench and Arena-Hard were originally developed using OpenAI’s GPT API, primarily employing GPT-4 as the judge. Our team has expanded their functionality by integrating them with the Amazon Bedrock API, which makes it possible to use Anthropic’s Claude Sonnet on Amazon Bedrock as the judge. In this post, we use both MT-Bench and Arena-Hard to benchmark Amazon Nova models by comparing them to other leading LLMs available through Amazon Bedrock.

Customize Amazon Nova in Amazon SageMaker AI using Direct Preference Optimization

At the AWS Summit in New York City, we introduced a comprehensive suite of model customization capabilities for Amazon Nova foundation models. These capabilities are available as ready-to-use recipes on Amazon SageMaker AI, and you can use them to adapt Nova Micro, Nova Lite, and Nova Pro across the model training lifecycle, including pre-training, supervised fine-tuning, and alignment. In this post, we present a streamlined approach to customize Nova Micro in SageMaker training jobs.

Multi-tenant RAG implementation with Amazon Bedrock and Amazon OpenSearch Service for SaaS using JWT

In this post, we introduce a solution that uses OpenSearch Service as a vector data store in multi-tenant RAG. The solution combines JSON Web Token (JWT) and fine-grained access control (FGAC) to implement strict tenant data access isolation and routing, which necessitates the use of OpenSearch Service.