AWS for Industries

Part 5: BBVA’s unified console: Key to successful global platform deployment

This is the fifth post of a six-part series detailing how BBVA migrated their Analytics, Data, AI (ADA) platform to AWS. In this post, we share how BBVA leveraged AWS services to build all the necessary operations capabilities to support a global data platform. We will also show how BBVA manages to combine building the basic requirements with implementing new ideas and technologies which have allowed them to innovate and improve the platform users’ experience.

Read the entire series:

- Part 1: BBVA: Building a multi-region, multi country global Data Platform at scale

- Part 2: How BBVA processes a global data lake using AWS Glue and Amazon EMR

- Part 3: How BBVA built a global analytics and machine learning platform on AWS

- Part 4: Building a Multi-Layer Security Strategy for BBVA’s Global Data Platform on AWS

- Part 5: The secret of BBVA’s success: a global deployment with a unified console offering a seamless experience

- Part 6: Effective sunset of the legacy data platform in BBVA: the migration methodology

Challenges

As BBVA expanded its data platform across multiple regions and countries, it encountered several interconnected challenges that needed to be addressed to ensure the platform’s success.

The first major challenge was balancing user experience with control mechanisms. With thousands of users needing access to data and analytics capabilities, BBVA had to provide seamless access while maintaining strict security and governance standards.

Deployment complexity presented another significant challenge. Operating across multiple regions and AWS accounts required sophisticated coordination of deployments and updates, while maintaining consistency in configurations and security policies across all environments.

The third critical challenge was achieving comprehensive operational visibility. With thousands of daily processes running across different regions, BBVA needed to effectively monitor platform operations while ensuring regulatory compliance and optimizing resource utilization.

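BBVA addressed these challenges through three strategic initiatives: a set of data platform core services, an automated global deployment model, and an integrated monitoring and observability layer.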

Data Platform Core Services

BBVA focuses on empowering users to work directly with AWS Services when feasible. For complex processes that require additional governance or custom workflows, BBVA created Core Services. These services simplify access to AWS capabilities through user-friendly APIs while maintaining security and compliance requirements. This approach allows users to leverage advanced AWS features without needing deep cloud expertise, while ensuring corporate standards and controls are automatically enforced.

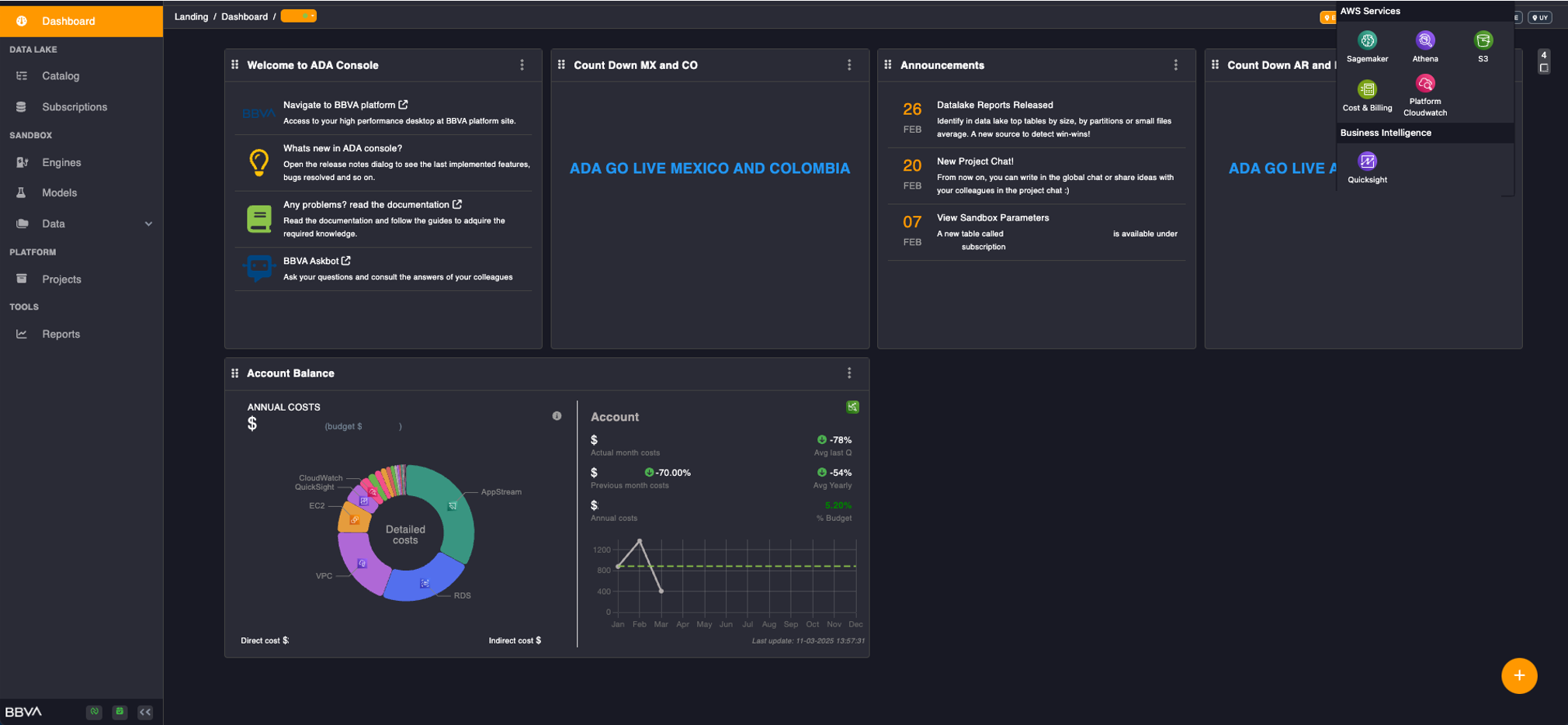

Console

The ADA Platform Console serves as the central entry point to the platform ecosystem, seamlessly connecting API-based services with direct AWS capabilities. It provides role-based access controls for both AWS and custom ADA services.

Figure 1 – Main view of the ADA Platform Console for a Project Owner

Projects

This service manages project creation and cataloging through three main steps:

- Collecting project information (e.g. business unit, project owner) and metadata.

- Creating user access resources and configuring user profiles.

- Orchestrating calls between platform services and triggering resource creation.

Once created, project users can access the platform with different user profiles, each granting access to specific features based on their role.

Data subscriptions

BBVA, as a global financial institution serving millions of customers, requires enterprise-grade data security and governance. The Data Subscriptions Service implements these requirements while facilitating controlled data access within the ADA platform.

This service gives ADA platform users access to the data they need while allowing BBVA to apply its governance mechanisms throughout the process.

The standard subscription request flow consists of the following steps:

1. A user from a project searches the platform’s catalog for the data required to cover the project’s needs. For more information about the catalog, see Part 2 of this series.

2. Once the user has found the data they need, the project owner creates a subscription request.

3. Depending on the security level of the data, there are two scenarios:

a. If the data is classified as non-confidential or unrestricted, the request is automatically approved and access granted.

b. If the data is classified as confidential or restricted, then an authorization workflow is triggered, and the data owner and data security teams are notified to handle that request.

4. Once the request is approved, the project will have access to the dataset in their Sandbox environment. If the request is denied, the project owner receives a notification explaining the rejection reasons.
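The routing decision in step 3 can be sketched in a few lines of Python. This is a minimal illustration, not BBVA's implementation: the class names, the `route_request` function and the team identifiers are hypothetical, and only the four classification levels come from the flow above.

```python
from dataclasses import dataclass
from enum import Enum


class Classification(Enum):
    UNRESTRICTED = "unrestricted"
    NON_CONFIDENTIAL = "non-confidential"
    CONFIDENTIAL = "confidential"
    RESTRICTED = "restricted"


class Status(Enum):
    APPROVED = "approved"
    PENDING_AUTHORIZATION = "pending_authorization"


# Classifications that are approved without human review (step 3a).
AUTO_APPROVED = {Classification.UNRESTRICTED, Classification.NON_CONFIDENTIAL}


@dataclass
class SubscriptionRequest:
    project_id: str
    dataset_id: str
    classification: Classification


@dataclass
class Decision:
    status: Status
    notify: tuple  # hypothetical identifiers of the teams that must review


def route_request(request: SubscriptionRequest) -> Decision:
    """Approve automatically or start the authorization workflow (step 3b)."""
    if request.classification in AUTO_APPROVED:
        return Decision(Status.APPROVED, ())
    return Decision(Status.PENDING_AUTHORIZATION, ("data_owner", "data_security"))
```

Keeping the auto-approved classifications in a single set means a policy change touches one line rather than every caller.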

Jobs Services

ADA platform implements comprehensive control and governance mechanisms to manage its large-scale analytical processes. Central to this management is the concept of a Job – a distributed data processing unit within the platform. Key attributes of a Job include:

- Size: Determines the scale of computational resources allocated.

- Runtime: Specifies the execution framework, often leveraging BBVA’s proprietary Apache Spark-based tools for enhanced efficiency.

- Config: Contains critical metadata such as I/O locations and runtime parameters, essential for both coded and no-code operational environments.

To orchestrate these jobs effectively, the platform utilizes two core services: Job Manager Service and the Job Metadata Service. These services form the control plane for all Job operations within the ADA ecosystem, ensuring robust governance and efficient resource utilization.
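As an illustration of the three Job attributes, the following sketch models a Job as a small Python data class. The field names mirror the attributes listed above. The size values, the `input` and `output` config keys and the `validate` helper are assumptions made for the example, not the platform's actual schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class JobSize(Enum):
    # Hypothetical size tiers; the real platform may define different ones.
    SMALL = "S"
    MEDIUM = "M"
    LARGE = "L"


@dataclass(frozen=True)
class Job:
    name: str
    size: JobSize          # scale of the compute resources to allocate
    runtime: str           # execution framework, for example a Spark-based tool
    config: dict = field(default_factory=dict)  # I/O locations and parameters

    def validate(self) -> list:
        """Return a list of problems; an empty list means the Job is usable."""
        problems = []
        for key in ("input", "output"):
            if not self.config.get(key):
                problems.append(f"config is missing '{key}' location")
        return problems
```

Validating the config before a Job is registered keeps incomplete definitions out of the control plane, where they would otherwise only fail at execution time.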

Jobs Manager Service

This service handles the creation, execution status visualization, modification, and deletion of jobs. It offers users dedicated screens in the ADA console for easier management.

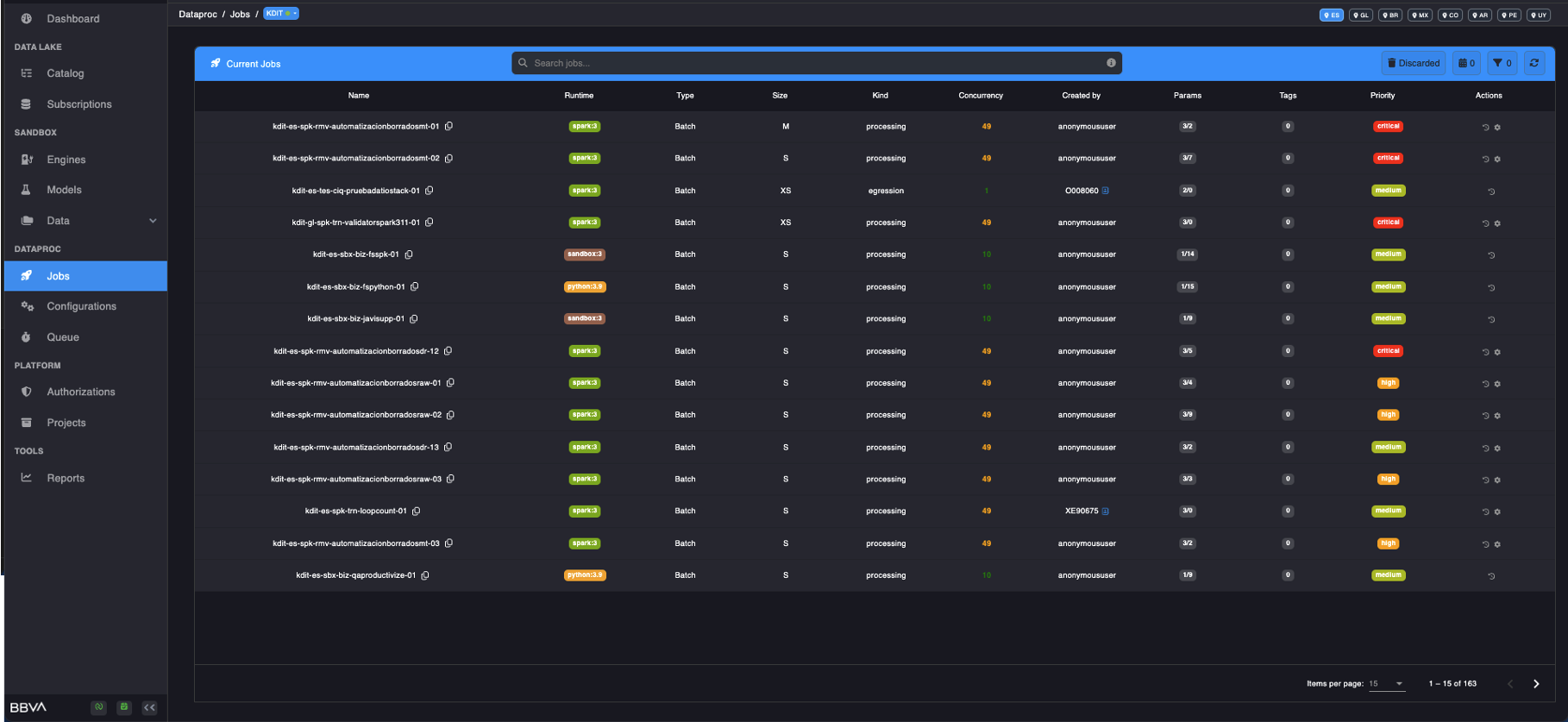

Figure 2 – Console View for the Jobs of the platform

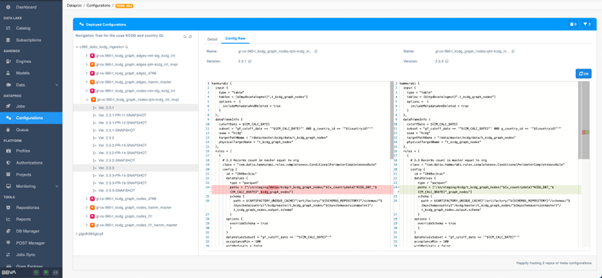

Jobs Config Service

With a daily volume exceeding 100,000 processes, efficient configuration file management and robust search capabilities are critical for maintaining operational integrity and performance.

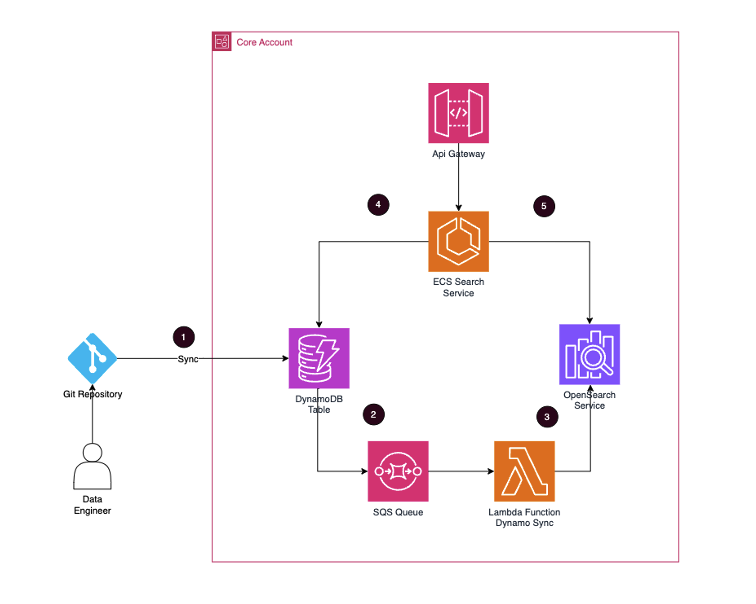

Figure 3 – Config Service AWS Architecture

The architecture works as follows:

- Once a user creates, updates, or deletes a job through the Jobs services, the new information is synchronized to Amazon DynamoDB.

- Using Amazon DynamoDB Streams, the change is sent as an event to an Amazon Simple Queue Service queue.

- The queue triggers an AWS Lambda function, which syncs the job’s metadata to the corresponding Amazon OpenSearch Service index.

- The end user can search through a tree view on the main fields of the job, such as name and entity.

- A free text search capability is also available through the Amazon OpenSearch Service database.
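The synchronization step can be sketched as a Lambda handler that turns each queue message into an index or delete action. This is a minimal sketch under several assumptions: each SQS message body is assumed to carry one DynamoDB Streams record as JSON, the table key is assumed to be named `job_id`, and the returned action dictionaries are a hypothetical intermediate format rather than an OpenSearch client call.

```python
import json


def from_dynamodb(value: dict):
    """Convert one DynamoDB-typed attribute (e.g. {"S": "x"}) to plain Python."""
    (type_tag, raw), = value.items()
    if type_tag == "S":
        return raw
    if type_tag == "N":
        return float(raw) if "." in raw else int(raw)
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_dynamodb(v) for k, v in raw.items()}
    if type_tag == "L":
        return [from_dynamodb(v) for v in raw]
    raise ValueError(f"unsupported DynamoDB type: {type_tag}")


def to_index_action(stream_record: dict, index: str = "jobs") -> dict:
    """Map one stream record to an action for the search index."""
    job_id = from_dynamodb(stream_record["dynamodb"]["Keys"]["job_id"])
    if stream_record["eventName"] == "REMOVE":
        return {"op": "delete", "index": index, "id": job_id}
    # INSERT and MODIFY both carry the full new item, so both become an upsert.
    image = stream_record["dynamodb"]["NewImage"]
    document = {k: from_dynamodb(v) for k, v in image.items()}
    return {"op": "index", "index": index, "id": job_id, "document": document}


def handler(event: dict, context=None) -> list:
    """Lambda entry point for an SQS trigger: one action per queue message."""
    return [to_index_action(json.loads(msg["body"])) for msg in event["Records"]]
```

Using the job identifier as the document ID makes the sync idempotent, so a message delivered twice by the queue overwrites the same document instead of creating a duplicate.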

Figure 4 – Example of how a configuration would be searched and compared in the platform’s console

Repositories Service: Standardizing Development

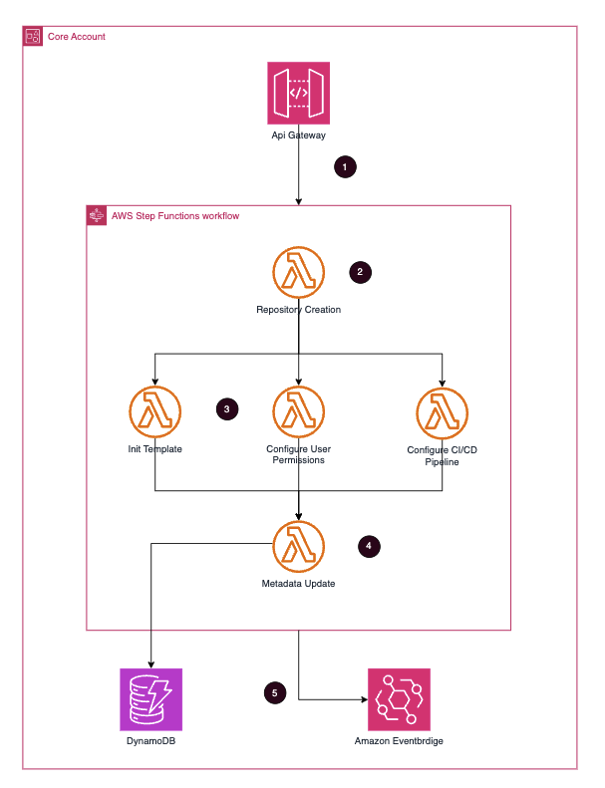

Through a simple point-and-click interface, users can quickly set up complete development environments. The service automates repository creation, configures access permissions, and establishes CI/CD pipelines using Amazon API Gateway, AWS Step Functions, and Lambda functions.

Figure 5 – Repositories Service architecture for a repository creation request

The service works as follows:

1. A user requests the creation of a repository in the ADA console. Amazon API Gateway receives this request and triggers an asynchronous AWS Step Functions execution that handles the workflow through various Lambda functions.

2. The system creates an empty repository.

3. Then, the system runs the following steps in parallel:

a. It initializes the repository with a template and configures access permissions for the project users.

b. It sets up a CI/CD pipeline with automated testing and deployment.

4. All metadata is stored in an Amazon DynamoDB table. From this point on, the repository is accessible to the user.

5. Finally, a functional event is sent into the platform’s Amazon EventBridge, enabling automated workflows across the platform. For example, this triggers notifications to team members, updates project dashboards, and initiates security scanning processes. This event-driven approach ensures seamless integration with other platform services and maintains a consistent system state.
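The five steps above map naturally onto an Amazon States Language definition with a Parallel state for step 3. The sketch below builds such a definition as a Python dictionary. The state names and the `lambda_arns` keys are hypothetical, and the real workflow likely includes error handling and retries that are omitted here.

```python
import json


def repository_workflow(lambda_arns: dict) -> dict:
    """Build a state machine definition for the repository creation flow."""

    def task(function: str, next_state: str = None) -> dict:
        # A Task state that invokes one Lambda function by ARN.
        state = {"Type": "Task", "Resource": lambda_arns[function]}
        if next_state:
            state["Next"] = next_state
        else:
            state["End"] = True
        return state

    return {
        "Comment": "Repository creation (illustrative sketch)",
        "StartAt": "CreateEmptyRepository",
        "States": {
            "CreateEmptyRepository": task("create_repo", "ConfigureRepository"),
            "ConfigureRepository": {
                "Type": "Parallel",
                "Branches": [
                    {   # Step 3a: template and access permissions
                        "StartAt": "InitializeFromTemplate",
                        "States": {
                            "InitializeFromTemplate": task("init_template", "GrantProjectAccess"),
                            "GrantProjectAccess": task("grant_access"),
                        },
                    },
                    {   # Step 3b: CI/CD pipeline
                        "StartAt": "CreatePipeline",
                        "States": {"CreatePipeline": task("create_pipeline")},
                    },
                ],
                "Next": "StoreMetadata",
            },
            "StoreMetadata": task("store_metadata", "PublishEvent"),
            "PublishEvent": task("publish_event"),
        },
    }


def to_json(definition: dict) -> str:
    """Serialize the definition in the form Step Functions expects."""
    return json.dumps(definition, indent=2)
```

The Parallel state only continues to `StoreMetadata` once both branches finish, which matches the requirement that the repository becomes visible to the user only after it is fully configured.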

Deploying a global platform

Global deployment with AWS CloudFormation

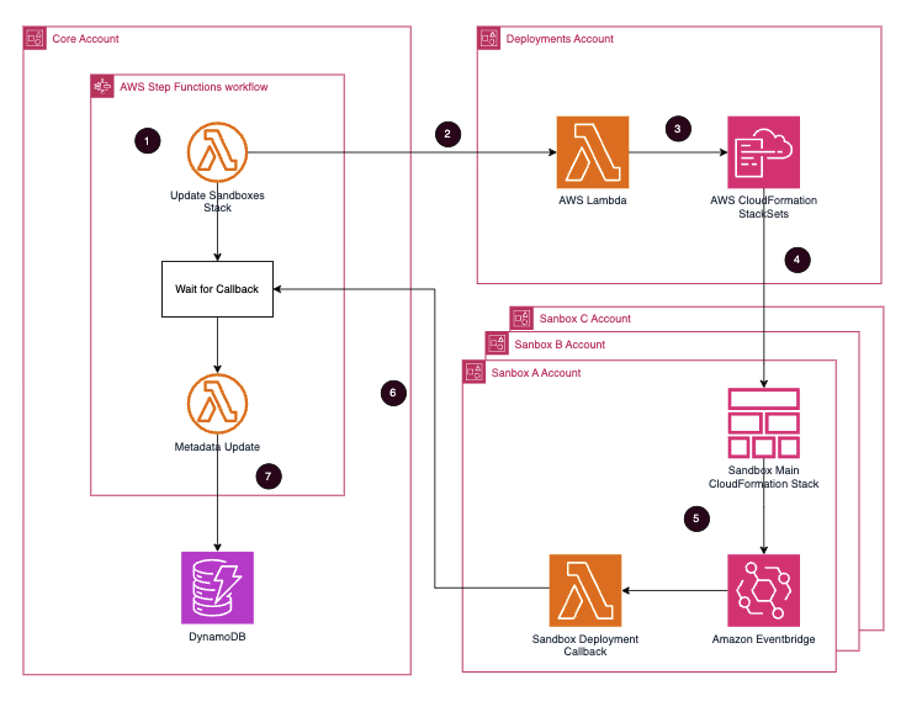

ADA’s deployment leverages AWS CloudFormation StackSets to automate infrastructure provisioning across multiple AWS accounts and Regions. The deployment spans Europe (Spain) and US East (N. Virginia) as the main AWS Regions, employing a multi-AZ architecture for resilience. These Regions were strategically chosen to reduce data access latency and comply with local regulations.

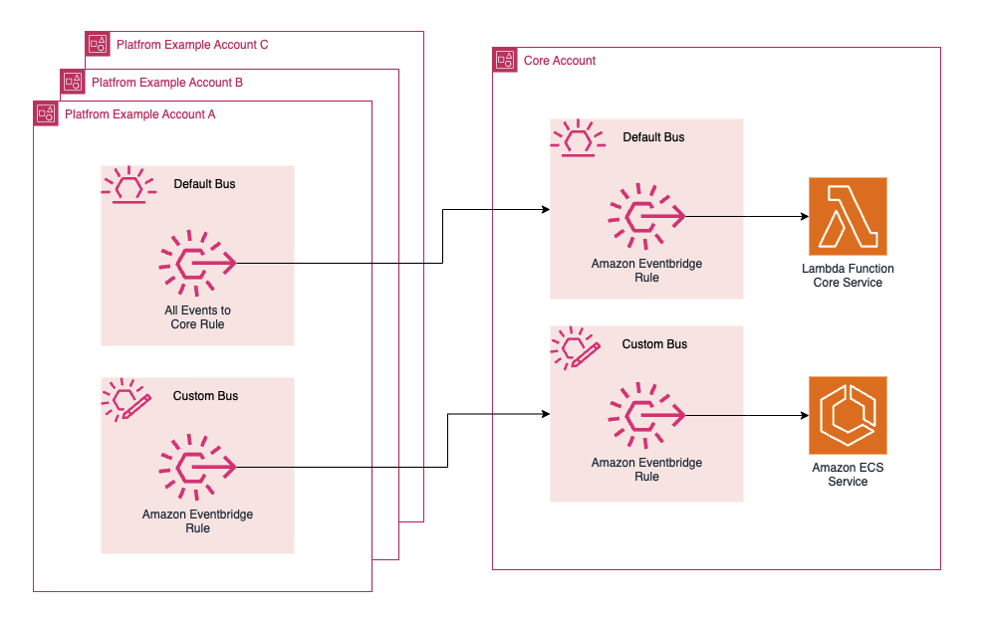

To support this global infrastructure efficiently, BBVA implemented an automated sandbox environment strategy that enables teams to rapidly prototype and test new features. This approach uses an event-driven architecture operating through three tiers:

- Core Account: Houses the central orchestration components triggered by the Projects service described in the previous section, including the AWS Step Functions workflow that manages the sandbox update process.

- Deploy Account: Contains the CloudFormation StackSets that define the sandbox infrastructure template.

- Sandbox (SBX) Accounts: Each of these accounts includes the main CloudFormation stack, EventBridge rules for monitoring, and Lambda functions for deployment management.

Figure 6 – Platform deployment architecture

Steps to Global Deployment

- The automated deployment starts when the Core AWS Account initiates an update and executes the Sandbox Update workflow.

- The workflow makes a cross-account call to forward these changes to the Deploy Account.

- A Lambda function in the Deploy Account validates these changes and translates them into an AWS CloudFormation StackSet operation.

- The system triggers and executes the StackSet operation across Sandbox Accounts.

- EventBridge rules actively monitor the stack status for different events throughout the deployment.

- A callback Lambda function within the Sandbox captures these events and forwards them to the Core Account, where the process originated.

- The system updates and stores all this information in a DynamoDB table to track the deployment’s status and publishes it to the platform’s event bus, enabling other services to know about the Sandbox creation.
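The callback step can be illustrated with a small function that converts a stack status event into the record stored for tracking. This sketch assumes the EventBridge event carries the stack identifier under `detail["stack-id"]` and the status under `detail["status-details"]["status"]`. Both should be checked against the CloudFormation event reference. The record field names and the outcome categories are hypothetical.

```python
def deployment_outcome(stack_status: str) -> str:
    """Collapse a CloudFormation stack status into a coarse outcome."""
    # Any rollback means the requested change did not go through.
    if "ROLLBACK" in stack_status or stack_status.endswith("_FAILED"):
        return "FAILED"
    if stack_status.endswith("_IN_PROGRESS"):
        return "IN_PROGRESS"
    if stack_status.endswith("_COMPLETE"):
        return "SUCCEEDED"
    return "UNKNOWN"


def to_tracking_record(event: dict) -> dict:
    """Build the item a callback function could forward to the Core Account."""
    detail = event["detail"]
    status = detail["status-details"]["status"]
    return {
        "sandbox_account": event["account"],
        "region": event["region"],
        "stack_id": detail["stack-id"],
        "stack_status": status,
        "outcome": deployment_outcome(status),
    }
```

Checking for a rollback before checking the suffix matters, because a status such as `UPDATE_ROLLBACK_COMPLETE` ends in `_COMPLETE` but represents a failed deployment.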

Integration, Monitoring, and Observability focused on data/processing

Another core element of BBVA’s ADA platform success is its robust integration, monitoring, and observability strategy. Built around a synchronous API layer and an event-driven integration layer, the platform enables both real-time and asynchronous operations across services.

Additionally, BBVA has implemented a powerful observability layer using AWS tools like Amazon CloudWatch, enriched with custom metrics and dashboards. All events are funneled through a central event bus, enabling real-time insights, automated alerts, and full traceability—ensuring efficient operations and strong governance across the platform.

Figure 7 – Platform Integration Architecture

Event Alerts and Threshold Monitoring

Real-time alerting ensures that operations teams can respond immediately to critical issues.

- Threshold-Based Alerts enable proactive monitoring by triggering notifications when operational metrics breach defined limits, including resource utilization thresholds (CPU, memory, storage) and performance benchmarks (job duration, processing speed, response times).

- Event-Driven Automation leverages AWS Lambda to monitor Amazon EMR clusters and execute recovery protocols. When cluster steps fail, Lambda automatically terminates the problematic cluster and launches a fresh instance, minimizing processing disruptions.
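The recovery behavior described for Amazon EMR can be sketched as a function that reacts to a failed step. This is a minimal sketch, not BBVA's recovery protocol: the `cluster_template` argument is a hypothetical dictionary of `run_job_flow` parameters, and the event is assumed to be an "EMR Step Status Change" event whose detail includes `clusterId` and `state`. The EMR client is passed in so the logic can be exercised without AWS access.

```python
def recover_failed_cluster(event: dict, emr_client, cluster_template: dict):
    """Replace a cluster after a failed step; return the new cluster ID or None."""
    detail = event.get("detail", {})
    is_step_event = event.get("detail-type") == "EMR Step Status Change"
    if not is_step_event or detail.get("state") != "FAILED":
        return None  # nothing to recover

    # Terminate the cluster that ran the failed step...
    emr_client.terminate_job_flows(JobFlowIds=[detail["clusterId"]])
    # ...and launch a replacement from the stored template.
    response = emr_client.run_job_flow(**cluster_template)
    return response["JobFlowId"]
```

In a Lambda function, `emr_client` would typically be `boto3.client("emr")`. A production version would also need a guard against replacement loops when the same step keeps failing on every new cluster.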

Centralized Logs and Storage for Regulatory Compliance

ADA’s centralized log management system not only ensures regulatory compliance but also reduces operational costs and minimizes business risks. This approach saves hundreds of hours in audit preparation and provides immediate access to compliance data.

- Centralized Log Aggregation: All logs generated by ADA’s services are routed to Amazon CloudWatch. This allows the platform to query, analyze, and visualize logs from a single account. Transformation job logs are tagged with metadata (like region=zaz or job_id=12345), enabling faster troubleshooting.

- Regulatory Data Storage: Logs relevant to regulatory compliance are archived in Amazon Simple Storage Service (Amazon S3) buckets with lifecycle policies that align with BBVA’s retention standards, ensuring compliance with mandates for sensitive data.

- Encryption and Tagging: Archived logs are encrypted with AWS Key Management Service keys and tagged with metadata to differentiate them from operational logs.
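One common way to attach metadata such as a region and a job identifier to every log line is to emit structured JSON. The sketch below uses only the Python standard library. The logger name, the field names and the `job_logger` helper are assumptions for illustration, not the platform's logging library.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object with the job metadata attached."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
            # These attributes are injected by the LoggerAdapter below.
            "region": getattr(record, "region", None),
            "job_id": getattr(record, "job_id", None),
        }
        return json.dumps(payload)


def job_logger(region: str, job_id: str, stream=None) -> logging.LoggerAdapter:
    """Return a logger that tags every line with the given region and job ID."""
    handler = logging.StreamHandler(stream)  # defaults to stderr
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(f"ada.jobs.{job_id}")
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logging.LoggerAdapter(logger, {"region": region, "job_id": job_id})
```

A job would call `job_logger(region, job_id)` once at startup and then log as usual. Because each line is a JSON object, the metadata fields can be filtered on directly when querying the centralized logs.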

Native and Custom Metrics for the Platform

- Native Metrics: AWS services like EMR, Glue, and Lambda generate standard metrics that provide insights into resource utilization, execution times, and error rates. As an example, see Monitoring Amazon EMR metrics with CloudWatch (https://docs.aws.amazon.com/emr/latest/ManagementGuide/UsingEMR_ViewingMetrics.html).

- Custom Metrics: Metrics are generated for specific workflows and business-critical operations. These metrics are pushed to CloudWatch via the Amazon CloudWatch Agent or custom Lambda functions. Examples include Job status or Data fragmentation.
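A custom job status metric could be published from a Lambda function with the CloudWatch `PutMetricData` API. The sketch below only builds the request payload, so it runs without AWS credentials. The `ADA/Jobs` namespace, the `JobFailed` metric name and the dimension names are hypothetical choices, not the names BBVA uses.

```python
def job_status_metric(job_name: str, region: str, succeeded: bool,
                      namespace: str = "ADA/Jobs") -> dict:
    """Build keyword arguments for CloudWatch put_metric_data."""
    return {
        "Namespace": namespace,
        "MetricData": [
            {
                "MetricName": "JobFailed",
                "Dimensions": [
                    {"Name": "JobName", "Value": job_name},
                    {"Name": "Region", "Value": region},
                ],
                # 1 for a failure, 0 for a success, so a Sum over a period
                # gives the number of failed runs.
                "Value": 0.0 if succeeded else 1.0,
                "Unit": "Count",
            }
        ],
    }
```

With boto3 available, the payload would be sent with `boto3.client("cloudwatch").put_metric_data(**job_status_metric("daily-load", "eu-south-2", succeeded=False))`. Emitting a zero on success, rather than nothing, lets an alarm distinguish a healthy job from one that has stopped reporting.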

Dashboards for Real-Time Visibility

Dynamic dashboards drive ADA’s monitoring strategy, delivering real-time Key Performance Indicator (KPI) insights across regions and services.

Figure 8 – Dashboard to monitor jobs executions in the platform

The monitoring system delivers operational advantages that enhance the platform’s efficiency and reliability. Real-time alerting capabilities ensure immediate notification of critical issues, allowing teams to respond promptly to potential problems before they impact service delivery. Through comprehensive historical data analysis, teams can identify long-term trends and patterns, enabling proactive capacity planning and performance optimization.

These enhanced dashboards empower operations teams to identify performance bottlenecks and anomalies immediately, enabling faster decision-making and more effective resource optimization. The new monitoring architecture provides deeper visibility into Spark processes, helping debug performance issues and optimize specific workloads as the platform scales while maintaining compliance with security policies and requirements.

Platform Usage Data for analysis

BBVA publishes operational metrics as a dataset in the data lake, making it available to all internal projects for collaboration and analysis. This transparency allows users to track key metrics such as compute resource usage, storage consumption, and service performance patterns over time, enabling data-driven decisions about resource allocation and capacity planning.

Conclusion

BBVA has invested in a large global platform that provides thousands of users with the tools to model, ingest, and leverage data from across the company. Whether you are starting your cloud journey or looking to scale your existing platform, implementing these architectural principles will help you build a robust and maintainable AWS environment.

In this vision, a solid architecture is essential. It’s crucial to lay the groundwork for seamless in-house development and AWS service adoption. In this regard, we highlight three key ideas:

- Multiple accounts – Single model: Best practices dictate that a large project should use multiple accounts, but it is also necessary to establish mechanisms that enable them to work together and simplify maintenance. Setting up an account with cross-account Amazon CloudWatch, connectivity between accounts via Amazon EventBridge, and unified use of AWS CloudTrail are steps in this direction.

- Unified platform vision: Using AWS native services consistently reduces integration complexity and accelerates the adoption of new capabilities. Combining this with the creation of various services under a unified console and a homogeneous microservices architecture to abstract certain functionalities allows you to offer new services to users and simplify the use of technology. Services that abstract data access profiling and process management are prime examples of this vision.

- Monitoring and transparency: Publishing metrics and dashboards, and generating platform usage data that is accessible to users, builds trust, improves decision-making, and drives continuous improvement.