Use Agentic AI to Simplify Amazon WorkSpaces Support with Amazon Bedrock Agents

As companies look to provide around-the-clock support, helpdesks must scale accordingly. Often, support demands exceed available resources. Earlier posts describe how to create a self-service portal that lets Amazon WorkSpaces users manage their own environments. Self-service portals work well for users who have clear requests. However, they fall short when users do not know what they need or must complete multi-step processes. Similarly, administrators frequently move between services such as WorkSpaces and Amazon CloudWatch to troubleshoot issues. To enhance the support experience, you can integrate Amazon Bedrock Agents into your WorkSpaces environment.

Unlike a traditional self-service portal, AI agents can ask clarifying questions to reduce ambiguity and gain a better understanding of a user’s intent. Agents pull information from several services and summarize the findings. This keeps the guidance relevant to your environment at that moment and eliminates the need to access each service individually.

This blog post demonstrates an architectural approach for building an AI agent for your support desk. You can also use the sample code referenced at the end of the post to build a proof of concept in your own environment.

Solution overview

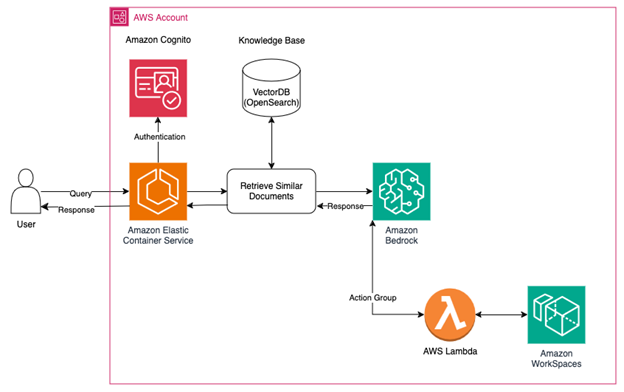

The architecture gives users a web portal for authentication and interaction with the agent. When a user submits a question, contextually relevant information is retrieved from a vector database and sent with the question to Amazon Bedrock for evaluation by a large language model (LLM). During the evaluation, the agent invokes AWS Lambda functions that interact with the WorkSpaces environment. Each function returns its results to Bedrock, which generates a response for the user.

In the example architecture, Amazon Cognito handles user authentication. Amazon Elastic Container Service (ECS) hosts a web application that integrates with Cognito. The application verifies user credentials and serves as the main interface to the LLM. Besides authenticating users, it also provides context to the model: requestor information, such as the username and group membership, is sent along with each request. This allows the agent to look up active sessions without asking for additional information.
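As a rough illustration of how requestor information might reach the agent, the sketch below copies the username and groups from already-verified Cognito token claims into the session state of an `invoke_agent` call. The helper names, the attribute keys (`username`, `groups`), and the comma-joined group format are assumptions for illustration, not taken from the sample code. The `client` argument is expected to behave like a boto3 `bedrock-agent-runtime` client.

```python
import uuid


def build_session_state(claims):
    """Map verified Cognito claims to Bedrock Agents session state (hypothetical keys)."""
    attributes = {
        # Attribute values must be strings, so the group list is joined.
        "username": claims.get("cognito:username", ""),
        "groups": ",".join(claims.get("cognito:groups", [])),
    }
    return {
        # Kept for the whole session and included in action group Lambda events.
        "sessionAttributes": attributes,
        # Scoped to the current turn.
        "promptSessionAttributes": dict(attributes),
    }


def ask_agent(client, agent_id, agent_alias_id, question, claims, session_id=None):
    """Send one question to the agent and return the assembled text reply."""
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id or str(uuid.uuid4()),
        inputText=question,
        sessionState=build_session_state(claims),
    )
    # The reply is an event stream; text arrives in "chunk" events.
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)
```

In a deployment, `client` would typically be created with `boto3.client("bedrock-agent-runtime")`, and the same `session_id` would be reused across turns so the agent keeps the conversation context.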

A vector database stores institutional knowledge such as runbooks. By integrating your proprietary knowledge, you can use Retrieval Augmented Generation (RAG) to search your data and answer common user questions. RAG enhances the accuracy and relevance of AI-generated responses by grounding them in your specific information, leading to more contextually appropriate and reliable outputs. A good way to get started with RAG is Knowledge Bases for Amazon Bedrock.
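To make the retrieval step concrete, here is a minimal sketch that queries a knowledge base and folds the returned passages into a prompt. When a knowledge base is associated with a Bedrock agent, the agent performs this step itself, so the code only illustrates the idea in isolation. The function names and the prompt wording are assumptions. The `client` argument is expected to behave like a boto3 `bedrock-agent-runtime` client.

```python
def retrieve_context(client, knowledge_base_id, question, max_results=3):
    """Return the top matching passages for a question from a knowledge base."""
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": max_results}
        },
    )
    return [
        {"text": result["content"]["text"], "score": result.get("score")}
        for result in response.get("retrievalResults", [])
    ]


def build_prompt(question, passages):
    """Ground the question in retrieved passages (illustrative prompt wording)."""
    context = "\n\n".join(passage["text"] for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```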

When a user submits a query, the query and any contextually relevant information from the knowledge base are sent to the model for evaluation. The model determines whether it has enough information to fulfill the request and, if so, responds accordingly. Otherwise, it seeks clarity by asking a follow-up question or by invoking an action group. Action groups define the actions that the Bedrock agent can take on behalf of the user. When multiple actions are needed, Bedrock Agents break the user request down into smaller, manageable steps. For instance, if a user has trouble connecting to their desktop, the agent runs several troubleshooting steps according to internal guidelines. If those steps do not resolve the issue, the agent forwards its observations to the help desk, which can then skip the initial investigation. Action groups also provide a point-in-time snapshot of your WorkSpaces. Without this information, the model would be unaware of the WorkSpaces environment and could offer only general advice. With action groups, the model can tailor its guidance to the individual scenario or take actions on WorkSpaces.
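An action group backed by a Lambda function could look roughly like the sketch below, which assumes the action group is defined with function details rather than an OpenAPI schema. The function name `get_workspace_status`, the `username` parameter, and the injected `lookup` callable are hypothetical. In a real handler, `lookup` would call the WorkSpaces API or a cache.

```python
import json


def handle_action(event, lookup):
    """Route a Bedrock Agents action group event and build the reply envelope."""
    # Parameters arrive as a list of {"name", "type", "value"} entries.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    # Fall back to the username the web application placed in session attributes.
    username = params.get("username") or event.get("sessionAttributes", {}).get("username")
    function = event["function"]

    if function != "get_workspace_status":  # hypothetical function name
        body = f"Unknown function: {function}"
    elif not username:
        body = "No username was provided."
    else:
        workspaces = lookup(username)
        body = json.dumps(workspaces) if workspaces else f"No WorkSpaces found for {username}."

    return {
        "messageVersion": event.get("messageVersion", "1.0"),
        "response": {
            "actionGroup": event["actionGroup"],
            "function": function,
            "functionResponse": {"responseBody": {"TEXT": {"body": body}}},
        },
    }
```

A Lambda entry point would wrap this as `lambda_handler(event, context)` and pass in the real lookup. Keeping the lookup injectable makes the routing logic easy to test without AWS credentials.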

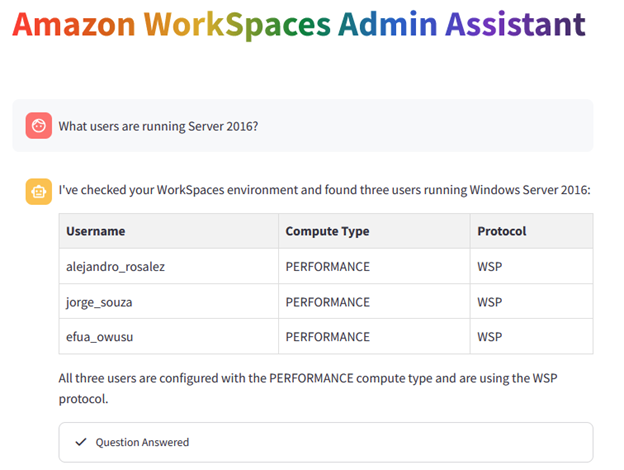

With an agent deployed, you can support users and administrators with common tasks. Users can log in and get immediate help, simplifying troubleshooting for things like registration codes or webcam redirection. Pairing this with internal documentation can further aid troubleshooting. Administrators can utilize the assistant to quickly access details regarding their environment or run administrative tasks. For example, administrators who are proactively looking to identify users to migrate to a newer operating system can ask the agent to get that information.

It is important to incorporate guardrails into a support automation solution so that users have a relevant and safe experience that aligns with your organization's internal policies. Amazon Bedrock Guardrails helps by validating LLM inputs and outputs so that the content is appropriate, the topics being discussed are relevant, and any personally identifiable information is blocked or masked.
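A guardrail can be associated with the agent itself, but as an illustration of the validation step, the sketch below screens a piece of text with the standalone `apply_guardrail` call. The helper name and its return shape are assumptions. The `client` argument is expected to behave like a boto3 `bedrock-runtime` client, and the guardrail ID and version would come from your own configuration.

```python
def screen_text(client, guardrail_id, guardrail_version, text, source="INPUT"):
    """Check text against a guardrail; return (intervened, text_to_use)."""
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,  # "INPUT" for user messages, "OUTPUT" for model replies
        content=[{"text": {"text": text}}],
    )
    intervened = response.get("action") == "GUARDRAIL_INTERVENED"
    outputs = response.get("outputs", [])
    # When the guardrail steps in, use its blocked or masked text instead.
    if intervened and outputs:
        return True, outputs[0]["text"]
    return intervened, text
```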

To prevent API throttling and improve performance, consider having the Lambda functions read from an Amazon DynamoDB table instead of querying the WorkSpaces APIs directly. DynamoDB can handle millions of requests per second, scales with demand, and removes the need to poll the WorkSpaces environment constantly. Remember to refresh the data in DynamoDB regularly so that it remains accurate.
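One way to combine the cache with a freshness check is sketched below: read the item, use it if it is recent enough, and otherwise refresh it from the source. The table layout (`username` key, `workspaces` and `refreshed_at` attributes), the five-minute window, and the `refresh` callable are all assumptions. The `table` argument is expected to behave like a boto3 DynamoDB `Table` resource.

```python
import time

MAX_AGE_SECONDS = 300  # assumed freshness window; tune for your environment


def get_cached_workspaces(table, username, refresh, now=None):
    """Return cached WorkSpaces data, refreshing it when missing or stale."""
    now = time.time() if now is None else now
    item = table.get_item(Key={"username": username}).get("Item")
    if item and now - float(item["refreshed_at"]) <= MAX_AGE_SECONDS:
        return item["workspaces"]

    # Cache miss or stale entry: query the source once and store the result.
    workspaces = refresh(username)
    table.put_item(
        Item={
            "username": username,
            "workspaces": workspaces,
            "refreshed_at": int(now),  # stored as an integer epoch timestamp
        }
    )
    return workspaces
```

A scheduled job that rewrites the table on a fixed interval is an alternative design. The read-through approach shown here only calls the WorkSpaces APIs for users who actually ask for help.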

Conclusion

This solution extends your current WorkSpaces support capabilities with an automated agent that assists users at any time. Users can describe their challenges to the agent in their own words. The agent manages this ambiguity, offering guidance and performing actions on the user’s behalf to resolve their issues.

For instructions on using agents within your WorkSpaces environment, refer to the sample code, which includes two CloudFormation templates. One template sets up a knowledge base and automatically loads public WorkSpaces documentation. The other sets up a Bedrock Agent for basic calls in your environment. Additionally, it includes a local front-end web interface. This solution can be further enhanced by integrating it into a full-stack template or reviewing the Front-End Web and Mobile blog for additional ideas.

Dave Jaskie brings 15 years of experience in the End User Computing space. Outside of work, Dave enjoys traveling and hiking with his wife and 4 kids.

Matt Aylward is a Solutions Architect at Amazon Web Services (AWS) who is dedicated to creating simple solutions to solve complex business challenges. Prior to joining AWS, Matt worked on automating infrastructure deployments, orchestrating disaster recovery testing, and managing virtual application delivery. In his free time, he enjoys spending time with his family, watching movies, and going hiking with his adventurous dog.