AWS Database Blog

Year One of Valkey: Open-Source Innovations and ElastiCache version 8.1 for Valkey

In April 2024, AWS announced support for Valkey, a community-driven fork of Redis born out of a shared belief that critical infrastructure software should be vendor neutral and open source. Since then, the Valkey community has grown to over 150 active contributors from more than 50 organizations, including Ericsson, Google Cloud, Aiven, Oracle, Percona, ByteDance, and Amazon Web Services, who have contributed over 1,000 commits. Together, we have delivered 230% higher throughput, 20% better memory efficiency, and new features that have reset the bar for community-led innovation.

In this post, we share how, just over a year in, we remain fully committed to the Valkey project, and we announce support for the latest version with Amazon ElastiCache version 8.1 for Valkey. Through real-world examples, we explore the benefits of the latest innovations. These include a new hash table with additional memory efficiencies, support for Bloom filters, observability enhancements, and new functionality such as conditional updates.

Built on open, forever on open

Valkey was founded on the principle that it should be open and collaborative. Valkey is stewarded by the Linux Foundation, with a vendor-neutral, transparent, and community-driven governance model. No single vendor controls Valkey. That stability—of governance and licensing—is critical for developers, startups, enterprises, and cloud providers who rely on it for everything from caching to real-time analytics. Valkey has never relicensed and never will.

Valkey is open not only in its license but also in its development: features, bug fixes, and design proposals are all discussed in public. This model is paying off. Just a year after the initial fork, the project is backed by more than 50 organizations, with more than 150 contributors delivering nearly 1,000 commits. Open development and the innovation it enables are also why the broader community has embraced Valkey so quickly. They are also why Valkey is becoming the default open source caching solution, with wide availability in Linux distributions.

Innovations that matter

A small sampling of the innovations from the last year includes:

- Reduced memory overhead for keys, which lowered memory usage by up to 20%.
- More than three times higher throughput, with up to 1 million requests per second (RPS) on a single node, through improved I/O threading.
- Native support for JSON objects, vector search, and Bloom filters.

These innovations are driving real impact. Memory efficiencies and increased throughput translate directly into over 20% lower infrastructure costs. They also improve application responsiveness for even the most demanding workloads. Bloom filters provide highly efficient and scalable membership testing for use cases ranging from frequency capping in digital advertising to fraud detection in FinTech.

The scale, quality, and speed of innovation that the Valkey community has produced is impressive, and yet this is only the beginning. With innovation no longer gated by a single vendor’s priorities, the project is evolving based on what the ecosystem needs and at a pace that only open collaboration can achieve. Amazon Web Services (AWS) proudly contributed many innovations to the Valkey project and the Amazon ElastiCache team is excited to bring those innovations to customers with the introduction of ElastiCache version 8.1 for Valkey.

Introducing Amazon ElastiCache version 8.1 for Valkey

ElastiCache version 8.1 for Valkey introduces a new hash table that provides a 20% improvement in memory efficiency, built-in support for Bloom filters, and observability enhancements for in-memory workloads. These improvements are especially impactful for customers running large-scale, memory-bound caches where every byte and millisecond counts.

To ground these innovations in something familiar, imagine a common caching scenario in which a high-traffic ecommerce platform uses ElastiCache to store frequently accessed data such as session tokens, product details, and ads. This cache helps reduce backend load, accelerate page loads, and provide a responsive user experience. Whether it’s reducing memory usage with the new hash table, using Bloom filters for space-efficient lookups, or gaining better insight into client behavior with COMMANDLOG, ElastiCache version 8.1 for Valkey delivers enhancements that bring tangible benefits to real-world, memory-sensitive caching workloads like this one.

A new hash table

Typical caching workloads are often memory-bound: the more data you can fit into memory, the better the hit rate and the fewer trips sent to the underlying database. But memory isn’t free. Every byte saved—whether through more efficient data structures, smarter eviction policies, or lower protocol overhead—translates directly into lower infrastructure cost or more room for growth without scaling out.

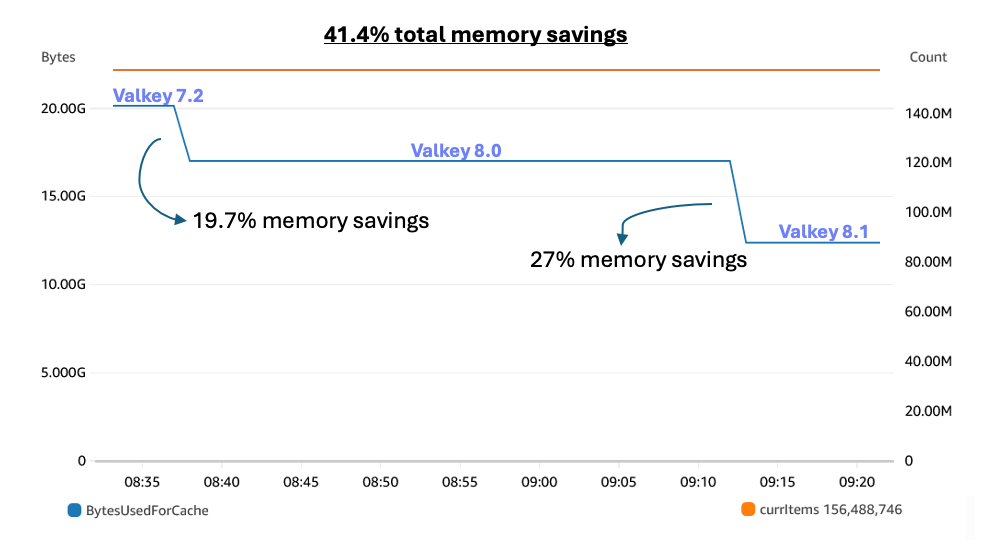

Valkey 8.1 introduces a new memory-efficient dictionary implementation that reduces the memory footprint by over 20% for common key-value patterns. This is a direct cost saving for customers operating large-scale, memory-bound caches. These savings stack on top of those already introduced in ElastiCache version 8.0 for Valkey. As a result, upgrading to Valkey 8.1 from ElastiCache version 7.2 for Valkey, or from any ElastiCache for Redis OSS version, could reduce costs by around 36% (1 − 0.8 × 0.8 = 0.36).

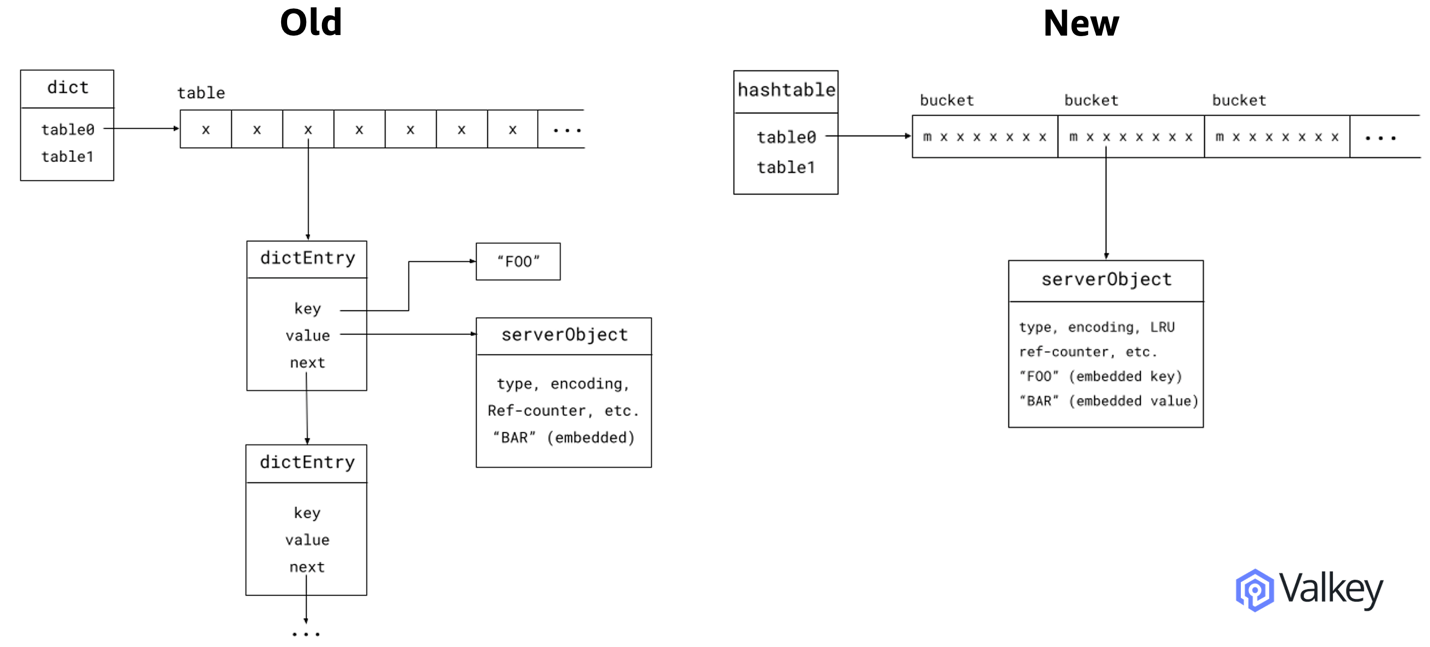

The preceding diagram shows the legacy hash table, which used two dictionaries with chained linked lists. A straightforward lookup required four memory reads, plus two more per hash collision. This design added latency and memory overhead due to pointer chasing, especially on 64-bit systems, where each pointer is 8 bytes.

To improve latency and reduce memory overhead, the new hash table uses a more efficient, cache-optimized design inspired by Swiss tables. Keys are now stored contiguously within 64-byte cache lines, which reduces pointer use and improves lookup performance by up to 10%. The new hash table powers not only the main key space but also the hash, set, and sorted set data types. It saves about 20–30 bytes per entry, improving memory efficiency by over 20% for common key-value patterns. This is a major cost benefit for memory-heavy workloads.
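To make the bucket idea concrete, here is a minimal Python sketch of a cache-line-style bucketed hash table. It is a simplified illustration, not Valkey's implementation, and it rests on these assumptions:

- Each bucket holds up to 7 entries (`SLOTS_PER_BUCKET`).
- Each entry has a one-byte fingerprint taken from its hash, and fingerprints are compared before any full key.
- A full bucket links to a child bucket.

The sketch does not model real memory layout, resizing, incremental rehashing, or deletion. The class and function names are hypothetical.

```python
import hashlib

# Assumption for illustration: 7 entry slots per 64-byte bucket on a 64-bit build.
SLOTS_PER_BUCKET = 7


def _hash64(key: str) -> int:
    """Stable 64-bit hash of a key (blake2b stands in for Valkey's own hash)."""
    return int.from_bytes(hashlib.blake2b(key.encode(), digest_size=8).digest(), "little")


class Bucket:
    """One bucket: fingerprints and entries kept together, like one cache line."""

    def __init__(self):
        self.fingerprints = []  # 1-byte secondary hashes, checked before key compares
        self.entries = []       # (key, value) pairs
        self.child = None       # overflow bucket, created only when this one is full


class BucketedHashTable:
    """Hypothetical, simplified table: no resizing and no deletion."""

    def __init__(self, num_buckets: int = 8):
        self.buckets = [Bucket() for _ in range(num_buckets)]

    def _locate(self, key: str):
        h = _hash64(key)
        # Low bits pick the bucket; the top byte serves as the fingerprint.
        return self.buckets[h % len(self.buckets)], (h >> 56) & 0xFF

    def get(self, key: str):
        bucket, fp = self._locate(key)
        while bucket is not None:
            for i, slot_fp in enumerate(bucket.fingerprints):
                # Only compare full keys when the cheap fingerprint matches.
                if slot_fp == fp and bucket.entries[i][0] == key:
                    return bucket.entries[i][1]
            bucket = bucket.child
        return None

    def set(self, key: str, value) -> None:
        bucket, fp = self._locate(key)
        while True:
            for i, slot_fp in enumerate(bucket.fingerprints):
                if slot_fp == fp and bucket.entries[i][0] == key:
                    bucket.entries[i] = (key, value)  # overwrite an existing key
                    return
            if len(bucket.entries) < SLOTS_PER_BUCKET:
                bucket.fingerprints.append(fp)
                bucket.entries.append((key, value))
                return
            if bucket.child is None:
                bucket.child = Bucket()  # bucket full: chain a child bucket
            bucket = bucket.child
```

Grouping several entries per bucket means most lookups touch a single contiguous region. The fingerprint check skips most non-matching keys without dereferencing them, which is the effect the redesign targets.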

The ecommerce use case described earlier is a good example of these memory savings in action. We stored usernames, which averaged about 16 bytes, and users' session information, which also averaged about 16 bytes. We deployed our cache on a three-node cluster of cache.r7g.xlarge nodes, with each node using 21.2 GB of the 28.26 GB available on that node type. We then used the ElastiCache in-place, zero-downtime upgrade to move our cache from Valkey 7.2 to Valkey 8.0, and then to Valkey 8.1. The Amazon CloudWatch graph of memory usage for this cache shows that each upgrade reduced memory usage by about 20%. After both upgrades, the cache used only 12.5 GB of memory per node. This let us downsize to cache.r7g.large nodes and cut cost by 50% for the same workload.
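The savings figures in this section can be checked with a few lines of arithmetic. This Python sketch uses only numbers quoted in this post:

- a 20% saving per upgrade
- 21.2 GB per node before the upgrades
- 12.5 GB per node after both upgrades
- 28.26 GB per cache.r7g.xlarge node

The function names are illustrative.

```python
def compound_reduction(per_step: float, steps: int) -> float:
    """Total fractional reduction after applying the same saving `steps` times."""
    return 1 - (1 - per_step) ** steps


def implied_per_step_reduction(before_gb: float, after_gb: float, steps: int) -> float:
    """Per-upgrade saving that turns before_gb into after_gb over `steps` upgrades."""
    return 1 - (after_gb / before_gb) ** (1 / steps)


# Figures from the example: per-node memory on Valkey 7.2, then after 8.0 and 8.1.
BEFORE_GB, AFTER_GB, NODE_CAPACITY_GB = 21.2, 12.5, 28.26

expected_total = compound_reduction(0.20, 2)       # 1 - (0.8 * 0.8) = 0.36
expected_after = BEFORE_GB * (1 - expected_total)  # footprint if each step saved exactly 20%
measured_total = 1 - AFTER_GB / BEFORE_GB          # reduction actually observed
per_step = implied_per_step_reduction(BEFORE_GB, AFTER_GB, 2)

print(f"expected total reduction: {expected_total:.0%}")
print(f"expected footprint after two upgrades: {expected_after:.1f} GB")
print(f"measured total reduction: {measured_total:.0%}")
print(f"implied reduction per upgrade: {per_step:.0%}")
print(f"node utilization before/after: {BEFORE_GB / NODE_CAPACITY_GB:.0%} / "
      f"{AFTER_GB / NODE_CAPACITY_GB:.0%}")
```

Two flat 20% savings would predict about 13.6 GB per node. The measured 12.5 GB corresponds to roughly a 41% total drop, or about 23% per upgrade, which is consistent with the "about 20%" per-upgrade figure.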

Bloom filters: Fast, space-efficient lookup

ElastiCache version 8.1 for Valkey introduces Bloom filters, a new data type contributed by AWS to the Valkey project. A Bloom filter is a space-efficient probabilistic data structure that you can use to quickly check whether an item might be in a set. It can return false positives but never false negatives, so a negative answer means the item is definitely absent.

In the ecommerce use case, imagine you want to avoid showing the same personalized ad to a user more than once. Previously, you could use the set data type: create one set per ad, add each user who has received that ad to its set, and check that set when your application decides which ad to show. Bloom filters achieve the same outcome by accepting a small chance of false positives. Here, a false positive means occasionally skipping an ad the user hasn't actually seen. In exchange, they use as much as 98% less memory, without compromising performance.

This new feature is fully compatible with the valkey-bloom module and API compatible with the Bloom filter command syntax of the Valkey client libraries, such as valkey-py, valkey-java, and valkey-go. For an in-depth look at how Bloom filters work in ElastiCache for Valkey with real-world examples, see Implement fast, space-efficient lookups using Bloom filters in Amazon ElastiCache.
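To show why a Bloom filter is so compact, here is a minimal client-side Python sketch of the data structure. It uses the textbook sizing formulas and double hashing over a SHA-256 digest. It is an illustration of the technique, not the valkey-bloom implementation. On ElastiCache you would use server-side commands such as BF.ADD and BF.EXISTS instead. The per-ad filter and the `user:<id>` key format are hypothetical.

```python
import hashlib
import math


def bloom_parameters(capacity: int, error_rate: float) -> tuple[int, int]:
    """Textbook sizing: bit count m and hash count k for n items at false-positive rate p."""
    m = math.ceil(-capacity * math.log(error_rate) / (math.log(2) ** 2))
    k = max(1, round(m / capacity * math.log(2)))
    return m, k


class BloomFilter:
    def __init__(self, capacity: int, error_rate: float = 0.01):
        self.num_bits, self.num_hashes = bloom_parameters(capacity, error_rate)
        self.bits = bytearray((self.num_bits + 7) // 8)

    def _positions(self, item: str):
        # Double hashing: derive k bit positions from two 64-bit halves of one digest.
        digest = hashlib.sha256(item.encode()).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # False means definitely absent; True means present or a false positive.
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


# Hypothetical usage: one filter per ad, recording the users who have seen it.
seen_ad_42 = BloomFilter(capacity=10_000, error_rate=0.01)
for user_id in range(5_000):
    seen_ad_42.add(f"user:{user_id}")
print(seen_ad_42.might_contain("user:123"))
```

At a 1% false-positive rate, the formulas give about 9.6 bits (roughly 1.2 bytes) per item, regardless of how long each user ID is. That independence from element size is where the large memory savings over a set come from.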

Enhanced observability

Observability is essential for operating large-scale caches reliably and cost-effectively. For example, in the ecommerce use case, customers often cache product data or session tokens and expect sub-millisecond response times. When latency spikes occur, especially under peak load, it's critical to quickly diagnose which requests are causing slowdowns and why.

Valkey provides the SLOWLOG command to help identify long-running commands. However, SLOWLOG only captures the time spent executing a command, so it can miss other bottlenecks such as excessive network bandwidth usage. ElastiCache version 8.1 for Valkey adds the new COMMANDLOG feature. COMMANDLOG records commands that are slow to execute, and also commands with large requests or large replies. For each one, it captures the arguments, the size, and the client that issued the command. This enables much deeper root cause analysis than before.

Consider an example from the ecommerce use case. Suppose a new backend service starts caching product videos. Soon, the cache nodes begin exceeding their network bandwidth limits, which introduces latency spikes. Using COMMANDLOG, you can discover that some of the videos weren't properly compressed, which substantially increased network usage. For example, the large-reply log might contain an entry for a GET of a video key. The third field of that entry, the reply size, would show a response of over 2 MB.
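As a starting point for that investigation, here is a minimal Python sketch that turns raw COMMANDLOG entries into readable records and keeps the replies above a size threshold.

- **Fetching:** `fetch_large_replies` sends `COMMANDLOG GET <count> large-reply` through valkey-py's generic `execute_command`.
- **Entry layout (assumption):** each entry is taken to follow the same six-field layout as a SLOWLOG entry: ID, timestamp, size, arguments, client address, client name. Check this against the Valkey COMMANDLOG documentation before relying on it.
- **Names:** the function names and the threshold are hypothetical.

```python
from typing import Any, Iterable


def _to_str(value: Any) -> str:
    """valkey-py returns raw replies as bytes; normalize them to str."""
    return value.decode() if isinstance(value, bytes) else str(value)


def large_replies(entries: Iterable[list], threshold_bytes: int) -> list[dict]:
    """Keep COMMANDLOG large-reply entries above threshold_bytes, largest first.

    Assumed entry layout (modeled on SLOWLOG):
    [id, unix_timestamp, reply_size_bytes, [command, *args], client_addr, client_name]
    """
    results = []
    for entry_id, timestamp, size, argv, client_addr, client_name in entries:
        if int(size) > threshold_bytes:
            results.append({
                "id": int(entry_id),
                "timestamp": int(timestamp),
                "reply_bytes": int(size),
                "command": " ".join(_to_str(a) for a in argv),
                "client": _to_str(client_addr),
                "client_name": _to_str(client_name),
            })
    return sorted(results, key=lambda r: r["reply_bytes"], reverse=True)


def fetch_large_replies(client, count: int = 10) -> list:
    """Fetch the newest large-reply entries; `client` is assumed to be a valkey-py client."""
    return client.execute_command("COMMANDLOG", "GET", count, "large-reply")
```

A call such as `large_replies(fetch_large_replies(client), threshold_bytes=1_000_000)` would surface the uncompressed video GETs, along with the client address and name of the service issuing them.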

Now that you have visibility into what is consuming so much bandwidth, you can investigate how best to prevent it from recurring.

Conditional updates with the SET command

In a distributed system, multiple services might update the same cached value at the same time. This can introduce race conditions in which one service overwrites a value written by another. These race conditions are traditionally solved with conditional updates: before writing, you check whether the value has changed since you last read it. Valkey has several built-in ways to do this, such as WATCH with MULTI/EXEC transactions and Lua scripts run with EVAL. Starting in Valkey 8.1, there is a simpler primitive: the conditional set.

On the ecommerce website, multiple services might be responsible for proactively updating cached product entries when product data changes in the backend. In earlier versions of Valkey, these services might have used a Lua script, run with EVAL, to update the price only if the cached value still matched the value they had last read. Because Valkey executes a script atomically, no other command can change the value between the check and the write.
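A script of that kind might look like the following. It is wrapped in a small Python helper that sends it through valkey-py's `eval(script, numkeys, *keys_and_args)`. The script body, the helper name, and the key naming are hypothetical; this is a sketch of the pattern, not a prescribed implementation.

```python
# Hypothetical Lua script: update the cached price only if the value currently in
# the cache still matches the value this service last read (compare-and-set).
CONDITIONAL_UPDATE_LUA = """
local current = redis.call('GET', KEYS[1])
if current == ARGV[1] then
    redis.call('SET', KEYS[1], ARGV[2])
    return 1
end
return 0
"""


def update_price_with_script(client, key: str, expected: str, new_price: str) -> bool:
    """Run the check-and-set atomically on the server through EVAL.

    `client` is assumed to be a valkey-py client. Returns True if the cached
    value was updated, False if it had changed (or the key was missing).
    """
    return client.eval(CONDITIONAL_UPDATE_LUA, 1, key, expected, new_price) == 1
```

Every service has to ship and maintain this script, and each call carries the script body (or its SHA, if you use EVALSHA).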

With Valkey 8.1, the SET command itself can perform this check. Its IFEQ option sets the key only if the key's current value matches a given comparison value: SET key value IFEQ comparison-value. This removes the need to write and maintain a custom script for the same logic, and the single command is simpler and runs more efficiently.
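The same update can be sketched with SET and IFEQ in Python, again through valkey-py's generic `execute_command`. The block also includes `InMemoryIfeqModel`, a hypothetical in-memory stand-in for local experimentation. It models only the IFEQ behavior described in this section: the value is set when it matches, and nothing happens when it does not match or when the key is missing.

```python
def update_price_with_ifeq(client, key: str, expected: str, new_price: str) -> bool:
    """SET key new_price IFEQ expected (Valkey 8.1+): one command, no script.

    `client` is assumed to be a valkey-py client. The server replies OK when the
    key's current value equals `expected` and nil otherwise.
    """
    return bool(client.execute_command("SET", key, new_price, "IFEQ", expected))


class InMemoryIfeqModel:
    """Hypothetical stand-in that models only SET with an optional IFEQ condition."""

    def __init__(self):
        self.data: dict[str, str] = {}

    def execute_command(self, *args):
        cmd, key, value, *opts = args
        if cmd != "SET":
            raise ValueError("this model only understands SET")
        if opts[:1] == ["IFEQ"] and self.data.get(key) != opts[1]:
            return None  # condition failed: value left unchanged, key not created
        self.data[key] = value
        return "OK"


# Two services both read 19.99, then race to write a new price.
cache = InMemoryIfeqModel()
cache.data["product:42:price"] = "19.99"
print(update_price_with_ifeq(cache, "product:42:price", "19.99", "17.99"))  # True
print(update_price_with_ifeq(cache, "product:42:price", "19.99", "18.49"))  # False
```

The second writer's update is rejected because the value it last read is stale. That writer can re-read the current value and decide whether to retry, instead of silently overwriting the first update.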

The future of in-memory data

At AWS, we believe Valkey represents the future of in-memory data stores. It’s fast, lightweight, and production ready. But more importantly, it’s led by a global community of engineers committed to building the best possible system together—and doing it in the open. We’re proud to support Valkey in Amazon ElastiCache, where customers can run it with all the scalability, security, and operational excellence they expect from AWS. And we’ll continue to contribute engineering time, testing, benchmarking, and patches upstream so that the entire community benefits.

The last year has proven that open source done right (open governance, open development, and open innovation) can thrive. Valkey is proof of that. To everyone who has contributed, tested, deployed, or supported Valkey over the past year: thank you. We're just getting started. To join the community and contribute to the project, visit the Valkey page on GitHub.

ElastiCache version 8.1 for Valkey is now available in all AWS Regions. In ElastiCache, you can upgrade from previous versions of Valkey or Redis OSS to Valkey 8.1 in a few clicks without downtime. You can get started using the AWS Management Console, AWS SDKs, or AWS Command Line Interface (AWS CLI). For more information about Valkey 8.1, visit valkey.io.
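For the SDK route, here is a minimal Python sketch using boto3. It assumes `client` is `boto3.client("elasticache")` and that the cache is a node-based replication group. The call is `modify_replication_group` with `Engine` and `EngineVersion`; the same parameters also cover moving a Redis OSS replication group to Valkey. If the cluster uses a custom parameter group, you may also need to pass a compatible Valkey parameter group through `CacheParameterGroupName`. Check the ModifyReplicationGroup API reference for your situation. The replication group ID is hypothetical.

```python
def upgrade_to_valkey_81(client, replication_group_id: str) -> dict:
    """Request an in-place engine upgrade of an ElastiCache replication group.

    `client` is assumed to be boto3.client("elasticache"). ApplyImmediately=True
    starts the upgrade now instead of waiting for the maintenance window.
    """
    return client.modify_replication_group(
        ReplicationGroupId=replication_group_id,
        Engine="valkey",
        EngineVersion="8.1",
        ApplyImmediately=True,
    )
```

A call such as `upgrade_to_valkey_81(boto3.client("elasticache"), "my-ecommerce-cache")` returns the replication group description. You can then poll it with `describe_replication_groups` until the status returns to `available`.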

For more information about ElastiCache version 8.1 for Valkey, visit Amazon ElastiCache features and Amazon ElastiCache Documentation.