AWS Contact Center

How Empower scaled contact center quality assurance with Amazon Connect and Amazon Bedrock

By Marcos Ortiz (AWS), Ryan Baham (Empower), Illan Geller (Accenture), Ozlem Celik-Tinmaz (Accenture), and Prabhu Akula (Accenture)

Introduction

Empower is a leading financial services company serving over 18 million Americans with $1.8 trillion in assets under administration. They take approximately 10 million customer calls annually through their care centers. To maintain service excellence at this scale, Empower teamed with AWS and Accenture to transform their quality assurance (QA) process using generative AI. By implementing a custom solution with Amazon Connect and Amazon Bedrock, Empower can scale call coverage for quality assurance by 20x, now analyzing thousands of call transcriptions daily and reducing QA review time from days to minutes.

In this post, we explore how this three-way collaboration took a generative AI solution from experiment to production in just 7 months, demonstrating the power of combining AWS technology, Accenture’s implementation expertise, and Empower’s Technology Innovation Lab vision.

The challenge: Manual quality assurance at scale

Empower’s contact center employs a comprehensive evaluation framework called GEDAC (Greet, Engage, Discover, Act, Close) to assess agent performance across five key skill areas. Each area encompasses numerous sub-skills, from greeting customers appropriately to maintaining a friendly demeanor and responding promptly. Quality analysts manually reviewed call recordings and scored agents against predetermined criteria for each skill area.

This manual process presented several challenges. Because human reviewers could evaluate only a small subset of the 10 million annual calls, coverage remained limited. The manual process also left room for inconsistent assessments, as different evaluators could score identical interactions differently. Furthermore, the time required for each evaluation restricted the number of possible reviews, which delayed feedback: agents received performance assessments days or even weeks after their customer interactions. As call volumes continued to grow, these scalability constraints made comprehensive coverage increasingly difficult to achieve.

“We recognized that to truly enhance our customer experience at scale, we needed to fundamentally reimagine our approach to quality assurance,” says Joe Mieras, VP of Participant Services at Empower. “The manual process simply couldn’t keep pace with our growth and our commitment to service excellence.”

This challenge aligned perfectly with Empower’s Technology Innovation Lab mission to ignite innovation by testing new capabilities, accelerating new technologies, and differentiating the customer experience through safe and transparent exploration. The Lab, which had already engaged over 11,000 associates through demos and roadshows while researching 80+ emerging technologies, identified call QA as a prime candidate for generative AI experimentation.

Solution overview: Automated QA with generative AI

Working with AWS and Accenture, Empower developed an automated QA solution built on Amazon Connect Contact Lens, which Empower had already enabled and which was already producing high-quality, PII-redacted transcriptions. By combining these ready-to-use transcriptions with Amazon Bedrock and Anthropic’s Claude 3.5 Sonnet for intelligent evaluation, the team avoided weeks of extract, transform, and load (ETL) and data masking development. The solution processes 5,000 pre-redacted transcriptions per day in batches, evaluating agents across all GEDAC categories.
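To make “ready-to-use transcriptions” concrete, the following minimal Python sketch flattens a Contact Lens post-call analysis file into speaker-labeled text that could be placed in a prompt. It assumes the output JSON contains a `Transcript` list whose turns carry `ParticipantId`, `Content`, and `BeginOffsetMillis` fields; verify these against the files in your own bucket. The function name `transcript_to_text` is hypothetical, and in the sample the redacted name appears as a `[PII]` placeholder.

```python
from typing import Any


def transcript_to_text(analysis: dict[str, Any]) -> str:
    """Flatten a Contact Lens analysis document into 'SPEAKER: text' lines.

    Assumes a "Transcript" list of turns with "ParticipantId", "Content",
    and "BeginOffsetMillis" keys (an assumption about the output schema).
    """
    # Order turns by start time so the conversation reads chronologically.
    turns = sorted(
        analysis.get("Transcript", []),
        key=lambda turn: turn.get("BeginOffsetMillis", 0),
    )
    lines = []
    for turn in turns:
        speaker = turn.get("ParticipantId", "UNKNOWN")
        content = turn.get("Content", "").strip()
        if content:  # skip empty or whitespace-only turns
            lines.append(f"{speaker}: {content}")
    return "\n".join(lines)


if __name__ == "__main__":
    sample = {
        "Transcript": [
            {"ParticipantId": "CUSTOMER", "Content": "Hi, my name is [PII].", "BeginOffsetMillis": 4200},
            {"ParticipantId": "AGENT", "Content": "Thanks for calling, how can I help?", "BeginOffsetMillis": 0},
        ]
    }
    print(transcript_to_text(sample))
```

Because redaction has already happened upstream, this step only reshapes text; it never sees raw PII.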

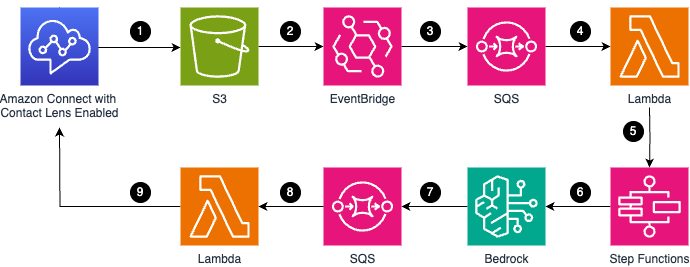

The following diagram illustrates the high-level solution architecture:

High-level architecture diagram

The workflow consists of the following steps:

- Call transcription: Amazon Connect Contact Lens automatically transcribes customer calls with high accuracy, redacts PII, and captures speaker diarization, sentiment, and other metadata. It stores the transcription files in Amazon S3, eliminating the need for custom data pipelines.

- Event notification: Amazon EventBridge detects new transcription files in S3.

- Queue management: EventBridge sends a message to Amazon SQS, which manages the queue of transcriptions to be processed, ensuring reliable and scalable batch processing.

- Batch processing: AWS Lambda functions poll the SQS queue and retrieve transcription batches for processing.

- GEDAC process orchestration: The Lambda function starts the associated AWS Step Functions workflow, which evaluates each call transcription against all topics in the GEDAC framework.

- AI evaluation: The Step Functions workflow sends each transcription to Amazon Bedrock, where Claude 3.5 Sonnet evaluates agent performance across the five GEDAC categories.

- Results queuing: Evaluation results are sent to another SQS queue for controlled processing and delivery.

- Results processing: A second Lambda function processes the evaluation results from the queue.

- Results delivery: The Lambda function writes the evaluation results back to Amazon Connect using the Agent Evaluation API. Managers can view them directly in the existing Amazon Connect Quality Management interface—no custom GUI development required.
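The batch-processing and orchestration steps can be sketched as an SQS-triggered Lambda handler. This is a minimal example under several assumptions: each SQS message body is the raw EventBridge `Object Created` event, the state machine ARN comes from a hypothetical `GEDAC_STATE_MACHINE_ARN` environment variable, and the workflow expects a hypothetical `{"bucket", "key"}` input. The Step Functions `start_execution` method is passed in as a callable so the logic can be exercised without AWS credentials.

```python
import json
import os
from typing import Any, Callable

# Hypothetical environment variable naming the GEDAC evaluation state machine.
STATE_MACHINE_ARN = os.environ.get(
    "GEDAC_STATE_MACHINE_ARN",
    "arn:aws:states:us-east-1:111122223333:stateMachine:gedac-eval",
)


def extract_s3_object(record: dict[str, Any]) -> tuple[str, str]:
    """Return (bucket, key) from an SQS record whose body is an EventBridge S3 event."""
    event = json.loads(record["body"])
    detail = event["detail"]
    return detail["bucket"]["name"], detail["object"]["key"]


def process_batch(event: dict[str, Any], start_execution: Callable[..., Any]) -> dict[str, Any]:
    """Start one workflow per transcript; report failed messages individually."""
    failures = []
    for record in event.get("Records", []):
        try:
            bucket, key = extract_s3_object(record)
            start_execution(
                stateMachineArn=STATE_MACHINE_ARN,
                input=json.dumps({"bucket": bucket, "key": key}),
            )
        except Exception:
            # Only this message is retried when partial batch responses are enabled.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


def handler(event, context):
    import boto3  # included in the AWS Lambda Python runtime

    sfn = boto3.client("stepfunctions")
    return process_batch(event, sfn.start_execution)
```

Returning `batchItemFailures` lets SQS retry only the failed messages, but Lambda honors it only when `ReportBatchItemFailures` is enabled on the event source mapping; otherwise, a handler that returns normally causes the entire batch to be treated as processed.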

Leveraging AWS services

A critical factor in the solution’s rapid development and deployment was the ability to combine Amazon Connect, Empower’s cloud contact center, with existing AWS services and features instead of building everything from scratch.

Amazon Connect Contact Lens already provided automatic redaction of personally identifiable information (PII) in call transcriptions. This eliminated the need for Empower to implement custom ETL pipelines and data masking solutions, significantly reducing development time and ensuring compliance with data protection requirements from day one. The team could focus on building the evaluation logic with data privacy and compliance already addressed.

Contact Lens automatically writes call transcription files to Amazon S3, and their arrival triggers downstream processing. This native integration eliminated the need for custom data movement solutions and provided a reliable, scalable foundation for the batch processing pipeline.
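For EventBridge to detect new transcription files, the bucket must have Amazon EventBridge notifications enabled, and a rule must match the resulting `Object Created` events. The sketch below builds request parameters for the EventBridge `PutRule` and `PutTargets` APIs. The bucket name, rule name, target ID, and the `Analysis/Voice/Redacted/` prefix (where Contact Lens is assumed to write redacted output) are illustrative assumptions.

```python
import json


def build_rule_request(bucket: str, prefix: str, rule_name: str) -> dict:
    """Keyword arguments for EventBridge PutRule matching new transcript files."""
    pattern = {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            # Prefix matching limits the rule to the (assumed) redacted output folder.
            "object": {"key": [{"prefix": prefix}]},
        },
    }
    return {"Name": rule_name, "EventPattern": json.dumps(pattern), "State": "ENABLED"}


def build_target_request(rule_name: str, queue_arn: str) -> dict:
    """Keyword arguments for EventBridge PutTargets routing matches to an SQS queue."""
    return {"Rule": rule_name, "Targets": [{"Id": "transcripts-queue", "Arn": queue_arn}]}
```

With boto3, these dictionaries could be passed as `events.put_rule(**rule)` and `events.put_targets(**targets)`. The SQS queue policy also needs to allow `events.amazonaws.com` to send messages to the queue.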

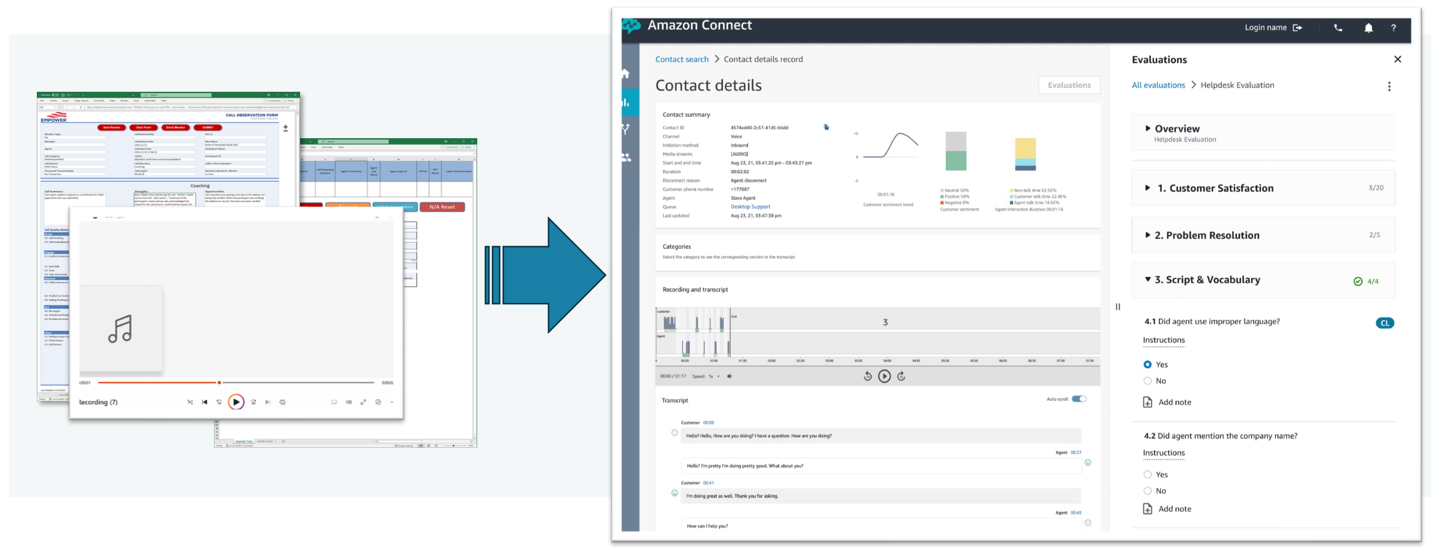

Additionally, the existing agent performance evaluation features available in Amazon Connect provided a ready-made user interface for displaying evaluation results. The solution uses the Amazon Connect Agent Evaluation API to write the evaluation outputs from Bedrock directly into Amazon Connect, where managers can view them alongside other quality metrics in a familiar interface. “We didn’t need to reinvent the wheel,” explains Joseph Mieras, VP of Customer Experience at Empower. “By using the Amazon Connect Quality Management API, we could present AI-generated evaluations in the same interface our team was already using, dramatically improving adoption and reducing training requirements.”
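Writing results back can be done with two Amazon Connect calls: `StartContactEvaluation` to open an evaluation for a contact against an evaluation form, and `SubmitContactEvaluation` to record answers and notes. The sketch below assumes an evaluation form with one numeric question per GEDAC category; the question `RefId` values in `QUESTION_REF_IDS` are hypothetical and would come from your own form definition. Each score goes into `Answers` and its rationale into `Notes`, so managers can see why a score was given.

```python
from typing import Any

# Hypothetical mapping from GEDAC category to question RefIds in an
# Amazon Connect evaluation form; real RefIds come from your form.
QUESTION_REF_IDS = {
    "greet": "q-greet",
    "engage": "q-engage",
    "discover": "q-discover",
    "act": "q-act",
    "close": "q-close",
}


def build_evaluation_payload(results: dict[str, dict[str, Any]]) -> tuple[dict, dict]:
    """Convert per-category scores and rationales into Answers and Notes maps."""
    answers: dict[str, Any] = {}
    notes: dict[str, Any] = {}
    for category, ref_id in QUESTION_REF_IDS.items():
        result = results.get(category)
        if result is None:
            # Only valid if the form question allows "not applicable".
            answers[ref_id] = {"Value": {"NotApplicable": True}}
            continue
        answers[ref_id] = {"Value": {"NumericValue": float(result["score"])}}
        notes[ref_id] = {"Value": result["rationale"]}
    return answers, notes


def submit(connect, instance_id: str, contact_id: str, form_id: str,
           results: dict[str, dict[str, Any]]) -> str:
    """Open an evaluation for the contact, submit it, and return its ID."""
    evaluation = connect.start_contact_evaluation(
        InstanceId=instance_id, ContactId=contact_id, EvaluationFormId=form_id
    )
    answers, notes = build_evaluation_payload(results)
    connect.submit_contact_evaluation(
        InstanceId=instance_id,
        EvaluationId=evaluation["EvaluationId"],
        Answers=answers,
        Notes=notes,
    )
    return evaluation["EvaluationId"]
```

In the results-processing Lambda function, `connect` would be `boto3.client("connect")`.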

Amazon Connect Agent Evaluation GUI

Why custom implementation over built-in features

The agent performance evaluation features of Amazon Connect provide excellent out-of-the-box functionality for many organizations. However, Empower’s GEDAC framework represents decades of refinement specific to their business. Because Amazon Connect can be extended with Amazon Bedrock, Empower could implement their exact evaluation criteria while retaining the ability to evolve the solution as their needs change.

This solution allowed Empower to implement their GEDAC methodology with nuanced evaluation across multiple sub-skills, capturing the specific criteria that make their framework unique. It provided the flexibility to adjust prompts and evaluation criteria without system changes, enabling continuous refinement based on business needs. Additionally, it delivered detailed rationale for each score, providing the explainability needed to support effective agent coaching and performance improvement.
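One way to get this flexibility is to keep the evaluation criteria as data, generate the prompt from them, and validate the model’s structured response before storing it. The following sketch assumes a 1 to 5 scoring scale and uses abbreviated, hypothetical criteria text; Empower’s actual GEDAC sub-skills and prompts are not shown in this post. Requesting a rationale alongside each score is what supplies the per-score explanation described above.

```python
import json
from typing import Any

# Hypothetical, abbreviated criteria; in practice these could live in
# configuration so analysts can refine them without redeploying code.
GEDAC_CRITERIA = {
    "greet": "Agent greets the caller professionally and identifies themselves.",
    "engage": "Agent maintains a friendly demeanor and responds promptly.",
    "discover": "Agent asks questions to understand the caller's need.",
    "act": "Agent resolves the request accurately.",
    "close": "Agent confirms resolution and closes the call courteously.",
}


def build_prompt(transcript: str, criteria: dict[str, str]) -> str:
    """Render the criteria and transcript into a single evaluation prompt."""
    rubric = "\n".join(f"- {name}: {text}" for name, text in criteria.items())
    return (
        "Evaluate the agent in the call transcript against each criterion below.\n"
        f"{rubric}\n\n"
        "Respond with only a JSON object that maps each criterion name to "
        '{"score": <integer 1-5>, "rationale": "<short justification citing the transcript>"}.\n\n'
        f"<transcript>\n{transcript}\n</transcript>"
    )


def parse_evaluation(raw: str, criteria: dict[str, str]) -> dict[str, dict[str, Any]]:
    """Parse and validate the model's JSON; raise ValueError on bad output."""
    data = json.loads(raw)  # JSONDecodeError is a subclass of ValueError
    missing = set(criteria) - set(data)
    if missing:
        raise ValueError(f"model response is missing criteria: {sorted(missing)}")
    result = {}
    for name in criteria:
        score = int(data[name]["score"])
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range for {name}: {score}")
        result[name] = {"score": score, "rationale": str(data[name]["rationale"])}
    return result
```

Rejecting malformed or out-of-range responses before they reach Amazon Connect keeps a single bad generation from surfacing as a misleading score; a failed parse can simply be retried or routed to a human reviewer.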

Security and responsible AI considerations

The solution employs robust security measures to protect sensitive information throughout the evaluation process. All data is encrypted both in transit and at rest to protect against unauthorized access. Role-based access controls ensure that only authorized personnel can view evaluation results, maintaining strict data governance. Automated policies manage the data lifecycle in accordance with regulatory requirements, while comprehensive audit logging provides a complete trail of all system activities for compliance and security monitoring purposes.

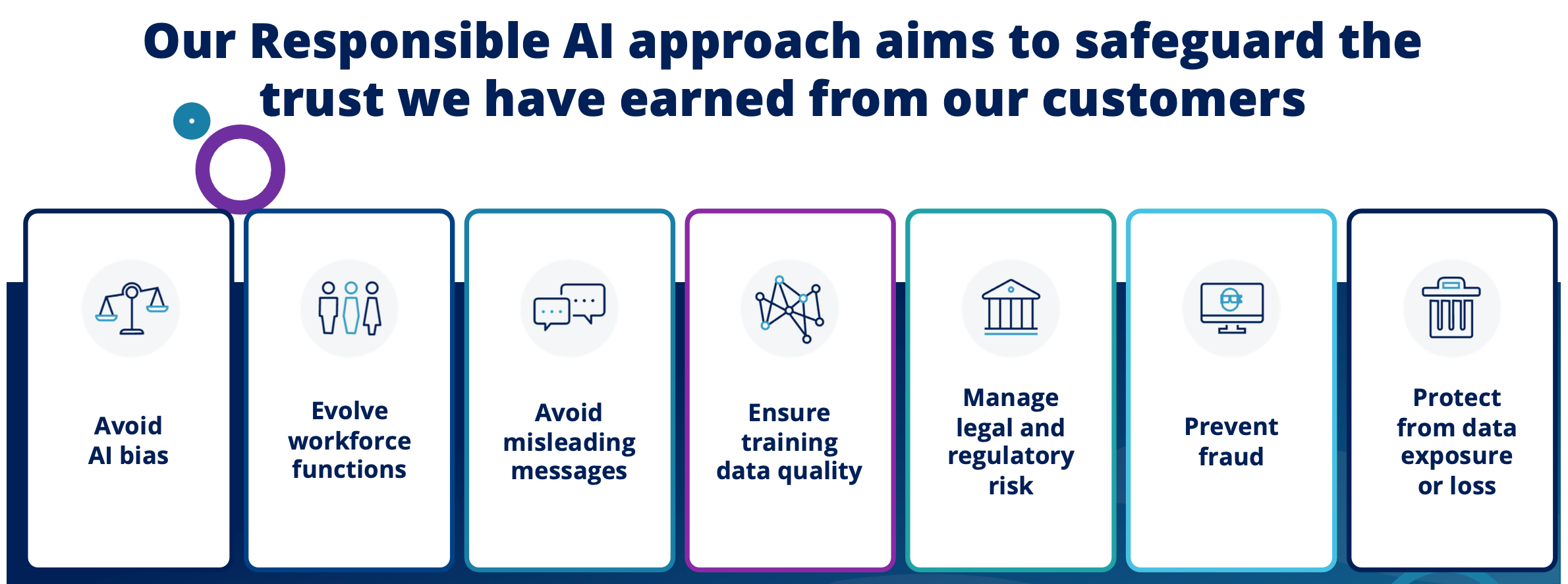

Empower has implemented a robust AI governance framework that addresses multiple dimensions of responsible AI. An AI Governance Committee provides centralized oversight of all AI use and development, reviewing and assessing risks prior to execution. The company has established comprehensive legal and compliance guardrails for AI model development and use, along with a model oversight process that maintains a centralized AI inventory with transparent ownership, ongoing supervision, and formalized annual certification.

Empower’s Responsible AI Approach

This multi-faceted approach actively works to avoid AI bias, prevent fraud, protect from data exposure, manage legal and regulatory risk, and ensure training data quality. The framework also emphasizes transparency, allowing agents to review AI evaluations and understand scoring rationale, while maintaining human oversight where quality analysts can review and override AI evaluations. Continuous monitoring ensures ongoing assessment of model performance and fairness, creating a sustainable and ethical AI implementation.

The power of the AWS, Accenture, and Empower partnership

The successful implementation of this solution highlights the value of strategic partnerships in delivering enterprise AI solutions. On the AWS side, Amazon Connect, as Empower’s contact center solution, delivers the high-quality transcriptions essential for accurate evaluation, and Amazon Bedrock offers access to cutting-edge foundation models with enterprise-grade security. The AWS team provided architecture guidance to ensure the design could scale and perform, and shared learnings from similar implementations across the financial services industry, bringing valuable best practices to the project.

Accenture’s role as implementation partner was crucial in translating Empower’s requirements into a production-ready solution. The team developed sophisticated prompts that accurately captured GEDAC evaluation criteria, conducting multiple rounds of testing and optimization with domain experts to ensure precision. They ensured seamless integration with Empower’s existing technology stack while supporting the organizational transition to AI-augmented quality assurance through comprehensive change management. The team worked closely with Empower’s quality analysts to understand the nuances of their evaluation criteria, then translated that expertise into prompts that Claude 3.5 Sonnet could execute consistently and accurately. This collaborative process ensured the AI model could replicate the depth and specificity of human evaluations while maintaining the consistency needed for scaled operations.

Empower’s contribution went beyond being a customer—they were an active partner in solution design. They provided deep understanding of contact center operations and quality requirements, sharing the detailed GEDAC methodology and scoring criteria that formed the foundation of the AI evaluation system. Their quality analysts played a crucial role in validating AI evaluations and providing feedback for continuous improvement. Additionally, Empower positioned this initiative within their broader generative AI transformation strategy, ensuring alignment with long-term organizational goals and creating a blueprint for future AI implementations across the enterprise.

Results and business impact

With this solution, Empower saw a remarkable 20x increase in QA call coverage, expanding from reviewing only a small sample of calls to potentially scoring all of them. Evaluation time decreased from days to minutes, while standardized AI assessments eliminated the inter-evaluator variability that had previously challenged consistency. Amazon Connect handles daily volume fluctuations without requiring additional resources, providing the scalability needed for Empower’s growing operations. Additionally, QA teams can prioritize the calls that need the most feedback, so human reviewers focus their efforts where they add the most value.
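As an illustration of prioritization, the sketch below ranks evaluated calls by average GEDAC score and returns the lowest-scoring ones first for human review. Treating the lowest average as “needs the most feedback” is an assumption made for this example; a real ranking might weight categories differently or factor in customer sentiment. The `CallEvaluation` type and `prioritize_for_review` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CallEvaluation:
    contact_id: str
    scores: dict[str, int]  # GEDAC category -> score


def prioritize_for_review(evaluations: list[CallEvaluation], limit: int) -> list[str]:
    """Return up to `limit` contact IDs, lowest average score first."""

    def average(evaluation: CallEvaluation) -> float:
        # Calls with no scores sort first so they are never silently skipped.
        if not evaluation.scores:
            return 0.0
        return sum(evaluation.scores.values()) / len(evaluation.scores)

    # Contact ID breaks ties so the ordering is deterministic.
    ranked = sorted(evaluations, key=lambda e: (average(e), e.contact_id))
    return [e.contact_id for e in ranked[:limit]]
```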

The implementation has driven substantial cost optimization by automating repetitive manual tasks, allowing QA analysts to redirect their expertise toward high-value activities such as handling complex cases, developing training programs, and providing personalized agent coaching. Rather than spending hours on routine evaluations, QA personnel now focus on strategic improvements that directly enhance customer experience. Beyond immediate efficiency gains, the solution created an extensible foundation that can be reused for other use cases across the organization.

Agents receive performance insights within hours instead of weeks, transforming the feedback cycle. Detailed explanations accompanying each evaluation help managers provide targeted coaching based on specific interaction examples. The analytics capabilities can also reveal patterns across teams and call types that were previously invisible, enabling data-driven improvements to training and processes. Regular model updates incorporate new best practices and sustain a continuous improvement cycle.

“The impact of solutions like this will be transformative,” says Kyle Caffey, VP of Innovation Lab at Empower. “Not only are we dramatically improving our operational efficiency, but we’re also driving better quality, which directly translates to improved customer experiences.”

Lessons learned and best practices

The implementation underscored several key learnings. First and foremost, starting with clear business objectives proved essential. Empower’s specific focus on automating GEDAC evaluations provided measurable success criteria that guided every technical decision. The quality of AI outputs directly correlated with prompt quality, making Accenture’s iterative approach to prompt engineering crucial for achieving the accuracy needed for production deployment.

Planning for scale from day one was another critical success factor. Empower could handle increasing volumes without architectural changes by implementing Amazon Bedrock cross-Region inference and batch processing capabilities early. The team also recognized that AI augments rather than replaces human judgment. Empower’s quality analysts continue to play a vital role in validating AI evaluations and handling edge cases, ensuring the system maintains high quality standards.
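In Amazon Bedrock, cross-Region inference is used by passing an inference profile ID in place of a model ID, which lets Bedrock distribute requests across Regions within the same geography. The sketch below builds a request for the Bedrock Runtime `Converse` API; the `us.` Claude 3.5 Sonnet profile ID, the system prompt, and the zero temperature are assumptions for illustration rather than Empower’s configuration. The client method is injected so the code runs without AWS access.

```python
from typing import Any, Callable

# Assumed US cross-Region inference profile for Claude 3.5 Sonnet;
# confirm which profiles are available in your account and Region.
MODEL_ID = "us.anthropic.claude-3-5-sonnet-20240620-v1:0"


def build_converse_request(prompt: str, max_tokens: int = 2000) -> dict[str, Any]:
    """Keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": MODEL_ID,
        "system": [{"text": "You are a contact center quality analyst."}],
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # A low temperature favors consistent scoring across similar calls.
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.0},
    }


def evaluate(converse: Callable[..., dict[str, Any]], prompt: str) -> str:
    """Send the prompt and return the text of the model's first content block."""
    response = converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]
```

In a Lambda function or Step Functions task, `converse` would be `boto3.client("bedrock-runtime").converse`; configuring the botocore client with `retries={"mode": "adaptive"}` can further smooth throttling at high volume.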

Finally, the implementation reinforced the importance of continuous improvement. Regular reviews of AI evaluations help refine prompts and improve accuracy over time, creating a feedback loop that ensures the system evolves alongside business needs. This iterative approach, combined with strong partnerships and clear objectives, created a blueprint for successful enterprise AI deployment that extends beyond this single use case.

Expanding the vision: What’s next on Empower’s generative AI journey

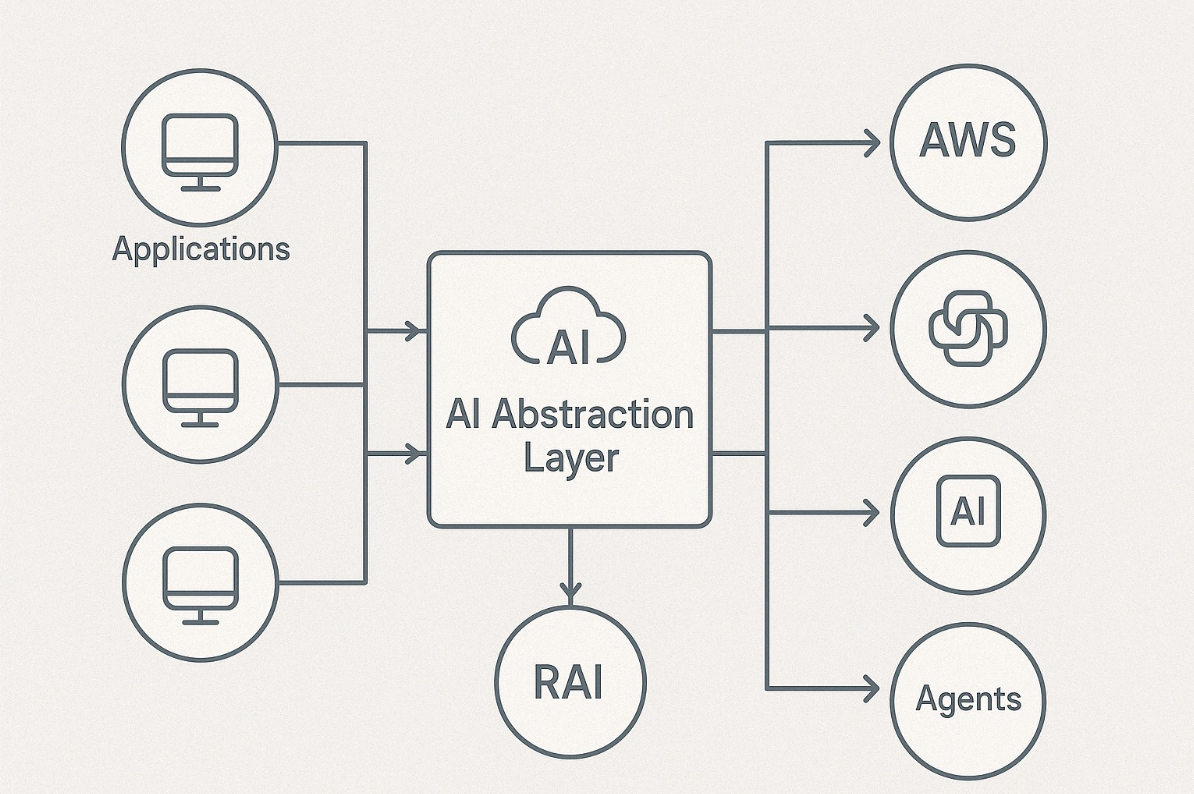

This represents just the beginning of Empower’s generative AI transformation. Building on this success, Empower is developing a centralized generative AI platform to democratize AI capabilities across their 1,500+ developers. This will provide centralized governance for consistent security, compliance, and responsible AI controls. It will offer an abstraction layer for simplified access to various AI models and capabilities, comprehensive usage monitoring for tracking and cost management, and shared best practices with reusable components that accelerate development across teams.

Empower’s Generative AI Abstraction Layer

Empower has identified several additional use cases for implementation that span multiple business functions. These include extending automated evaluation to wealth management and investment advisory calls, training agents with AI-powered conversation simulators, automating the analysis of retirement plan documents, and enhancing developer productivity with AI-powered coding tools. Each use case builds upon the foundation established by the agent QA solution, leveraging proven patterns and architectural decisions.

By combining the robust AI services available in AWS, Accenture’s implementation expertise, and Empower’s domain knowledge, the partnership delivered a solution that not only meets immediate business needs but also lays the foundation for broader AI adoption. As generative AI continues to evolve, these lessons from Empower’s journey will help other organizations navigate their own transformations by starting with clear objectives, choosing the right partners, and maintaining a relentless focus on delivering business value.

About the authors

Marcos Ortiz is a Principal Solutions Architect at AWS, working with enterprise customers to design and implement scalable solutions that accelerate their digital transformation journey and maximize business value.

Ryan Baham is Head of Architecture in Empower’s Innovation Lab, where he leads the company’s generative AI initiatives. Ryan is passionate about leveraging technology to enhance customer experiences and drive operational excellence. Under his leadership, Empower has successfully launched multiple AI-powered solutions that are transforming how the company serves its 18 million customers.

Illan Geller is a Managing Director at Accenture, leading the generative AI practice for financial services. With over 20 years of experience in technology consulting, Illan specializes in helping enterprises implement cutting-edge AI solutions while ensuring alignment with business objectives and responsible AI principles.

Ozlem Celik-Tinmaz is a Managing Director at Accenture and a thought leader in Responsible AI (RAI), with a proven track record of advising organizations on the design and implementation of enterprise-grade AI governance and risk management frameworks. She brings deep expertise in end-to-end RAI strategy, spanning AI governance, RAI policy, technical AI risk controls, and model risk testing for both AI and GenAI systems.

Prabhu Akula is an Associate Director at Accenture, leading the generative AI delivery at Empower. As a visionary in Data & AI, he designs sophisticated GenAI solutions and cutting-edge modern data platforms, driving transformation and harnessing the power of advanced platforms to meet business objectives.