AWS Compute Blog

Understanding and Remediating Cold Starts: An AWS Lambda Perspective

Cold starts are an important consideration when building applications on serverless platforms. In AWS Lambda, they refer to the initialization steps that occur when a function is invoked after a period of inactivity or during rapid scale-up. While typically brief and infrequent, cold starts can introduce additional latency, making it essential to understand them, especially when optimizing performance in responsive and latency-sensitive workloads.

In this article, you’ll gain a deeper understanding of what cold starts are, how they may affect your application’s performance, and how you can design your workloads to reduce or eliminate their impact. With the right strategies and tools provided by AWS, you can efficiently manage cold starts and deliver a consistent, low-latency experience for your users.

What is a cold start?

Cold starts occur because serverless platforms like AWS Lambda are designed for cost-efficiency – you don’t pay for compute resources when your code isn’t running. As a result, Lambda only provisions resources when needed. A cold start happens when there isn’t an existing execution environment available and a new one must be created. This can happen, for example, when a function is invoked for the first time after a period of inactivity or during a burst in traffic that triggers scale-up.

When this occurs, Lambda rapidly provisions and initializes a new execution environment for running your function code. This initialization adds a small amount of latency to the request, but it only occurs once for the lifecycle of that execution environment.

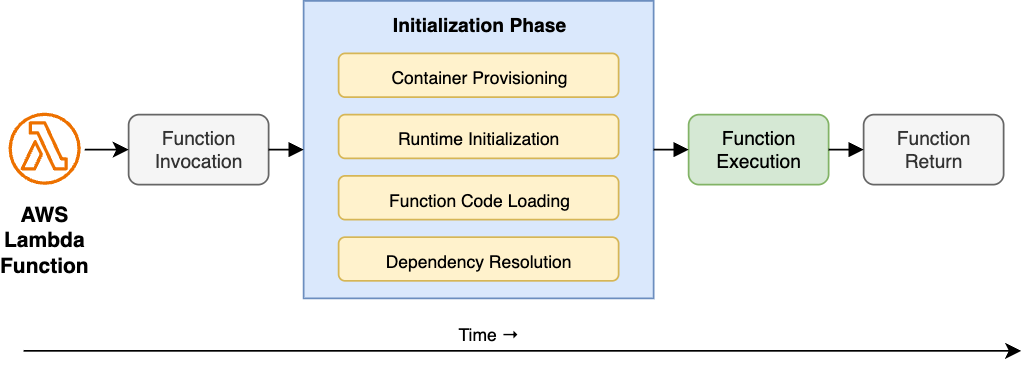

Cold starts consist of several steps that make up the Initialization Phase, which occurs before the function begins running. These steps take place when the Lambda service creates a new execution environment, and together they account for the latency commonly referred to as the INIT duration of the function, as illustrated in the diagram above:

- Container Provisioning: Lambda allocates the necessary compute resources to run the function, based on its configured memory.

- Runtime Initialization: Lambda loads the language runtime environment (Node.js, Python, Java, etc.) into the container. You can also define a custom runtime using the Lambda Runtime API.

- Function Code Loading: Lambda downloads and unpacks the function code into the container.

- Dependency Resolution: Lambda loads required libraries and packages so the function can execute successfully.

While cold starts typically affect less than 1% of requests, they can introduce performance variability in workloads where Lambda needs to create new execution environments more frequently, such as after periods of inactivity or during rapid scaling. This variability can impact perceived response times, especially in latency-sensitive applications such as user-facing APIs.
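The split between one-time initialization and per-invocation work can be sketched with a minimal Python handler. This is an illustration only: the `CONFIG` contents and the `_is_cold_start` flag are hypothetical names introduced here, not part of any Lambda API. Code at module level runs once when the execution environment is created, while the handler body runs on every invocation.

```python
# Module-level code runs once per execution environment, during the INIT phase.
CONFIG = {"table": "example-table"}  # hypothetical one-time setup
_is_cold_start = True  # hypothetical flag; True until the first invocation


def handler(event, context):
    """Entry point; Lambda calls this on every invocation."""
    global _is_cold_start
    cold = _is_cold_start
    _is_cold_start = False  # later invocations in this environment are warm
    return {"cold_start": cold, "table": CONFIG["table"]}
```

Calling `handler` twice in the same process reports a cold start only for the first call, which mirrors how a single execution environment pays the initialization cost once.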

Why do cold starts occur?

Cold starts are a natural aspect of the serverless computing model due to its core design principles:

- Resource Efficiency: To optimize cost and resource usage, AWS Lambda automatically shuts down idle execution environments after a period of inactivity. When the function is invoked again, a new environment must be provisioned.

- Security and Isolation: Each Lambda execution environment runs in an isolated container to ensure strong security boundaries between invocations. This container-level isolation requires a fresh initialization process, which adds startup latency.

- Auto-Scaling: Lambda automatically creates new environments to handle increased traffic or concurrent invocations. Each new environment requires provisioning and initialization, which contributes to cold start latency.

Understanding and optimizing cold start factors

The following sections explore factors contributing to cold starts, and optimization techniques to initialize your functions faster.

Runtime selection

Lambda supports multiple programming languages through runtimes, including the ability to create custom runtimes. A runtime handles core responsibilities such as relaying invocation events, context, and responses between the Lambda service and your function code. The time it takes to initialize a runtime can vary depending on the language. Interpreted languages, such as Python and Node.js, typically initialize faster, while compiled languages like Java or .NET may take longer due to additional startup steps such as loading classes. Custom (OS-only) runtimes commonly provide the fastest cold start performance, as they typically run compiled binaries directly on the underlying Linux environment.

Runtimes are regularly maintained and updated by AWS, with newer versions typically offering improvements in performance, security, and startup latency. To take advantage of these enhancements, AWS recommends keeping your functions up to date with the latest supported runtimes.

Packaging and layers

AWS Lambda supports two packaging options for deploying your function code – ZIP archives and container images. Each approach offers unique advantages and may influence cold start latency depending on how it’s used.

For ZIP-based deployments, you can upload your function code directly (up to 50MB) or via Amazon Simple Storage Service (Amazon S3) (up to 250MB unzipped). To promote reusability, Lambda also supports Lambda layers, allowing you to share common code, libraries, or runtime dependencies across multiple functions. However, larger packages can increase cold start latency due to factors such as longer S3 download time, ZIP extraction overhead, and layer mounting and initialization. The size and number of dependencies directly affect initialization time: each added dependency increases the deployment artifact size, which Lambda must download, unpack, and initialize during the INIT phase.

To optimize cold start performance, keep your deployment ZIP packages small, remove unused dependencies with techniques like tree shaking, prioritize lightweight libraries, exclude unnecessary files like tests or docs, and structure your layers efficiently.
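One way to find candidates for removal is to list the largest files in the directory you are about to zip. The following is a minimal sketch, assuming your build output lives in a local directory; the function name `largest_files` is introduced here for illustration.

```python
import os


def largest_files(root, top_n=10):
    """Return up to top_n (size_bytes, relative_path) pairs, largest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path):  # skip broken symlinks
                sizes.append((os.path.getsize(path), os.path.relpath(path, root)))
    return sorted(sizes, reverse=True)[:top_n]
```

Running `largest_files("build/")` on a hypothetical build directory before packaging often surfaces bundled tests, documentation, or unused binaries that can be excluded.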

When using container-based deployments, you push your function image to Amazon Elastic Container Registry (Amazon ECR) first. This option provides greater flexibility and control over the runtime environment, which is especially useful when your function code exceeds 250MB or when you require a specific language version or system libraries not included in the AWS-managed runtimes. While container images allow for highly customized deployments, pulling large images from ECR might contribute to cold start latency. As with the ZIP-based approach, keep your image sizes minimal by removing unnecessary artifacts.

Resource allocation

Memory allocation plays a key role in both the performance and cost of your Lambda functions. When you assign more memory to a function, Lambda also allocates more CPU power, which can help reduce the time it takes to initialize and run your code – often improving cold start performance.

Use the AWS Lambda Power Tuning tool to balance the performance benefits against the added cost of allocating more memory. This tool runs your function with different memory settings and analyzes the trade-offs between speed and cost, making it easier to find the most cost-effective configuration for your workload.
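The underlying trade-off is simple arithmetic: compute cost scales with memory multiplied by duration, so more memory is cheaper whenever it shortens execution by a larger factor than it increases the memory setting. The sketch below uses made-up measurements and an assumed price per GB-second; check the Lambda pricing page for the actual rate in your Region and architecture. It ignores the per-request fee.

```python
# Assumed price per GB-second; verify against the Lambda pricing page.
PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb, duration_ms, price=PRICE_PER_GB_SECOND):
    """Compute cost of one invocation, ignoring the per-request fee."""
    return (memory_mb / 1024) * (duration_ms / 1000) * price


def cheapest_memory(measurements):
    """Return the memory setting (MB) with the lowest cost per invocation."""
    return min(measurements, key=lambda mb: invocation_cost(mb, measurements[mb]))


# Hypothetical measurements: memory setting (MB) -> average duration (ms).
measurements = {128: 1200.0, 512: 280.0, 1024: 150.0}
```

With these hypothetical numbers, the 512MB setting consumes 0.14 GB-seconds per invocation compared with 0.15 for the other two, so it is both faster than 128MB and the cheapest of the three.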

Network configuration

By default, your Lambda functions are connected to the public internet. You can instead attach them to your own Amazon Virtual Private Cloud (Amazon VPC), for example when your functions need to access VPC-hosted resources such as databases. In that case, the Lambda service creates an Elastic Network Interface (ENI) to attach your functions to. This process involves multiple steps, such as creating the network interfaces and applying your subnet, security group, and route table configuration. While the Lambda service tries to minimize the added latency, this configuration might still introduce some, so use it only when access to VPC resources is necessary.

Design considerations

Optimizing your function initialization code can help to reduce cold start latencies. Streamline your function code to load and prepare quickly, alongside its runtime environment and dependencies. Employ lightweight libraries and implement lazy loading for resources to further cut initialization time. Minimize code size by eliminating unnecessary dependencies. Consider your architecture carefully: break down large functions into smaller, more focused units based on invocation patterns. This approach allows for quicker initialization of individual components. These smaller, task-specific functions offer the added benefits of improved modularity, easier testing, and simpler maintenance. However, always strike a balance between function size and functionality to maintain overall system efficiency. By implementing these optimization strategies, you can substantially mitigate cold start impacts while preserving your application’s core functionality and performance.
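Lazy loading can be sketched as follows. In this illustration the standard-library `decimal` module stands in for a large, rarely needed dependency, and the `action` and `amounts` event fields are hypothetical. The import is deferred until the first request that needs it, so invocations on other code paths do not pay for it during initialization.

```python
import importlib

_heavy_module = None  # cached after first use


def _get_heavy_module():
    """Import the heavy dependency on first use and reuse it afterwards."""
    global _heavy_module
    if _heavy_module is None:
        # "decimal" stands in for a large, rarely needed library.
        _heavy_module = importlib.import_module("decimal")
    return _heavy_module


def handler(event, context):
    """Only the 'report' path loads the heavy dependency."""
    if event.get("action") == "report":
        decimal = _get_heavy_module()
        total = sum(decimal.Decimal(str(x)) for x in event.get("amounts", []))
        return {"total": str(total)}
    return {"status": "ok"}
```

The trade-off is that the first request on the lazy path absorbs the load time instead of the INIT phase, so this pattern suits dependencies used by a minority of invocations.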

Provisioned Concurrency

Provisioned Concurrency addresses cold starts by pre-initializing execution environments and keeping them “warm”, ready to respond to incoming function invocations. This delivers consistent, predictable latency for frequently invoked functions, including during peak loads, at the additional cost of keeping those environments provisioned. Provisioned Concurrency is beneficial for high-traffic applications that require consistent performance under heavy load and for latency-sensitive, interactive applications that require fast responses. The customer success story from Smartsheet demonstrates significant improvement in user experience, with reduced latencies and better cost efficiency.
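As a minimal sketch, Provisioned Concurrency can be configured programmatically through the boto3 Lambda client's `put_provisioned_concurrency_config` call. The wrapper name, the function name `my-function`, the alias `live`, and the value `5` below are placeholders chosen for illustration. The client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
def enable_provisioned_concurrency(lambda_client, function_name, alias, environments):
    """Ask Lambda to keep `environments` pre-initialized environments for an alias."""
    return lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,  # a published version or alias, not $LATEST
        ProvisionedConcurrentExecutions=environments,
    )


# Usage with a real client (requires boto3 and AWS credentials):
#   import boto3
#   enable_provisioned_concurrency(boto3.client("lambda"), "my-function", "live", 5)
```

Pointing the configuration at an alias rather than a version number lets you shift the alias to new versions without changing the callers.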

SnapStart

Lambda SnapStart improves cold start latency by reducing the time it takes for a function to initialize and become ready to handle incoming requests. When SnapStart is enabled for a function, publishing a new function version triggers an optimized INIT phase, after which Lambda takes an immutable, encrypted snapshot of the memory and disk state of the initialized execution environment. This snapshot is cached for later reuse. When a SnapStart-enabled function needs a new execution environment, Lambda restores it from the cached snapshot instead of initializing it from scratch. This reduces invocation latency, since creating a new execution environment no longer requires a dedicated INIT phase.

SnapStart is an efficient cold start solution, currently available for Java, Python, and .NET functions. It is particularly useful for functions with long initialization times. For Java-based runtimes, inactive snapshots are automatically removed after 14 days without invocation. For detailed pricing information, check out our pricing page.

Figure 3: Lambda SnapStart architecture: optimizing cold starts through snapshot-based initialization
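The enable-then-publish sequence described above might look like the following sketch using the boto3 Lambda client. The wrapper name and the function name in the usage comment are placeholders. The client is injected so the flow can be tested with a stand-in object. Because snapshots are created when a version is published, the sketch waits for the configuration update to finish before publishing.

```python
def enable_snapstart_and_publish(lambda_client, function_name):
    """Turn on SnapStart, then publish a version so Lambda takes a snapshot."""
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        SnapStart={"ApplyOn": "PublishedVersions"},
    )
    # Wait for the configuration update to complete before publishing.
    lambda_client.get_waiter("function_updated_v2").wait(FunctionName=function_name)
    response = lambda_client.publish_version(FunctionName=function_name)
    return response["Version"]


# Usage with a real client (requires boto3 and AWS credentials):
#   import boto3
#   version = enable_snapstart_and_publish(boto3.client("lambda"), "my-function")
```

Invocations benefit from the snapshot only when they target the published version or an alias pointing to it.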

Observability

Use the out-of-the-box observability facilities provided by AWS Lambda to investigate whether your functions or user experience are affected by cold starts and to identify the most impactful optimization areas. Monitoring built-in metrics such as INIT duration, invocation duration, and error rates is crucial for identifying bottlenecks and refining the function for optimal performance and cost-effectiveness. Use the following metrics:

- INIT duration: The INIT duration metric, found in the REPORT section of function logs, measures the time taken for the function to initialize and become ready to handle invocation.

- REPORT Message: Lambda reports total invocation time, including initialization, in the REPORT log message at the end of each invocation. Monitoring this metric helps identify potential bottlenecks within the function code.

- Error Rates: Monitoring error rates helps identify issues within the function, supporting reliability and stability.

- Concurrency Metrics: Concurrency metrics help understand if a function is hitting the concurrency limits that can contribute to potential increases in cold start durations and throttling.
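As a rough sketch of working with the INIT duration metric, the snippet below scans REPORT log lines and treats any line that carries an `Init Duration` field as a cold start. The helper names are introduced here for illustration, and the exact spacing of fields in real log lines may differ, so the pattern matches only the field itself.

```python
import re

INIT_DURATION = re.compile(r"Init Duration: ([\d.]+) ms")


def init_duration_ms(report_line):
    """Return the Init Duration in ms, or None if the line has no such field."""
    match = INIT_DURATION.search(report_line)
    return float(match.group(1)) if match else None


def cold_start_ratio(log_lines):
    """Fraction of REPORT lines that include an Init Duration field."""
    reports = [line for line in log_lines if line.startswith("REPORT")]
    if not reports:
        return 0.0
    cold = sum(1 for line in reports if init_duration_ms(line) is not None)
    return cold / len(reports)
```

Feeding exported log lines into `cold_start_ratio` gives a quick estimate of how often cold starts occur for a function, which you can compare against the share of requests your latency targets can tolerate.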

Conclusion

In this post, you’ve learned what Lambda cold starts are, why they occur, and which techniques help reduce them. While cold starts commonly affect less than 1% of requests, understanding their nature and implementing appropriate remediation strategies early can help minimize their impact in the most latency-sensitive applications.