AWS Storage Blog

Mountpoint for Amazon S3 CSI driver v2: Accelerated performance and improved resource usage for Kubernetes workloads

Amazon S3 is the best place to build data lakes because of its durability, availability, scalability, and security. In 2023, we introduced Mountpoint for Amazon S3, an open source file client that allows Linux-based applications to access S3 objects through a file API. Shortly after, we took this one step further with the Mountpoint for Amazon S3 Container Storage Interface (CSI) driver for containerized applications. This enabled you to access S3 objects from your Kubernetes applications through a file system interface.

Users like you appreciated the high aggregate throughput that this CSI driver offered. However, you asked us for a way to run Mountpoint processes, not on the host but inside containers, and without elevated root permissions. This redesign would have three key benefits. First, it would free up systemd resources of the host that can instead be used for other operational needs. Second, it would let pods on a node share the Mountpoint pod and its cache. By using the new caching capabilities in Mountpoint for Amazon S3 CSI driver v2, you can finish large-scale financial simulation jobs up to 2x faster by eliminating the overhead of multiple pods individually caching the same data. Third, this design would be compatible with Security-Enhanced Linux (SELinux) enabled Kubernetes environments such as Red Hat OpenShift. Therefore, we delivered the first major upgrade and launched Mountpoint for Amazon S3 CSI driver v2.

In this post, we dive into the core components of this driver, the key benefits of using it to access S3 from your Kubernetes applications, and a real-world illustration on how to use it.

How we built the Mountpoint for Amazon S3 CSI driver v2

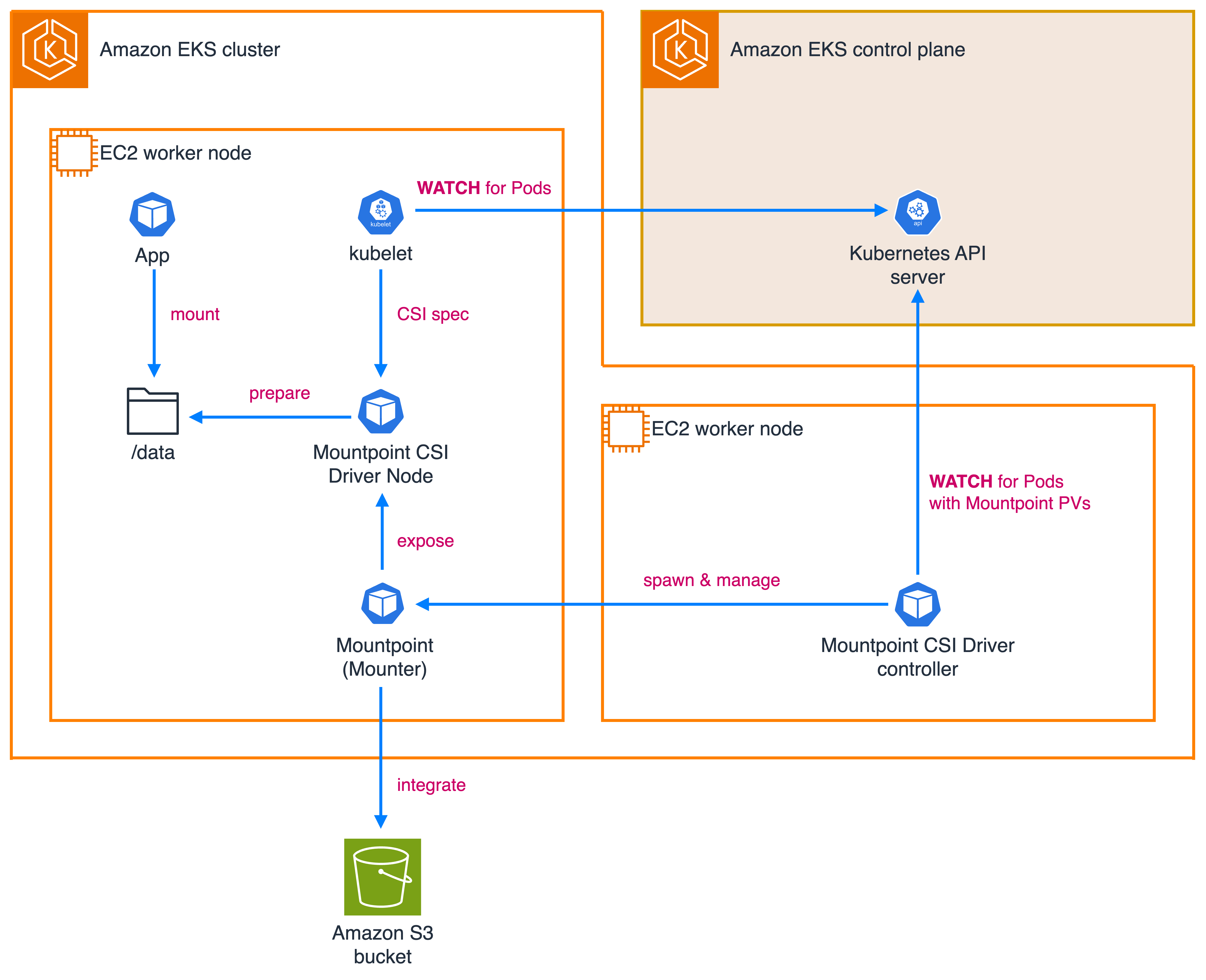

We built this CSI driver with the following three main components, also shown in the following figure:

- CSI driver node: This component is deployed as a DaemonSet on each node. It implements the CSI Node Service RPC and handles mount operations. It communicates with the kubelet and manages the lifecycle of Mountpoint instances.

- CSI driver controller: This component schedules a Mountpoint pod on the appropriate node for each workload pod that uses S3 volumes. It uses a custom resource called

MountpointS3PodAttachmentto track which workloads are assigned to which Mountpoint pods. - CSI driver Mounter/Mountpoint pod: These are the pods that actually run Mountpoint instances. They receive mount options from the CSI Driver Node and spawn Mountpoint processes within their containers.

Overview of the key benefits

Mountpoint for Amazon S3 CSI driver v2 offers the following key benefits:

- Efficient resource usage for the nodes on your Kubernetes cluster as pods on the same Amazon EC2 instance (specifically a node) have the ability to share a common Mountpoint pod.

- Optimized performance and reduced cost for repeatedly accessed data when pods share the local cache.

- Streamlined credential management with EKS Pod Identity by Amazon Elastic Kubernetes Service (Amazon EKS).

- Compatibility with Security-Enhanced Linux (SELinux) requirements, enabling regulatory compliance and supporting Red Hat OpenShift environments.

- Improved observability with Mountpoint pod logs now available through standard Kubernetes command line tools such as

kubectl logs. You can also enable Amazon CloudWatch integration for centralized logging with the CloudWatch Observability EKS add-on.

In the following section, we dive deep into how this driver improves resource usage, accelerates performance, and streamlines credential management.

Efficient resource usage for the nodes on your Kubernetes cluster

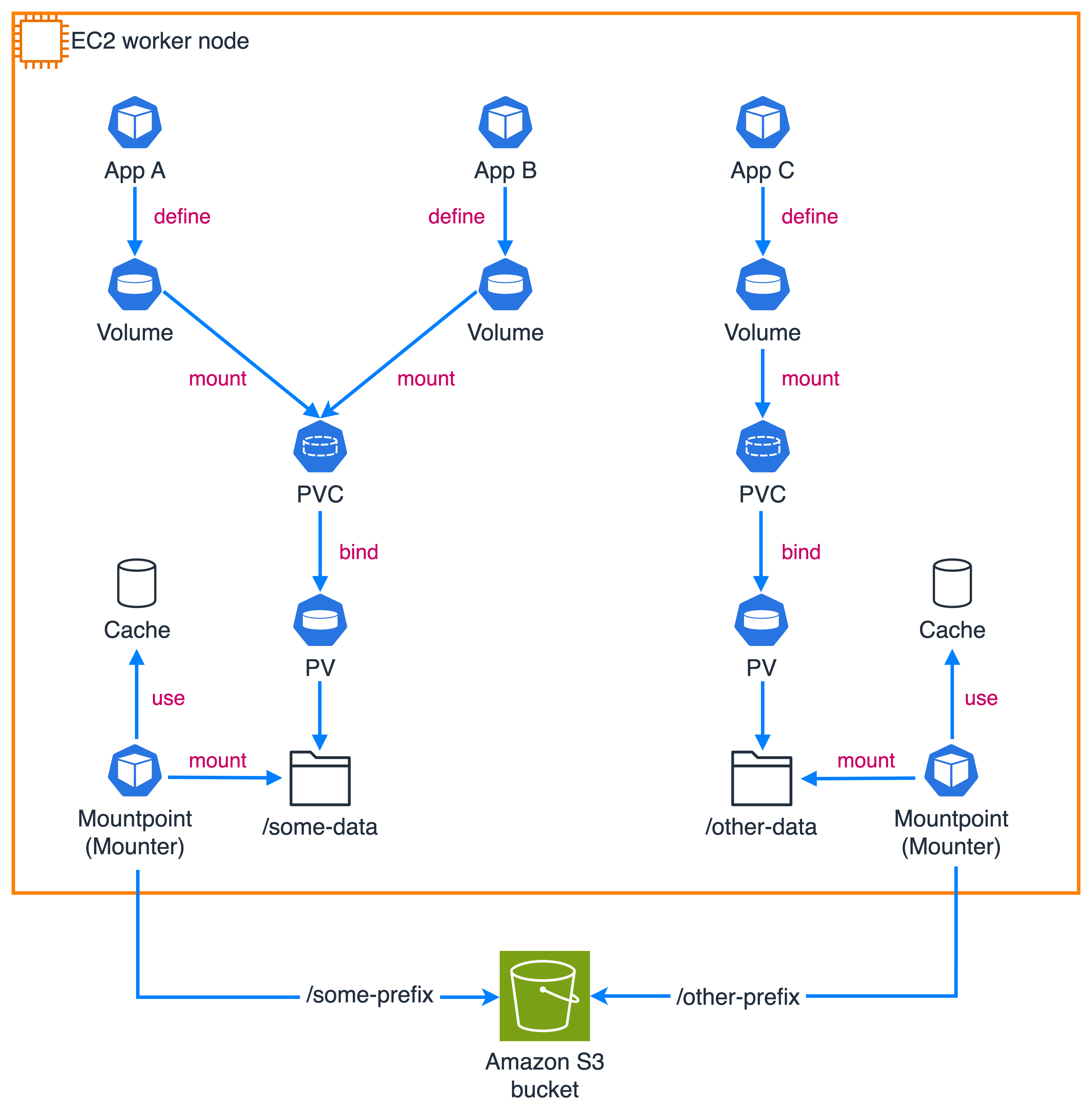

Mountpoint for Amazon S3 CSI driver v2 allows you to run a single Mountpoint instance and shares it among multiple worker pods (as shown in the following figure) on the same node when those pods have identical configuration parameters such as namespace, service account name, or the source of authentication. This improves resource usage in your Kubernetes clusters so that you can launch more pods per node.

Optimized performance and reduced cost for repeatedly accessed data

The driver allows you to locally cache repeatedly accessed data and share the cache across pods on the same node (as shown in the preceding figure). When you configure caching, it automatically creates the cache folder for you. It also lets you control the cache size and storage characteristics, and you can choose to cache on either an emptyDir volume (using the node’s default storage medium) or a generic ephemeral volume. By using the new caching capabilities in Mountpoint for Amazon S3 CSI driver v2, you can finish large-scale financial simulation jobs up to 2x faster by eliminating the overhead of multiple pods individually caching the same data.

Streamlined credential management with EKS Pod Identity support

In this major upgrade, we also introduced support for EKS Pod Identity, a simpler and more scalable alternative to IAM Roles for Service Accounts (IRSA) to manage access policies across EKS clusters, including cross-account access. EKS Pod Identity can be installed as an Amazon EKS add-on.

When you use EKS Pod identity, you do not need to create a separate OpenID Connector (OIDC) provider per cluster. You also do not need the namespace and the service account to already exist, and so this further streamlines setup and configuration (for example for tenant onboarding). Furthermore, EKS Pod Identity supports attaching session tags to the temporary credentials associated with a service account, thus allowing Attribute-based access control (ABAC). Pod Identity provides tags for namespace session, kubernetes-service-account or eks-cluster-name so you can control access at the desired levels of granularity and segments.

The driver can be configured to use two different types of credentials:

- Driver-level credentials, where a single set of permissions associated with the service account of the driver is used for pods in the cluster.

- Pod-level credentials, where the credentials associated with each pod’s service account are used to configure access permissions for that pod.

The latter option, in combination with the credentials associated using EKS Pod Identity and its session tags features, allows you to create scalable, multi-tenant solutions.

For example, with EKS Pod Identity, you can define clauses in AWS Identity and Access Management (IAM) policies attached to the IAM role associated with a service account using these session tags:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::some-bucket/s3mp-apps-data/shared/*",

"arn:aws:s3:::some-bucket/s3mp-apps-data/${aws:PrincipalTag/kubernetes-namespace}/*"

]

}

]

}Example usage patterns for Mountpoint for Amazon S3 CSI driver v2

In this section, we walk through an example that demonstrates the driver in action.

The example assumes that the driver is installed and configured to access the corresponding S3 bucket and prefixes using EKS Pod Identity or IRSA.

Creating a PersistentVolume

To allow applications to handle the files in the S3 bucket as if they were in a local directory, we must create a PersistentVolume (because the driver, at the time of writing this post, only supports Kubernetes static provisioning):

apiVersion: v1

kind: PersistentVolume

metadata:

name: s3mp-data-pv

spec:

storageClassName: '' # Required for static provisioning

capacity:

storage: 1Gi # Ignored, required

accessModes:

- ReadWriteMany

claimRef: # To ensure no other PVCs can claim this PV

namespace: apps

name: s3mp-data-pvc

mountOptions:

- region: eu-central-1

- prefix data/

csi:

driver: s3.csi.aws.com # Required

volumeHandle: my-s3-data-volume # Must be unique

volumeAttributes:

bucketName: mp-s3-bucket-<some unique suffix>

cache: emptyDir

cacheEmptyDirSizeLimit: 1Gi

cacheEmptyDirMedium: MemoryThe PersistentVolume defines:

- Its storage capacity (necessitated by CSI spec, but unused)

- The access modes

- The bucket (name and region) to use

- The size and location of the cache to be used by the driver Mountpoint pods

Using the PersistentVolume

We can now create an application that uses the preceding PersistentVolume:

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: s3mp-data-pvc

namespace: apps

spec:

storageClassName: ''

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1Gi

volumeName: s3mp-data-pv

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: some-app

namespace: apps

spec:

replicas: 2

selector:

matchLabels:

name: some-app

template:

metadata:

labels:

name: some-app

spec:

nodeName: ip-10-0-82-168.eu-central-1.compute.internal

containers:

- name: app

image: busybox

command: ['sh', '-c', 'trap : TERM INT; sleep infinity & wait']

resources:

...

volumeMounts:

- name: data

mountPath: /data

volumes:

- name: data

persistentVolumeClaim:

claimName: s3mp-data-pvcThe preceding code defines:

- A PersistentVolumeClaim that uses the PersistentVolume

- The mount storage capacity (needed by CSI spec, but unused)

- Its

storageClassNamethat must match the one defined on the PersistentVolume - An application that mounts the PersistentVolumeClaim under

/data

We forced both application replicas onto the same worker node to demonstrate the caching capability.

Verifying the deployment

The created PV and PVC and the deployed application would look similar to the following (with some data abridged for clarity):

$ kubectl get pods -n apps -o wide NAME READY STATUS AGE IP NODE some-app-556c9447fd-sjf4k 1/1 Running 3m14s 10.0.94.129 ip-10-0-85-106.eu-central-1.compute.internal some-app-556c9447fd-wkpbv 1/1 Running 3m14s 10.0.89.177 ip-10-0-85-106.eu-central-1.compute.internal $ kubectl get pv,pvc -n apps NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE persistentvolume/s3mp-data-pv 1Gi RWX Retain Bound apps/s3mp-data-pvc <unset> 4m NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE persistentvolumeclaim/s3mp-data-pvc Bound s3mp-data-pv 1Gi RWX

When the application is deployed, the driver creates a Mountpoint pod on each node where the pods are running (a single one in this case) to set up the pod sharing to allow other pods on the node to access the local cache.

$ kubectl get pods -n mount-s3 -o wide NAME READY STATUS AGE IP NODE mp-xgscz 1/1 Running 3m48s 10.0.94.38 ip-10-0-85-106.eu-central-1.compute.internal

Using the cache

Accessing one of the files in the mounted bucket and its data/ prefix allows us to see the cache in action, downloading and counting words in a 2.5MB file:

$ POD_ID=$(kubectl get pod -n apps -l name=some-app -o jsonpath='{.items[0].metadata.name}')

$ echo ${POD_ID}

some-app-556c9447fd-sjf4k

$ kubectl exec -it -n apps ${POD_ID} -- time wc /data/some-file

129419 130195 2424064 /data/some-file

real 0m 0.22s

user 0m 0.01s

sys 0m 0.00s

$ POD_ID=$(kubectl get pod -n apps -l name=some-app -o jsonpath='{.items[1].metadata.name}')

$ echo ${POD_ID}

some-app-556c9447fd-wkpbv

$ kubectl exec -it -n apps ${POD_ID} -- time wc /data/some-file

129419 130195 2424064 /data/some-file

real 0m 0.09s

user 0m 0.01s

sys 0m 0.00s

Considering that the word counting on the same instance takes roughly the same amount of time, you can see the difference that the cache makes, as indicated by the real times in the outputs of the preceding commands.

Upgrading from Mountpoint for Amazon CSI driver v1 to v2

To install the driver, you can follow our installation guide. If you are upgrading from v1, please note the new release introduces changes in how volumes are configured. We recommend going over the list of configuration changes prior to upgrading your CSI driver, as you will need to make these changes when you upgrade.

At the time of writing this blog post, the Mountpoint for Amazon S3 CSI driver v2 has some constraints with node autoscalers such as Karpenter and Cluster Autoscaler. For more details, go to this GitHub issue.

Conclusion

Mountpoint for Amazon S3 CSI driver v2 provides key enhancements in how Kubernetes applications interact with S3 data. These enhancements include the pod sharing feature for improved resource usage and faster performance, SELinux support, and streamlined logging with kubectl and permissions management using Amazon EKS Pod Identity.

Whether you’re building data analytics pipelines, machine learning (ML) workflows on Amazon EKS, or other applications that need access to S3 data, through a file like interface, Mountpoint for Amazon S3 CSI driver v2 offers an efficient solution.

We’re excited to see how you’ll use this new capability in your Kubernetes environments. The driver is available today on GitHub, and we welcome your feedback and contributions to help shape its future development. Thank you for reading this post. If you have comments or questions, don’t hesitate to leave them in the comments section.