AWS Storage Blog

How Uniphore achieved 30% cost savings by modernizing Windows servers on AWS

Uniphore is the first built-for-scale, AI-native company that infuses AI into every part of the enterprise experience. Uniphore’s enterprise-class multimodal AI and data platform unifies voice, video, text, and data by combining generative AI, Knowledge AI, Emotion AI, and workflow automation as a trusted co-pilot. Uniphore was running one of their core business applications on legacy Windows Server infrastructure, which faced scalability and performance challenges as the business grew. Rising maintenance costs and security concerns about end-of-support operating systems meant that Uniphore needed to modernize their infrastructure while maintaining business continuity.

Uniphore used Amazon Web Services (AWS) to modernize their Windows workloads through containerization and cloud-native services. By using Amazon Elastic Kubernetes Service (Amazon EKS) for container orchestration and Amazon Elastic Compute Cloud (Amazon EC2) for compute, combined with Amazon Elastic File System (Amazon EFS) and Amazon Simple Storage Service (Amazon S3) for storage, Uniphore achieved 30% cost savings while significantly improving their operational capabilities. They migrated from Windows on-premises infrastructure to Linux on AWS to optimize their call-center analytics workloads. They found that their core workloads for training and fine-tuning large language models (LLMs) performed significantly better on Linux-based systems, which let them build a unified, high-performance ecosystem tailored for AI/ML operations.

In this post, we explore how Uniphore approached their modernization journey and share practical insights for organizations considering similar transformations. We walk through an approach to modernizing legacy Windows workloads through containerization, efficient data migration, and cloud-native services. Whether you’re planning a similar transformation or evaluating modernization options, this real-world example shows how to overcome common migration challenges while achieving tangible business benefits such as improved scalability, enhanced security, and reduced operational overhead.

Business challenge

Uniphore’s U-Assist platform was originally hosted on premises across 50 bare-metal Windows Server 2008 R2 instances. As the business expanded rapidly, these instances began showing significant strain. Frequent storage shortages led to application cache failures and operational disruptions. With data growing beyond 60 TB and aging hardware reaching its limits, maintenance costs rose while system performance degraded.

These applications weren’t designed for scalability and lacked vendor support, making it increasingly difficult to meet growing business demands. Security was another significant concern: because Windows Server 2008 R2 had reached end of support, it no longer received security updates or compliance support. These challenges, combined with the substantial operational overhead of frequent manual interventions, made it clear that modernization was necessary.

Solution implemented

Uniphore implemented a comprehensive modernization strategy using AWS services across three key areas:

1. Application modernization

The team modernized the application stack by rewriting the Java-based services to be operating system (OS)-agnostic and containerizing them with Docker. This involved refactoring Windows-specific dependencies, such as file system paths, and externalizing configuration so that the same container image could run in any environment. The containerized application was then deployed to Amazon EKS, which provided portability across Kubernetes environments and resilience through automatic scaling, self-healing, and rolling updates.
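Uniphore’s services are written in Java; the following Python sketch only illustrates the refactoring pattern described above: replace a hard-coded Windows path with a location read from the environment, and build file paths with an OS-aware path library. The environment variable `UASSIST_DATA_ROOT`, the default mount point `/mnt/efs/uassist`, and the `cache` directory layout are hypothetical names chosen for illustration.

```python
import os
from pathlib import Path
from typing import Mapping, Optional

# Hypothetical names for illustration; not taken from Uniphore's code.
DATA_ROOT_ENV = "UASSIST_DATA_ROOT"
DEFAULT_DATA_ROOT = "/mnt/efs/uassist"  # assumed EFS mount path inside the container


def data_root(env: Optional[Mapping[str, str]] = None) -> Path:
    """Resolve the data directory from configuration instead of a
    hard-coded Windows path such as D:\\uassist\\data."""
    env = os.environ if env is None else env
    return Path(env.get(DATA_ROOT_ENV, DEFAULT_DATA_ROOT))


def cache_file(call_id: str, env: Optional[Mapping[str, str]] = None) -> Path:
    # The "/" operator joins components with the host OS separator,
    # so the same code works on Windows and in a Linux container.
    return data_root(env) / "cache" / f"{call_id}.json"


if __name__ == "__main__":
    print(cache_file("call-123"))
```

Setting `UASSIST_DATA_ROOT` in the Kubernetes Pod spec (for example, from a ConfigMap) would then change the location without rebuilding the image.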

2. Custom data migration solution

Uniphore developed a custom migration solution based on a Type 2 hypervisor. Running the AWS DataSync agent as a virtual machine on this hypervisor let the team host the agent on existing hardware and connect it directly to the Windows-based storage. Over a dedicated high-speed internet connection, the data was transferred to Amazon EFS, which offered better reliability and availability than the legacy storage.
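The post does not publish Uniphore’s DataSync task configuration. As a hedged sketch, the following builds a request for the DataSync `CreateTask` API (`create_task` in boto3) with two options that match the incremental, checksum-verified transfers described in Phase 2 of this post: `TransferMode="CHANGED"` copies only new or modified data on each run, and `VerifyMode="ONLY_FILES_TRANSFERRED"` compares source and destination checksums of the files that were copied. The location ARNs and task name are placeholders, and the function only builds the request; it does not call AWS.

```python
from typing import Any, Dict


def datasync_task_request(source_location_arn: str,
                          destination_location_arn: str,
                          name: str,
                          bytes_per_second: int = -1) -> Dict[str, Any]:
    """Build keyword arguments for DataSync CreateTask.

    Usage (not executed here): boto3.client("datasync").create_task(**request)
    """
    if bytes_per_second != -1 and bytes_per_second <= 0:
        raise ValueError("bytes_per_second must be -1 (unlimited) or positive")
    return {
        "SourceLocationArn": source_location_arn,            # e.g. SMB location on the Windows file server
        "DestinationLocationArn": destination_location_arn,  # e.g. Amazon EFS location
        "Name": name,
        "Options": {
            "TransferMode": "CHANGED",               # incremental: only new or changed data
            "VerifyMode": "ONLY_FILES_TRANSFERRED",  # checksum-compare the copied files
            "BytesPerSecond": bytes_per_second,      # -1 means no bandwidth limit
        },
    }
```

Running the same task repeatedly with `TransferMode="CHANGED"` is what makes an incremental sync practical before a final cutover run.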

3. Cloud infrastructure setup

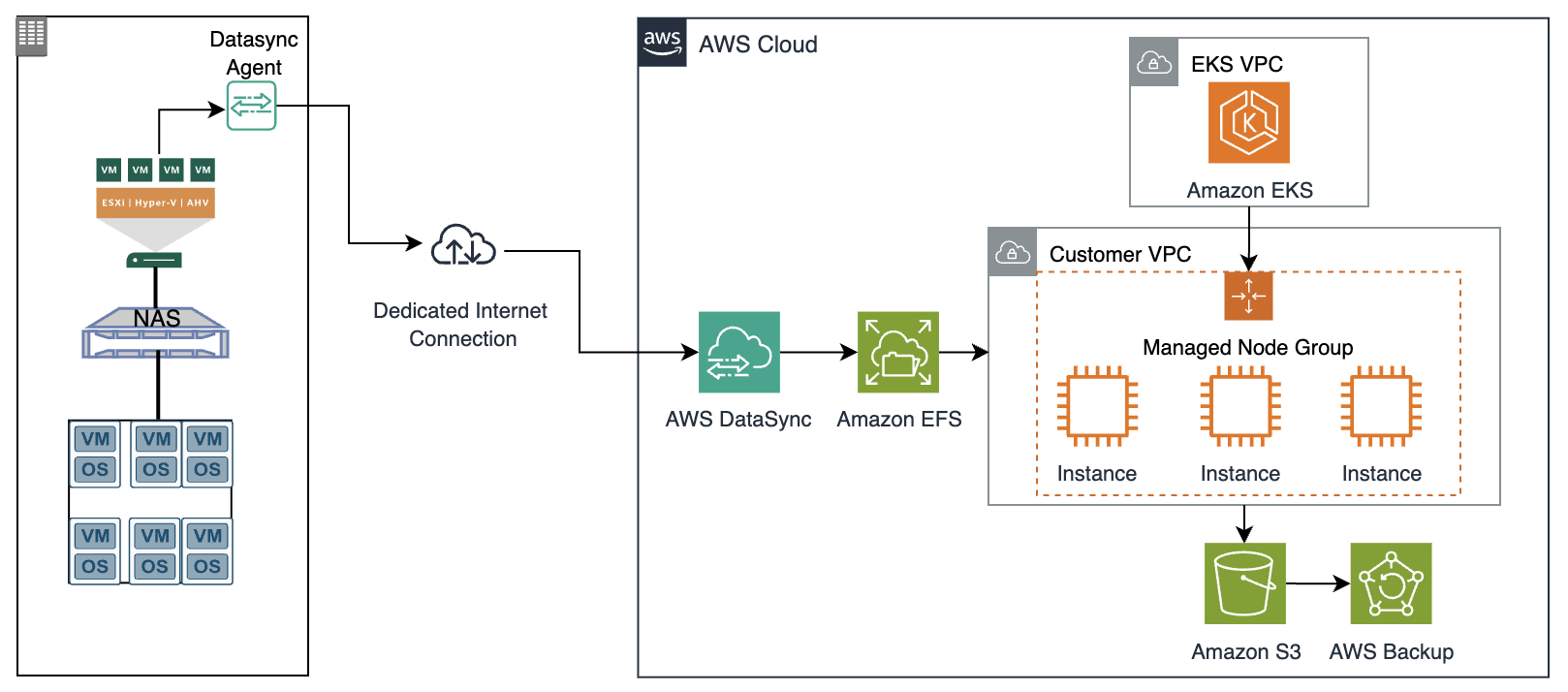

The modernized infrastructure uses Amazon EC2 and Amazon EKS for compute and container orchestration, with Amazon Virtual Private Cloud (Amazon VPC) providing secure network isolation. Storage requirements are met by Amazon EFS and Amazon S3. As shown in Figure 1, DataSync transferred the data from the legacy servers to Amazon EFS, where it was consumed by the application running on Amazon EKS. The application’s processed output was stored in Amazon S3, and the processed data was backed up using AWS Backup.

Figure 1: Uniphore’s data migration architecture with DataSync and AWS services

Technical implementation

To make the transition from legacy infrastructure to a modern, cloud-native environment seamless and free of disruption, Uniphore executed the migration in three structured phases. Each phase focused on minimizing risk, preserving data integrity, and maintaining business continuity.

Phase 1: Hypervisor and DataSync agent deployment

The team began by setting up a Type 2 hypervisor (for example, VMware Workstation or Hyper-V) on existing hardware to host the DataSync agent. This setup provided secure connectivity between the on-premises Windows Server instances and the AWS environment.

Key actions in this phase included:

- Provisioning and configuring the hypervisor to run the DataSync agent in a stable, isolated environment.

- Optimizing the network layer with dedicated high-speed internet and VPN tunnels to maximize throughput and reduce latency.

- Validating connectivity between on-premises storage and Amazon EFS targets to confirm readiness for data transfer.
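A first connectivity check can be as simple as confirming that a host on the same network as the agent can open TCP connections to the endpoints the migration depends on. The sketch below assumes two such dependencies: port 445 (SMB) on the Windows file server and port 443 to the DataSync service endpoint. The hostnames in the usage block are hypothetical, and a successful TCP connection does not by itself prove that the agent can activate or authenticate.

```python
import socket
from typing import Dict, Iterable, Tuple

Endpoint = Tuple[str, int]


def check_endpoints(endpoints: Iterable[Endpoint],
                    timeout: float = 3.0) -> Dict[Endpoint, bool]:
    """Report whether a TCP connection can be opened to each (host, port)."""
    results: Dict[Endpoint, bool] = {}
    for host, port in endpoints:
        try:
            # create_connection resolves the host and completes the TCP handshake.
            with socket.create_connection((host, port), timeout=timeout):
                results[(host, port)] = True
        except OSError:  # refused, unreachable, DNS failure, or timeout
            results[(host, port)] = False
    return results


if __name__ == "__main__":
    # Hypothetical hosts; run from a machine on the same network as the agent.
    checks = [
        ("fileserver01.corp.example", 445),         # SMB share on the Windows server
        ("datasync.us-east-1.amazonaws.com", 443),  # DataSync endpoint (Region assumed)
    ]
    for endpoint, ok in check_endpoints(checks).items():
        print(endpoint, "OK" if ok else "UNREACHABLE")
```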

Phase 2: Secure data migration and validation

With the infrastructure in place, the team migrated more than 60 TB of structured and unstructured data, using DataSync to orchestrate and monitor the transfers.

To verify data integrity and completeness, the team took the following measures:

- Transfers were performed incrementally, with checksum validations on both ends.

- Transfer jobs were monitored in real time with DataSync metrics in Amazon CloudWatch.

- Performance tuning was applied iteratively to optimize throughput, particularly for large files and deep directory structures.

- A separate test environment in AWS was used to validate the migrated data and application behavior prior to final cutover.
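DataSync can verify checksums itself, but a team may also want an independent spot check before cutover. A minimal sketch, assuming both the source share and the EFS file system are mounted on a validation host: hash every file under each root with SHA-256 and compare the two manifests. Relative paths are normalized to forward slashes so Windows and Linux listings line up. This illustrates the technique and is not a description of Uniphore’s tooling.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def build_manifest(root: str) -> Dict[str, str]:
    """Map each file's relative path (forward slashes) to its SHA-256 hex digest."""
    base = Path(root)
    manifest: Dict[str, str] = {}
    for path in sorted(base.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256()
            with path.open("rb") as handle:
                # Read in 1 MiB chunks so large files don't need to fit in memory.
                for chunk in iter(lambda: handle.read(1024 * 1024), b""):
                    digest.update(chunk)
            manifest[path.relative_to(base).as_posix()] = digest.hexdigest()
    return manifest


def compare_manifests(source: Dict[str, str], dest: Dict[str, str]) -> Dict[str, List[str]]:
    """List files missing at the destination, unexpected extras, and content mismatches."""
    return {
        "missing": sorted(source.keys() - dest.keys()),
        "unexpected": sorted(dest.keys() - source.keys()),
        "mismatched": sorted(k for k in source.keys() & dest.keys() if source[k] != dest[k]),
    }
```

An empty result in all three lists indicates that every source file arrived with identical content.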

Phase 3: Production cutover and application deployment

The final phase involved the cutover to production workloads hosted on AWS. The legacy Windows-based application stack was containerized using Docker and deployed to Amazon EKS, allowing the platform to scale dynamically and run independently of the underlying OS.

Key steps in this phase:

- Amazon EKS clusters were configured with autoscaling Amazon EC2 node groups to balance cost and performance.

- Amazon VPC was set up with custom routing, security groups, and network access control lists (network ACLs) to secure both east-west (service-to-service) and north-south (inbound and outbound) traffic.

- Application configurations and secrets were managed using AWS Systems Manager Parameter Store and AWS Secrets Manager.

- A/B testing and gradual traffic shifting enabled a zero-downtime deployment.

- Legacy systems were decommissioned only after full validation in the cloud environment.
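The post does not say how traffic was shifted. One common approach, sketched here under that assumption, is weighted routing with sticky assignment: hash a stable request key (such as a user or session ID) into one of 100 buckets and send buckets below the current weight to the new platform. Raising the weight step by step (the 5, 25, 50, and 100 percent steps below are purely illustrative) moves traffic gradually, and a client already on the new platform stays there as the weight grows. On AWS, weights are often set on Application Load Balancer weighted target groups rather than in application code.

```python
import hashlib


def route(request_key: str, new_weight_percent: int) -> str:
    """Return "new" or "legacy"; the same key always maps to the same bucket."""
    if not 0 <= new_weight_percent <= 100:
        raise ValueError("new_weight_percent must be between 0 and 100")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return "new" if bucket < new_weight_percent else "legacy"


if __name__ == "__main__":
    # Hypothetical rollout schedule: share of users sent to the EKS platform.
    users = [f"user-{i}" for i in range(10_000)]
    for weight in (5, 25, 50, 100):
        share = sum(route(u, weight) == "new" for u in users) / len(users)
        print(f"weight {weight:3d}% -> {share:.1%} of users on new platform")
```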

Best practices

The following best practices were adopted:

- Infrastructure as code (IaC) with Terraform: Infrastructure was provisioned using Terraform, enabling version-controlled, repeatable, and auditable deployments.

- CI/CD pipelines: GitLab pipelines were set up for continuous integration and continuous deployment (CI/CD), enabling rapid, safe updates to containerized services.

- Observability: Datadog and CloudWatch were integrated for full-stack monitoring and alerting across compute, storage, and network layers.

- Backup and disaster recovery: AWS Backup policies were enforced for Amazon EFS and Amazon EC2 snapshots, with cross-Region replication configured for critical workloads.
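As an illustration of the backup practice above, the following sketch builds a plan document for the AWS Backup `CreateBackupPlan` API (`create_backup_plan(BackupPlan=...)` in boto3) with a daily rule and a cross-Region copy action. The schedule, the 35-day retention, the vault names, and the destination vault ARN are assumptions, not Uniphore’s settings. Assigning EFS file systems and EC2 instances to the plan is a separate step (a backup selection).

```python
from typing import Any, Dict


def backup_plan(plan_name: str, vault_name: str, dr_vault_arn: str,
                retention_days: int = 35) -> Dict[str, Any]:
    """Build a BackupPlan document with a daily rule and a cross-Region copy.

    Usage (not executed here):
        boto3.client("backup").create_backup_plan(BackupPlan=plan)
    """
    if retention_days < 1:
        raise ValueError("retention_days must be at least 1")
    return {
        "BackupPlanName": plan_name,
        "Rules": [
            {
                "RuleName": "daily",
                "TargetBackupVaultName": vault_name,        # vault in the primary Region
                "ScheduleExpression": "cron(0 5 * * ? *)",  # every day at 05:00 UTC
                "Lifecycle": {"DeleteAfterDays": retention_days},
                "CopyActions": [
                    {
                        # Vault in a second Region receives a copy of each recovery point.
                        "DestinationBackupVaultArn": dr_vault_arn,
                        "Lifecycle": {"DeleteAfterDays": retention_days},
                    }
                ],
            }
        ],
    }
```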

Key benefits

The solution delivered the following six key benefits.

1. Scalability and performance

Prior to modernization, Uniphore’s bare-metal servers were rigid, with fixed resource allocations that often led to performance bottlenecks during peak usage.

After the migration:

- Auto Scaling with Amazon EC2 and Amazon EKS allowed the infrastructure to automatically scale up or down based on real-time workload demands.

- This dynamic scalability maintained high availability and consistent performance, even during traffic spikes or batch processing events.

- Application latency dropped significantly due to optimized container orchestration and right-sized compute provisioning.
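The post does not detail Uniphore’s scaling policies. For pod-level scaling on Amazon EKS, one standard mechanism is the Kubernetes Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The sketch below reproduces only that core rule, with hypothetical minimum and maximum replica counts; it omits stabilization windows, and adding EC2 nodes to fit new pods is a separate step handled by node autoscaling.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling rule, clamped to the configured replica bounds."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target scale out to 6.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```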

2. Flexibility and portability

The legacy applications were tightly coupled with a specific Windows OS version (2008 R2), making it difficult to upgrade or rehost.

- Refactoring and containerizing applications allowed Uniphore to make them OS-agnostic and environment-independent.

- The containerized services could now run on any Kubernetes-compatible platform, such as Amazon EKS, self-managed clusters, or even other cloud providers, which preserves vendor neutrality and portability.

- Developers gained the flexibility to test and deploy in isolated environments without dependency conflicts.

3. Enhanced security and compliance

Operating unsupported systems such as Windows Server 2008 R2 posed serious security and compliance risks, such as the following:

- Lack of security patches.

- Incompatibility with modern compliance frameworks such as SOC 2, HIPAA, or ISO 27001.

Post-migration:

- Amazon VPC, AWS Identity and Access Management (IAM) roles, security groups, and Kubernetes role-based access control (RBAC) together improved access control and network segmentation.

- All workloads ran on actively supported OS versions and were protected by AWS security services such as Amazon Inspector, AWS Config, and Amazon GuardDuty.

- AWS Backup and multi-Availability Zone (AZ) architectures provided built-in disaster recovery and business continuity.

4. Efficient data management

Data growth had outpaced the on-premises infrastructure, resulting in storage capacity issues and backup failures.

- Amazon EFS provided a fully managed, elastic NFS file system that scaled automatically with data growth.

- Amazon S3 was used for storing processed data, benefiting from high durability and lifecycle policies to move older data to cost-effective storage classes such as S3 Glacier.

- DataSync enabled the fast and secure transfer of more than 60 TB of data, using checksums and retry mechanisms to preserve data integrity.
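A lifecycle rule like the one described above can be expressed as the `LifecycleConfiguration` argument to the S3 `put_bucket_lifecycle_configuration` call in boto3. In this sketch, the `processed/` prefix, the rule ID, and the 90-day threshold are hypothetical; the `GLACIER` storage class value corresponds to S3 Glacier Flexible Retrieval.

```python
from typing import Any, Dict


def lifecycle_configuration(prefix: str = "processed/",
                            glacier_after_days: int = 90) -> Dict[str, Any]:
    """Build a LifecycleConfiguration that archives objects under a prefix.

    Usage (not executed here):
        boto3.client("s3").put_bucket_lifecycle_configuration(
            Bucket="example-bucket", LifecycleConfiguration=config)
    """
    if glacier_after_days < 0:
        raise ValueError("glacier_after_days must be non-negative")
    return {
        "Rules": [
            {
                "ID": "archive-processed-output",
                "Filter": {"Prefix": prefix},  # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [
                    # GLACIER = S3 Glacier Flexible Retrieval
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }
```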

5. Reduced operational overhead

Managing on-premises infrastructure came with high operational costs, from manual patching and monitoring to capacity planning.

- Post-migration, the team no longer had to worry about hardware maintenance, OS upgrades, or backup scripting.

- IaC using Terraform and automated CI/CD pipelines allowed infrastructure and deployments to be managed more reliably and consistently.

- The engineering team could now focus on product innovation instead of firefighting infrastructure issues.

6. Significant cost savings

One of the most impactful outcomes of this initiative was the 30% reduction in total operational costs.

- Cost savings were achieved by shutting down legacy data center resources, optimizing instance usage with EC2 Auto Scaling and Spot Instances, and right-sizing storage and compute.

- Containerization reduced resource overhead, allowing multiple applications to run efficiently on fewer nodes.

- The ability to scale down unused resources during off-peak hours directly translated to lower monthly cloud bills.
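To see why consolidating containers onto shared nodes reduces node count, consider a simplified first-fit-decreasing packing of CPU requests. This textbook heuristic is chosen for illustration only; it is not how the Kubernetes scheduler places pods, and the workload names, request sizes, and node capacity below are hypothetical.

```python
from typing import Dict, List


def pack_first_fit_decreasing(requests_mcpu: Dict[str, int],
                              node_capacity_mcpu: int) -> List[List[str]]:
    """Place workloads (CPU requests in millicores) on nodes using first-fit decreasing."""
    nodes: List[List] = []  # each entry: [remaining_mcpu, [workload names]]
    # Largest requests first; ties keep their input order (Python's sort is stable).
    for name, request in sorted(requests_mcpu.items(), key=lambda kv: kv[1], reverse=True):
        if request > node_capacity_mcpu:
            raise ValueError(f"{name} needs more CPU than a single node provides")
        for node in nodes:
            if node[0] >= request:  # first node with enough room
                node[0] -= request
                node[1].append(name)
                break
        else:  # no existing node fits: add one
            nodes.append([node_capacity_mcpu - request, [name]])
    return [names for _, names in nodes]


if __name__ == "__main__":
    # Hypothetical workloads that previously each had a dedicated server.
    workloads = {"ingest": 1500, "transcribe": 1000, "analytics": 1000, "api": 500}
    print(pack_first_fit_decreasing(workloads, node_capacity_mcpu=2000))
    # -> [['ingest', 'api'], ['transcribe', 'analytics']]
```

In this toy case, four workloads that each occupied a dedicated server fit on two nodes.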

Conclusion

This post demonstrated how enterprises can successfully modernize legacy Windows Server workloads using AWS services, showcasing Uniphore’s journey from bare-metal servers to a containerized, cloud-native architecture. Through careful planning and implementation of containerization, custom data migration solutions, and modern cloud infrastructure, organizations can achieve significant operational and cost benefits while maintaining business continuity.

Key takeaways from this modernization journey include the following:

- The importance of a phased migration approach to minimize risk and preserve data integrity.

- How containerization can transform legacy Windows applications into portable, scalable workloads.

- Strategies for efficient data migration using AWS DataSync and custom solutions.

- Best practices for implementing security, monitoring, and disaster recovery in the cloud.

Learn more

To begin your own modernization journey, please check these resources: