AWS Spatial Computing Blog

A Maturity Level Framework for Industrial Inspection Report Automation

Introduction

Vision systems are increasingly being deployed for industrial inspection. Whether mounted on drones or ground robots or integrated into fixed and portable monitoring cameras, these systems capture massive volumes of 2D and 3D data that must be processed to yield actionable insights. Advances in computer vision, enhanced more recently by AI and agentic AI, are transforming what was once a human-driven process into an automated workflow capable of generating detailed inspection reports at scale.

Applications span infrastructure inspection (cell towers, refineries, solar farms), tracking site development in mining and construction, and crop yield optimization in agriculture. In each case, algorithms convert terabytes of raw imagery—previously analyzed manually in a time-consuming and error-prone manner—into concise, queryable reports that can even be explored through LLM-based prompts.

This article introduces a framework for assessing the maturity of image processing pipelines designed to automate the transformation of visual data into high-level textual summary reports. While we illustrate the approach using aerial imagery from drone captures, the methodology is broadly applicable to other contexts.

Four proposed stages and relevant AWS services

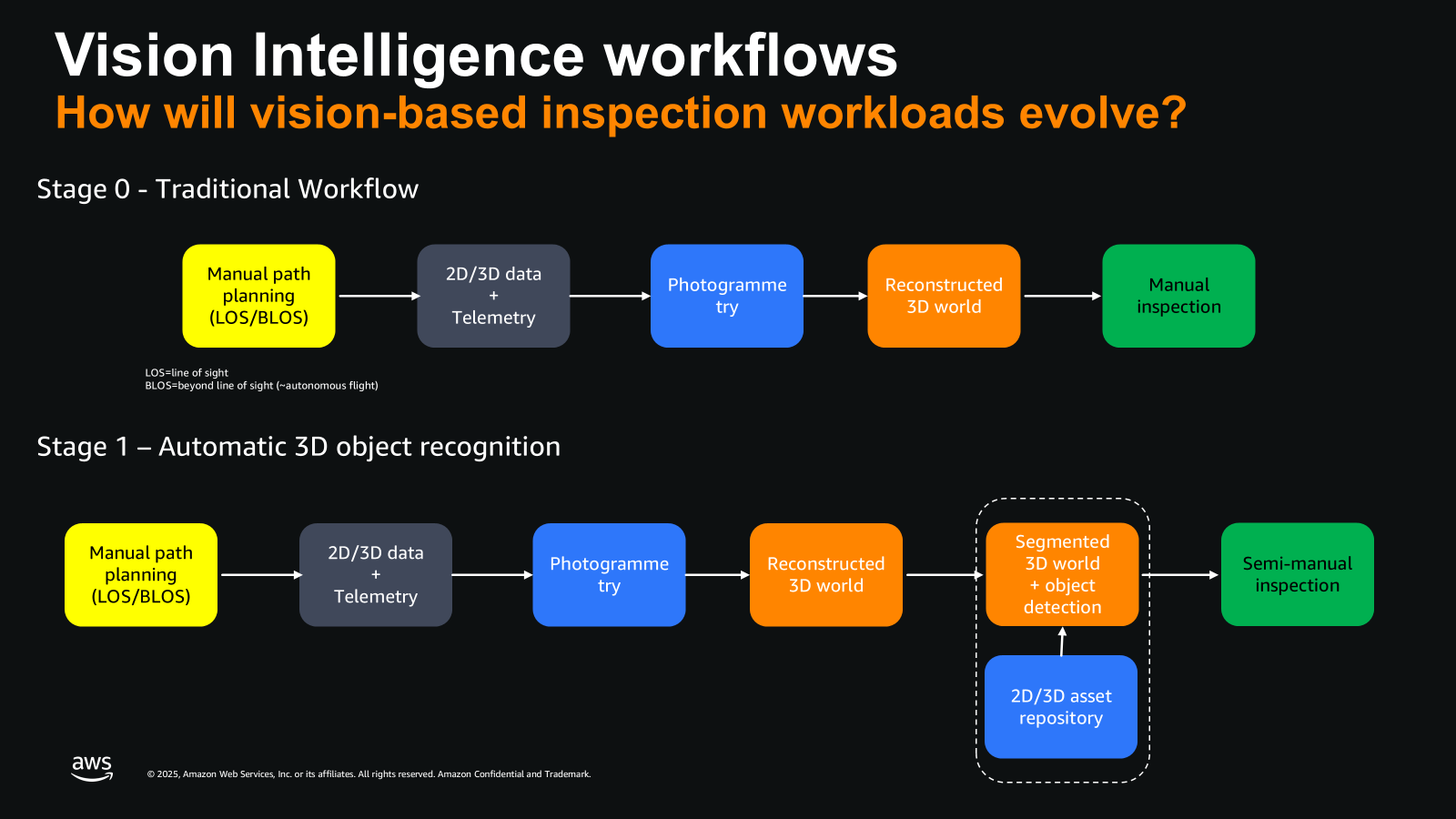

We propose a four-stage framework of increasing complexity, where each stage introduces more AI-driven automation and correspondingly reduces the need for human oversight or validation. Beginning with Stage 0, each subsequent stage builds on the capabilities of the previous one. Stage 3 represents the pinnacle of sophistication, integrating the most advanced combination of computer vision and AI techniques. We see most current implementations at stage 1 and are seeing a trend towards stage 2 and 3 evolutions where the cloud will play a key role. The 4 stages are as follows:

Image describes the vision processing workflows for Stage 0 and Stage 1

Stage 0: Image Capture and Basic Reconstruction

In this initial stage, drones are programmed to follow predetermined paths or to cover specific areas, within visual line of sight or, in some cases, beyond visual line of sight. They capture images, videos, or LiDAR point clouds. This raw data is then processed, using photogrammetric algorithms for imagery and point-cloud registration for LiDAR, to generate a 3D digital twin of the environment that can be used for subsequent analysis.
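To make "cover specific areas" concrete, here is a minimal sketch of a back-and-forth ("lawnmower") waypoint pattern over a rectangular area, with flight lines spaced so that adjacent image footprints overlap, which photogrammetric reconstruction relies on. It assumes a nadir-pointing pinhole camera, flat terrain, and a local metric coordinate frame; the function and parameter names are hypothetical, and real flight-planning tools also account for terrain, wind, and airspace rules.

```python
import math
from typing import List, Tuple

def footprint_width_m(altitude_m: float, sensor_width_mm: float,
                      focal_length_mm: float) -> float:
    """Ground width covered by one nadir image (pinhole model, flat terrain)."""
    return altitude_m * sensor_width_mm / focal_length_mm

def plan_survey(width_m: float, height_m: float, altitude_m: float,
                sensor_width_mm: float, focal_length_mm: float,
                side_overlap: float = 0.7) -> List[Tuple[float, float]]:
    """Hypothetical planner: lawnmower waypoints covering a width_m x height_m area.

    Flight lines run parallel to the y axis. Adjacent lines are spaced so that
    neighbouring image footprints overlap by `side_overlap` (0.7 = 70 %).
    """
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be in [0, 1)")
    spacing = footprint_width_m(altitude_m, sensor_width_mm, focal_length_mm) * (1.0 - side_overlap)
    # One more line than the number of gaps, so both edges of the area are flown.
    n_lines = math.ceil(width_m / spacing) + 1
    waypoints: List[Tuple[float, float]] = []
    for i in range(n_lines):
        x = min(i * spacing, width_m)  # clamp the last line to the area edge
        # Alternate direction on each line to avoid empty transit legs.
        if i % 2 == 0:
            waypoints += [(x, 0.0), (x, height_m)]
        else:
            waypoints += [(x, height_m), (x, 0.0)]
    return waypoints

# 100 m altitude, 10 mm sensor width, 10 mm lens: 100 m footprint, 50 % side overlap.
print(plan_survey(100, 200, 100, 10, 10, side_overlap=0.5))
```

Higher overlap means more flight lines and images, which improves reconstruction robustness at the cost of flight time and data volume.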

Imagine a construction site being scanned by a drone or another robotic system. The goal is to generate a first-order 3D model (a meshed wireframe enriched with texture data) that can then be explored and navigated digitally from multiple angles. At this stage, the relevant AWS services are Amazon S3, to store the captured data, and Amazon EC2, to run the ingestion, reconstruction, and visualization algorithms.

Stage 1: Asset Detection and Localization

Building upon Stage 0, this stage incorporates computer vision algorithms capable of detecting and localizing specific assets within the digital twin. This process typically relies on a 2D/3D repository of images or 3D objects for identification. While this approach reduces manual work in the final analysis, significant human intervention is still required.
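One common way to identify an asset against such a repository is nearest-neighbour matching on feature vectors (embeddings). The sketch below assumes that each repository entry and each detected object has already been converted to a fixed-length embedding by some vision model (not shown). It labels a detection with the most similar repository entry by cosine similarity and leaves it unlabeled, for human review, when the best score falls below a threshold. The function names, the 0.8 threshold, and the labels are illustrative assumptions.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def identify(embedding: Sequence[float],
             repository: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> Tuple[Optional[str], float]:
    """Return (label, score) of the best repository match, or (None, score)
    when nothing is similar enough and the detection needs human review."""
    best_label, best_score = None, -1.0
    for label, ref in repository.items():
        score = cosine_similarity(embedding, ref)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < threshold:
        return None, best_score
    return best_label, best_score

# Hypothetical repository of reference embeddings for cell-tower equipment.
repository = {"antenna_panel": [1.0, 0.0, 0.0], "microwave_dish": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], repository))
print(identify([0.0, 0.0, 1.0], repository))
```

The unmatched case is where the human intervention mentioned above comes in: low-confidence detections are routed to an analyst rather than silently labeled.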

In this stage, Amazon EC2, Amazon S3, and AWS database services can be used. Depending on the number of objects to be recognized and the complexity of the 3D scenes, additional AWS services for scaling, such as Elastic Load Balancing, can be added.

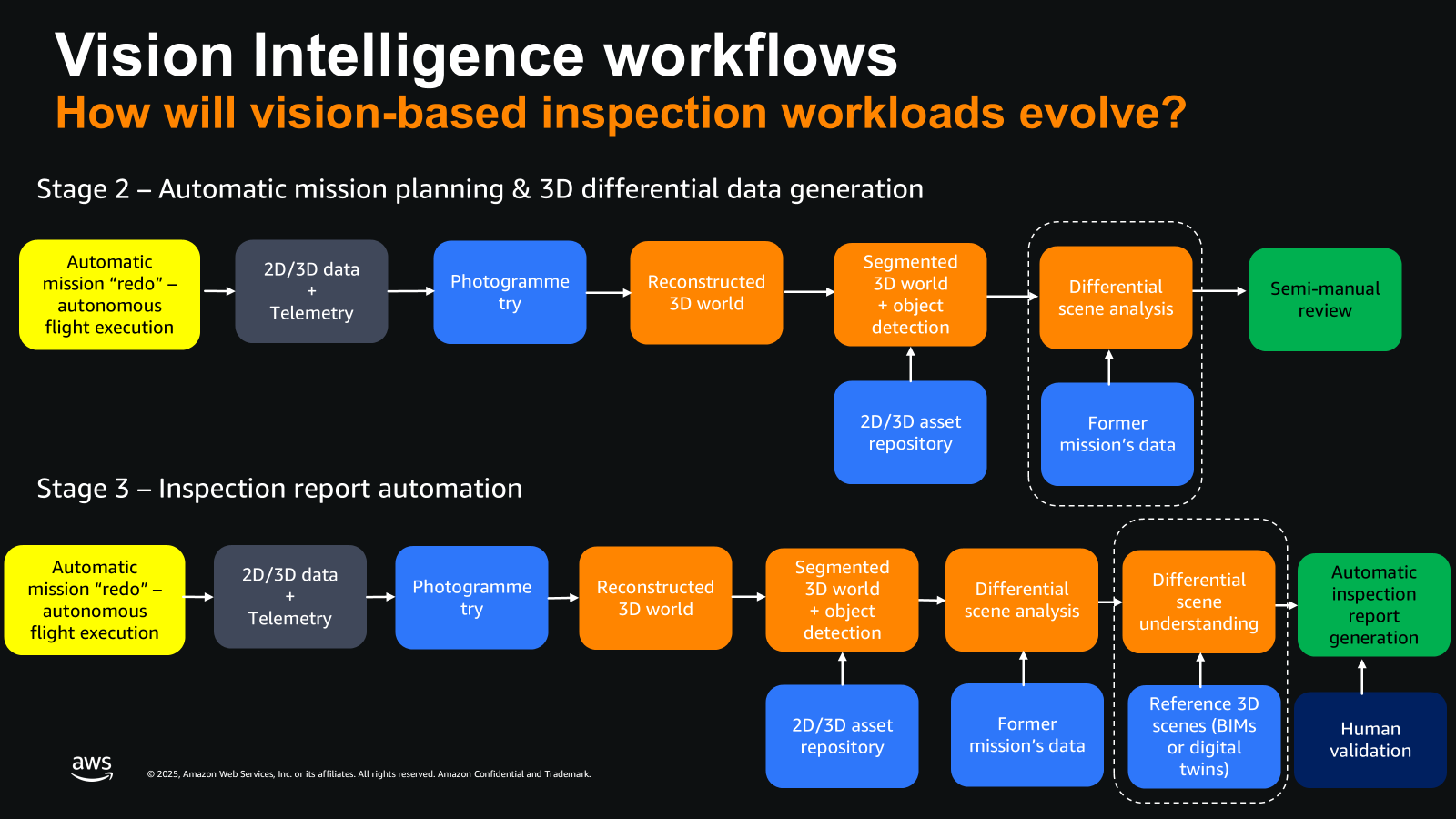

The image above describes the vision processing workflows for Stage 2 and Stage 3.

Stage 2: Differential Scene Understanding

Stage 2 raises the automation level of Stage 1 by introducing differential scene understanding across successive drone missions or scene captures. This involves identifying 3D objects that have changed position, or detecting significant differences in geolocated 2D or 3D objects that may indicate issues such as rust or unexpected changes in the environment over time. At Stage 2, cloud adoption accelerates, because large volumes of data from multiple sites and time periods must be stored and centralized.
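A minimal sketch of differential scene understanding at the object level follows. It assumes that Stage 1 has already produced, for each mission, a mapping from a persistent asset ID to a geolocated position in a shared local metric frame. Comparing two missions then reduces to classifying each asset as new, missing, moved beyond a tolerance, or unchanged. The data layout, asset IDs, and 0.5 m tolerance are hypothetical; detecting appearance changes such as rust would require image-level comparison, which is not shown.

```python
import math
from typing import Dict, List, Tuple

Position = Tuple[float, float, float]  # x, y, z in meters, local site frame (assumed)

def diff_missions(before: Dict[str, Position], after: Dict[str, Position],
                  tolerance_m: float = 0.5) -> Dict[str, List[str]]:
    """Classify asset IDs as new, missing, moved, or unchanged between two missions."""
    report: Dict[str, List[str]] = {"new": [], "missing": [], "moved": [], "unchanged": []}
    for asset_id in sorted(before.keys() | after.keys()):
        if asset_id not in before:
            report["new"].append(asset_id)
        elif asset_id not in after:
            report["missing"].append(asset_id)
        elif math.dist(before[asset_id], after[asset_id]) > tolerance_m:
            report["moved"].append(asset_id)
        else:
            report["unchanged"].append(asset_id)
    return report

before = {"pump-1": (0.0, 0.0, 0.0), "valve-7": (10.0, 2.0, 0.0), "tank-3": (5.0, 5.0, 0.0)}
after = {"pump-1": (0.1, 0.0, 0.0), "valve-7": (12.0, 2.0, 0.0), "crane-2": (20.0, 0.0, 0.0)}
print(diff_missions(before, after))
```

The tolerance absorbs small positioning noise between flights; in practice it would be set from the georeferencing accuracy of the captures.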

In this stage, additional AWS services can be used, such as Amazon Nova foundation models accessed through Amazon Bedrock for running AI inference workloads. To detect typical defects, Amazon SageMaker can be used to train or fine-tune image recognition models.

Stage 3: Integration with Reference Data

This stage extends Stage 2 by incorporating reference data for a site. This can include initial “ground truth” scans or, in the construction sector, Building Information Modeling (BIM) design models. Integrating this data allows for more accurate, context-aware analysis.
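One simple way to use such reference data is a cloud-to-cloud comparison: for each point of a new scan, compute the distance to the nearest point of the reference (for example, points sampled from a BIM model or an earlier ground-truth scan) and flag points that deviate by more than a tolerance. The sketch below uses a brute-force nearest-neighbour search for clarity. It assumes both point sets are already registered in the same coordinate frame; the function names and the 5 cm tolerance are hypothetical, and a real pipeline over millions of points would use a spatial index such as a k-d tree.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

def deviations(scan: Sequence[Point], reference: Sequence[Point]) -> List[float]:
    """Distance from each scanned point to its nearest reference point (brute force)."""
    if not reference:
        raise ValueError("reference point set is empty")
    return [min(math.dist(p, q) for q in reference) for p in scan]

def compare_to_reference(scan: Sequence[Point], reference: Sequence[Point],
                         tolerance_m: float = 0.05) -> dict:
    """Summarize how closely an as-built scan matches the reference geometry."""
    d = deviations(scan, reference)
    out_of_tolerance = [i for i, dist in enumerate(d) if dist > tolerance_m]
    return {
        "max_deviation_m": max(d, default=0.0),
        "fraction_within_tolerance": 1.0 - len(out_of_tolerance) / len(d) if d else 1.0,
        "out_of_tolerance_indices": out_of_tolerance,
    }

reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
scan = [(0.0, 0.01, 0.0), (1.0, 0.0, 0.0), (2.0, 0.3, 0.0), (0.5, 0.0, 0.0)]
print(compare_to_reference(scan, reference))
```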

In this stage, AWS services that consolidate disparate data sources, such as AWS Glue, can be used alongside Amazon Nova foundation models or other models on Amazon Bedrock for running AI inference workloads.

Completing Stage 3: Automated Reporting with Generative AI and Agentic AI

The final step of Stage 3 uses generative AI techniques, such as image-to-text description, together with agentic AI to orchestrate complex data access and AI-based algorithms. This combination enables the automated generation of textual inspection summary reports that require only simple human validation, significantly reducing the time spent analyzing an individual scene from hours to minutes.
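Before a generative model writes the summary, the agent has to gather the structured outputs of the earlier stages. The sketch below assembles hypothetical findings into a prompt that asks a model to write the summary using only the listed facts, with high-severity items first. The `Finding` fields, category labels, and severity scale are assumptions rather than a defined schema, and the model call itself (for example, through the Amazon Bedrock Converse API) is deliberately left out so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Finding:
    asset_id: str
    category: str      # hypothetical labels, e.g. "corrosion", "moved", "missing"
    severity: str      # hypothetical scale: "high" | "medium" | "low"
    description: str   # e.g. an image-to-text caption of the affected region

SEVERITY_ORDER = {"high": 0, "medium": 1, "low": 2}

def build_report_prompt(site: str, mission_date: str, findings: List[Finding]) -> str:
    """Build an LLM prompt asking for an inspection summary grounded in the findings."""
    # Unknown severities sort last; ties are broken by asset ID for stable output.
    ordered = sorted(findings, key=lambda f: (SEVERITY_ORDER.get(f.severity, 3), f.asset_id))
    lines = [
        f"You are writing an inspection summary for site {site}, mission of {mission_date}.",
        "Use only the findings listed below. Start with high-severity items,",
        "and state explicitly when there are no findings.",
        "",
        "Findings:",
    ]
    if not ordered:
        lines.append("- none")
    for f in ordered:
        lines.append(f"- [{f.severity}] {f.asset_id} ({f.category}): {f.description}")
    return "\n".join(lines)
```

Constraining the model to the listed findings keeps the generated text traceable to the detection and change-analysis results, which is what makes the "simple human validation" step practical.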

At this step, models that can generate textual metadata from 2D or 3D data can be used, such as Amazon Nova or models from other generative AI providers available on Amazon Bedrock. In addition, any LLM available on Amazon Bedrock can be used to aggregate the findings of multiple inspection reports over time and identify trends.
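Before handing several reports to an LLM for trend analysis, it can help to pre-aggregate the structured findings so that the model reasons over counts rather than raw text. The sketch below counts findings per category per mission and flags categories whose count rises in every successive mission. The report format (an ISO date string plus a list of finding categories) and the "strictly increasing" rule are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

Report = Tuple[str, List[str]]  # (mission date as ISO string, categories of findings)

def counts_per_mission(reports: List[Report]) -> Dict[str, List[int]]:
    """Per-category finding counts, ordered by mission date."""
    ordered = sorted(reports, key=lambda r: r[0])  # ISO dates sort chronologically
    counters = [Counter(categories) for _, categories in ordered]
    all_categories = sorted(set().union(*counters))
    # Counter returns 0 for categories absent from a given mission.
    return {c: [counter[c] for counter in counters] for c in all_categories}

def rising_categories(reports: List[Report]) -> List[str]:
    """Categories whose count strictly increases from each mission to the next."""
    series = counts_per_mission(reports)
    return [c for c, counts in series.items()
            if len(counts) >= 2 and all(a < b for a, b in zip(counts, counts[1:]))]
```

The resulting per-category series and the list of rising categories are compact enough to include in a prompt alongside the individual report summaries.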

This staged approach demonstrates a progressive increase in automation and intelligence, from basic data capture to advanced AI-driven analysis and reporting, revolutionizing inspection and monitoring processes across various industries.
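Because each stage builds on the one before it, a pipeline's maturity level can be read off as the highest stage whose capabilities, and those of every earlier stage, are all present. The sketch below encodes that rule; the capability names are hypothetical shorthand for the stages described above, not an official checklist.

```python
from typing import List, Set

# Hypothetical capability labels required at each stage, in order (Stage 0 to Stage 3).
STAGE_REQUIREMENTS: List[Set[str]] = [
    {"capture", "3d_reconstruction"},                   # Stage 0
    {"asset_detection", "asset_localization"},          # Stage 1
    {"multi_mission_change_detection"},                 # Stage 2
    {"reference_data_integration", "genai_reporting"},  # Stage 3
]

def maturity_level(capabilities: Set[str]) -> int:
    """Highest stage whose requirements, and all earlier ones, are met; -1 if none."""
    level = -1
    for stage, required in enumerate(STAGE_REQUIREMENTS):
        if not required <= capabilities:
            break  # a missing prerequisite caps the level, whatever comes later
        level = stage
    return level
```

For example, a pipeline with change detection but no asset localization still rates as Stage 0 under this rule, reflecting the cumulative nature of the framework.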

Conclusion

Building on the leveling index we previously introduced to categorize use cases and sophistication levels in digital twin applications, this blog presents a similar framework for combining vision and AI techniques to automate inspection report generation. In the drone and aerial imaging market alone, AI-driven workflows are growing at a 27% CAGR and are projected to reach $2.7B by 2030. Additional digitalization approaches such as ground robots and fixed or mobile cameras show significant potential for cloud adoption.