AWS Open Source Blog

Open Protocols for Agent Interoperability Part 3: Strands Agents & MCP

Developers are architecting and building systems of AI agents that work together to autonomously accomplish users’ tasks. In Part 1 of our blog series on Open Protocols for Agent Interoperability we covered how Model Context Protocol (MCP) can be used to facilitate inter-agent communication and the MCP specification enhancements AWS is working on to enable that. The examples in Part 1 used Spring AI and Java for building the agents and connecting them with MCP. In Part 2 we discussed Authentication on MCP. This is a critical aspect of connecting agents so they can work together in a larger system, all with knowledge of who the user is. In Part 3 we will show how you can build inter-agent systems with the new Strands Agents SDK and MCP.

Strands Agents is an open source SDK that takes a model-driven approach to building and running AI agents in just a few lines of Python code. You can read more about Strands Agents in the documentation. Since Strands Agents supports MCP we can quickly build a system consisting of multiple inter-connected agents and then deploy the agents on AWS.

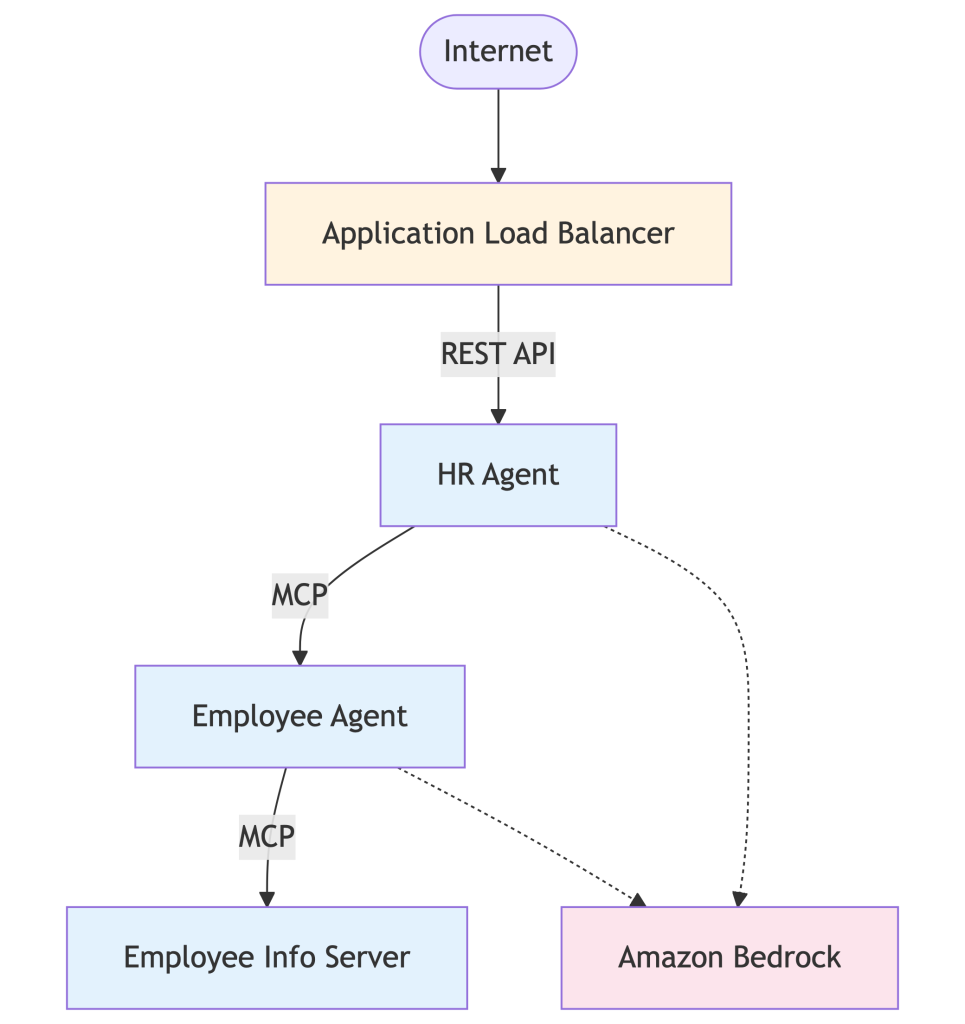

Our example is an HR agent that can answer questions about employees. To do this, you could imagine the HR agent communicating with a number of other agents, such as an employee data agent, an Enterprise Resource Planning (ERP) agent, a performance agent, and a goal agent. For this example, let’s start with a basic architecture where a REST API exposes access to an HR agent, which connects to an employee info agent:

Note: The complete, runnable version of the following example is available in our Agentic AI samples repo.

Create a System of Agents with Strands Agents and MCP

Let’s start with the MCP server that will expose the employee data for use in the employee info agent. This is a basic MCP server:

This MCP server exposes the ability to get a list of employee skills and get a list of employees that have a specific skill. For this example we are using fictitious data and in a future blog we will share how to secure this service.
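As a rough illustration of the two lookups this server exposes, the following library-free Python sketch implements them as plain functions over made-up data. The function names follow the tool names used later in this post (get_skills and get_employees_with_skill); the EMPLOYEES records and their fields are assumptions for illustration only, and registering the functions as MCP tools is left to the MCP SDK.

```python
# Fictitious employee records; names and skills are invented for this sketch.
EMPLOYEES = [
    {"name": "Ana Silva", "skills": ["Machine Learning", "Python"]},
    {"name": "Ben Okoro", "skills": ["Kubernetes", "Python"]},
    {"name": "Chen Wei", "skills": ["Machine Learning", "Data Visualization"]},
]


def get_skills() -> list[str]:
    """Return every distinct skill across all employees, sorted by name."""
    return sorted({skill for employee in EMPLOYEES for skill in employee["skills"]})


def get_employees_with_skill(skill: str) -> list[str]:
    """Return the names of employees that have the given skill (case-insensitive)."""
    wanted = skill.strip().lower()
    return [
        employee["name"]
        for employee in EMPLOYEES
        if any(s.lower() == wanted for s in employee["skills"])
    ]
```

Keeping the lookups as ordinary functions like this makes them easy to unit test before they are placed behind an MCP server.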

Now that we have a way for an agent to get this employee information, let’s create the employee agent using Strands Agents. Our agent connects to the MCP server for employee information and uses Bedrock for inference. We also add a system prompt to provide some additional behavior to this agent:

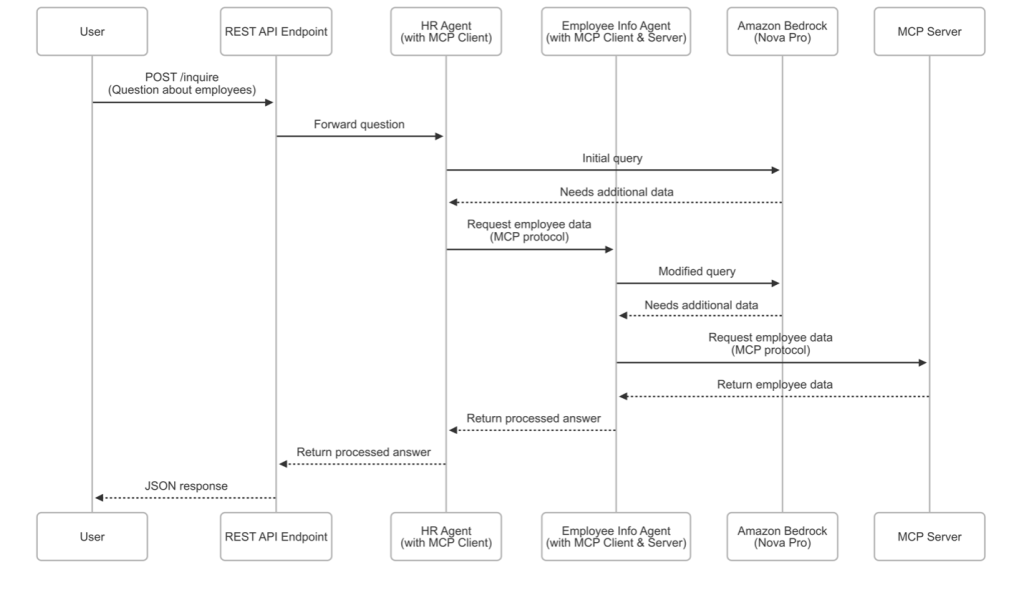

This example uses Amazon Bedrock and the Nova Micro model with the employee data tool for multi-turn inference. Multi-turn inference is when an AI agent makes multiple calls to an AI model in a loop, usually involving calling tools to get or update data, completing when the initial task is done or an error happens. In this example the multi-turn inference enables a flow like:

- User asks “list employees who have skills related to AI”

- The LLM sees that it has access to a list of employee skills and instructs the agent to call that tool

- The agent calls the employee get_skills tool and returns the skills to the LLM

- The LLM then determines which skills are related to AI and sees that it can then get employees with each skill using the get_employees_with_skill tool

- The agent gets the employees for each skill and returns them to the LLM

- The LLM then assembles the complete list of employees with skills related to AI and returns it

This multi-turn interaction, which spans multiple LLM calls and multiple tool calls but is encapsulated in a single agent(question) call, shows the power of Strands Agents performing an agentic loop to achieve a provided task. This example also shows how the employee agent can add additional instructions on top of the underlying tools, in this case with a system prompt.
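To make the loop concrete, here is a minimal, self-contained sketch of multi-turn inference. It is not how Strands Agents is implemented: the model is replaced by a scripted stand-in (scripted_model), the tools are in-memory lambdas, and the data, the message shapes, and the run_agent helper are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical data standing in for the employee data MCP tools.
SKILLS = {"Machine Learning": ["Ana", "Chen"], "Kubernetes": ["Ben"], "NLP": ["Ana"]}

TOOLS: dict[str, Callable[..., list[str]]] = {
    "get_skills": lambda: sorted(SKILLS),
    "get_employees_with_skill": lambda skill: SKILLS.get(skill, []),
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for the LLM: picks the next step from the conversation so far."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        # First turn: ask for the list of skills.
        return {"tool_calls": [{"name": "get_skills", "args": {}}]}
    if len(tool_results) == 1:
        # Second turn: a real model would judge relevance; this stub hard-codes it.
        ai_skills = [s for s in tool_results[0]["result"] if s in ("Machine Learning", "NLP")]
        return {
            "tool_calls": [
                {"name": "get_employees_with_skill", "args": {"skill": s}} for s in ai_skills
            ]
        }
    # Final turn: merge the per-skill employee lists into one answer.
    names = sorted({name for m in tool_results[1:] for name in m["result"]})
    return {"answer": ", ".join(names)}


def run_agent(question: str, max_turns: int = 5) -> str:
    """Call the model in a loop, executing requested tools until it answers."""
    history: list[dict] = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        reply = scripted_model(history)
        if "answer" in reply:
            return reply["answer"]
        for call in reply["tool_calls"]:
            result = TOOLS[call["name"]](**call["args"])
            history.append({"role": "tool", "name": call["name"], "result": result})
    raise RuntimeError("agent did not finish within max_turns")
```

Calling run_agent("list employees who have skills related to AI") goes through three model turns (skills lookup, per-skill employee lookups, final answer), mirroring the steps listed above. The max_turns guard is a design choice in this sketch so that a model that never produces an answer cannot loop forever.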

Expose an Agent as an MCP Server

We can interact with this agent in a number of ways. For example, we could expose it as a REST service. In our case we want to expose it in a way that will enable other agents to interact with it. We can use MCP to facilitate that inter-agent communication by exposing this agent as an MCP server. Then another agent (for example HR agent) will be able to use the employee agent as a tool.

To expose the employee agent as an MCP server we just wrap our employee_agent function in an @mcp.tool, transform the response data into a list of strings, and start the server:
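The "list of strings" transformation can be sketched independently of any SDK. The snippet below assumes the agent's final message is a dict whose content is a list of blocks, some carrying a text field, modeled on the content blocks used by Amazon Bedrock's Converse API; the to_text_list helper and the example message are hypothetical.

```python
def to_text_list(message: dict) -> list[str]:
    """Collect the text of every text block in an agent's final message."""
    return [block["text"] for block in message.get("content", []) if "text" in block]


# Hypothetical final message; a tool-use block is included to show it is skipped.
example_message = {
    "role": "assistant",
    "content": [
        {"text": "Employees with AI skills:"},
        {"toolUse": {"name": "get_skills", "input": {}}},
        {"text": "Ana, Chen"},
    ],
}
```

A function shaped like this could serve as the last step of the tool function, so that the tool returns only plain strings to its caller.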

Since we’ve wrapped the employee agent in an MCP server we can now use it in other agents. The HR agent is exposed as a REST API so that we could call it from a web application or other services.

Run MCP and Strands Agents on AWS

We can run all of this on AWS in a variety of ways. Since the MCP servers use the new MCP Streamable HTTP transport, they could run on serverless runtimes like AWS Lambda or AWS Fargate. For this example we will containerize the employee info MCP server, the employee agent, and the HR agent, run them on Amazon ECS, and expose the HR agent through a load balancer:

For this example we’ve used AWS CloudFormation to define the infrastructure (source). Now with everything running on AWS we can make a request to the HR agent:
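One way to issue such a request with Python's standard library is sketched below. The /inquire path and the question JSON field are placeholders rather than the sample's confirmed API, and the base URL would be the load balancer address from your own deployment; check the sample repo for the actual endpoint.

```python
import json
import urllib.request


def build_hr_request(base_url: str, question: str) -> urllib.request.Request:
    """Build a JSON POST request for the HR agent (endpoint shape is assumed)."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/inquire",  # hypothetical path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_hr_agent(base_url: str, question: str, timeout: float = 60.0) -> str:
    """Send the request and return the raw response body as text."""
    request = build_hr_request(base_url, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

The generous default timeout is deliberate: a single request can trigger several model and tool calls behind the HR agent before a response comes back.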

And we get back:

Get the complete source for this example.

MCP Contributions from AWS

This example shows just the beginning of what we can do with Strands Agents and MCP for inter-agent communication. We’ve been working with the MCP specification and implementations to help evolve MCP to support additional capabilities that some inter-agent use cases may need.

The MCP specification has just had a new 2025-06-18 release which includes two contributions from AWS to better support inter-agent communication. We’ve also contributed support for these new features in various MCP implementations.

- Elicitation: When MCP servers need additional input they can signal that to the agent (via the MCP client). Instead of returning data, a tool call can elicit additional information. For example, if an Employee Info Agent using an MCP tool to get employee data determines that a tool request like “get employees that have AI skills” may return more results than the user wants, it can ask the user to provide a team name to filter on. This differs from a tool parameter because the need for a team name may only become apparent at runtime. In a future blog post in this series we will dive deeper into how to use this feature. We’ve contributed implementations of this specification change to the Java and Python MCP SDKs, and both have merged the changes. (Java SDK change; Python SDK change)

- Structured Output Schemas: MCP tools generally return data to the agent (via the MCP client). Input schemas have always been required for tools; we’ve contributed a way for tools to also, optionally, specify an output schema. This enables agents to perform more type-safe conversions of tool outputs to typed data, enabling safer and easier transformations of that data within the agent. We will also cover this feature more in future blog posts in this series. Implementations of this feature have been contributed to MCP’s Java, Python, and TypeScript SDKs, with the TypeScript implementation having already been merged. (Java SDK pull request; Python SDK pull request; TypeScript SDK pull request)
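At the message level, the two features above can be sketched as follows. In the 2025-06-18 specification, an elicitation is an elicitation/create request carrying a message and a requestedSchema, and a tool result can carry structured content that conforms to the tool's declared output schema. Everything else here is an assumption for illustration: the MAX_RESULTS threshold, the team field, the Employee type, the employees key, and the helper names.

```python
from dataclasses import dataclass
from typing import Optional

MAX_RESULTS = 2  # hypothetical threshold above which the server asks for a filter


def needs_elicitation(match_count: int, team: Optional[str]) -> bool:
    """Decide at runtime whether to ask the user for a team name."""
    return team is None and match_count > MAX_RESULTS


def build_elicitation(request_id: int) -> dict:
    """JSON-RPC request a server could send to ask the user for a team name."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "elicitation/create",
        "params": {
            "message": "Many employees match. Which team should be used as a filter?",
            "requestedSchema": {
                "type": "object",
                "properties": {"team": {"type": "string"}},
                "required": ["team"],
            },
        },
    }


@dataclass(frozen=True)
class Employee:
    name: str
    team: str


def parse_structured(structured_content: dict) -> list[Employee]:
    """Convert a tool result's structured content into typed Employee records."""
    parsed = []
    for item in structured_content["employees"]:
        if not (isinstance(item.get("name"), str) and isinstance(item.get("team"), str)):
            raise ValueError(f"unexpected employee record: {item!r}")
        parsed.append(Employee(name=item["name"], team=item["team"]))
    return parsed
```

The hand-written checks in parse_structured stand in for validation against a declared output schema; in practice an SDK or a JSON Schema validator would typically perform that step.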

Conclusion

It’s exciting to see the progress with MCP for inter-agent communication and in future parts of this series we will dive deeper into how these enhancements can be used to enable richer interactions between agents.

In this blog we’ve seen how we can use Strands Agents and MCP to create a system of agents that all work together and run on AWS. Strands Agents made it easy to build an agent connected to MCP tools, and then also expose the agent as an MCP server so that other agents can communicate with it. To get started learning about Strands Agents, check out the documentation and GitHub repo. Stay tuned for more parts of this series on Agent Interoperability!