Streamline in-place application upgrades with Amazon VPC Lattice

Introduction

In this post, we review how you can perform in-place application upgrades using Amazon VPC Lattice while maintaining system reliability, security, and performance. Whether you are upgrading a classic three-tier web application, migrating from Amazon Elastic Compute Cloud (Amazon EC2) to containers, or managing periodic Kubernetes upgrades, one challenge remains consistent: achieving zero downtime while decoupling infrastructure from application logic. Historically, this required a mix of infrastructure orchestration, service discovery, and traffic routing mechanisms. With VPC Lattice, you can streamline this process and perform in-place upgrades with minimal disruption.

We assume you are already using VPC Lattice in your environment. If you’re new to VPC Lattice or haven’t implemented it yet, we recommend referring to this blog post on Amazon VPC Lattice DNS migration strategies and best practices. This post explores how VPC Lattice streamlines the following three scenarios:

- In-place upgrade of a three-tier web application

- Migration from Amazon EC2 to Amazon EKS or Amazon ECS

- Controlled periodic upgrades of EKS clusters

For each use case, we define the problem, outline the traditional approach without VPC Lattice, and demonstrate how VPC Lattice streamlines and enhances the upgrade process.

Solution Overview

The following sections walk through how VPC Lattice streamlines three different scenarios.

Scenario 1: In-place upgrade of a three-tier web application

In a three-tier architecture composed of a frontend, backend (application layer), and a data layer (usually a database such as Amazon Relational Database Service (Amazon RDS)), upgrading any single tier, particularly the backend, needs careful orchestration. The challenges multiply when the upgrade includes changes to the API surface or the data schema, which can impact downstream consumers and service dependencies.

Consider a scenario where you have different frontend, backend, and database services. You may need to deploy a new version of the backend service that introduces business logic changes and schema updates. The upgrade must be executed while minimizing downtime and making sure that no API contracts are broken. Inter-tier communication, such as from the frontend to the backend, must remain stable throughout the deployment. Any failure during the rollout could lead to frontend requests hitting a partially upgraded backend, resulting in inconsistent behavior or application errors.

Without VPC Lattice

You can orchestrate upgrades through Amazon Web Services (AWS) networking services such as Elastic Load Balancers (ELBs), Amazon Route 53, and Auto Scaling groups. Traffic shifting uses weighted DNS routing or blue/green deployments through Application Load Balancer (ALB) target groups. Although this approach is functional, it necessitates extensive manual configuration of load balancers, routing rules, and health checks across tiers. DNS-based cutover limitations and environment synchronization challenges complicate progressive delivery patterns. The lack of a unified traffic control plane increases operational complexity and rollback risks, making service upgrades more failure-prone and time-intensive to execute safely.

With VPC Lattice

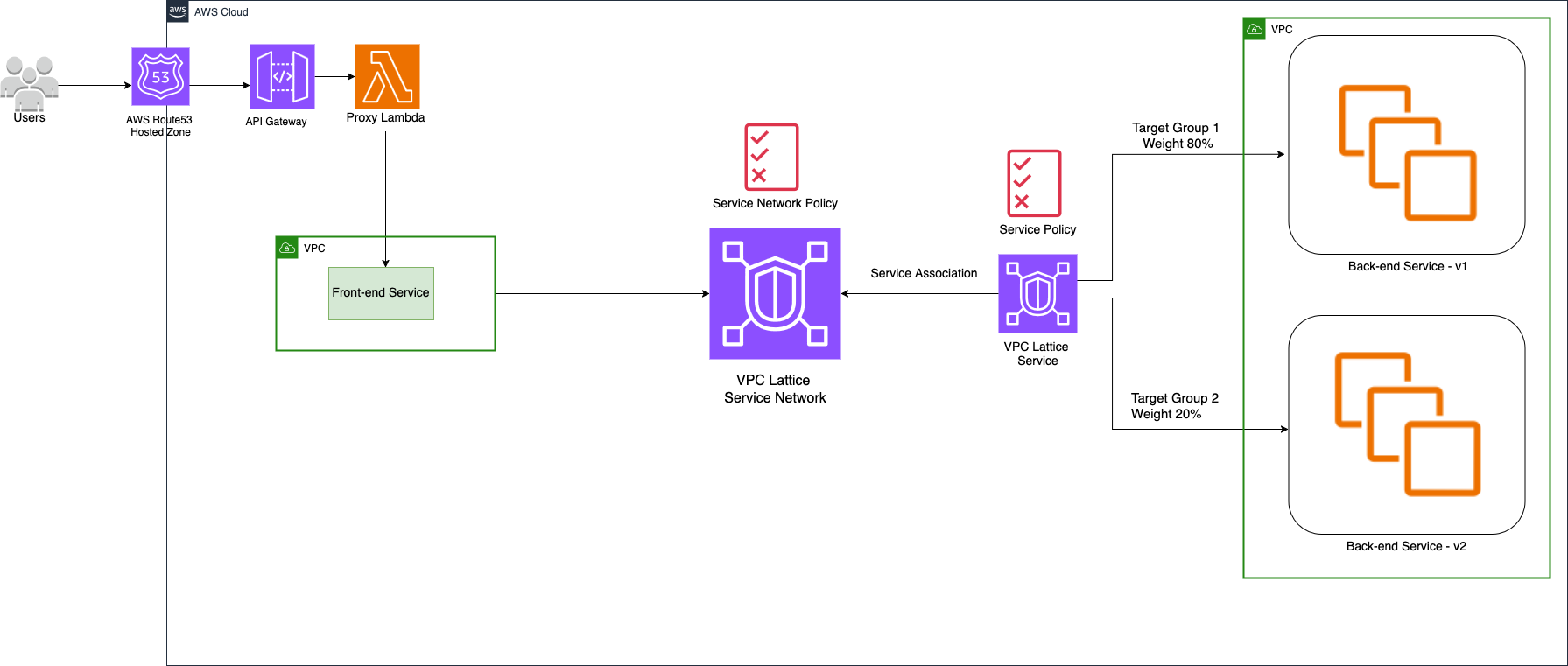

VPC Lattice introduces a built-in way to define, expose, and control service-to-service communication within and across VPCs, without managing per-service load balancers, as shown in Figure 1.

Figure 1: Three-tier web application using Amazon VPC Lattice

Using VPC Lattice, each tier in your three-tier architecture (the front end, the back end, and even database-facing logic) can be registered as a Service or a Resource within a Lattice service network. The front-end service accesses the back-end service not through a hard-coded endpoint or load balancer, but through a Lattice-managed DNS name derived from the service name (for example, backend-service.1d2.vpc-lattice-svcs.region.on.aws), with VPC Lattice managing routing, health checks, and policies. Although VPC Lattice provides auto-generated DNS names, most organizations prefer to use their own custom domain names (for example, backend.internal.example.com) for better readability and maintenance.

During an upgrade, you can:

- Deploy the new back-end version (backend-v2) in parallel with the existing version (backend-v1).

- Register each version of the service as a separate target group in the Lattice service network.

- Use Lattice weighted target groups to start with 100% traffic to backend-v1, then gradually shift traffic to backend-v2 in a controlled fashion by modifying the weights.

- Seamlessly roll back traffic to backend-v1 if metrics indicate a failure, without changing DNS, load balancer configurations, or client applications.
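The weight changes described above can be sketched as a small helper that builds the forward action for each step of the shift. This is a minimal sketch, not a definitive implementation: the target group identifiers are hypothetical placeholders, the 0 to 999 weight range should be checked against the current VPC Lattice API reference, and the dictionary shape is assumed to match the `defaultAction` parameter of the boto3 `vpc-lattice` client's `update_listener` call. No AWS call is made here.

```python
# Hypothetical target group identifiers, used for illustration only.
V1_TG = "tg-backend-v1-example"
V2_TG = "tg-backend-v2-example"


def weighted_forward_action(weights):
    """Build a forward action that splits traffic across target groups.

    `weights` maps a target group identifier to an integer weight.
    The 0-999 bound is an assumption to verify against the API reference.
    """
    if not weights:
        raise ValueError("at least one target group is required")
    for tg_id, weight in weights.items():
        if not isinstance(weight, int) or not 0 <= weight <= 999:
            raise ValueError(f"weight for {tg_id} must be an integer in 0..999")
    if sum(weights.values()) == 0:
        raise ValueError("at least one weight must be greater than zero")
    return {
        "forward": {
            "targetGroups": [
                {"targetGroupIdentifier": tg_id, "weight": weight}
                for tg_id, weight in weights.items()
            ]
        }
    }


def shift_schedule(v2_share_steps=(0, 10, 50, 100)):
    """Return one forward action per step, moving traffic from v1 to v2."""
    return [
        weighted_forward_action({V1_TG: 100 - share, V2_TG: share})
        for share in v2_share_steps
    ]


if __name__ == "__main__":
    for action in shift_schedule():
        print(action)
```

Each dictionary in the schedule could then be passed as the listener's default action at the corresponding rollout step. Rolling back amounts to reapplying the first entry.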

Considerations

- API Version Management: Maintain backward compatibility in API contracts to prevent service disruptions.

- Health Check Configuration: Configure appropriate health checks for both old and new back-end versions to ensure proper traffic routing.

- Service Discovery Consistency: Use consistent service naming conventions within VPC Lattice to maintain proper service discovery.

- Traffic Shifting Strategy: Define clear metrics and thresholds for progressive traffic shifting between versions.

- Rollback Procedure: Maintain snapshots of the original configuration to enable quick rollback if issues arise.
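To make the traffic shifting strategy consideration concrete, the following sketch shows one way to encode metrics and thresholds as an explicit promote, hold, or rollback decision. The threshold values and the function and field names are illustrative assumptions, not recommendations from VPC Lattice. The inputs would come from whatever metrics source you already use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Illustrative defaults only; tune these to your own service level objectives.
    max_5xx_rate: float = 0.01        # at most 1% of requests may return 5xx
    max_p99_latency_ms: float = 500.0
    min_requests: int = 100           # below this, the sample is too small to judge


def decide(requests, errors_5xx, p99_latency_ms, thresholds=Thresholds()):
    """Return 'hold', 'rollback', or 'promote' for the new version."""
    if requests < thresholds.min_requests:
        return "hold"
    error_rate = errors_5xx / requests
    if error_rate > thresholds.max_5xx_rate:
        return "rollback"
    if p99_latency_ms > thresholds.max_p99_latency_ms:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    print(decide(requests=1000, errors_5xx=5, p99_latency_ms=200.0))
```

Writing the thresholds down before the rollout starts keeps the rollback decision mechanical rather than a judgment call made under pressure.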

Scenario 2: Migration from Amazon EC2 to Amazon EKS or Amazon ECS

Many organizations begin their cloud journey with applications hosted directly on Amazon EC2 due to its flexibility and familiarity. Over time, as scalability, portability, and operational consistency become more critical, they look to containerization solutions, particularly Amazon Elastic Kubernetes Service (Amazon EKS) or Amazon Elastic Container Service (Amazon ECS), as the next step in their modernization strategy. While ECS offers a simpler, AWS-native container orchestration solution, many organizations choose Amazon EKS for its Kubernetes ecosystem.

However, migrating a production workload from Amazon EC2 to a containerized environment introduces a unique set of challenges. Existing services or consumers such as internal micro-services, APIs and third-party partners are often tightly integrated with Amazon EC2-hosted endpoints, typically exposed through ELBs and accessed through hard-coded DNS or IPs. This tight coupling makes gradual migrations difficult, because changes can ripple across all consumers.

Beyond containerizing the application, the migration involves standing up a parallel container environment, configuring networking, managing AWS Identity and Access Management (IAM) and security transitions, and introducing traffic shifting, without breaking API contracts or affecting uptime. A full-scale cut-over is typically time-consuming and complex to implement. Production systems with live traffic and strict SLAs demand gradual, low-risk transitions. However, running this without disrupting consumers or duplicating infrastructure long-term remains an operational hurdle. In the following sections, we’ll focus on migration to EKS, although the overall concepts and challenges remain similar for ECS implementations.

Without VPC Lattice

We deploy an EKS cluster, configure networking, and containerize the application. Services are exposed through ALBs, AWS Gateway APIs, or NGINX, with traffic redirected through Route 53. IAM roles, policies, and security groups are refactored for least privilege, and traffic is shifted using DNS TTL changes, weighted records, or ELB target flips. This approach complicates service discovery, endpoint management, and security synchronization across environments. Without a unified routing layer, managing traffic between legacy and modernized services is cumbersome, which creates operational drag.

With VPC Lattice

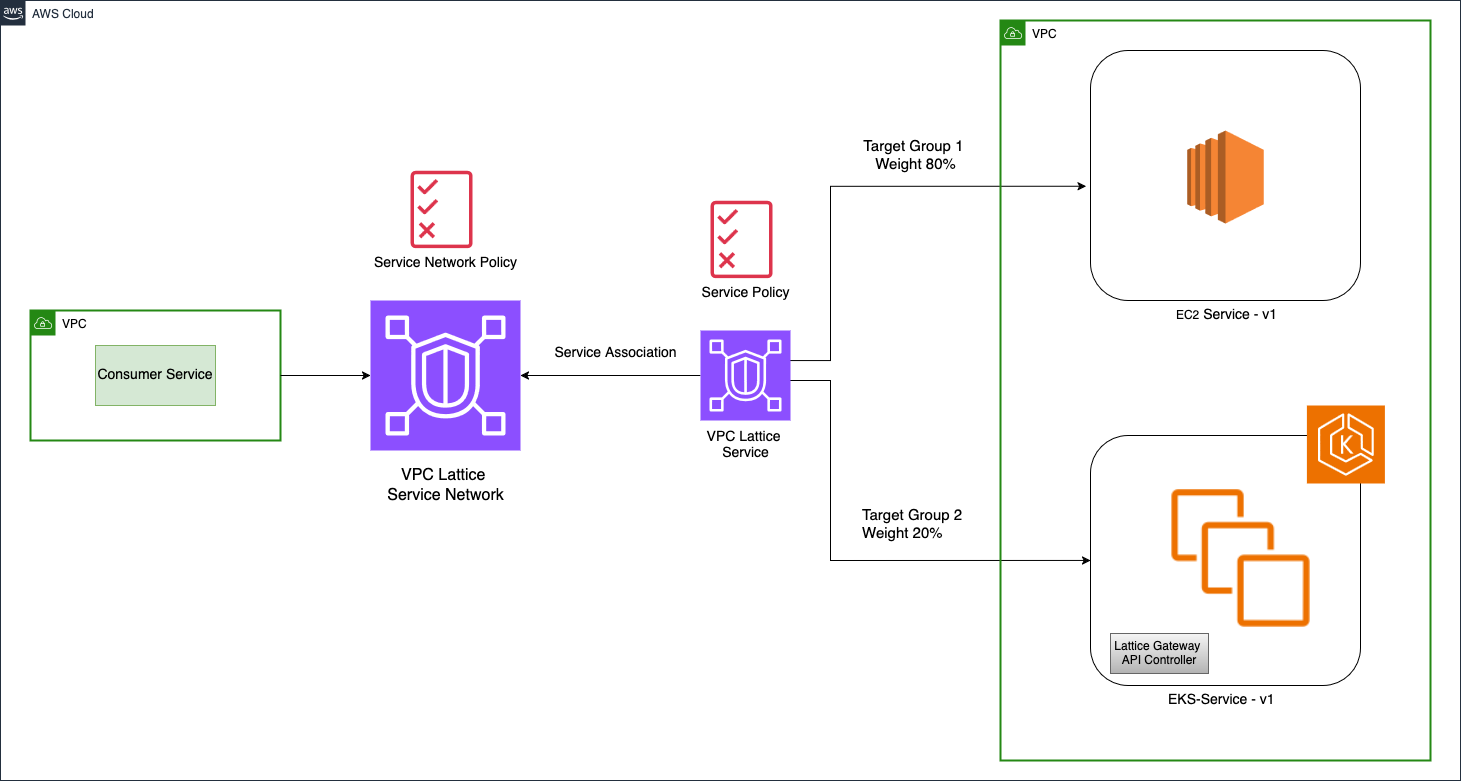

VPC Lattice offers a streamlined and safer path for service migration by abstracting service-to-service communication and traffic routing into a unified, policy-driven framework. Instead of relying on tightly coupled IPs, load balancers, or DNS, both the Amazon EC2-hosted and Amazon EKS-hosted versions of your service can be registered as targets under a single Lattice service.

Figure 2 and the following points show how the migration looks with VPC Lattice in place:

- Register the Amazon EC2-hosted service (for example ec2-service-v1) as a VPC Lattice service and expose it using a custom domain name (e.g., ec2-service-v1.lattice.local).

- Update all consumers (front-end, APIs, partners) to route traffic to the Lattice service name, not the Amazon EC2-specific endpoint. This is a one-time change.

- Deploy the Amazon EKS-hosted version of the service (eks-service-v1) in parallel and register it with the same Lattice service behind a new target group.

- Use Lattice listener rules to route 100% of incoming traffic to the Amazon EC2 back-end initially.

- Gradually shift traffic from Amazon EC2 to Amazon EKS by updating the weighted target groups in the listener rule, enabling progressive rollout without modifying DNS records or replacing load balancers.

- Monitor performance and roll back instantly if needed by adjusting the routing weights back to Amazon EC2, all without modifying clients.
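The shift, monitor, and roll back loop in the steps above can be sketched as a small driver. This is a sketch under stated assumptions: `apply_weights` and `is_healthy` are hypothetical callbacks that you would supply (for example, one that updates the listener rule weights and one that checks your metrics), and nothing here calls AWS directly.

```python
def progressive_shift(eks_share_steps, apply_weights, is_healthy):
    """Shift traffic from EC2 to EKS step by step, rolling back on failure.

    apply_weights(ec2_weight, eks_weight) applies a weight pair (hypothetical callback).
    is_healthy() returns True if the current split looks healthy (hypothetical callback).
    Returns (succeeded, history), where history lists every weight pair applied.
    """
    history = []
    for eks_share in eks_share_steps:
        if not 0 <= eks_share <= 100:
            raise ValueError("each step must be a percentage between 0 and 100")
        ec2_share = 100 - eks_share
        apply_weights(ec2_share, eks_share)
        history.append((ec2_share, eks_share))
        if not is_healthy():
            # Roll back by sending all traffic to the EC2 target group again.
            apply_weights(100, 0)
            history.append((100, 0))
            return False, history
    return True, history


if __name__ == "__main__":
    applied = []
    ok, history = progressive_shift(
        (10, 50, 100),
        apply_weights=lambda ec2, eks: applied.append((ec2, eks)),
        is_healthy=lambda: True,
    )
    print(ok, history)
```

Because consumers address the Lattice service name, the rollback branch only changes weights. No client or DNS change is involved.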

Figure 2: Migration from Amazon EC2 to Amazon EKS using VPC Lattice

VPC Lattice eliminates the complexity and risk of Amazon EC2-to-Amazon EKS migrations by decoupling traffic routing from infrastructure. It empowers teams to evolve architectures incrementally without forcing disruptive changes on upstream consumers or overhauling legacy discovery mechanisms. Lattice enables safer, faster, and more cost-effective modernization journeys by managing service endpoints, traffic policies, and access controls at the infrastructure level. With Lattice, you’re no longer building a migration plan around DNS, ELBs, and manual security updates. Instead, you’re managing service identities and traffic flows with infrastructure-agnostic precision, an essential capability in hybrid environments where Amazon EC2 and Amazon EKS coexist during the transition.

The operational considerations outlined in Scenario 1 also apply here.

Scenario 3: Controlled periodic upgrades of Amazon EKS clusters

Upgrading EKS clusters is a critical operation that impacts both control plane stability and workload availability. Kubernetes version updates can deprecate APIs, change scheduling behavior, and disrupt controller interactions. This creates significant risk in production environments with strict service level objectives.

The key challenges include making sure that all of your applications work with the new version of Kubernetes, checking that custom resource definitions (CRDs) and controllers still function correctly, and safely moving applications to new nodes without interruption. These steps can be complex and error prone.

Without VPC Lattice

As in the previous scenario, we provision a parallel EKS cluster with replicated configurations, deploying workloads to new node groups with traffic segregation through taints. Traffic shifting occurs through new ingress resources and DNS updates. Although this pattern is functional, it introduces operational friction through DNS propagation delays, load balancer IP churn, and cluster-scoped resource conflicts. CRD and controller conflicts may arise if cluster-scoped resources drift during validation. The dual-cluster approach fragments security policies and observability, while shared resource dependencies complicate clean cutover and rollback procedures.

With Amazon VPC Lattice

VPC Lattice introduces a decoupled service network abstraction that transforms this process into a composable, policy-driven migration. By promoting services as the unit of control, Lattice enables seamless interoperability between old and new clusters.

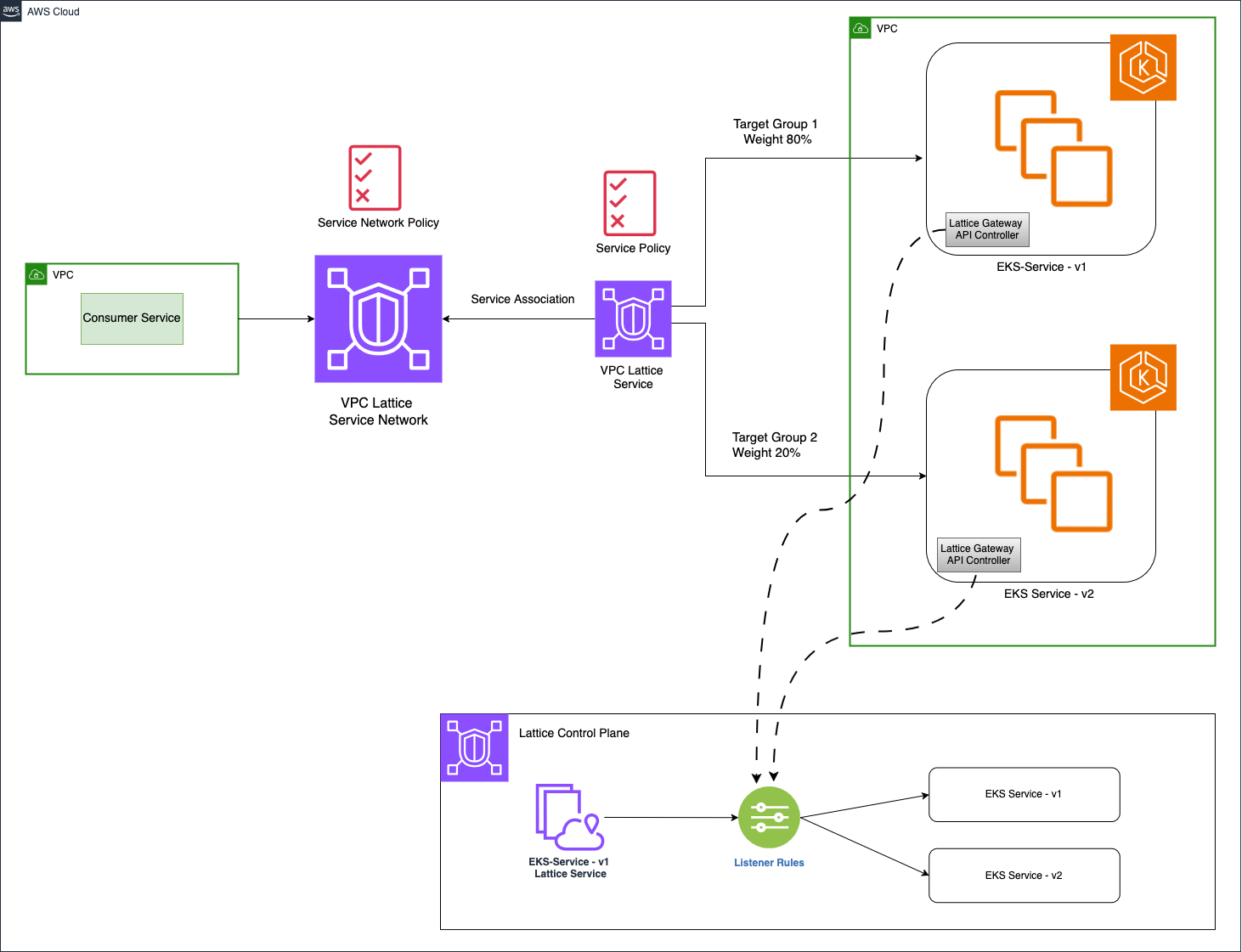

The following points show what the upgrade flow looks like with VPC Lattice in place:

- Spin up a new EKS cluster (v1.32) with parity configurations, including add-ons, EKS Pod Identity associations, controller versions, and security policies.

- Deploy your workloads in the new cluster with modified node selectors/labels.

- Register each upgraded service with the corresponding Lattice service as a new target group.

- Route all traffic initially to the v1.31 services using Lattice listener rules with 100% weighted targets to the old cluster.

- Apply weighted routing policies at the listener level (e.g., 80% → v1.31 cluster, 20% → v1.32 cluster).

- Validate service behavior using real-time Lattice metrics and custom observability signals (e.g., 5xx rates, latency, request volume).

- Progressively increase traffic share to the v1.32 services. Integrate with continuous integration/continuous deployment (CI/CD) pipelines for automation.

- Perform an instant rollback by reverting the traffic weights if issues surface, without pod drains, DNS TTL delays, or controller rollbacks.

- Fully drain traffic from v1.31, unregister targets, and decommission the old cluster.

Figure 3: Controlled periodic upgrades of EKS clusters

Figure 3 shows the AWS Gateway API Controller that integrates with VPC Lattice. This enables you to define service networks, services, listeners, and target registrations using Kubernetes Gateway API resources. To learn more, refer to this AWS containers post.
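As a rough illustration of expressing the weighted split through Gateway API resources, the following sketch generates an HTTPRoute manifest with weighted backendRefs. Several details are assumptions to verify against the controller documentation for your version: the `gateway.networking.k8s.io/v1` API version, the use of a `ServiceImport` backend to reference the service exported from the other cluster, and every resource name shown, all of which are hypothetical. The sketch also assumes the route is applied in the new cluster.

```python
import json


def weighted_http_route(name, gateway, old_backend, new_backend, new_share, port=80):
    """Build an HTTPRoute manifest splitting traffic between two backends.

    old_backend is assumed to be a ServiceImport for the v1.31 cluster's service;
    new_backend is assumed to be a local Service in the v1.32 cluster.
    """
    if not 0 <= new_share <= 100:
        raise ValueError("new_share must be a percentage between 0 and 100")
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name},
        "spec": {
            "parentRefs": [{"name": gateway}],
            "rules": [
                {
                    "backendRefs": [
                        {
                            "name": old_backend,
                            "kind": "ServiceImport",
                            "weight": 100 - new_share,
                        },
                        {
                            "name": new_backend,
                            "kind": "Service",
                            "port": port,
                            "weight": new_share,
                        },
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; 20% of traffic goes to the new cluster.
    route = weighted_http_route(
        "checkout", "my-gateway", "checkout-v131", "checkout-v132", new_share=20
    )
    print(json.dumps(route, indent=2))
```

The output is JSON, which kubectl accepts in place of YAML, so a CI/CD pipeline could regenerate and apply the manifest at each step of the rollout.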

Considerations

- Controller drift: Make sure that CRDs (for example from the AWS Gateway API Controller) are reinstalled in the new cluster with identical versions. Mismatches can cause routing failures or inconsistent ingress behavior.

- Cross-cluster service naming: Use identical service names and port mappings to maintain logical consistency within the Lattice service network.

- Health check parity: Validate that both versions implement consistent health checks. Lattice stops routing requests to targets that fail health checks.

- IAM consistency: If services rely on EKS Pod Identity for fine-grained IAM access, then make sure that corresponding identity associations and role permissions are recreated in the new cluster to avoid authorization failures.

- TLS termination behavior: If TLS termination happens within the cluster (for example, mTLS via a service mesh) and you expose services through VPC Lattice, then consider using a TLS listener (TLS passthrough). This makes sure that encrypted traffic, including client certificates, passes through Lattice without TLS being terminated in VPC Lattice.
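The health check parity consideration above lends itself to an automated comparison before any traffic is shifted. The sketch below compares two health check configurations field by field. The field names are assumed to follow the health check settings of a VPC Lattice target group, and the sample values are made up for illustration. In practice, the two dictionaries would be read from the target groups of the old and new clusters.

```python
# Fields compared for parity; names are assumed to mirror the target group
# health check configuration and should be checked against the API reference.
PARITY_FIELDS = (
    "protocol",
    "port",
    "path",
    "healthCheckIntervalSeconds",
    "healthCheckTimeoutSeconds",
    "healthyThresholdCount",
    "unhealthyThresholdCount",
    "matcher",
)


def health_check_diff(old, new):
    """Return {field: (old_value, new_value)} for every field that differs."""
    return {
        field: (old.get(field), new.get(field))
        for field in PARITY_FIELDS
        if old.get(field) != new.get(field)
    }


if __name__ == "__main__":
    # Made-up sample configurations for the two clusters.
    old_cfg = {"protocol": "HTTP", "port": 8080, "path": "/healthz",
               "matcher": {"httpCode": "200"}}
    new_cfg = {"protocol": "HTTP", "port": 8080, "path": "/health",
               "matcher": {"httpCode": "200"}}
    print(health_check_diff(old_cfg, new_cfg))
```

An empty result means the two configurations agree on every compared field. Any other result is worth resolving before the first weight change.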

VPC Lattice transforms Amazon EKS upgrades from disruptive infrastructure events into smooth, reversible workflows. Logical service names remain consistent across versions, so application teams can roll out changes without touching DNS or ELBs. Teams can use the built-in metrics, health checks, and traffic shifting to cut over confidently and roll back instantly if needed. Security policies remain uniform, and cluster operations no longer block feature delivery. The result is a streamlined, application-focused upgrade process that reduces operational risk and enhances developer agility.

Conclusion

In this post, we explored how Amazon VPC Lattice streamlines three modernization scenarios: in-place upgrades of three-tier web applications, migrations from Amazon EC2 to Amazon EKS, and controlled periodic EKS cluster upgrades. VPC Lattice provides a unified, infrastructure-agnostic service networking layer that abstracts traffic routing, security, and observability, which removes much of the operational complexity. As a result, teams can perform incremental upgrades and migrations with minimal disruption, consistent security, and real-time visibility. Ultimately, VPC Lattice helps organizations modernize their application architectures confidently, maintaining reliability and agility throughout their cloud evolution journey.