
AWS Direct Connect Layer 1 Explained: From Data Centers to Cloud Connectivity

In today’s cloud-first world, resilient connectivity between your on-premises infrastructure and AWS is critical to business success, and so is a solid understanding of how that connectivity is implemented. For many organizations, AWS Direct Connect serves as the primary connectivity solution. It operates across the first three layers of the Open Systems Interconnection (OSI) model, starting at the physical layer (Layer 1). In this post, we explore AWS Direct Connect through the lens of Layer 1 and examine the physical components and layout within AWS Direct Connect locations. You’ll learn about dedicated and hosted connection types and common implementation scenarios for establishing Direct Connect connectivity, along with best practices for Layer 1 configuration across the different Direct Connect resiliency recommendations. Finally, we’ll examine how Layers 2 and 3 build upon this physical foundation.

Recommended background

Let’s begin by understanding how a Direct Connect location’s connectivity and components fit together.

Direct Connect location connectivity and components

Direct Connect locations are colocation facilities strategically positioned around the world that serve as the physical meeting points between the AWS global infrastructure and your network. The Meet-Me Room (MMR) serves as the interconnection point, keeping Direct Connect endpoints physically separate from customer, partner, and provider networking equipment. Through structured cabling and patch panels in the MMR, high-speed fiber optic cross-connects are established between the AWS space and customer, partner, or provider space, enabling connectivity to AWS Regions and AWS Local Zones.

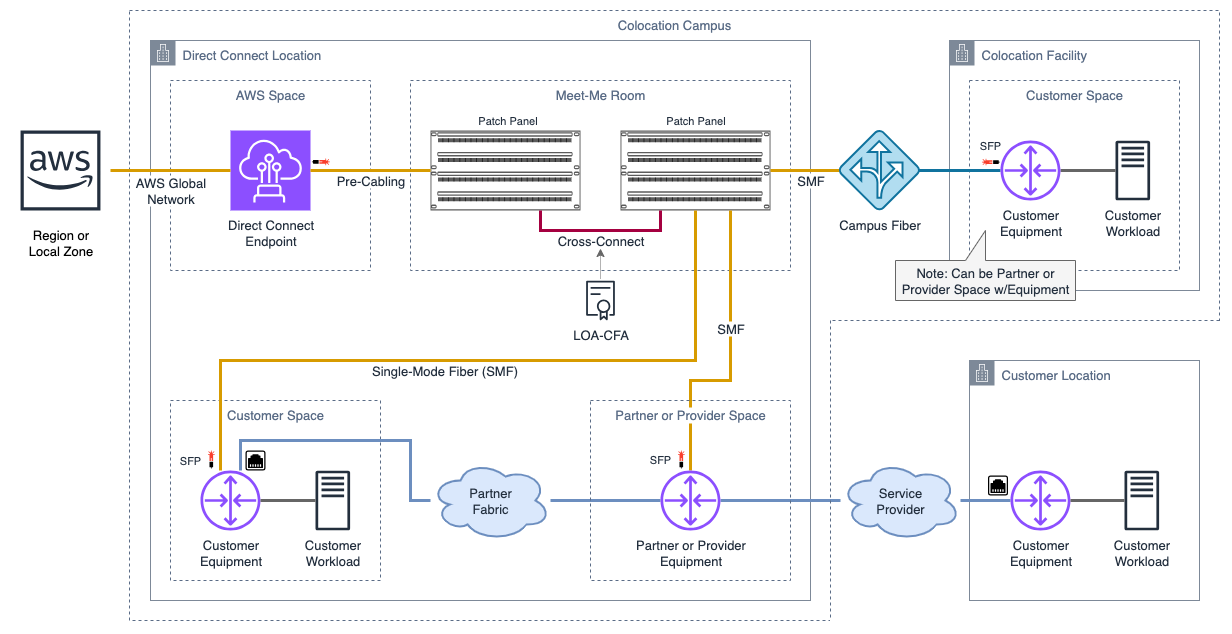

Figure 1. Direct Connect location physical connectivity layout

Components defined

- AWS Region: Physical location where AWS maintains multiple data centers, organized into Availability Zones.

- Direct Connect Location: Colocation facility, located outside of an AWS Region, where you can establish private network connections between your on-premises network and AWS services.

- AWS Global Network: A custom-built private network that interconnects Direct Connect locations and AWS Regions. It enables high-performance, secure, and reliable data transmission across the AWS global infrastructure.

- AWS Space: Secure area within a colocation facility where AWS installs and operates Direct Connect endpoints.

- Customer, Partner, or Provider Space: Area designated for customers, partners, or providers to install and operate networking equipment. This space can be in either the Direct Connect location or another colocation facility on the same campus managed by the colocation operator.

- Meet-Me Room (MMR): Shared space within a colocation facility where networks interconnect through patch panels. In Direct Connect locations, this shared space enables physical connections between Direct Connect endpoints and customer, partner, or provider networking equipment.

- Patch Panel: Hardware with multiple ports for organizing and managing cable connections. Within the MMR, patch panels provide demarcation points between the AWS space and customer, partner, or provider space, enabling structured management of cross-connects.

- Cross-Connect: Physical cable connection between patch panels in the MMR that connects a Direct Connect endpoint to customer or provider networking equipment. Campus fiber can extend the cross-connect to another campus colocation facility.

- Interconnect: Type of cross-connect between a Direct Connect endpoint and an AWS Direct Connect Delivery Partner’s networking equipment.

- Single-Mode Fiber (SMF): Type of optical fiber cable with a small core, designed for long-distance, high-speed data transmission. AWS Direct Connect requires SMF for all connections through the MMR to Direct Connect endpoints.

- Small Form-Factor Pluggable (SFP): Compact, hot-pluggable transceiver that converts electrical signals to light pulses for fiber optic transmission. AWS Direct Connect supports these SFP types:

- 1 Gbps: 1000BASE-LX (1310 nm)

- 10 Gbps: 10GBASE-LR (1310 nm)

- 100 Gbps: 100GBASE-LR4

- 400 Gbps: 400GBASE-LR4

- Letter of Authorization and Connecting Facility Assignment (LOA-CFA): Document provided by AWS that includes MMR information and port assignments. This authorizes the colocation operator to complete the cross-connect between the AWS space and customer, partner, or provider space.
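The port speed and optic pairings listed above can be captured in a small lookup table, for example to sanity-check an order before requesting a cross-connect. This is a minimal sketch: the names `SUPPORTED_OPTICS` and `required_optic` are hypothetical and not part of any AWS tooling, and the table contains only the four pairings given in this post.

```python
# Port speed (Gbps) -> optical standard, copied from the list in this post.
SUPPORTED_OPTICS = {
    1: "1000BASE-LX",
    10: "10GBASE-LR",
    100: "100GBASE-LR4",
    400: "400GBASE-LR4",
}


def required_optic(speed_gbps: int) -> str:
    """Return the transceiver standard for a given port speed (hypothetical helper)."""
    try:
        return SUPPORTED_OPTICS[speed_gbps]
    except KeyError:
        supported = ", ".join(f"{s} Gbps" for s in sorted(SUPPORTED_OPTICS))
        raise ValueError(
            f"{speed_gbps} Gbps is not a listed port speed; expected one of: {supported}"
        ) from None


if __name__ == "__main__":
    print(required_optic(10))
```

Raising an error for unlisted speeds, rather than returning a default, keeps a mistyped speed from silently mapping to the wrong optic.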

Now that we’ve covered the physical components, let’s look at real-world implementation scenarios.

Typical AWS Direct Connect implementations

Before diving in, it’s important to understand the two types of Direct Connect connections:

- Dedicated connection: A physical, single-tenant Ethernet connection directly provisioned by AWS, with fixed connection speeds up to 400 Gbps and advanced features.

- Hosted connection: A logical connection allocated by an AWS Direct Connect Delivery Partner, providing flexible bandwidth options and lower barrier to entry.

Choosing between dedicated and hosted connections depends on your specific requirements, such as bandwidth needs, security features, management preferences, setup time, and cost.

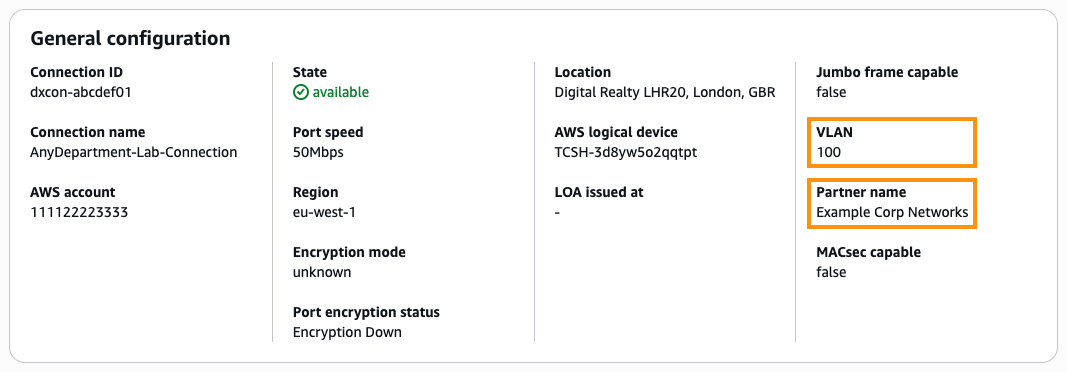

You can identify hosted connections by the presence of both a VLAN and Partner name using the AWS Direct Connect console or the DescribeConnections API.

Figure 2. AWS Direct Connect console hosted connection view
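The same check can be applied programmatically to a DescribeConnections response. The sketch below assumes a response shaped like the API output, with a `connections` list whose entries may carry `vlan` and `partnerName` fields. The helper names and the sample data are made up for illustration, and treating everything else as dedicated is a simplification of the rule stated above.

```python
from typing import Any


def is_hosted(connection: dict[str, Any]) -> bool:
    """Rule from this post: a hosted connection has both a VLAN and a partner name."""
    return connection.get("vlan") is not None and bool(connection.get("partnerName"))


def split_by_type(response: dict[str, Any]) -> dict[str, list[str]]:
    """Group connection IDs from a DescribeConnections-shaped response."""
    groups: dict[str, list[str]] = {"hosted": [], "dedicated": []}
    for conn in response.get("connections", []):
        key = "hosted" if is_hosted(conn) else "dedicated"
        groups[key].append(conn["connectionId"])
    return groups


# Made-up sample data. In practice the response could come from
# boto3.client("directconnect").describe_connections().
SAMPLE_RESPONSE = {
    "connections": [
        {"connectionId": "dxcon-example1", "bandwidth": "10Gbps"},
        {
            "connectionId": "dxcon-example2",
            "bandwidth": "500Mbps",
            "vlan": 101,
            "partnerName": "ExamplePartner",
        },
    ]
}

if __name__ == "__main__":
    print(split_by_type(SAMPLE_RESPONSE))
```

Working on the plain response dictionary keeps the logic testable without AWS credentials.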

Dedicated: Colocation cross-connect

If you have network infrastructure within a Direct Connect location or campus colocation facility, and need a connection speed of 1, 10, 100, or 400 Gbps with capabilities including link aggregation groups (LAGs) or MAC security (MACsec), you can establish connectivity to the MMR without any intermediary devices.

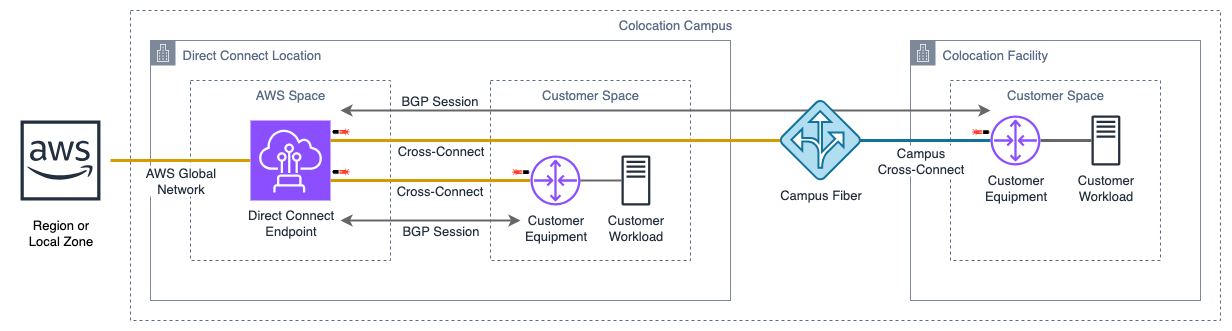

Figure 3. Dedicated connection with colocation cross-connect

Layer 1 connectivity

- Customer-owned cross-connect within MMR; extendable to another campus colocation facility using campus fiber

- Customer manages light levels and provides SMF cable with supported SFP optical transceiver

Layer 2 and 3 configuration

- Supports LAG and MACsec, and uses 802.1Q VLAN tags (1–4094); the learned MAC address belongs to the Direct Connect endpoint

- Customer establishes BFD and BGP sessions with Direct Connect endpoint

- Customer controls route advertisements, prioritization, and filtering

Operations

- Request connections via AWS Direct Connect console; send LOA-CFA to colocation operator to complete cross-connect

- All CloudWatch metrics and AWS fiber loop testing capabilities available

- Direct engagement with AWS Support

Dedicated: Network service provider (NSP) Layer 2 extension

If you don’t have network infrastructure within a Direct Connect location or campus colocation facility but need dedicated connection features, you can use an NSP’s network to extend Layer 2 connectivity from your location to the MMR, enabling the same capabilities as a local cross-connect without requiring presence at a Direct Connect location.

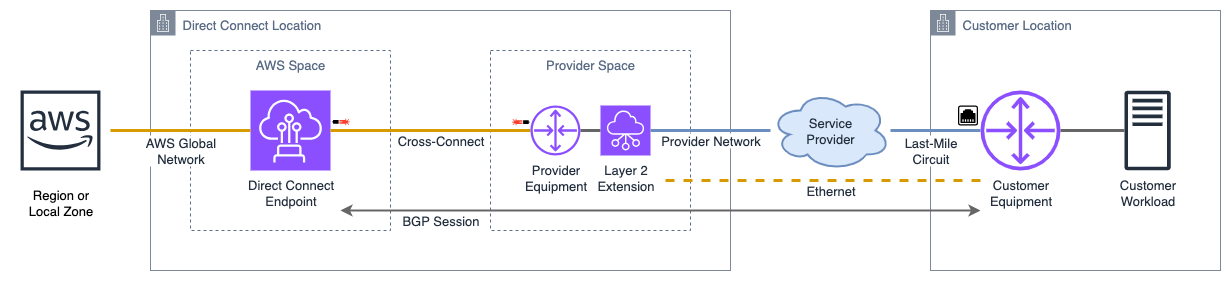

Figure 4. Dedicated connection with Layer 2 extension

Layer 1 connectivity

- NSP-owned cross-connect with managed light levels

- Flexible equipment placement within NSP’s last-mile coverage area

- Local port speeds typically match Direct Connect connection bandwidth, but may vary

Layer 2 and 3 configuration

- Identical capabilities to colocation cross-connect scenario, including LAG, MACsec, VLAN, BFD, and BGP configurations

Operations

- Request connections via AWS Direct Connect console; forward LOA-CFA to NSP for cross-connect setup

- All CloudWatch metrics and NSP-supported fiber loop testing available

- Contact NSP for support; engage AWS Support in parallel if necessary

Dedicated or Hosted: NSP Layer 3 managed

If you’re using an existing Layer 3 service like MPLS VPN to connect with remote sites, this fully managed option enables connectivity to AWS through your existing network, without requiring presence at a Direct Connect location or additional network configuration.

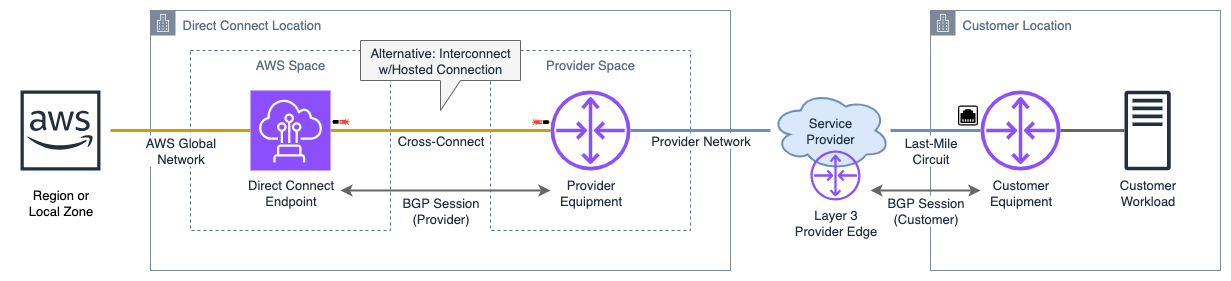

Figure 5. Dedicated or hosted connection with Layer 3 network

Layer 1 connectivity

- NSP-owned cross-connect and managed network infrastructure connecting customer locations across their network

- Last-mile circuit bandwidth often differs from Direct Connect connection bandwidth

Layer 2 and 3 configuration

- NSP establishes Direct Connect BFD and BGP sessions; customer configures BGP with the provider edge

- NSP selects VLAN; the learned MAC address belongs to the NSP’s networking equipment

Operations

- Dedicated connections requested via AWS Direct Connect console; hosted connections allocated by partner and accepted in console

- All CloudWatch metrics available for dedicated connections; only the ConnectionState metric for hosted connections

- Open tickets with NSP, who then engages AWS Support

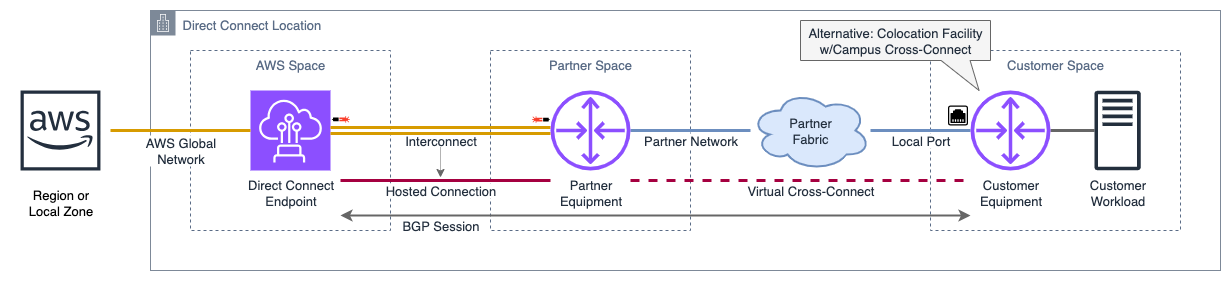

Hosted: AWS Direct Connect Delivery Partner fabric

This option provides scalable bandwidth choices, including sub-gigabit speeds, through an AWS Direct Connect Delivery Partner. It enables on-demand provisioning when you’re already connected to the partner’s network fabric, either at a Direct Connect location or from a remote data center. Additionally, it offers access to an ecosystem of third-party networks and services providers.

Figure 6. Hosted connection from AWS Direct Connect Delivery Partner fabric

Layer 1 connectivity

- Partner-managed interconnect enabling dynamic network device placement in their fabric

- Supports sub-gigabit connection bandwidth with flexible fabric port speeds

Layer 2 and 3 configuration

- Customer selects VLAN configuration type; the learned MAC address belongs to the Direct Connect endpoint

- Customer establishes BFD and BGP sessions with Direct Connect endpoint and controls routing

Operations

- Connections allocated by partner and accepted via AWS Direct Connect console

- ConnectionState metric available in CloudWatch

- Submit support requests to partner, who utilizes established AWS escalation channels

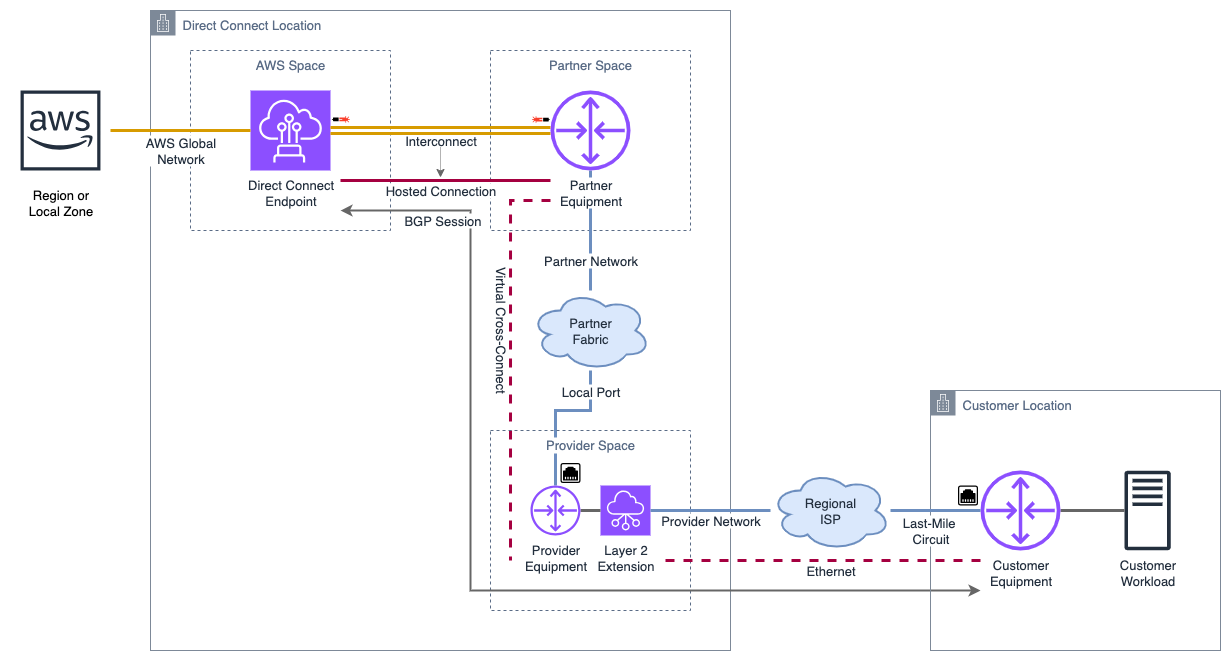

Hosted: Partner-Provider combined connectivity

Connectivity to AWS is possible even when your last-mile or regional Internet service provider (ISP) isn’t an AWS Partner. In this scenario, your ISP collaborates with an established AWS Direct Connect Delivery Partner who maintains the physical infrastructure connecting to AWS. Your regional ISP leverages this setup to transparently deliver connectivity and services to you. This approach allows you to utilize AWS Direct Connect while maintaining your existing network provider relationships.

Figure 7. Regional ISP with combined partner and provider connectivity

Network architecture and implementation

- Direct Connect partner manages Layer 1 connectivity; ISP delivers service through partner infrastructure

- Implementation follows either Layer 2 extension or Layer 3 managed models based on ISP offering

- Regional ISP serves as intermediary, enabling AWS Direct Connect benefits while maintaining existing ISP relationship

Operations

- Connections allocated by partner upon ISP’s request; customer accepts via AWS Direct Connect console

- Configure CloudWatch Network Synthetic Monitor to test connectivity and performance across provider boundaries

- Raise issues through ISP, who coordinates resolution across provider, partner, and AWS
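The five scenarios above can be read as a rough decision sequence. The sketch below encodes one possible ordering of those questions. The `Requirements` fields and the `suggest_scenario` function are hypothetical, and the priority order (dedicated features first, then existing Layer 3 service, then partner fabric, then non-partner ISP) is an assumption made for illustration, not AWS guidance.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical inputs; field names are illustrative only."""
    in_dx_location_or_campus: bool = False  # equipment in the Direct Connect location or campus
    needs_dedicated_features: bool = False  # e.g. LAG, MACsec, or 1/10/100/400 Gbps ports
    has_nsp_layer3_service: bool = False    # e.g. an existing MPLS VPN
    on_partner_fabric: bool = False         # already connected to a Delivery Partner fabric
    isp_is_aws_partner: bool = True


def suggest_scenario(req: Requirements) -> str:
    """Map requirements to one of the scenario headings in this post (assumed ordering)."""
    if req.needs_dedicated_features:
        if req.in_dx_location_or_campus:
            return "Dedicated: Colocation cross-connect"
        return "Dedicated: NSP Layer 2 extension"
    if req.has_nsp_layer3_service:
        return "Dedicated or Hosted: NSP Layer 3 managed"
    if req.on_partner_fabric:
        return "Hosted: AWS Direct Connect Delivery Partner fabric"
    if not req.isp_is_aws_partner:
        return "Hosted: Partner-Provider combined connectivity"
    return "No single match; review the scenarios against your requirements"


if __name__ == "__main__":
    print(suggest_scenario(Requirements(needs_dedicated_features=True)))
```

Real selections also weigh setup time, cost, and management preferences, which this sketch deliberately leaves out.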

Prior to discussing physical layer resilience, let’s cover considerations for connecting to AWS environments beyond standard Regions.

Considerations for AWS Local Zones, GovCloud (US), and China Regions

AWS Local Zones interoperate with AWS Direct Connect analogously to AWS Regions; review implementation patterns in this dedicated blog post. While AWS GovCloud (US) and AWS China Regions require additional considerations as they operate in separate AWS partitions, the Layer 1 concepts remain similar to standard AWS Regions. For GovCloud (US), you can share Direct Connect connectivity across GovCloud and commercial Regions, as detailed in this hybrid connectivity patterns blog post. For China Regions, consult the dedicated blog post about cross-border VPC connectivity solutions.

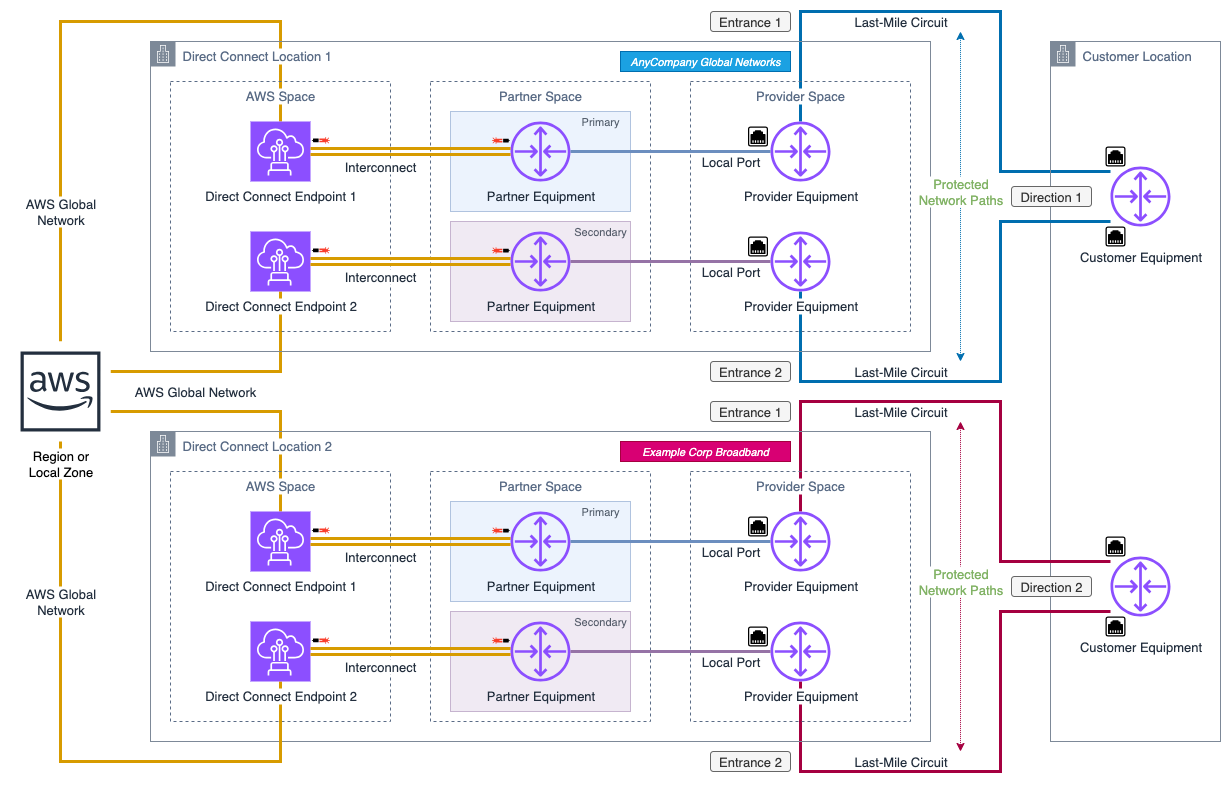

Understanding AWS Direct Connect resiliency at the physical layer

Physical layer resilience forms the foundation of reliable network connectivity, as failures at this layer can render higher layer redundancy mechanisms ineffective. AWS Direct Connect defines three resiliency recommendations; consider the following Layer 1 configurations for each:

- Maximum Resiliency: Two Direct Connect locations, multiple connections through different providers, protected circuits with diverse physical paths, and separate building entries.

- High Resiliency: Two Direct Connect locations, dual connections through the same provider, protected circuits, and diverse physical paths where possible.

- Single-Site Redundant: One Direct Connect location with dual connections through the same provider. Suitable for non-production workloads.

Choose the appropriate model and configurations based on your business availability requirements. AWS Direct Connect offers service level agreements (SLAs) with availability commitments of up to 99.99% on dedicated connections. For more information, see the AWS Direct Connect SLA.
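As a rough illustration, the location and provider criteria in the three recommendations can be checked against an inventory of connections. The sketch below follows only the counts described in this post. It does not verify protected circuits, diverse physical paths, or separate building entries, so its result is a starting point rather than a verdict. The `Connection` type, the function name, and the example location codes are hypothetical.

```python
from typing import NamedTuple


class Connection(NamedTuple):
    location: str  # Direct Connect location identifier (example values are made up)
    provider: str  # provider delivering the circuit or cross-connect


def layer1_resiliency(connections: list[Connection]) -> str:
    """Classify an inventory by the location and provider counts described in this post."""
    if len(connections) < 2:
        return "Not redundant"
    locations = {c.location for c in connections}
    providers = {c.provider for c in connections}
    if len(locations) >= 2 and len(providers) >= 2:
        return "Maximum Resiliency candidate"
    if len(locations) >= 2:
        return "High Resiliency candidate"
    return "Single-Site Redundant"


if __name__ == "__main__":
    inventory = [Connection("LOC-A", "ProviderX"), Connection("LOC-B", "ProviderY")]
    print(layer1_resiliency(inventory))
```

The word "candidate" is intentional: meeting the counts is necessary under these descriptions, but path diversity still has to be confirmed separately.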

Direct Connect colocation connectivity

Having your network infrastructure within a Direct Connect location or campus colocation facility (Figure 3) provides the most straightforward path for establishing physical redundancy. Implement dual physical cross-connects traversing different physical paths within the colocation facility or across the campus to separate Direct Connect endpoints. Deploy separate physical network devices and use diverse power sources.

NSP and ISP configurations

When your network infrastructure is outside of a Direct Connect location or campus colocation facility, implementing last-mile connectivity through NSPs (Figures 4 and 5) requires careful attention to physical layer resilience. For maximum resiliency, contract with different last-mile providers using multiple protected circuits with diverse physical paths to separate Direct Connect locations. Ensure different physical entrance points to your facility are used. Providers can deliver this connectivity through various methods, from Carrier Ethernet services (such as EPL and EVPL) with customer-controlled network devices to MPLS VPN with provider-managed network devices. Request detailed fiber maps to verify diverse end-to-end paths and avoid common points of failure.
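Once you have fiber maps, checking for common points of failure comes down to comparing the elements each circuit traverses. The sketch below assumes you have transcribed each circuit's path into a set of labels (building entries, conduits, provider sites). The labels and the function name are hypothetical, and real fiber maps will need manual interpretation before they fit this form.

```python
from itertools import combinations


def shared_elements(circuits: dict[str, set[str]]) -> dict[tuple[str, str], set[str]]:
    """For each pair of circuits, return the path elements they have in common."""
    overlaps: dict[tuple[str, str], set[str]] = {}
    for name_a, name_b in combinations(sorted(circuits), 2):
        common = circuits[name_a] & circuits[name_b]
        if common:
            overlaps[(name_a, name_b)] = common
    return overlaps


if __name__ == "__main__":
    # Made-up example: both circuits run through the same conduit.
    paths = {
        "circuit-a": {"entry-north", "conduit-12", "pop-east"},
        "circuit-b": {"entry-south", "conduit-12", "pop-west"},
    }
    print(shared_elements(paths))
```

An empty result means no shared labels were found, which is only as trustworthy as the level of detail in the maps the provider supplied.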

Partner-enabled connectivity options

Direct Connect partners offer another path to AWS (Figure 6), particularly valuable when you need managed services or customized connection options. Consult the AWS Direct Connect Delivery Partners and Locations matrix to validate available speeds and services. Apply the same physical resilience considerations as NSP implementations. While partners handle much of the physical infrastructure, request documentation detailing their redundancy best practices. Approaches vary from diverse chassis groups to separate physical paths, often identified through color-coding schemes.

Figure 8. Direct Connect maximum resiliency Layer 1 configuration

Monitoring and additional best practices

Implement monitoring across your network infrastructure to identify performance issues and enable rapid resolution. At Layer 1, track optical power levels and error rates. Monitor Layer 2 metrics including interface status, utilization, latency, and packet loss. For Layer 3, observe BGP session status, route stability, and prefix advertisements. Additionally, expand monitoring to the application layer with network observability for modern applications.
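For the Layer 1 part of that monitoring, optical power is usually reported in dBm, where dBm = 10 × log10(power in mW). The sketch below converts a reading and classifies it against a supported receive range. The function names, the 2 dB warning margin, and the example range are assumptions for illustration; take the actual limits from your transceiver's datasheet.

```python
import math


def mw_to_dbm(power_mw: float) -> float:
    """Convert optical power from milliwatts to dBm."""
    if power_mw <= 0:
        raise ValueError("power must be positive to express in dBm")
    return 10 * math.log10(power_mw)


def rx_power_status(
    rx_dbm: float, low_dbm: float, high_dbm: float, margin_db: float = 2.0
) -> str:
    """Classify a receive power reading against a supported range (limits are caller-supplied)."""
    if rx_dbm < low_dbm or rx_dbm > high_dbm:
        return "out-of-range"
    if rx_dbm < low_dbm + margin_db or rx_dbm > high_dbm - margin_db:
        return "warning"
    return "ok"


if __name__ == "__main__":
    # Placeholder range, not a vendor specification.
    LOW_DBM, HIGH_DBM = -14.0, 0.0
    reading = mw_to_dbm(0.25)
    print(round(reading, 2), rx_power_status(reading, LOW_DBM, HIGH_DBM))
```

A warning band inside the hard limits gives you time to clean connectors or replace optics before the link actually drops.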

When selecting Direct Connect locations, choose the two that are geographically closest to your on-premises environment and directionally toward your target AWS Regions. For active-active configurations, ensure sufficient capacity to handle full production load during failure scenarios. When budget constraints exist, consider adding AWS Site-to-Site VPN alongside AWS Direct Connect for basic redundancy, noting the 1.25 Gbps per-tunnel throughput limitation.
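The active-active capacity rule is simple arithmetic. For example, two 10 Gbps connections each running at 60% carry 12 Gbps in total, which a single surviving 10 Gbps connection cannot absorb. The sketch below checks that condition for an arbitrary set of links. The function names are hypothetical, and the worst case is modeled by assuming the largest links fail first.

```python
VPN_TUNNEL_GBPS = 1.25  # per-tunnel figure quoted in this post


def remaining_capacity(link_gbps: list[float], failed_links: int = 1) -> float:
    """Capacity left after the largest `failed_links` links fail (worst case)."""
    keep = max(len(link_gbps) - failed_links, 0)
    return sum(sorted(link_gbps)[:keep])


def survives_failure(
    link_gbps: list[float], peak_load_gbps: float, failed_links: int = 1
) -> bool:
    """True if the surviving links can still carry the peak production load."""
    return remaining_capacity(link_gbps, failed_links) >= peak_load_gbps


if __name__ == "__main__":
    print(survives_failure([10, 10], peak_load_gbps=12))  # False: 10 < 12
    print(survives_failure([10, 10], peak_load_gbps=8))   # True: 10 >= 8
    # A single VPN tunnel as the only remaining path, for a 2 Gbps load:
    print(survives_failure([VPN_TUNNEL_GBPS], peak_load_gbps=2, failed_links=0))  # False
```

The last call shows why a VPN backup suits only basic redundancy: a single tunnel covers a small fraction of a multi-gigabit Direct Connect load.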

Conclusion

Understanding AWS Direct Connect’s physical (Layer 1) characteristics is fundamental to establishing reliable cloud connectivity between your on-premises and AWS environments. We’ve explored the components, implementation scenarios, and considerations for building resilient Direct Connect architectures. This knowledge enables you to make informed decisions about your connectivity strategy, whether you’re implementing straightforward colocation cross-connects or complex partner-provider solutions. By applying these Layer 1 concepts and best practices, you can build robust hybrid network architectures that meet your business requirements.

If you have questions about this post, start a new thread on AWS re:Post or contact AWS Support.