Tackling large mainframe portfolios with Agentic AI and AWS Transform

Many enterprises have relied on the mainframe as their platform of choice for core business processing for decades. The simplicity and speed of initial development in a monolithic architecture have resulted in large (10M+ LOC) and exceptionally large (100M+ LOC) mono-repos and complex codebases. When customers are ready to modernize these applications, a good starting point is dead code elimination: analyze activity metrics to find unused transactions and jobs, and remediate duplicate code. Once this preliminary elimination and remediation is complete, start rationalizing these monoliths into domains (functional or technical). These domains can then be modernized based on priority, complexity, or coupling, rather than tackling the entire codebase at once. This is similar to an agile software development approach, which breaks a large solution into manageable feature chunks (here, rationalizing a large portfolio into domains, preferably business domains). Each feature chunk is built iteratively (modernizing each domain) or shipped in parallel (migrating multiple domains in waves). Refer to the Glossary section for definitions.
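
To make the dead code step concrete, here is a minimal Python sketch. It assumes you have already exported an inventory of transaction and job names, plus execution counts gathered over an observation window (for example, from activity metrics such as SMF records). The function names and input shapes are hypothetical and are not part of AWS Transform. The sketch flags zero-activity components as retirement candidates and finds exact duplicate source members by hashing whitespace-normalized text.

```python
import hashlib
from collections import defaultdict

def find_unused(inventory, execution_counts):
    """Return components with no recorded executions in the observation window.

    inventory: iterable of transaction IDs / job names (hypothetical export)
    execution_counts: dict mapping name -> executions observed in the window
    """
    return sorted(name for name in set(inventory) if execution_counts.get(name, 0) == 0)

def _fingerprint(source: str) -> str:
    # Collapse all runs of whitespace so formatting-only differences do not hide duplicates.
    normalized = " ".join(source.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_duplicates(sources):
    """Group member names whose whitespace-normalized source text is identical.

    sources: dict mapping member name -> source text
    Returns a sorted list of sorted name groups that have two or more members.
    """
    by_hash = defaultdict(list)
    for name, text in sources.items():
        by_hash[_fingerprint(text)].append(name)
    return sorted(sorted(names) for names in by_hash.values() if len(names) > 1)
```

Zero activity in a window does not prove code is dead (quarter-end or year-end jobs may simply not have run yet), so treat the output as candidates for SME review. The sketch also does not strip fixed-format COBOL sequence-number columns, which would defeat exact matching if they differ.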

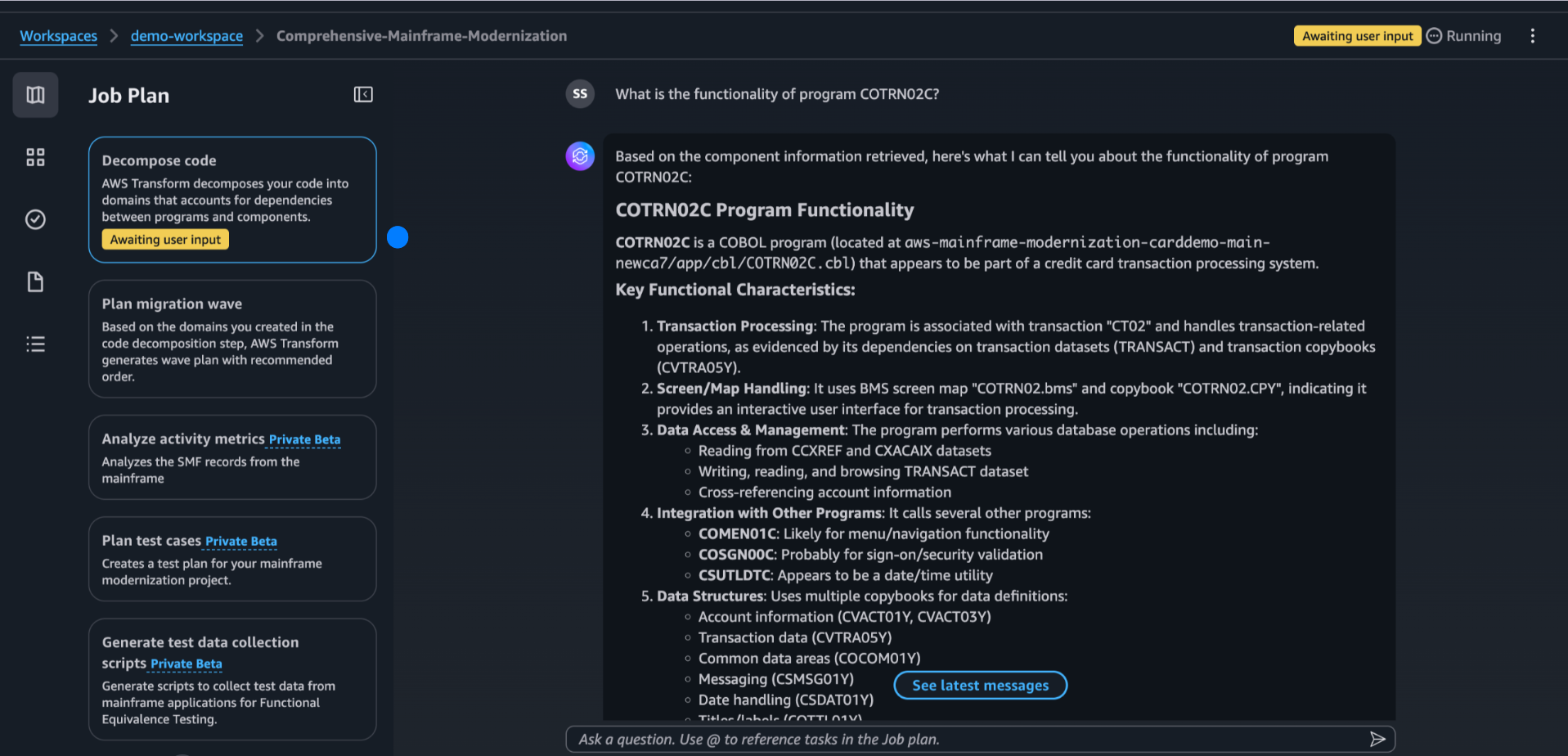

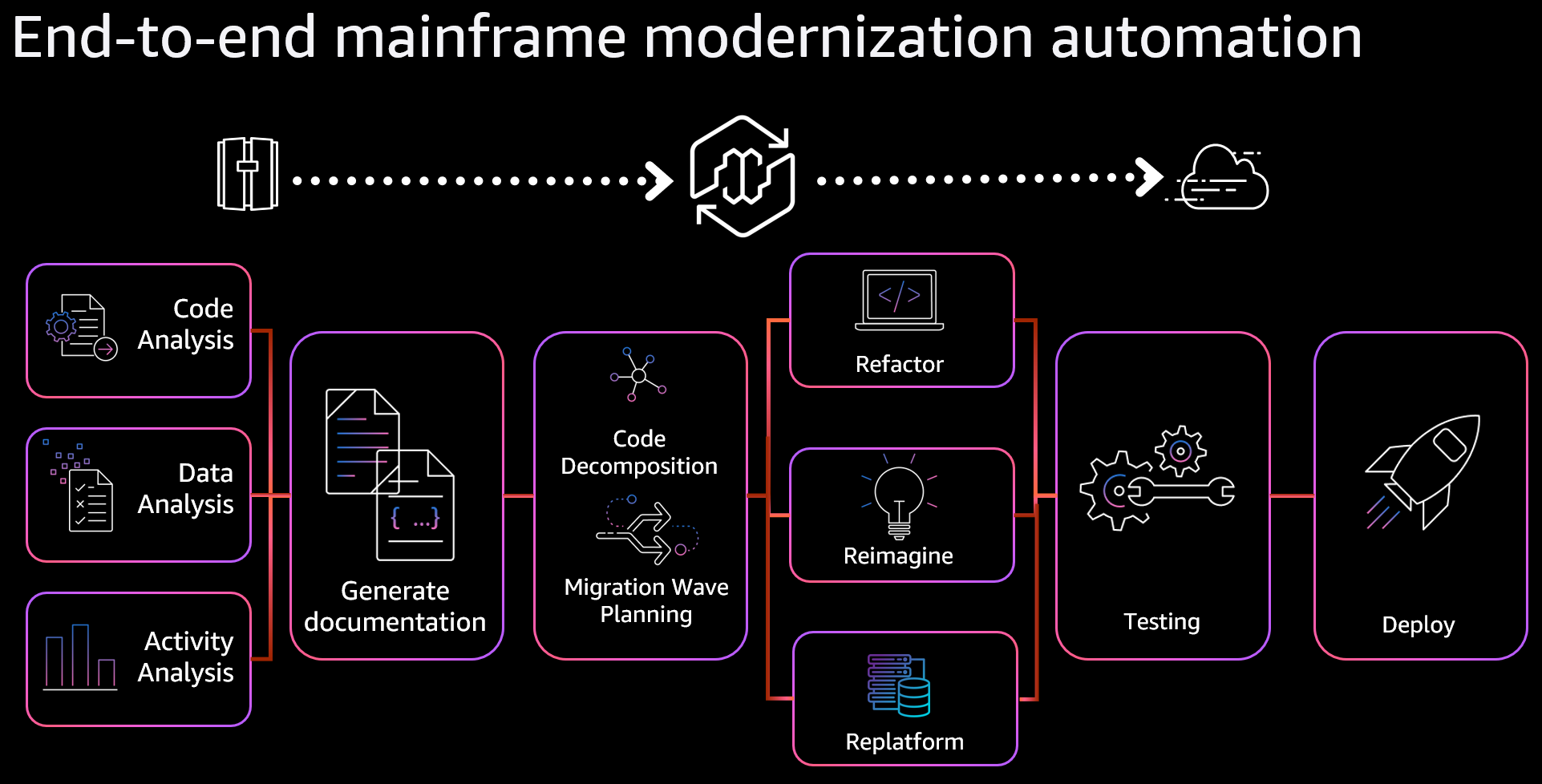

Code assessment and rationalization are imperative first steps in mainframe application modernization. They provide a comprehensive understanding of the existing system’s complexity, dependencies, and risks. This understanding is essential for creating an accurate modernization roadmap, minimizing errors, and making informed decisions about disposition strategies for your business applications. AWS Transform for mainframe is a service that accelerates the modernization of large IBM z/OS applications using AI-powered agents. AWS Transform uses a combination of proven deterministic tools, foundation models (FMs), large language models (LLMs), graph neural networks (GNNs), automated reasoning (AR), and AI infrastructure to deliver an entirely new migration and modernization experience.

When approaching large codebases (10M+ lines of code), a structured decomposition strategy is essential to manage complexity effectively. Common strategies include decomposing a large application portfolio into applications, decomposing into agile services for macro-service or microservice extraction, and decomposing into work packages to create a project work breakdown structure. The AWS Transform modernization workflow follows a systematic approach, beginning with comprehensive code analysis to understand complexity, homonyms, missing files, and dependencies. This initial discovery phase helps identify logical boundaries for decomposition.

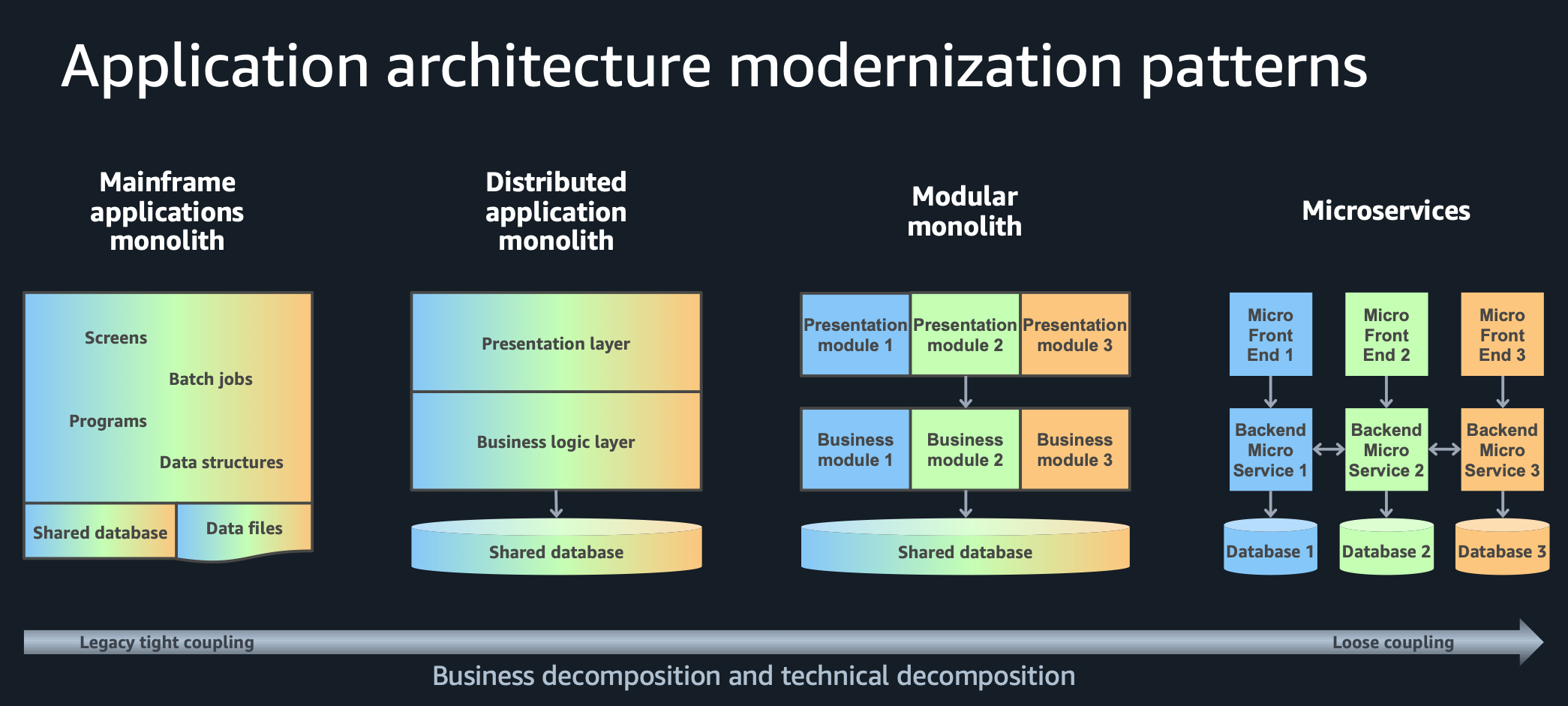

Within a single job, AWS Transform supports breaking monolithic applications into manageable domains through its decomposition and wave planning capabilities. The service can automatically analyze relationships between components to suggest logical boundaries for modernization waves. AWS Transform is designed for focused modernization projects with a clear scope, and it works best when you already know which applications and business domains to modernize. The key is to group related business domains, functions, and their dependencies together as cohesive units, which keeps interconnected parts together during analysis and dependency mapping. The recommended domain size varies depending on whether the decomposition targets a full application, a macro-service, a microservice, or a work package, as illustrated in Figure 1.

Figure 1: Domain (business or technical) decomposition and modernization patterns

You may now be wondering: how should you decompose a large mainframe codebase?

Breaking large codebases into smaller chunks using heuristics

There are many ways to break down these large codebases, and no single approach fits every organization. Choose what works best for yours.

1. Subject matter expert (SME) knowledge

This approach relies on the deep expertise of individuals who understand the business domains, functions, naming conventions, deprecated or unused source code, and operational patterns embedded within mainframe applications. Teams familiar with how components are organized can break the codebase into smaller groups, analyze each group using AWS Transform, and decompose further as needed. However, many mainframe customers no longer have this tribal knowledge and may not know which components belong to which applications.

2. Systematic naming convention

Large enterprises with a portfolio of mainframe applications often use predefined, systematic naming conventions, typically aligned to a line of business or a specific business application domain.

a. By business capability or sub-domain

Mainframe application naming conventions often reflect the organizational hierarchy or line of business (LOB) alignment, embedding in the name structure which business unit or division owns the application. For example, a financial services company might prefix application libraries with identifiers like “HLQ.RETAIL.PROD.SRCLIB” or “HLQ.COMRCIAL.PROD.PROCLIB” to denote the specific line of business. Read more about decomposition using business capability. Naming conventions can go further and encode business units or subdomains: some mainframe customers group source code by business capability and then by subdomain. For example, Human Resources (HR) may have payroll and benefits as subdomains:

- HLQ.HR.PAYROLL.COBOL.SRC (Source code for payroll)

- HLQ.HR.PAYROLL.JCL.CNTL (JCL for payroll jobs)

- HLQ.HR.BENEFITS.COPYLIB (Copybooks for benefits applications)

Finance (FIN) may have accounts, general ledger, and budget as subdomains:

- HLQ.FIN.ACCOUNTS.REC.SRC (Source code for accounts receivable)

- HLQ.FIN.LEDGER.JCL (JCL for general ledger processing)

- HLQ.FIN.BUDGET.LOADLIB (Load modules for budgeting applications)

Read more about decomposition using sub-domain.
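
A first pass over a dataset inventory can be automated when the convention is consistent. The minimal Python sketch below assumes the layout shown above (high-level qualifier, then business capability, then subdomain); the function name and default qualifier positions are hypothetical and should be adjusted to your site’s naming standard.

```python
from collections import defaultdict

def group_by_qualifiers(dataset_names, hlq="HLQ", capability_pos=1, subdomain_pos=2):
    """Group dataset names by (business capability, subdomain) qualifiers.

    The qualifier positions are site-specific assumptions, not a z/OS rule.
    """
    groups = defaultdict(list)
    for name in dataset_names:
        qualifiers = name.strip().upper().split(".")
        if qualifiers[0] != hlq.upper() or len(qualifiers) <= max(capability_pos, subdomain_pos):
            # Names that do not follow the convention are set aside for manual review.
            groups[("UNASSIGNED", "UNASSIGNED")].append(name)
            continue
        groups[(qualifiers[capability_pos], qualifiers[subdomain_pos])].append(name)
    return dict(groups)
```

For a layout such as “HLQ.RETAIL.PROD.SRCLIB”, where the third qualifier is an environment rather than a subdomain, you would group on the capability qualifier alone or point `subdomain_pos` at a different position.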

b. Using module prefixes or suffixes

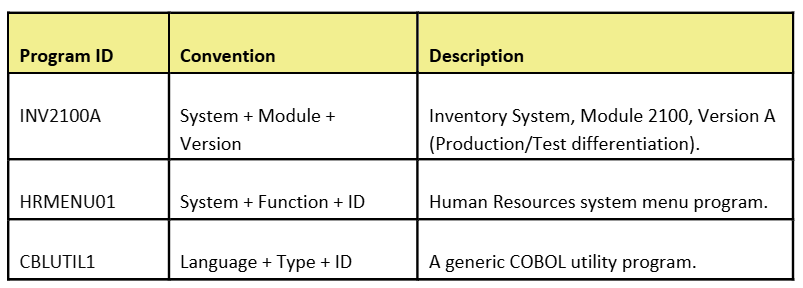

Program names in COBOL are defined in the PROGRAM-ID paragraph of the IDENTIFICATION DIVISION. On the mainframe, program (load module) names are limited to eight characters. Within this limit, mainframe customers use the available characters deliberately to identify which system a program belongs to and what it does. For example, in a pattern such as XXYYYYZZ, XX, YYYY, and ZZ each denote a different grouping. Because COBOL program IDs are short, they are often encoded with system identifiers.

Table 1: Sample mainframe application components and their descriptions
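
Once a site’s pattern is known, splitting program names into their segments is straightforward. The minimal Python sketch below assumes the XXYYYYZZ pattern described above; the segment meanings in the comments and the example prefixes (such as “PY” for payroll) are hypothetical illustrations, not a standard.

```python
from collections import defaultdict
from typing import NamedTuple

class ProgramIdParts(NamedTuple):
    system: str    # XX   (positions 1-2), e.g. owning application (assumed meaning)
    function: str  # YYYY (positions 3-6), e.g. business function (assumed meaning)
    variant: str   # ZZ   (positions 7-8), e.g. sequence or program type (assumed meaning)

def parse_program_id(program_id: str) -> ProgramIdParts:
    """Split a program name of up to eight characters using the XXYYYYZZ pattern."""
    pid = program_id.strip().upper()
    if not 1 <= len(pid) <= 8:
        raise ValueError(f"program name must be 1-8 characters: {program_id!r}")
    padded = pid.ljust(8)  # shorter names leave trailing segments empty
    return ProgramIdParts(padded[0:2].strip(), padded[2:6].strip(), padded[6:8].strip())

def group_by_system(program_ids):
    """Map each XX system prefix to the sorted program names that carry it."""
    groups = defaultdict(list)
    for pid in program_ids:
        groups[parse_program_id(pid).system].append(pid.strip().upper())
    return {system: sorted(names) for system, names in groups.items()}
```

For example, `group_by_system(["PYREPT02", "GLPOST01", "PYCALC01"])` yields one group for the `PY` prefix and one for `GL`, which you could then cross-check against Table 1 style descriptions.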

c. Using other application characteristics

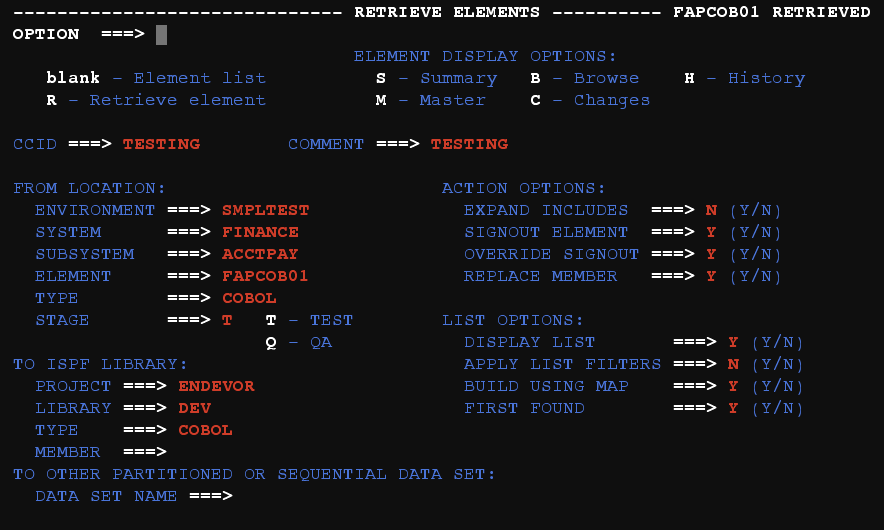

Mainframe systems contain rich contextual information that reveals how components naturally group together. This includes accounting data on JCL JOB cards and COBOL program comments indicating purpose or line of business (LOB) ownership. Source code management tools such as SCLM, ChangeMan, and Endevor also expose component relationships through their promotion groupings.

Figure 2: Source code management tools on mainframe, depicting components (element) within a business domain (system) and respective application (subsystem)
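
JOB card accounting data is one of the easier signals to harvest. The minimal Python sketch below assumes, hypothetically, that a site records the owning LOB as the first sub-parameter of the parenthesized accounting information on single-line JOB statements. It does not handle continuation lines, unparenthesized accounting values, or quoted values containing commas.

```python
import re
from collections import defaultdict

# Matches "//JOBNAME JOB (accounting-info)..." on one JCL line.
# Job names are 1-8 characters starting with a letter or @, #, $.
JOB_CARD = re.compile(
    r"^//(?P<jobname>[A-Z@#$][A-Z0-9@#$]{0,7})\s+JOB\s+\((?P<acct>[^)]*)\)"
)

def group_jobs_by_account(jcl_lines):
    """Group job names by the first field of their JOB card accounting data."""
    groups = defaultdict(list)
    for line in jcl_lines:
        match = JOB_CARD.match(line.upper())
        if match:
            # Assumption: the first comma-separated field identifies the owner.
            account = match.group("acct").split(",")[0].strip()
            groups[account].append(match.group("jobname"))
    return dict(groups)
```

Non-JOB statements such as `EXEC` steps are ignored, so you can feed whole JCL members through the function.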

d. By modality (batch/online) or transaction manager (CICS/IMS)

Grouping components by business domain and separating online from batch functions helps define a workable scope. When you split along online and batch lines, any integration between the two modalities happens at the data layer. You can also separate and group components by transaction monitor (CICS or IMS TM).
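
A rough modality split can be derived from the source itself. The minimal Python sketch below is a heuristic, not an AWS Transform feature: it labels a program as CICS if it contains `EXEC CICS`, as IMS if it contains DL/I markers (`EXEC DLI`, `CBLTDLI`, or the `DLITCBL` entry point), and as batch otherwise. DL/I calls appear in both IMS TM and IMS batch programs, so separating those still requires PSB and transaction definitions. Comment lines are not stripped, so review the results.

```python
import re
from collections import defaultdict

CICS_MARKER = re.compile(r"\bEXEC\s+CICS\b")
IMS_MARKER = re.compile(r"\bEXEC\s+DLI\b|\bCBLTDLI\b|\bDLITCBL\b")

def classify_modality(cobol_source: str) -> str:
    """Label a COBOL program as CICS, IMS (DL/I), or BATCH from its source text."""
    text = cobol_source.upper()
    if CICS_MARKER.search(text):  # checked first: CICS programs may also use EXEC DLI
        return "CICS"
    if IMS_MARKER.search(text):
        return "IMS"
    return "BATCH"

def group_by_modality(sources):
    """Map each modality label to the sorted program names that carry it.

    sources: dict mapping program name -> COBOL source text
    """
    groups = defaultdict(list)
    for name, text in sources.items():
        groups[classify_modality(text)].append(name)
    return {label: sorted(names) for label, names in groups.items()}
```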

3. Using partner tooling for portfolio assessment

When SME knowledge is unavailable, naming conventions and other heuristics can help you break down large codebases. Partner tooling is another alternative: partner tools let you map your mainframe portfolio, may support larger LOC limits, provide advanced reporting and metrics, and can save SMEs time. However, these tools typically require upfront licensing costs and professional services for the assessment.

Refining the code base grouping with AWS Transform

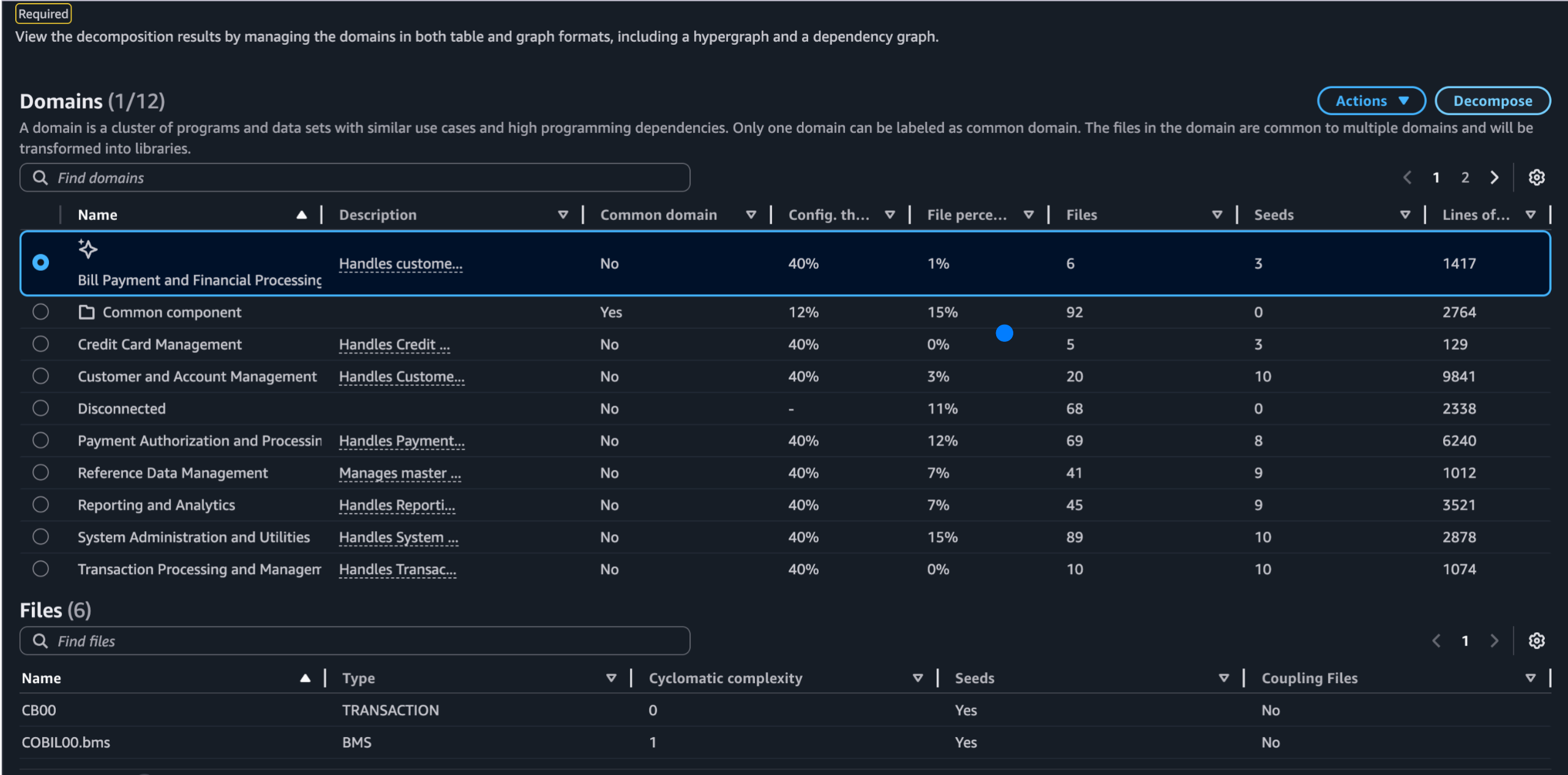

After breaking the large monolith into manageable chunks, review and resolve missing dependencies and address codebase issues before proceeding. Even then, each chunk may still contain millions of lines of code (LOC). Regardless of your modernization pattern, migrating millions of LOC at once is generally not recommended. To further decompose the codebase into well-defined business domains, you can use either the AWS Transform dependency graph artifact or business logic extraction (BLE) for domain decomposition.
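
Before uploading a chunk, a quick pre-check for missing dependencies can save a round trip. The minimal Python sketch below assumes you already have, from your own scanning, a map of each component to the names it references (CALL targets, COPY members, PGM= names); the function and input shapes are hypothetical.

```python
def find_missing_dependencies(references, inventory):
    """Report referenced components that are absent from the chunk's inventory.

    references: dict mapping component -> set of referenced component names
    inventory:  set of component names actually present in the chunk
    Returns {missing_name: sorted list of components that reference it}.
    """
    missing = {}
    for component, targets in references.items():
        for target in targets:
            if target not in inventory:
                missing.setdefault(target, []).append(component)
    return {name: sorted(users) for name, users in sorted(missing.items())}
```

System-supplied modules (for example, IBM utilities invoked from JCL) will also show up as missing, so filter them out before treating the result as a to-do list.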

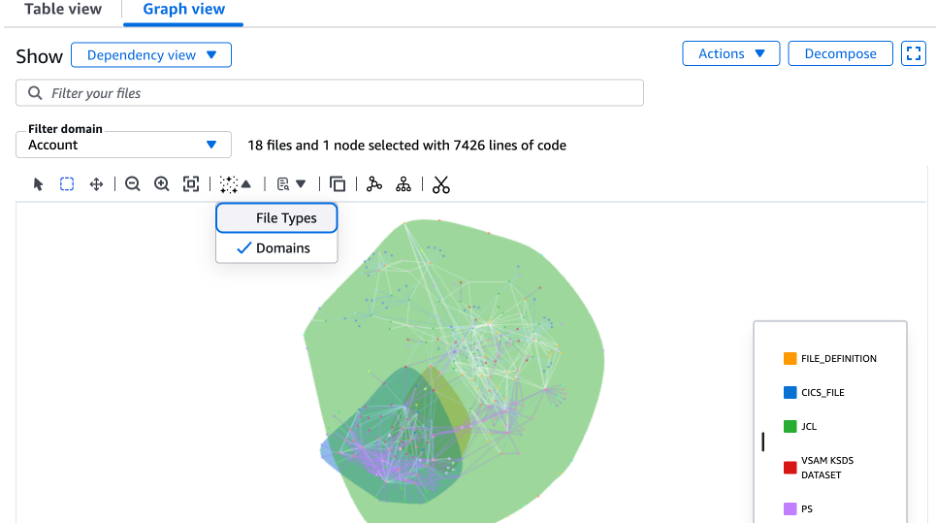

1/ The dependency graph artifact lets you manually create focused domains from legacy mainframe applications. AWS Transform lets you select ‘seeds’, or entry points, for these domains, and then automatically identifies related components through dependency analysis or semantic similarity. You can customize the automatic domain expansion by choosing the entry points, selectively including components, exploring component relationships, and creating or adjusting domains. This approach lets organizations create targeted domains for application sub-functions while maintaining precise boundary control and full decision authority over the decomposition process, as illustrated in Figure 3.

Figure 3: Understand component relationships by analyzing interdomain dependencies
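
Interdomain dependencies can also be summarized outside the console once you have a candidate domain assignment. The minimal Python sketch below assumes a list of (caller, callee) edges and a component-to-domain map, both hypothetical inputs; it counts only edges that cross domain boundaries.

```python
from collections import Counter

def interdomain_dependencies(edges, domain_of):
    """Count dependency edges that cross domain boundaries.

    edges: iterable of (caller, callee) component pairs
    domain_of: dict mapping component -> domain name (candidate assignment)
    Returns a Counter keyed by (from_domain, to_domain).
    """
    crossings = Counter()
    for caller, callee in edges:
        src, dst = domain_of.get(caller), domain_of.get(callee)
        # Unassigned components and intra-domain edges are skipped.
        if src is not None and dst is not None and src != dst:
            crossings[(src, dst)] += 1
    return crossings
```

A domain pair with many crossing edges is a hint to either merge the domains or define an explicit interface between them before modernizing them separately.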

With smaller domains, you can extract the domain components and create a new AWS Transform job containing only the desired components. The resulting job contains a manageable number of LOC, which you can further decompose into individual applications or functions for a phased migration. For a detailed process on manually defining and adjusting domains, refer to the Domain decomposition using dependency graph in AWS Transform for Mainframe guidance.
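
To judge whether an extracted domain is small enough for its own job, you can total its LOC. In the minimal Python sketch below, `max_loc` is a ceiling you choose; the source does not state an AWS Transform limit, so no value is implied. Shared components are counted in every domain that includes them, since each job needs its own copy.

```python
def domain_sizes(domains, loc_by_component, max_loc):
    """Sum lines of code per domain and flag domains above a chosen ceiling.

    domains: dict mapping domain name -> set of component names
    loc_by_component: dict mapping component -> lines of code
    max_loc: your own per-job size ceiling (an assumption, not a service limit)
    Returns (sizes, oversized), where oversized is a sorted list of domain names.
    """
    sizes = {
        name: sum(loc_by_component.get(component, 0) for component in members)
        for name, members in domains.items()
    }
    oversized = sorted(name for name, size in sizes.items() if size > max_loc)
    return sizes, oversized
```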

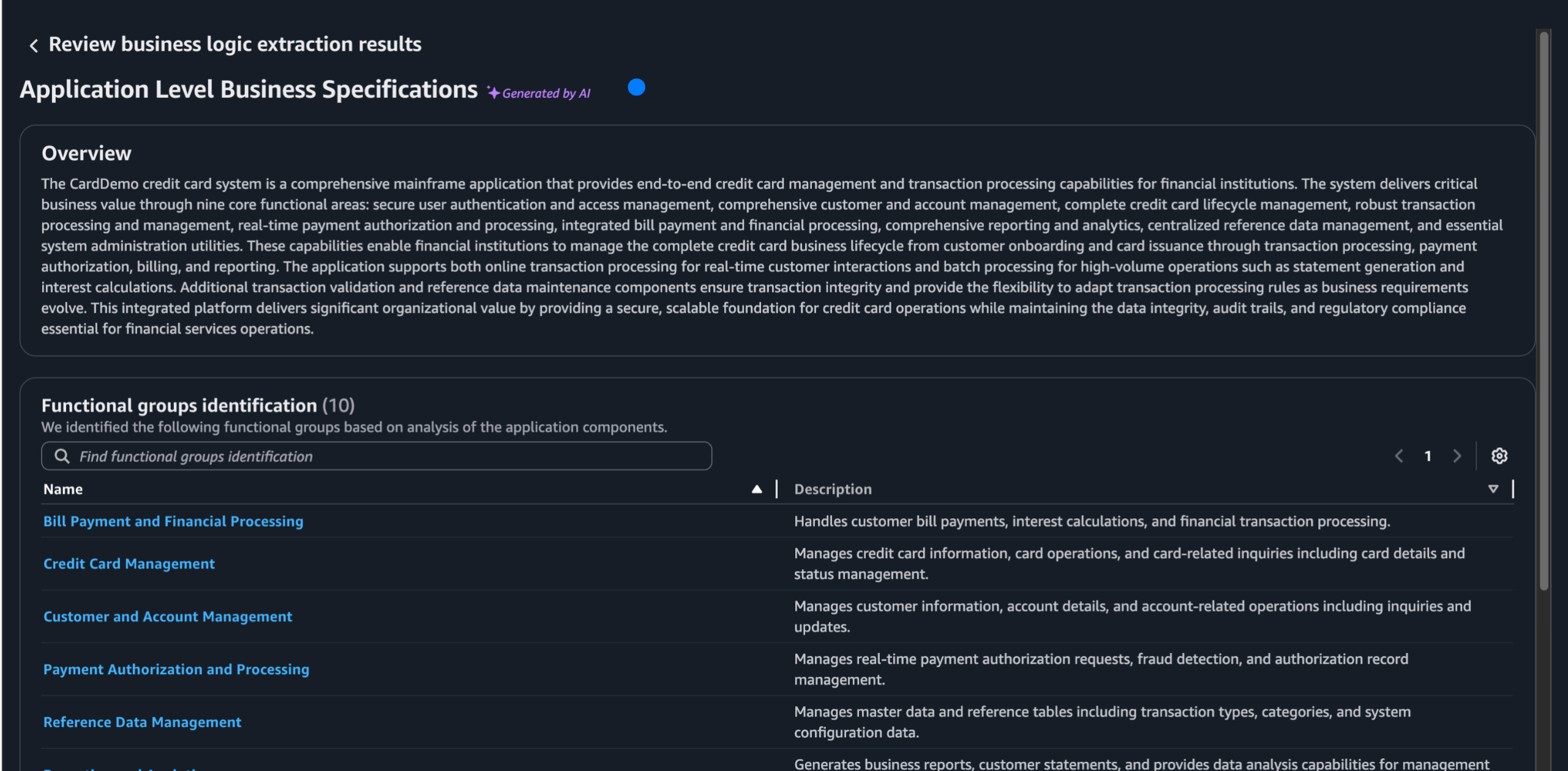

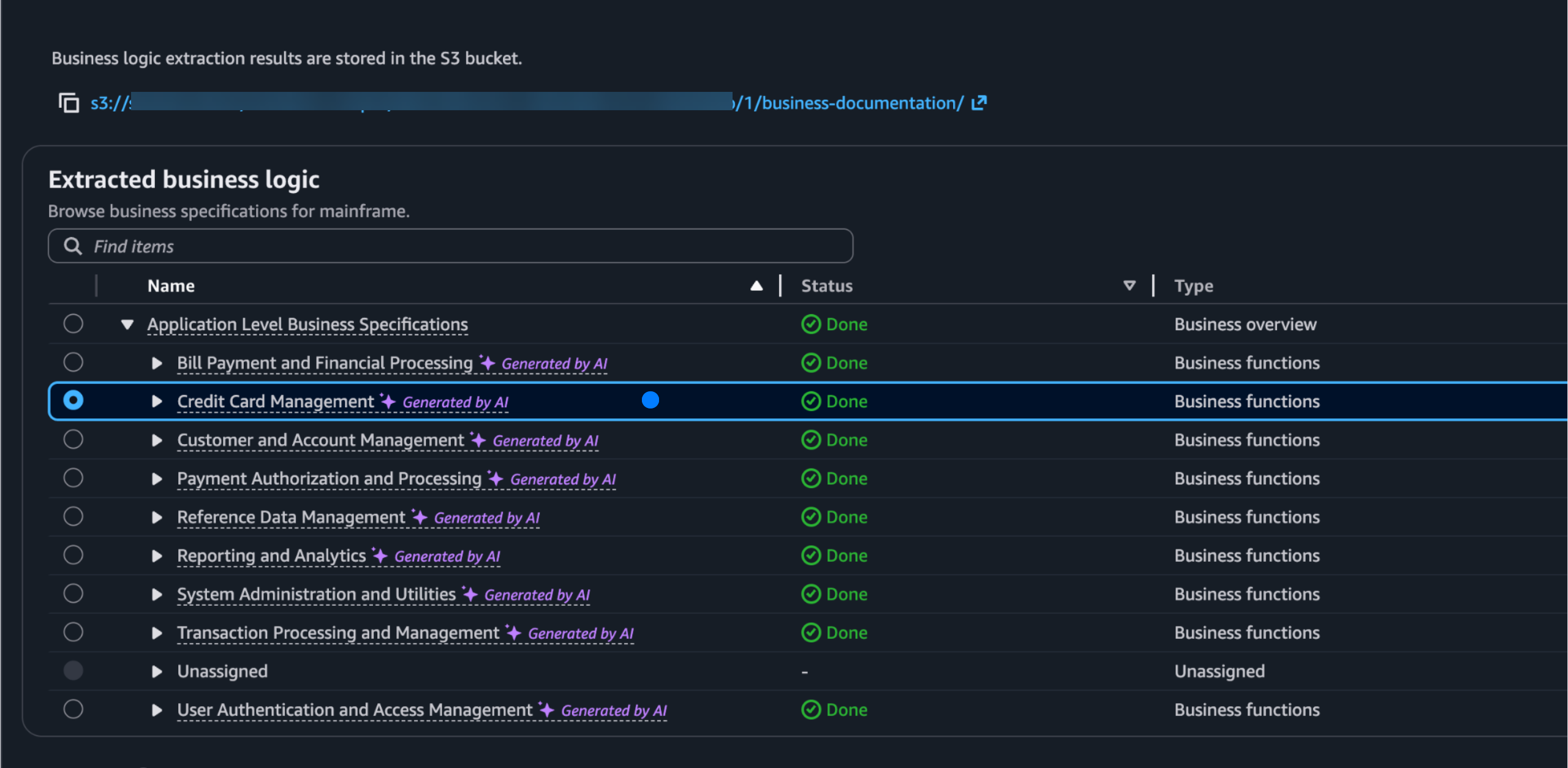

2/ Using business logic extraction (BLE) for decomposition. Application-level business logic extraction identifies entry points and groups their downstream dependencies into one unit, as illustrated in Figure 4 and Figure 5. It also groups entry points that perform similar business functions. These business functions, entry points, and their dependencies can then be used in the domain and seed definitions for decomposition. This is decomposition using automated seed detection, as shown in Figure 6 and Figure 7.

Figure 4: Application overview and identification of business function via business logic extraction (BLE)

Figure 5: Business logic extraction (BLE) creates logical segmentation and business functions

Figure 6: Review and configure inputs for code and domain decomposition

Figure 7: Business domains generated in business logic extraction can inform domain creation
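
If you transcribe BLE business-function groupings into your own planning notes, a small script can turn them into per-domain seed lists and surface entry points claimed by more than one function, which need a decision before domains are created. The minimal Python sketch below assumes a simple function-to-entry-points mapping; it is not the BLE export format, and all names are hypothetical.

```python
from collections import defaultdict

def seeds_from_business_functions(functions):
    """Turn business-function groupings into per-domain seed lists.

    functions: dict mapping business function -> list of entry points
               (a hypothetical transcription of BLE results)
    Returns (seeds, conflicts): seeds maps each function to its sorted entry points;
    conflicts maps an entry point to the sorted functions that share it.
    """
    owners = defaultdict(set)
    for function, entry_points in functions.items():
        for entry in entry_points:
            owners[entry].add(function)
    seeds = {function: sorted(set(eps)) for function, eps in functions.items()}
    conflicts = {entry: sorted(fns) for entry, fns in owners.items() if len(fns) > 1}
    return seeds, conflicts
```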

Alternatively, when breaking down large mainframe codebases, you can manually plant seeds (key components such as COBOL programs, JCL jobs, or transaction IDs) that serve as anchors for identifying related application boundaries. The decomposition engine then traces dependencies outward from these seeds, following CALL statements, copybook references, file I/O operations, and database access patterns to discover all components that belong to the same logical application context.
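
The tracing described above is essentially a graph traversal. The minimal Python sketch below illustrates the idea with a breadth-first search over a hypothetical dependency map; it is not the AWS Transform decomposition engine. The optional `stop_at` set is an assumption added for illustration: shared utilities are included in the domain but not expanded, so they do not pull unrelated code into it.

```python
from collections import deque

def expand_from_seeds(seeds, dependencies, stop_at=frozenset()):
    """Collect every component reachable from the seed components.

    seeds: iterable of starting components (programs, jobs, transaction IDs)
    dependencies: dict mapping component -> iterable of (kind, target) pairs,
                  e.g. ("CALL", "DATEUTIL"), ("COPY", "PYRECCPY"), ("DB2", "PAYTAB")
    stop_at: shared components to include but not expand through
    """
    domain = set()
    queue = deque(seeds)
    while queue:
        component = queue.popleft()
        if component in domain:
            continue
        domain.add(component)
        if component in stop_at:
            continue  # include the shared component, but do not follow its edges
        for _kind, target in dependencies.get(component, ()):
            if target not in domain:
                queue.append(target)
    return domain
```

With a seed job `PYJOB01` that runs `PYCALC01`, which in turn calls a shared `DATEUTIL` module, marking `DATEUTIL` in `stop_at` keeps anything `DATEUTIL` calls out of the payroll domain.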

Application rationalization

For each application domain, map the business goals and requirements. One application may need dramatic agility gains, retiring some existing business functions and adding new ones to remain competitive. Another may target a cloud-optimized Java stack to move away from legacy technologies and mitigate skill shortages. A third may have enough COBOL skills but face a data center exit mandate. Evaluate each application comprehensively using a disposition strategy to guide your mainframe modernization approach. Modernizing a mainframe isn’t a one-size-fits-all process: companies differ in their modernization objectives, requirements, success criteria, risk appetite, and budgets. To address this, we recommend using the “seven Rs” framework, seven proven approaches to modernizing mainframe applications.

Reimagine, Refactor, and Replatform approaches

For each decomposed domain, AWS offers three modernization paths, as illustrated in Figure 8.

- Reimagine: Business rules extraction with AWS Transform, followed by generating entirely new code using agentic AI development tools.

- Refactor: Automated code conversion that maintains functional equivalence while optimizing the application for the cloud. The “reforge” capability further optimizes refactored Java code for better maintainability.

- Replatform: Recompiling COBOL to run on AWS using runtimes that provide capabilities similar to those available on the mainframe, while maintaining functional equivalence.

Figure 8: AWS Transform supports multiple modernization and migration strategies with reimagine, refactor, and replatform

The reimagine path

The reimagine path implies a fundamental overhaul and is considered the most transformative approach. Reimagine involves completely rethinking the application architecture, for example by shifting from large monoliths to macro-services or microservices, or by moving from batch processing to real-time functions. The reimagine pattern lets organizations introduce functional changes to the modernized application by reimagining customer experiences, optimizing business process flows, or introducing new features, which could involve:

- Rewriting part or all of the system: Building a new codebase to address fundamental limitations or adopt a cloud-optimized technology stack.

- Reengineering the architecture: Making significant structural changes to accommodate new requirements or improve scalability.

- Creating a new user experience: Reimagining the interface and interaction based on current design trends or evolving user needs.

For additional information on this approach, see Reimagine your mainframe applications with Agentic AI and AWS Transform.

The refactor path

Refactoring focuses on improving the overall internal structure and technology stack of existing code without changing its external behavior or business functionality. This is often used for:

- Preserving business functions: The goal is to preserve the critical business logic of your application while refactoring it into a modern, cloud-optimized application.

- Addressing technical debt: Transforming legacy COBOL applications into modern, cloud-ready Java solutions reduces accumulated technical debt.

- Reducing risk: Refactoring is often chosen to modernize large applications at scale under tight deadlines, when the application already meets business needs.

- Improving maintainability and readability: Refactor enhances transformed Java code by restructuring complex methods, adding descriptive comments, optimizing variable usage, and improving code flow. This results in more readable and maintainable code for developers.

For additional information on this approach, see Accelerate mainframe modernization with AWS Transform: A comprehensive refactor approach.

The replatform path

Replatforming preserves the existing programming language, core business logic, and application functionality while migrating the application to modern cloud infrastructure. This approach is characterized by:

- Port and recompile: Moving existing applications to the cloud with minimal code changes, while optimizing the underlying infrastructure to leverage cloud benefits.

- Runtime compatibility: Using specialized runtime environments that replicate mainframe capabilities on AWS, allowing COBOL and other mainframe languages to execute in the cloud.

- Operational modernization: Replacing legacy job scheduling, monitoring, and operational tools with cloud-native equivalents while preserving application behavior.

- Gradual evolution: Enabling organizations to modernize incrementally by first moving quickly to the cloud, then making targeted improvements over time.

- Risk mitigation: Preserving investments in code and workforce skills by keeping the existing programming language, balancing continuity and innovation.

In summary

Successful mainframe modernization starts with assessment, dependency analysis, BLE, and decomposition to identify functional domains and guide pattern selection. For large codebases, a phased approach keeps progress manageable. By using AWS Transform, teams can stay aligned throughout the decomposition and modernization journey while managing the complexity of large-scale transformation projects. The AI-powered capabilities make this achievable, compressing what once took years into months. Ready to modernize? Get started with AWS Transform today.

Glossary

- Portfolio – A company’s complete collection of products, services, strategic business units, and assets. With respect to a codebase, this concept often translates to an application portfolio (all the software applications within an organization) or a product portfolio (all software products offered by a business).

- Business domain – A specific sphere of activity or knowledge within the real world that a software system is being developed to support. This could be a broad area like “Healthcare” or “Finance,” or a more specialized area within a company like “Order Processing,” “Inventory Management,” or “Customer Support”.

- Application – A complete, deployable software system or program designed to perform specific tasks or functions for a user or another system. It is a single unit of software that typically encompasses presentation layers, business logic, and data access layers. The application codebase is the source code that makes up this single functional unit.

- Business function – A specific set of activities or operations performed within a business to achieve a particular outcome. Examples include marketing, sales, human resources, or supply chain management. In the codebase, a business function is implemented through specific logic and workflows, often residing within the application’s business layer, that define how data is processed to meet these specific business requirements. A single application might support several different business functions.