Use generative AI in Amazon Bedrock for enhanced recommendation generation in equipment maintenance

In the manufacturing world, valuable insights from service reports often remain underutilized in document storage systems. This post explores how Amazon Web Services (AWS) customers can build a solution that uses generative AI to automate the digitization of service reports and the extraction of crucial information from them at scale.

The solution uses Amazon Nova Pro on Amazon Bedrock and Amazon Bedrock Knowledge Bases to generate recommended actions that are aligned with the observed equipment state, using an existing knowledge base of expert recommendations. The knowledge base expands over time as the solution is used.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, Mistral, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Amazon Bedrock Knowledge Bases offers fully managed, end-to-end Retrieval-Augmented Generation (RAG) workflows for creating highly accurate, low-latency, custom generative AI applications that incorporate contextual information from your company’s data sources. This makes it a well-suited service for storing engineers’ expert recommendations from past reports and allowing FMs to accurately customize their responses.

Traditional service and maintenance cycles rely on manual report submission by engineers with expert knowledge. Time spent referencing past reports can lead to operational delays and business disruption. This solution empowers equipment maintenance teams to:

- Ingest inspection and maintenance reports (in multiple languages) and extract equipment status and open actions, increasing visibility and actionability

- Generate robust, trustworthy recommendations using experienced engineers’ expertise

- Expand the initial knowledge base built by expert engineers to include valid generated recommendations

- Accelerate maintenance times and prevent unplanned downtime with a centralized, AI-powered tool that streamlines your equipment maintenance processes on AWS

To help you implement this solution, we provide a GitHub repository containing deployable code and infrastructure as code (IaC) templates, which you can use to quickly set up and customize the solution in your own AWS environment.

Solution overview

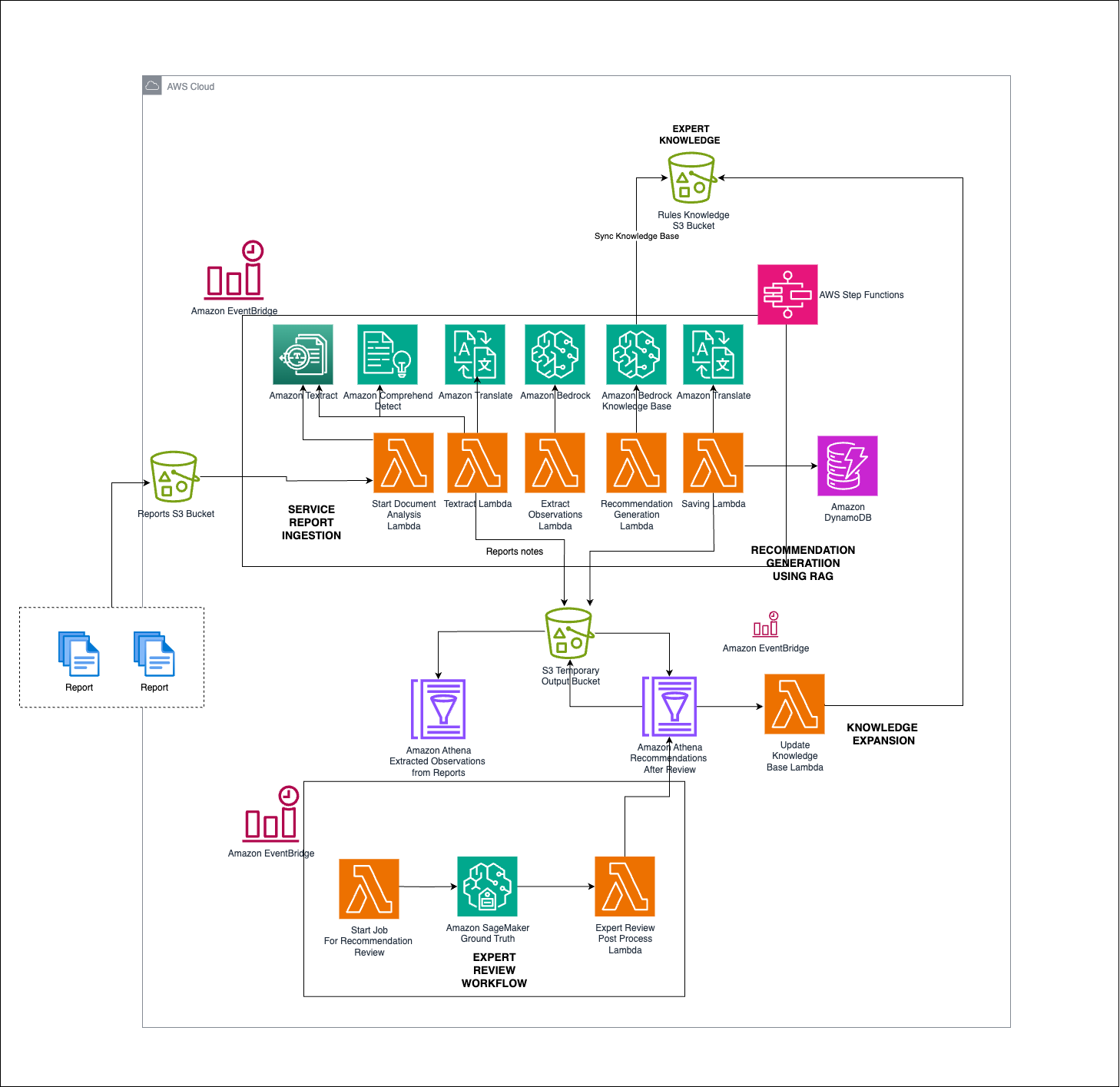

The following diagram is an architecture representation of the solution presented in this post, showcasing the various AWS services in use. Using this GitHub repository, you can deploy the solution into your AWS account to test it.

The following are key workflows of the solution:

- Automated service report ingestion with Amazon Textract – The report ingestion workflow processes and translates service reports into a standardized format. This workflow uses Amazon Textract for optical character recognition (OCR), Amazon Comprehend for language detection, and Amazon Translate for language translation. Together, these services make sure that reports are accurately processed and prepared for metadata extraction, regardless of their original format or language.

- Intelligent recommendation generation using RAG – Following ingestion, the metadata extraction and standardization process uses a RAG architecture with Amazon Nova Pro on Amazon Bedrock and Amazon Bedrock Knowledge Bases. This workflow extracts crucial metadata from the reports and uses the RAG process to generate precise and actionable maintenance recommendations. The metadata is standardized for consistency and reliability, providing a solid foundation for the recommendations.

- Expert validation with Amazon SageMaker Ground Truth – To validate and refine the generated recommendations, the solution incorporates an expert review process using Amazon SageMaker Ground Truth. This workflow involves creating customized labeling jobs where experts review and validate the recommendations for accuracy and reliability. This feedback loop helps continually improve the model’s performance, making the maintenance recommendations more trustworthy.

- Expanding the knowledge base for future processing – The knowledge base for this tool needs to be expanded with new rules for each equipment type, drawing from two main sources:

  - Analyzing past equipment and maintenance reports to obtain labeled data on recommended actions.

  - Reinforcing valid recommendations generated by the tool and verified by human experts.

This compiled set of rules is reviewed by experts, assigned a criticality, and then automatically synced into Amazon Bedrock Knowledge Bases to continually improve the solution’s confidence in generating the next recommended action.

Together, these workflows create a seamless and efficient process from report ingestion to actionable recommendations, producing high-quality insights for maintenance operations.

This solution is deployable and scalable using IaC with Terraform for ease of implementation and expansion across various environments. Teams have the flexibility to efficiently roll out the solution to customers globally, enhancing maintenance operations and reducing unplanned downtime.

In the following sections, we walk through the steps to customize and deploy the solution.
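As a rough illustration of the report ingestion workflow described earlier, the following Python sketch chains OCR, language detection, and translation. It is not the repository's implementation: the function names and the returned dictionary shape are assumptions made for this example. The clients are passed in as arguments and are expected to be boto3 clients for `textract`, `comprehend`, and `translate`.

```python
from typing import Any, Dict


def extract_text(textract: Any, bucket: str, key: str) -> str:
    """Run synchronous OCR on one S3 object and join the detected lines."""
    response = textract.detect_document_text(
        Document={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    # Textract returns PAGE, LINE, and WORD blocks; LINE blocks are enough here.
    lines = [
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE"
    ]
    return "\n".join(lines)


def detect_language(comprehend: Any, text: str) -> str:
    """Return the language code with the highest confidence score."""
    response = comprehend.detect_dominant_language(Text=text)
    languages = response.get("Languages", [])
    if not languages:
        raise ValueError("no language detected")
    return max(languages, key=lambda lang: lang["Score"])["LanguageCode"]


def to_english(translate: Any, text: str, source_language: str) -> str:
    """Translate to English, skipping the call when the text is already English."""
    if source_language == "en":
        return text
    response = translate.translate_text(
        Text=text,
        SourceLanguageCode=source_language,
        TargetLanguageCode="en",
    )
    return response["TranslatedText"]


def ingest_report(
    textract: Any, comprehend: Any, translate: Any, bucket: str, key: str
) -> Dict[str, str]:
    """Hypothetical end-to-end ingestion step for a single report."""
    text = extract_text(textract, bucket, key)
    language = detect_language(comprehend, text)
    return {"source_language": language, "text": to_english(translate, text, language)}
```

In a real deployment you would create the clients with `boto3.client("textract")`, `boto3.client("comprehend")`, and `boto3.client("translate")`. The sketch ignores service size limits, so long or multi-page reports would need the asynchronous Textract APIs and chunked calls to the other two services.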

Prerequisites

To deploy the solution, you must have an AWS account with the appropriate permissions and access to Amazon Nova FMs on Amazon Bedrock. You can enable model access from the Amazon Bedrock console.

Clone the GitHub repository

Clone the GitHub repository containing the IaC for the solution to your local machine.

Customize the ReportsProcessing function

To customize the ReportsProcessing AWS Lambda function, follow these steps:

- Open the `lambdas/python/ReportsProcessing/extract_observations.py` file. This file contains the logic for the `ReportsProcessing` Lambda function.

- Modify the code in this file to include your custom logic for processing reports based on their specific document styles. For example, you might need to modify the `extract_metadata` function to handle different report formats or adjust the logic in the `standardize_metadata` function to comply with your organization’s standards.
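To make this kind of customization concrete, here is a minimal sketch of what the two functions could look like for a report that uses a simple "Label: value" layout. Only the function names `extract_metadata` and `standardize_metadata` come from this post. The signatures, field names, date formats, and status vocabulary are assumptions for illustration, and the code in the repository may be structured differently.

```python
import re
from datetime import datetime
from typing import Dict

# Hypothetical labels for a "Label: value" report layout.
FIELD_PATTERNS = {
    "equipment_id": re.compile(r"^Equipment ID:[ \t]*(.+)$", re.IGNORECASE | re.MULTILINE),
    "inspection_date": re.compile(r"^Inspection date:[ \t]*(.+)$", re.IGNORECASE | re.MULTILINE),
    "status": re.compile(r"^Status:[ \t]*(.+)$", re.IGNORECASE | re.MULTILINE),
}

# Accepted input date formats, tried in order (day-first for ambiguous dates).
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%d/%m/%Y")

# Hypothetical mapping from free-text status to a controlled vocabulary.
STATUS_ALIASES = {
    "ok": "OPERATIONAL",
    "operational": "OPERATIONAL",
    "degraded": "DEGRADED",
    "needs repair": "DEGRADED",
    "failed": "FAILED",
    "out of service": "FAILED",
}


def extract_metadata(report_text: str) -> Dict[str, str]:
    """Pull raw field values out of the report text; missing fields are skipped."""
    metadata = {}
    for field, pattern in FIELD_PATTERNS.items():
        match = pattern.search(report_text)
        if match:
            metadata[field] = match.group(1).strip()
    return metadata


def _to_iso_date(raw: str) -> str:
    """Convert a known date format to ISO 8601, or return the input unchanged."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw, fmt).date().isoformat()
        except ValueError:
            continue
    return raw  # left as-is so a reviewer can spot it


def standardize_metadata(metadata: Dict[str, str]) -> Dict[str, str]:
    """Normalize identifiers, dates, and status values to one convention."""
    standardized = dict(metadata)
    if "equipment_id" in standardized:
        standardized["equipment_id"] = standardized["equipment_id"].upper().replace(" ", "-")
    if "inspection_date" in standardized:
        standardized["inspection_date"] = _to_iso_date(standardized["inspection_date"])
    if "status" in standardized:
        standardized["status"] = STATUS_ALIASES.get(standardized["status"].lower(), "UNKNOWN")
    return standardized
```

Keeping extraction and standardization separate means a new report layout usually only requires new patterns, while the organization-specific conventions stay in one place.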

Customize the RecommendationGeneration function

To customize the RecommendationGeneration Lambda function, follow these steps:

- Open the `lambdas/python/RecommendationGeneration/generate_recommendations.py` file. This file contains the logic for the `RecommendationGeneration` Lambda function, which uses the RAG architecture.

- Modify the code in this file to include your custom logic for generating recommendations based on your specific requirements. For example, you might need to adjust the `query_formulation()` function to modify the prompt sent to Amazon Nova Pro or update the `retrieve_rules` function to customize the retrieval process from the knowledge base.
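The following sketch shows one way the retrieval and prompt-building steps could fit together. Only the function names `query_formulation` and `retrieve_rules` come from this post. Their signatures, the prompt wording, and the helper `generate_recommendation` are assumptions for illustration. The clients are expected to be boto3 clients for `bedrock-agent-runtime` and `bedrock-runtime`, and the model ID is left as a parameter because the exact identifier depends on your Region and model access.

```python
from typing import Any, List


def retrieve_rules(
    agent_runtime: Any,
    knowledge_base_id: str,
    observation: str,
    top_k: int = 3,
    min_score: float = 0.0,
) -> List[str]:
    """Fetch the expert rules most similar to the observation from the knowledge base."""
    response = agent_runtime.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": observation},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    )
    # Drop weak matches so they do not dilute the prompt context.
    return [
        result["content"]["text"]
        for result in response.get("retrievalResults", [])
        if result.get("score", 0.0) >= min_score
    ]


def query_formulation(observation: str, rules: List[str]) -> str:
    """Build the prompt: retrieved rules as context, then the observed state."""
    context = "\n".join(f"- {rule}" for rule in rules) or "- (no matching rules found)"
    return (
        "You are assisting an equipment maintenance engineer.\n"
        "Expert rules:\n"
        f"{context}\n\n"
        f"Observed equipment state: {observation}\n"
        "Recommend the next maintenance action, using only the rules above."
    )


def generate_recommendation(
    runtime: Any,
    agent_runtime: Any,
    knowledge_base_id: str,
    model_id: str,
    observation: str,
) -> str:
    """Hypothetical RAG step: retrieve rules, build the prompt, call the model."""
    rules = retrieve_rules(agent_runtime, knowledge_base_id, observation)
    prompt = query_formulation(observation, rules)
    response = runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.2},
    )
    return response["output"]["message"]["content"][0]["text"]
```

Raising `min_score` or lowering `top_k` trades recall for precision in the retrieved rules, which is often the first thing worth tuning when recommendations drift away from the expert guidance.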

Update the Terraform configuration

If you made changes to the Lambda function names, roles, or other AWS resources, update the corresponding Terraform configuration files in the terraform directory to reflect these changes.

Initialize the Terraform working directory

Open a terminal or command prompt and navigate to the `terraform` directory within the cloned repository. Run `terraform init` to initialize the Terraform working directory.

Preview the Terraform changes

Before applying the changes, preview the Terraform execution plan by running `terraform plan`.

This command will show you the changes that Terraform plans to make to your AWS infrastructure.

Deploy the Terraform stack

If you’re satisfied with the planned changes, deploy the Terraform stack to your AWS account by running `terraform apply`.

When prompted, enter yes and press Enter to proceed with the deployment.

Create an Amazon Bedrock knowledge base

After you deploy the Terraform stack, create an Amazon Bedrock knowledge base to store and retrieve the maintenance rules and recommendations.

After the knowledge base is created, update the environment variable of the RecommendationGeneration Lambda function with the corresponding knowledge base ID.
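If you prefer to script the environment variable update rather than use the console, a sketch along the following lines could work. The helper name, the function name you pass in, and the variable name `KNOWLEDGE_BASE_ID` are assumptions, so check the Terraform configuration for the names the solution actually uses. The client is expected to be a boto3 `lambda` client.

```python
from typing import Any, Dict


def set_knowledge_base_id(
    lambda_client: Any,
    function_name: str,
    knowledge_base_id: str,
    variable_name: str = "KNOWLEDGE_BASE_ID",  # assumed name, not confirmed by the post
) -> Dict[str, str]:
    """Set one environment variable on a Lambda function, keeping the others."""
    current = lambda_client.get_function_configuration(FunctionName=function_name)
    # update_function_configuration replaces the whole variable map,
    # so start from the existing variables and change only one entry.
    variables = dict(current.get("Environment", {}).get("Variables", {}))
    variables[variable_name] = knowledge_base_id
    lambda_client.update_function_configuration(
        FunctionName=function_name,
        Environment={"Variables": variables},
    )
    return variables
```

Because Terraform manages the function, a change made this way can be reverted by the next `terraform apply` unless the same value is also set in the Terraform configuration.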

Upload a test report and validate the solution for generated recommendations

To test the solution, upload a sample maintenance report to the designated Amazon Simple Storage Service (Amazon S3) bucket.

After the file is uploaded, navigate to the AWS Step Functions state machine created by the deployment and confirm that an execution completes successfully. The output of a successful execution should contain the observations extracted from the input document as well as newly generated recommendations based on the rules retrieved from the knowledge base.
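The same check can be scripted. The sketch below uploads a report and reads the output of the most recent state machine execution. The helper names are assumptions, as are the bucket name, object key, and state machine ARN you would pass in. The shape of the execution output depends on the deployed workflow, so the function simply returns the parsed JSON. The clients are expected to be boto3 clients for `s3` and `stepfunctions`.

```python
import json
from typing import Any, Dict


def upload_report(s3_client: Any, local_path: str, bucket: str, key: str) -> str:
    """Upload a local report file and return its S3 URI."""
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"


def latest_execution_output(sfn_client: Any, state_machine_arn: str) -> Dict[str, Any]:
    """Return the parsed output of the most recent execution if it succeeded."""
    # list_executions returns the most recent execution first.
    response = sfn_client.list_executions(
        stateMachineArn=state_machine_arn, maxResults=1
    )
    executions = response.get("executions", [])
    if not executions:
        raise LookupError("no executions found for this state machine")
    details = sfn_client.describe_execution(
        executionArn=executions[0]["executionArn"]
    )
    if details["status"] != "SUCCEEDED":
        raise RuntimeError(f"latest execution has status {details['status']}")
    # The execution output is a JSON string.
    return json.loads(details["output"])
```

An execution can take some time to start and finish after the upload, so in practice you would call `latest_execution_output` after a short wait or retry it while the status is still `RUNNING`.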

Clean up

When you’re done with this solution, clean up the resources you created to avoid ongoing charges.

Conclusion

This post provided an overview of implementing a risk-based maintenance solution to preempt potential failures and avoid equipment downtime for maintenance teams. This solution highlights the benefits of Amazon Bedrock. By using Amazon Nova Pro with RAG for your equipment maintenance reports, engineers and scientists can focus their efforts on improving accuracy of recommendations and increasing development velocity. The key capabilities of this solution include:

- Automated ingestion and standardization of maintenance reports using Amazon Textract, Amazon Comprehend, and Amazon Translate

- Intelligent recommendation generation powered by RAG and Amazon Nova Pro on Amazon Bedrock

- Continual expert validation and knowledge base expansion using SageMaker Ground Truth

- Scalable and production-ready deployment using IaC with Terraform

By using the breadth of AWS services and the flexibility of Amazon Bedrock, equipment maintenance teams can streamline their operations and reduce unplanned downtimes.

AWS Professional Services is ready to help your team develop scalable and production-ready generative AI solutions on AWS. For more information, refer to the AWS Professional Services page or reach out to your account manager to get in touch.

About the authors

Jyothsna Puttanna is an AI/ML Consultant at AWS Professional Services. Jyothsna works closely with customers building their machine learning solutions on AWS. She specializes in distributed training, experimentation, and generative AI.

Shantanu Sinha is a Senior Engagement Manager at AWS Professional Services, based out of Berlin, Germany. Shantanu’s focus is on using generative AI to unlock business value and identify strategic business opportunities for his clients.

Selena Tabbara is a Data Scientist at AWS Professional Services specializing in AI/ML and generative AI solutions for enterprise customers in the energy, automotive, and manufacturing industries.
