Artificial Intelligence

Category: Intermediate (200)

Unlock the knowledge in your Slack workspace with Slack connector for Amazon Q Business

In this post, we demonstrate how to set up the Slack connector for Amazon Q Business to sync communications from both public and private channels while respecting user permissions.

Efficient Pre-training of Llama 3-like model architectures using torchtitan on Amazon SageMaker

In this post, we collaborate with the team working on PyTorch at Meta to showcase how the torchtitan library accelerates and simplifies the pre-training of Meta Llama 3-like model architectures. We highlight key torchtitan features, such as FSDP2, torch.compile integration, and FP8 support, that improve training efficiency.

Build a generative AI Slack chat assistant using Amazon Bedrock and Amazon Kendra

In this post, we describe the development of a generative AI Slack application powered by Amazon Bedrock and Amazon Kendra. The application is designed as an internal-facing Slack chat assistant that helps answer questions related to the indexed content.

Implement model-independent safety measures with Amazon Bedrock Guardrails

In this post, we discuss how you can use the ApplyGuardrail API in common generative AI architectures such as third-party or self-hosted large language models (LLMs), or in a self-managed Retrieval Augmented Generation (RAG) architecture.

Achieve operational excellence with well-architected generative AI solutions using Amazon Bedrock

In this post, we discuss scaling up generative AI across different lines of business (LOBs) and address the challenges around legal and compliance requirements, operational complexity, data privacy, and security.

Elevate workforce productivity through seamless personalization in Amazon Q Business

In this post, we explore how Amazon Q Business uses personalization to improve the relevance of responses and how you can align your use cases and end-user data to take full advantage of this capability.

Control data access to Amazon S3 from Amazon SageMaker Studio with Amazon S3 Access Grants

In this post, we demonstrate how to simplify data access to Amazon S3 from SageMaker Studio using S3 Access Grants, specifically for different user personas using IAM principals.

Improve employee productivity using generative AI with Amazon Bedrock

In this post, we show you the Employee Productivity GenAI Assistant Example, a solution built on AWS technologies like Amazon Bedrock, to automate writing tasks and enhance employee productivity.

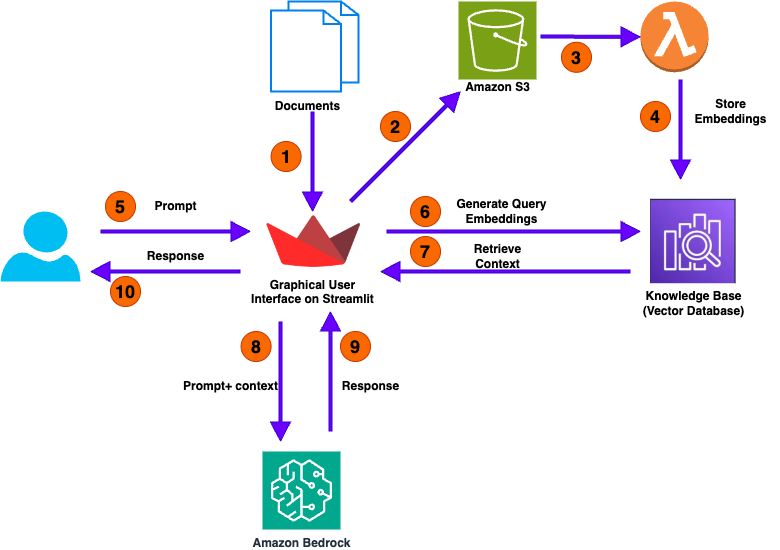

Elevate RAG for numerical analysis using Amazon Bedrock Knowledge Bases

In this post, we discuss how Amazon Bedrock Knowledge Bases provides a powerful solution for numerical analysis on documents. You can deploy this solution in an AWS account and use it to analyze different types of documents.

Deploy generative AI agents in your contact center for voice and chat using Amazon Connect, Amazon Lex, and Amazon Bedrock Knowledge Bases

In this post, we show you how DoorDash built a generative AI agent using Amazon Connect, Amazon Lex, and Amazon Bedrock Knowledge Bases to provide a low-latency, self-service experience for their delivery workers.