Artificial Intelligence

Category: Developer Tools

Create a private workforce on Amazon SageMaker Ground Truth with the AWS CDK

In this post, we present a complete solution for programmatically creating private workforces on Amazon SageMaker AI using the AWS Cloud Development Kit (AWS CDK), including the setup of a dedicated, fully configured Amazon Cognito user pool.

Empowering air quality research with secure, ML-driven predictive analytics

In this post, we provide a data imputation solution using Amazon SageMaker AI, AWS Lambda, and AWS Step Functions. This solution is designed for environmental analysts, public health officials, and business intelligence professionals who need reliable PM2.5 data for trend analysis, reporting, and decision-making. We sourced our sample training dataset from openAFRICA. Our solution predicts PM2.5 values using time-series forecasting.

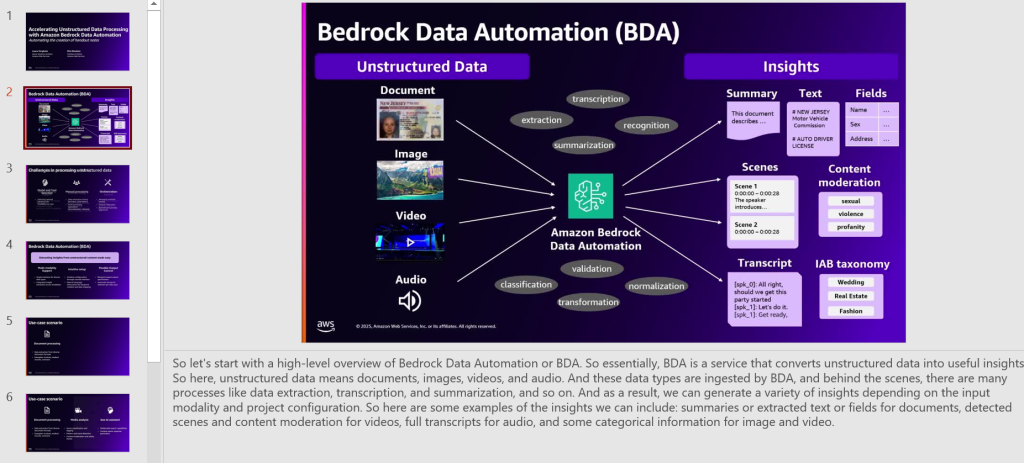

Automate the creation of handout notes using Amazon Bedrock Data Automation

In this post, we show how you can build an automated, serverless solution to transform webinar recordings into comprehensive handouts using Amazon Bedrock Data Automation for video analysis. We walk you through the implementation of Amazon Bedrock Data Automation to transcribe and detect slide changes, as well as the use of Amazon Bedrock foundation models (FMs) for transcription refinement, combined with custom AWS Lambda functions orchestrated by AWS Step Functions.

AWS App Studio introduces a prebuilt solutions catalog and cross-instance Import and Export

In a recent AWS What’s New post, App Studio announced two new features to accelerate application building: a prebuilt solutions catalog and cross-instance Import and Export. In this post, we walk through how to use the prebuilt solutions catalog to get started quickly and use the Import and Export feature

Accelerate IaC troubleshooting with Amazon Bedrock Agents

This post demonstrates how Amazon Bedrock Agents, combined with action groups and generative AI models, streamlines and accelerates the resolution of Terraform errors while maintaining compliance with environment security and operational guidelines.

Streamline custom environment provisioning for Amazon SageMaker Studio: An automated CI/CD pipeline approach

In this post, we show how to create an automated continuous integration and delivery (CI/CD) pipeline solution to build, scan, and deploy custom Docker images to SageMaker Studio domains. You can use this solution to promote consistency of the analytical environments for data science teams across your enterprise.

Accelerate your ML lifecycle using the new and improved Amazon SageMaker Python SDK – Part 2: ModelBuilder

In Part 1 of this series, we introduced the newly launched ModelTrainer class on the Amazon SageMaker Python SDK and its benefits, and showed you how to fine-tune a Meta Llama 3.1 8B model on a custom dataset. In this post, we look at the enhancements to the ModelBuilder class, which lets you seamlessly deploy a model from ModelTrainer to a SageMaker endpoint, and provides a single interface for multiple deployment configurations.

Accelerate your ML lifecycle using the new and improved Amazon SageMaker Python SDK – Part 1: ModelTrainer

In this post, we focus on the ModelTrainer class for simplifying the training experience. The ModelTrainer class provides significant improvements over the current Estimator class, which we discuss in detail. We show you how to use the ModelTrainer class to train your ML models, including how to run distributed training using a custom script or container. In Part 2, we show you how to build a model and deploy it to a SageMaker endpoint using the improved ModelBuilder class.

Apply Amazon SageMaker Studio lifecycle configurations using AWS CDK

This post serves as a step-by-step guide on how to set up lifecycle configurations for your Amazon SageMaker Studio domains. With lifecycle configurations, system administrators can apply automated controls to their SageMaker Studio domains and their users. We cover core concepts of SageMaker Studio and provide code examples of how to apply lifecycle configuration to […]

Build generative AI applications on Amazon Bedrock with the AWS SDK for Python (Boto3)

In this post, we demonstrate how to use Amazon Bedrock with the AWS SDK for Python (Boto3) to programmatically incorporate FMs. We explore invoking a specific FM and processing the generated text, showcasing the potential for developers to use these models in their applications for a variety of use cases.