The Internet of Things on AWS – Official Blog

Efficient video streaming and vision AI at the edge with Realtek, Plumerai, and Amazon Kinesis Video Streams

Artificial Intelligence (AI) at the edge is popular among smart video devices. For example, smart home cameras and video doorbells have revolutionized home monitoring. What began as simple recording and remote viewing tools has evolved into intelligent observers. With AI built in, today’s cameras can actively analyze scenes, alert users to motion events, recognize familiar faces, spot package deliveries, and dynamically adjust their recording behavior. Enterprise surveillance cameras are another example. These cameras offer higher resolution and more computing power, and can run more sophisticated AI models. These enhanced capabilities result in sharper detection at greater distances.

As illustrated, customers demand intelligent monitoring systems that can process data locally while maintaining privacy and reducing bandwidth costs. To address these needs, the AWS Internet of Things (AWS IoT) team has developed a smart camera solution with AWS partners that combines Amazon Kinesis Video Streams, Realtek’s low-power Ameba Pro2 microcontroller, and efficient machine learning models from Plumerai. This blog post provides guidance for event-triggered video uploads coupled with human detection algorithm processing at the edge.

Solution architecture

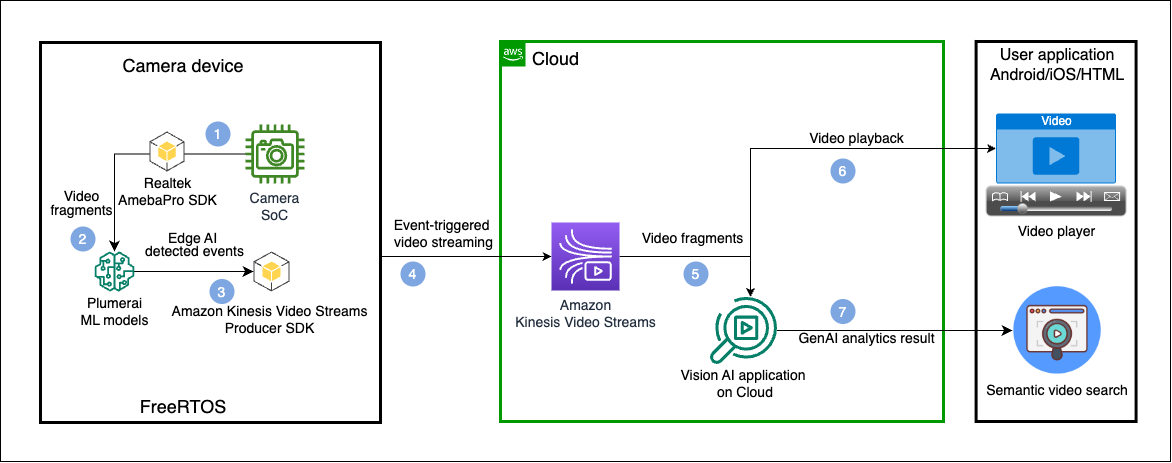

The following figure illustrates the solution architecture that this blog post uses:

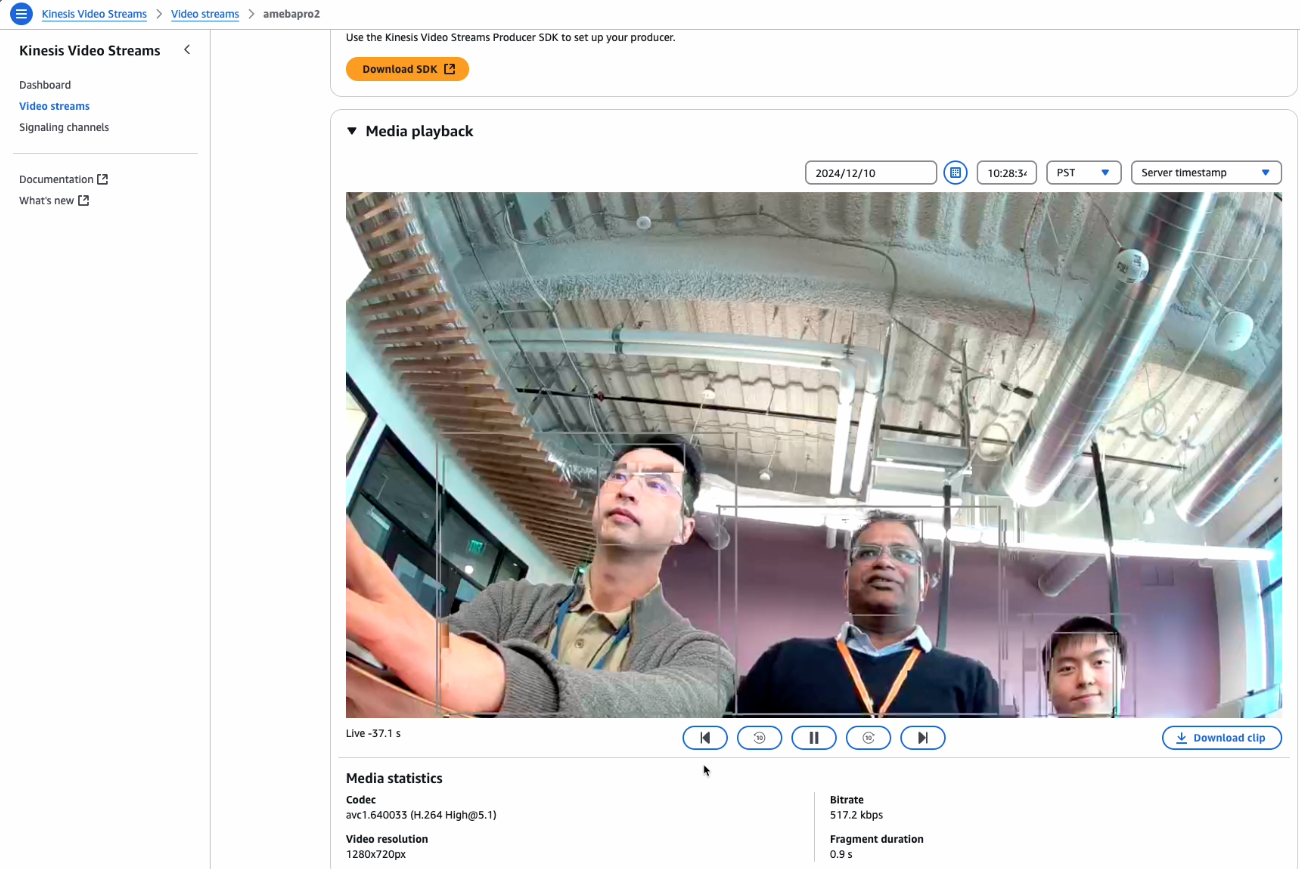

- Beginning with the camera, the device firmware integrates the Realtek SDK to access camera modules through defined APIs.

- The video fragments are delivered to Plumerai’s machine learning models for object detection.

- The sample application adds detection results as bounding box overlays on the original video fragments. This sample continuously uploads the fragments to the cloud through the Kinesis Video Streams Producer SDK. (As an aside, you can also set detection results to trigger uploads of 20-second video segments.)

- The Kinesis Video Streams Producer SDK uses the PutMedia API over a long-lived HTTPS connection to stream MKV fragments continuously.

- Kinesis Video Streams ingests the media data and stores it persistently for later analysis.

- A frontend application plays back live or previously recorded video using the HLS or DASH protocols from Kinesis Video Streams.

- The solution feeds video and audio data into Large Language Models (LLMs) for Agentic AI insights. (We will cover semantic video search in our next blog post.)
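
The event-triggered option mentioned in the list above can be pictured as a gate that opens for 20 seconds whenever a detection arrives. The following is a minimal sketch of that idea, not the sample application's code: `UploadGate`, `on_fragment`, and the per-fragment `person_detected` flag are hypothetical names, and restarting the window on each new detection is an assumed design choice. Only the 20-second segment length comes from this post.

```python
from dataclasses import dataclass

SEGMENT_SECONDS = 20.0  # segment length mentioned in this post


@dataclass
class UploadGate:
    """Decides, per fragment, whether to upload (hypothetical helper)."""
    segment_seconds: float = SEGMENT_SECONDS
    upload_until: float = float("-inf")  # time at which the current window closes

    def on_fragment(self, timestamp: float, person_detected: bool) -> bool:
        # A detection opens an upload window, or restarts it if one is open.
        if person_detected:
            self.upload_until = timestamp + self.segment_seconds
        # Upload only while the window is open.
        return timestamp < self.upload_until


gate = UploadGate()
# Simulated fragment timestamps; a person is seen only in the fragment at t=4.
decisions = [gate.on_fragment(t, person_detected=(t == 4.0))
             for t in (0.0, 2.0, 4.0, 6.0, 22.0, 24.0, 26.0)]
print(decisions)  # [False, False, True, True, True, False, False]
```

On a device, the boolean would decide whether a fragment is handed to the Producer SDK or dropped.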

Integration highlights

Amazon Kinesis Video Streams

Kinesis Video Streams transforms how businesses handle video solutions for IP cameras, robots, and automobiles. Key benefits include:

- A fully managed architecture. This helps engineering teams focus on innovation instead of infrastructure and is ideal for companies with limited resources.

- Open-source AWS SDKs. Top brands especially value this independence from platform constraints.

- Flexible pay-per-use pricing model. While device development can take months or years, you don’t pay until the cameras go live. With typical cloud storage activation below 30% and declining yearly usage, costs stay dramatically lower than fixed license fees.
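
For the HLS playback path described in the solution architecture, a frontend usually needs a session URL from Kinesis Video Streams first. The sketch below shows the two calls involved, assuming the boto3 library is used on the backend. `get_hls_url` is a hypothetical helper name, and the clients are passed in so the logic can be exercised without an AWS account.

```python
from typing import Any, Callable


def get_hls_url(stream_name: str,
                kvs_client: Any,
                make_archived_client: Callable[[str], Any],
                expires_seconds: int = 300) -> str:
    """Return a live HLS playback URL for a stream (hypothetical helper)."""
    # Step 1: ask the control plane which endpoint serves HLS for this stream.
    endpoint = kvs_client.get_data_endpoint(
        StreamName=stream_name,
        APIName="GET_HLS_STREAMING_SESSION_URL",
    )["DataEndpoint"]
    # Step 2: request a session URL from the archived-media API on that endpoint.
    archived = make_archived_client(endpoint)
    response = archived.get_hls_streaming_session_url(
        StreamName=stream_name,
        PlaybackMode="LIVE",
        Expires=expires_seconds,
    )
    return response["HLSStreamingSessionURL"]


# Usage with boto3 (assumed installed and configured with credentials):
#   import boto3
#   url = get_hls_url(
#       "kvs-plumerai-realtek-stream",
#       boto3.client("kinesisvideo"),
#       lambda ep: boto3.client("kinesis-video-archived-media", endpoint_url=ep),
#   )
```

The returned URL can be given to an HLS-capable player. For recorded video, `PlaybackMode` would be `ON_DEMAND` with a fragment selector instead of `LIVE`.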

Plumerai

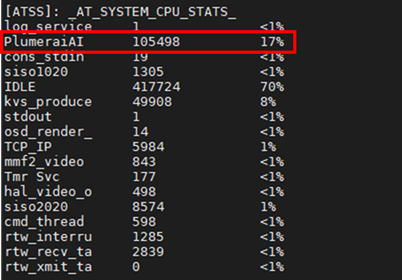

Plumerai specializes in embedded AI solutions, with a particular focus on making deep learning tiny and efficient. Plumerai models provide inference on small, affordable, low-power hardware. The company also optimizes its AI models for the Realtek Ameba Pro2 platform through:

- Assembly-level optimizations that maximize Arm Cortex-M CPU performance and leverage DSP instructions for enhanced signal processing.

- Neural Architecture Search (NAS) that selects optimal AI models for the Realtek NPU and memory architecture to achieve 0.4 TOPS NPU acceleration.

- Plumerai models use Realtek on-chip hardware accelerators (scalers, format converters) to reduce computational load.

- The AI model supports RTOS and integrates seamlessly with the SoC’s real-time operating system.

- The application integrates with Realtek’s media streaming framework.

- The fast-boot design shortens boot time, which improves battery life and speeds up the start of active object detection.

- The edge AI models are trained on 30 million labeled images and videos.

These enhancements translate into the following real-world performance:

- Delivers precision without wasting memory.

- Captures wide scenes through 180° field-of-view lenses.

- Detects individuals at 20m+ (65ft) distances.

- Handles crowds by tracking 20 people simultaneously.

- Maintains individual tracking with a unique ID system.

- Performs consistently in bright daylight and total darkness.

Realtek Ameba Pro2

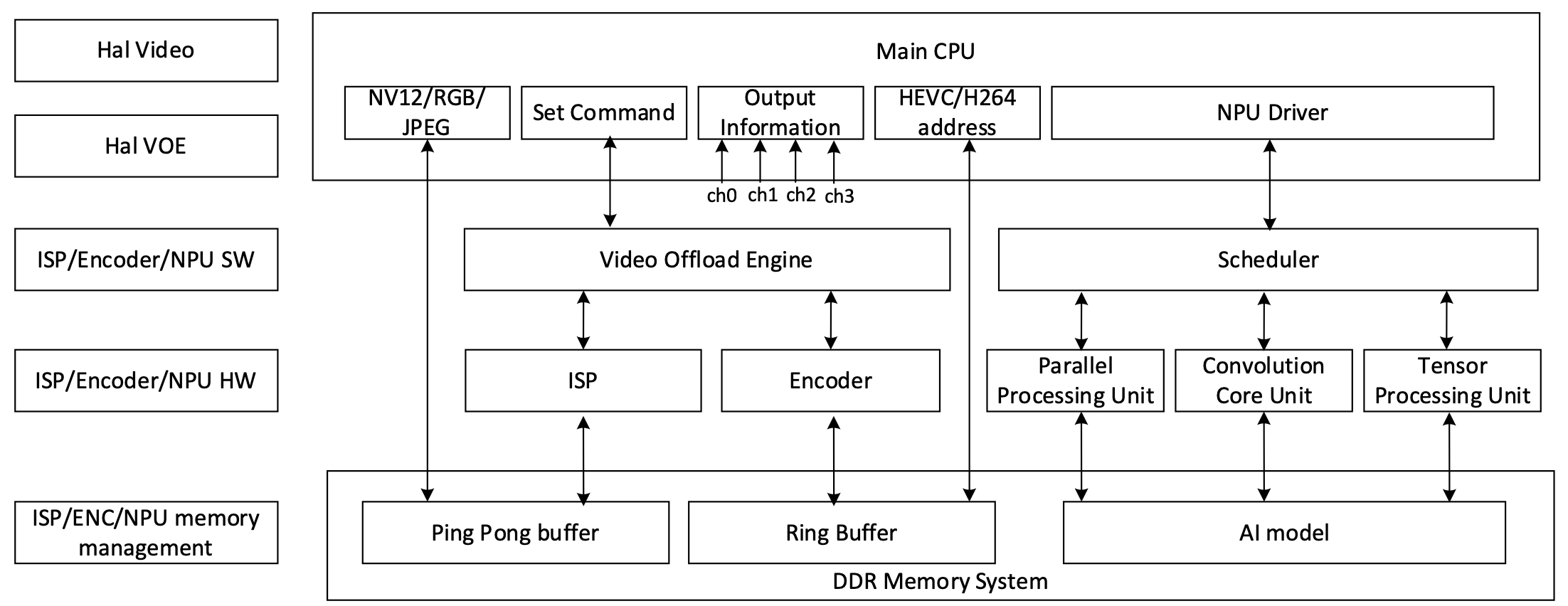

The preceding figure illustrates Realtek Ameba Pro2’s data architecture. It contains an Integrated Video Encoder (IVE) and an Image Signal Processor (ISP) that process raw media data and deliver the result to a Video Offload Engine (VOE). The VOE then manages multiple video channels and concurrent video streams to support the motion detection algorithm. The Neural Processing Unit (NPU) performs inference on images or image regions. The Parallel Processing Unit (PPU) handles multitasking jobs like cropping Regions of Interest (ROIs) from high-resolution images, resizing NPU inference input, and retrieving final output from high-resolution channels. This architecture unlocks powerful capabilities to support video analytics at the edge, including:

- Running with minimal CPU power for maximum efficiency.

- Responding in near real time to motion.

- Beginning video processing even during the boot sequence.

- Streaming to both the SD card and cloud through secure Wi-Fi or Ethernet.

- Leveraging NPU to deliver superior AI performance.

- Integrating with Plumerai models and Kinesis Video Streams through a multimedia framework SDK.
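
Cropping an ROI from the high-resolution channel, as described above, involves mapping a box found on the downscaled inference input back to full-resolution pixel coordinates. The following is a minimal sketch of that arithmetic only. The function name and the example resolutions are illustrative assumptions and not part of the Ameba Pro2 SDK.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def scale_box(box: Box, src_size: Tuple[int, int], dst_size: Tuple[int, int]) -> Box:
    """Map a box from the inference resolution to the high-resolution frame."""
    src_w, src_h = src_size
    dst_w, dst_h = dst_size
    sx, sy = dst_w / src_w, dst_h / src_h
    left, top, right, bottom = box
    # Round to whole pixels and clamp so the crop stays inside the frame.
    return (
        max(0, min(dst_w, round(left * sx))),
        max(0, min(dst_h, round(top * sy))),
        max(0, min(dst_w, round(right * sx))),
        max(0, min(dst_h, round(bottom * sy))),
    )


# Assumed example sizes: a 480x270 inference input and a 1920x1080 main channel.
print(scale_box((100, 50, 200, 150), (480, 270), (1920, 1080)))  # (400, 200, 800, 600)
```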

Walkthrough

This section outlines the steps to build the solution, run edge AI, and stream the video fragments.

Prerequisites

- AWS account with permission for:

- AWS console login

- Kinesis Video Streams API (GetDataEndpoint, DescribeStream, PutMedia)

- Amazon Elastic Compute Cloud (Amazon EC2) instance creation to build SDKs and binaries

- A stream resource named “kvs-plumerai-realtek-stream”, created in the Kinesis Video Streams console.

- The Realtek Ameba Pro2 Mini MCU.

- Basic knowledge about embedded systems and working in a Linux environment.

- Internet connection to download the SDK and upload videos to AWS.

- Library and machine learning model files from Plumerai. (Please submit your request on the Plumerai Website.)
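
The three Kinesis Video Streams API permissions listed above can be expressed as an IAM policy scoped to the stream. The sketch below builds such a policy document and prints it as JSON. The region and account ID are placeholders, `build_policy` is a hypothetical helper, and your organization may require a different scope.

```python
import json

STREAM_NAME = "kvs-plumerai-realtek-stream"  # stream name from the prerequisites


def build_policy(region: str, account_id: str, stream_name: str = STREAM_NAME) -> dict:
    """Build an IAM policy limited to the three API actions listed above."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "kinesisvideo:GetDataEndpoint",
                    "kinesisvideo:DescribeStream",
                    "kinesisvideo:PutMedia",
                ],
                # Stream ARNs end with a creation timestamp, hence the wildcard.
                "Resource": f"arn:aws:kinesisvideo:{region}:{account_id}:stream/{stream_name}/*",
            }
        ],
    }


# Placeholder region and account ID; replace them with your own values.
print(json.dumps(build_policy("us-east-1", "123456789012"), indent=2))
```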

Set up the build environment

This post uses an Amazon EC2 instance running Ubuntu 22.04 LTS as the build environment. Alternatively, you can use your own Ubuntu computer to cross-compile the SDK.

Amazon EC2 instance setup:

- Sign in to the AWS Management Console and navigate to Amazon EC2.

- Launch an instance with the following configuration:

- Instance name: KVS_AmebaPlumerAI_poc

- Application and OS Images: Ubuntu Server 22.04 LTS (HVM)

- Instance type: t3.large

- Create a new key pair for login: kvs-plumerai-realtek-keypair

- Configure storage: 100 GiB

- Follow SSH connection prerequisites to allow inbound SSH traffic.

Download the sample script from GitHub:

- Using the following command, log into your Amazon EC2 instance (be sure to replace xxx.yyy.zzz with the instance’s IP address). For detailed instructions, see Connect to your Linux instance using an SSH client.

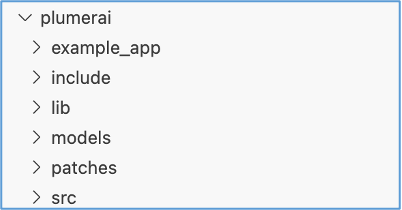

Obtain the Plumerai library:

- Using the Plumerai contact us form, submit a request to receive a copy of their demo package. Once you have the package, replace the “plumerai” directory with it in the Amazon EC2 instance. The updated directory structure should be the following:

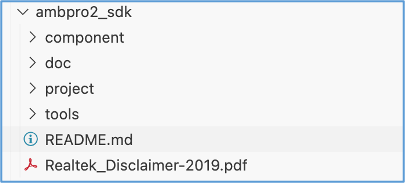

Obtain the Ameba SDK:

- Please contact Realtek to obtain the latest Ameba Pro2 SDK. On the Amazon EC2 instance, replace the “ambpro2_sdk” directory with it. The directory structure should be the following:

Install dependencies and configure environment

- Run the script setup_kvs_ameba_plumerai.sh in the directory sample-kvs-edge_ai-video-streaming-solution from the GitHub repository:

The script will automatically install the Linux dependencies, build the Realtek toolchain, run necessary Plumerai patches, copy model files, and download the Kinesis Video Streams Producer SDK. If you experience an error in the process, please contact Realtek or Plumerai for technical support.

Configure sample in Kinesis Video Streams Producer SDK

Configure the AWS credentials, stream name, and AWS Region in the component/example/kvs_producer_mmf/sample_config.h file.

Comment out example_kvs_producer_mmf(); and example_kvs_producer_with_object_detection(); in the file /home/ubuntu/KVS_Ameba_Plumerai/ambpro2_sdk/component/example/kvs_producer_mmf/app_example.c

Zihang Huang is a solution architect at AWS. He is an IoT domain expert for connected vehicles, smart home, smart renewable energy, and industrial IoT. Before AWS, he gained technical experience at Bosch and Alibaba Cloud. Currently, he focuses on interdisciplinary solutions to integrate AWS IoT, edge computing, big data, AI, and machine learning.

Siva Somasundaram is a senior engineer at AWS and builds embedded SDK and server-side components for Kinesis Video Streams. With over 15 years of experience in video streaming services, he has developed media processing pipelines, transcoding and security features for large-scale video ingestion. His expertise spans across video compression, WebRTC, RTSP, and video AI. He is passionate about creating metadata hubs that power semantic search, RAG experiences, and pushing the boundaries of what’s possible in video technology.

Emily Chou is director at Realtek Semiconductor Corp. She specializes in wireless communication network technology and has worked with several generations of the AmebaIoT MCU. She guides a team to provide connectivity solutions, video analytics, and edge AI computing.

Marco Jacobs is the Head of Product Marketing at Plumerai, where he drives adoption of tiny, highly accurate AI solutions for smart home cameras and IoT devices. With 25 years of experience in camera and imaging applications, he seamlessly connects executives and engineers to drive innovation. Holding seven issued patents, Marco is passionate about transforming cutting-edge AI technology into business opportunities that deliver real-world impact.
