AWS for Industries

Optimizing ad exchange infrastructure: Sharethrough’s journey from ALB to NLB

In the fast-paced world of programmatic advertising, every millisecond counts. Sharethrough recently embarked on an ambitious project to optimize their real-time bidding (RTB) exchange infrastructure. We’ll explore how Sharethrough, with support from Amazon Web Services (AWS), successfully migrated from Application Load Balancers (ALBs) to Network Load Balancers (NLBs), achieving significant cost savings and performance improvements.

The challenge: Scaling efficiency under high traffic

Sharethrough, now part of the Equativ Brand, is a global omni-channel ad exchange and has been an AWS customer since 2011. Their RTB exchange processes over four million requests every second across four AWS Regions, serving more than 200 countries. While their ALB setup performed well, the increasing traffic volume led to escalating costs. The company needed a solution that could handle their massive scale while optimizing expenses.

ALB for AdTech workloads

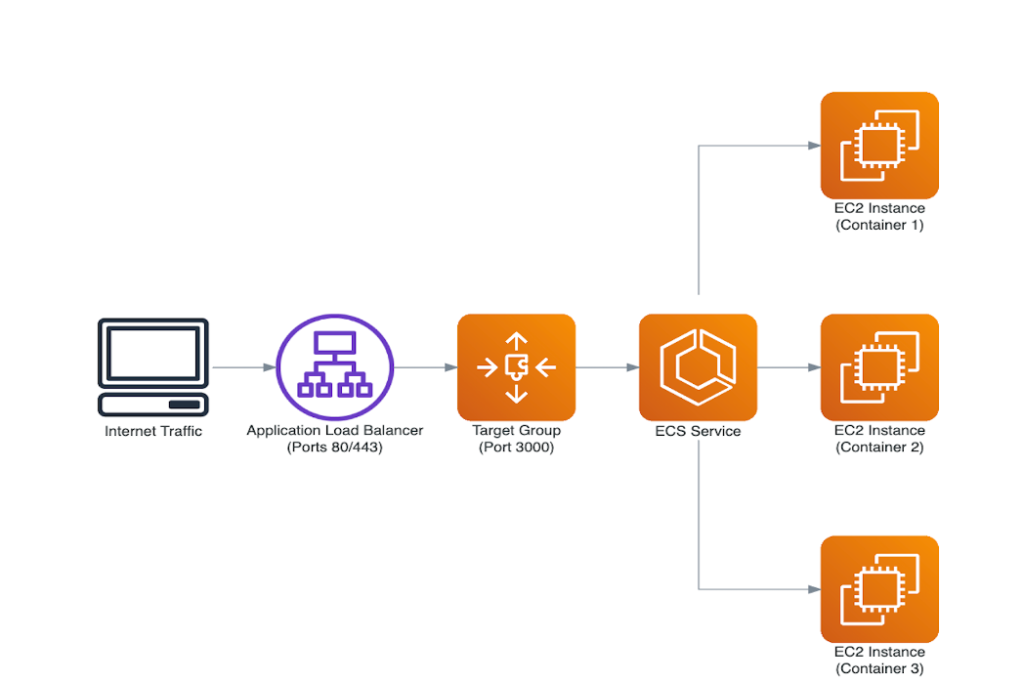

Sharethrough’s infrastructure using ALB was straightforward:

- An ALB configured to listen on ports 80 and 443 for incoming HTTP/HTTPS traffic from publishers. The ALB acted as a frontend routing layer.

- The ALB was associated with a target group containing the Amazon Elastic Container Service (Amazon ECS) service that managed the backend ad servers. The target group specified the port (for example, 3000) on the Amazon ECS containers to which the ALB forwarded traffic.

- The Amazon ECS service ran the ad server application across multiple container instances on Amazon EC2 instances. The ALB distributed the incoming traffic across the Amazon ECS containers in a round-robin fashion to provide scalability and high availability.

- The ALB handled TLS termination and health checks, and supported automatic scaling of the Amazon ECS service based on traffic patterns.

Why move to NLB?

Although ALB performed well, AdTech companies operate with very narrow operating margins. This need for cost optimization motivated Sharethrough to rethink their architecture.

ALB provides Layer 7 (OSI model) routing capabilities, including path-based routing and TLS termination. However, increasing traffic volume led to proportionally higher ALB costs, because the number of newly established connections per second drove up Load Balancer Capacity Unit (LCU) charges (see ALB pricing model). After working with the AWS Enterprise Support team, we decided that migrating to NLB was the most cost-effective solution.

NLBs, operating at Layer 4 (transport layer) of the OSI model, deliver consistent performance with ultra-low latency and can handle millions of requests per second, which makes them particularly suitable for high-throughput applications requiring connection-level routing.

The migration decision was primarily driven by NLB’s cost efficiency, offering approximately 25 percent lower LCU costs compared to ALB for equivalent traffic volumes based on our workload analysis. This cost advantage becomes increasingly significant as traffic scales due to NLB’s simplified pricing structure. However, the transition required careful consideration of trade-offs, including the need to handle TLS termination at the target level and implementing application-layer routing logic within the application stack.
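The LCU comparison above can be sketched as a small calculation. This is only an illustration under stated assumptions: the per-LCU capacities and hourly prices below are assumed figures modelled on public us-east-1 pricing, the ALB rule-evaluation dimension is left out, and the example workload is hypothetical rather than Sharethrough's actual traffic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LcuDimensions:
    """What one capacity unit covers in each billed dimension (assumed values)."""
    new_connections_per_second: float
    active_connections: float
    processed_gb_per_hour: float
    price_per_lcu_hour: float  # USD, assumed


# Assumed figures; check the current pricing pages before relying on them.
ALB = LcuDimensions(25, 3_000, 1.0, 0.008)
NLB_TCP = LcuDimensions(800, 100_000, 1.0, 0.006)


def lcus_per_hour(dims, new_conns_per_sec, active_conns, gb_per_hour):
    """Only the dimension with the highest consumption is billed."""
    return max(
        new_conns_per_sec / dims.new_connections_per_second,
        active_conns / dims.active_connections,
        gb_per_hour / dims.processed_gb_per_hour,
    )


def hourly_lcu_cost(dims, new_conns_per_sec, active_conns, gb_per_hour):
    """Hourly capacity-unit charge in USD for the given workload."""
    units = lcus_per_hour(dims, new_conns_per_sec, active_conns, gb_per_hour)
    return units * dims.price_per_lcu_hour


# Hypothetical connection-heavy workload: many short-lived connections, few bytes.
workload = dict(new_conns_per_sec=2_500, active_conns=30_000, gb_per_hour=10)
print("ALB LCUs:", lcus_per_hour(ALB, **workload))
print("NLB LCUs:", lcus_per_hour(NLB_TCP, **workload))
```

With these assumed numbers, new connections dominate the ALB bill while processed bytes dominate the NLB bill, which is why a connection-heavy workload is where the two pricing models diverge most.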

When “simple” becomes complex

Our initial plan seemed straightforward enough: swap our Application Load Balancers for Network Load Balancers in our existing infrastructure. Same architecture, different load balancer type. How hard could it be?

As it turns out, quite challenging.

The NLB balancing act

Network Load Balancers offer two approaches to handling traffic across Availability Zones (AZs): cross-AZ load balancing enabled or disabled. Each option presented its own set of obstacles for our high-volume AdTech platform.

With Cross-AZ balancing enabled, NLB distributes traffic to all tasks regardless of their Availability Zone. Perfect for resilience, but we quickly hit two roadblocks:

- AWS imposes regional limits on targets for each Target Group behind an NLB (500 is the default limit, 1,000 is the maximum hard limit)

- Every byte crossing Availability Zone boundaries incurs data transfer charges—a potentially significant cost at scale

Disabling Cross-AZ balancing seemed like the answer, but this restricted each NLB node to routing traffic only to targets in its own AZ. While we successfully negotiated an increase from the default 500 targets for each Target Group to 1,000 through AWS Support, there was still a risk of future scaling constraints: the maximum number of instances we could scale to was now 1,000. This initially appeared to make NLB unsuitable for our architecture.

The network mode puzzle

Our cost optimization strategy relies on EC2 instances with public IP addresses running Amazon ECS with bridge network mode and dynamic port mapping. This configuration created an unexpected compatibility challenge with NLB.

In bridge mode, NLB listeners can only use the “Instance” target type, not the “IP” target type. This limitation exists because bridge mode with dynamic port mapping does not provide direct network paths to container IPs. It also does not use fixed, predictable ports, unlike network modes such as “awsvpc” or “host” that would enable IP targeting.

The security implications became evident. With containers assigned to random, unused ports, one must maintain broad port ranges in security groups between hosts—making precise service-to-service communication controls difficult to implement.
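The trade-off described above can be illustrated with plain Python dictionaries that mirror the shape of an Amazon ECS port mapping and an EC2 security group rule. This is a sketch only: no AWS API is called, the port range is an assumed ephemeral range (the real one depends on the instance's kernel and Docker settings), and the security group ID is a placeholder.

```python
# Assumed ephemeral host-port range for dynamic port mapping (illustrative).
EPHEMERAL_PORT_RANGE = (32768, 65535)


def bridge_port_mapping(container_port):
    """Port mapping for a bridge-mode task; hostPort 0 requests a dynamic host port."""
    return {"containerPort": container_port, "hostPort": 0, "protocol": "tcp"}


def ingress_rule_for_dynamic_ports(source_security_group_id):
    """Ingress rule that has to cover the whole range a container might land on."""
    low, high = EPHEMERAL_PORT_RANGE
    return {
        "IpProtocol": "tcp",
        "FromPort": low,
        "ToPort": high,
        "UserIdGroupPairs": [{"GroupId": source_security_group_id}],
    }


def exposed_port_count(rule):
    """Number of ports opened by a single rule."""
    return rule["ToPort"] - rule["FromPort"] + 1


mapping = bridge_port_mapping(3000)
rule = ingress_rule_for_dynamic_ports("sg-EXAMPLE")  # placeholder ID
print(mapping)
print("ports opened between hosts:", exposed_port_count(rule))
```

Because the host port is unknown until the task starts, the rule cannot be narrowed to the single port a service actually uses, which is the control problem the paragraph above describes.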

The protocol predicament

As we gradually enabled HTTP/2 alongside HTTP/1.1, we noticed traffic becoming unevenly distributed across our ad servers. The root cause? NLB operates at Layer 4 and cannot distinguish between HTTP protocols, treating HTTP/1.1 and HTTP/2 as identical Transmission Control Protocol (TCP) streams.

This protocol blindness led to some servers handling disproportionately more requests than others. The solution required implementing TLS termination at the backend, behind the NLB, adding another layer of complexity to our migration.
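A toy simulation shows why balancing connections is not the same as balancing requests. It is a deliberately contrived sketch: connections are assigned round-robin (a simplification of NLB's flow-based selection), and the request counts per HTTP/1.1 and HTTP/2 connection are hypothetical.

```python
from collections import Counter


def requests_per_server(connections, server_count):
    """Assign each connection to a server round-robin and total the requests.

    `connections` holds one request count per TCP connection. The balancer
    in this model sees only connections, never the requests inside them.
    """
    totals = Counter()
    for index, request_count in enumerate(connections):
        totals[index % server_count] += request_count
    return [totals[server] for server in range(server_count)]


HTTP1_REQUESTS = 10    # hypothetical requests over one HTTP/1.1 connection
HTTP2_REQUESTS = 100   # hypothetical requests multiplexed over one HTTP/2 connection

# Eight connections; every fourth one is a long-lived HTTP/2 connection.
connections = [HTTP2_REQUESTS if i % 4 == 0 else HTTP1_REQUESTS for i in range(8)]

print(requests_per_server(connections, server_count=4))  # [200, 20, 20, 20]
```

Every server receives exactly two connections, yet one of them serves ten times the requests of the others, because the multiplexed HTTP/2 connections are indistinguishable from HTTP/1.1 ones at Layer 4.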

The spot instance challenge

Perhaps the most significant hurdle involved our extensive use of EC2 Spot Instances, a critical cost-saving measure that directly improves margins on ad serving operations. NLB distributes traffic equally across all Availability Zones regardless of actual instance capacity.

This equal distribution clashed with the natural variability of Spot Instance availability across AZs. Compute capacity fluctuates between zones based on spot market conditions, creating a fundamental mismatch with NLB’s traffic distribution pattern.
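The mismatch can be quantified with a short calculation. The sketch below assumes traffic is split evenly across AZs and evenly across instances within an AZ; the traffic volume and instance counts are hypothetical.

```python
def per_instance_load(total_rps, instances_by_az):
    """Requests per second each instance sees when every AZ gets an equal share."""
    az_share = total_rps / len(instances_by_az)
    return {
        az: (az_share / count if count else float("inf"))
        for az, count in instances_by_az.items()
    }


# Hypothetical Spot capacity: one AZ has lost most of its instances.
fleet = {"az-a": 100, "az-b": 50, "az-c": 25}
for az, rps in per_instance_load(900_000, fleet).items():
    print(f"{az}: {rps:.0f} requests/second per instance")
```

In this example the AZ with a quarter of the capacity still receives a third of the traffic, so its instances carry four times the load of those in the best-provisioned AZ.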

What started as a load balancer swap had evolved into a complex architectural challenge requiring creative solutions.

The solution overview

Working closely with AWS, Sharethrough implemented creative solutions to address the previously mentioned challenges.

Envoy integration

With guidance from the AWS Technical Account Manager, the solution incorporated Envoy for enhanced security (since it was built to be exposed to the open internet) and TLS termination. This allows the NLB to operate without TLS, reducing costs.

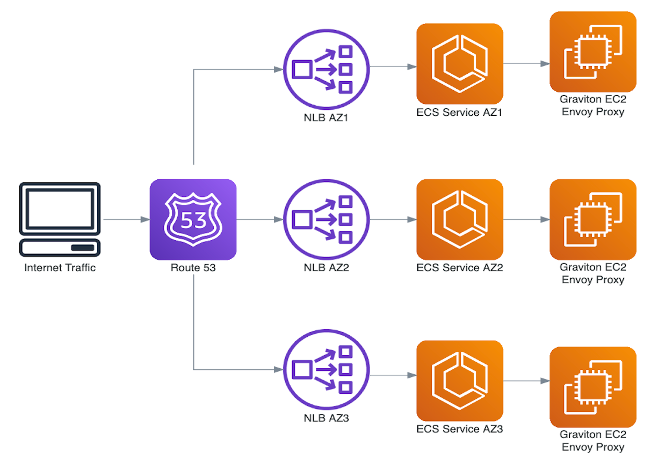

Multi-AZ architecture

The architecture employs multiple NLB, Amazon ECS, and Auto Scaling group configurations to circumvent the NLB target limits. In addition, Amazon ECS has built-in throttling that limits how many tasks can be started simultaneously to prevent overwhelming the infrastructure.

By splitting Amazon ECS services across AZs, the system achieved faster deployments and scaling, as we were able to run multiple Amazon ECS task sets in parallel across AZs. This approach maintained application stability while slightly improving latency, because NLB’s connection-based architecture reduces processing overhead.

Crucially, the implementation proved highly cost-effective compared to the previous ALB setup, satisfying both engineering and management priorities.
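To make the per-AZ split concrete, here is a minimal sketch of the target-limit arithmetic. It assumes one target group per AZ, each subject to the 1,000-target limit mentioned earlier; the instance counts are hypothetical and the helper names are illustrative, not part of any AWS API.

```python
TARGET_LIMIT_PER_GROUP = 1_000  # raised limit from the text; the default is 500


def fits_single_target_group(instances_by_az, limit=TARGET_LIMIT_PER_GROUP):
    """True if the whole fleet could be registered in one target group."""
    return sum(instances_by_az.values()) <= limit


def oversized_per_az_groups(instances_by_az, limit=TARGET_LIMIT_PER_GROUP):
    """AZs whose dedicated target group would still exceed the limit."""
    return [az for az, count in instances_by_az.items() if count > limit]


# Hypothetical fleet that has outgrown a single target group.
fleet = {"az-a": 600, "az-b": 450, "az-c": 300}
print("single group fits:", fits_single_target_group(fleet))     # False
print("per-AZ groups over limit:", oversized_per_az_groups(fleet))  # []
```

Under this reading, the limit applies to each AZ's fleet separately, so total capacity grows with the number of AZs instead of being capped at one target group's size.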

Amazon ECS optimization

Sharethrough then migrated to Amazon ECS Capacity Providers with Auto Scaling Groups for granular control over workloads.

AWS Graviton adoption

The last piece of the puzzle was integrating AWS Graviton processors for improved performance and cost-efficiency.

Figure 2 – Optimized NLB architecture

Results and Benefits

Sharethrough’s migration to NLB yielded impressive results across three key areas: cost optimization, performance preservation, and strategic improvements.

Cost optimization

- Load balancer cost reduction: 30 percent decrease achieved by transitioning from ALB to NLB

- TLS offloading strategy: Improved cost efficiency by reducing infrastructure overhead

- Annual recurring costs: Net six-figure decrease from the SPOT.IO to Amazon ECS migration

Performance preservation

- Application resilience: No performance degradation, maintaining system stability during transition

- Architectural integrity: Consistent performance metrics validating the migration approach

- Faster deployments: Migrating to Amazon ECS Capacity Provider with Auto Scaling Groups reduced instance provisioning time by 40 percent—leading to faster deployments

Strategic improvements

- New architecture framework: Enabled future optimizations through creation of a scalable infrastructure model

- Infrastructure modernization: Migration to native services resulting in reduced vendor lock-in

As an additional benefit of the successful Network Load Balancer migration, we achieved architectural optimization by leveraging native Amazon ECS features and services. The new configuration provided more granular control over our workloads through a “one service for each AZ” approach, enabling us to dynamically adjust resource allocation based on instance availability within each Availability Zone.

Lessons learned

While the migration was ultimately successful, Sharethrough’s experience offers valuable insights for other organizations considering a similar move:

- Carefully analyze traffic patterns and conduct detailed cost comparisons before migration.

- Consider the total cost of ownership, not just load balancer pricing.

- Perform proof-of-concept testing to validate cost implications and performance impacts.

- Leverage AWS expertise for complex optimizations to balance technical requirements with business objectives.

When moving to NLB may not be the right fit:

When processed bytes are the primary cost driver in your workload, Network Load Balancer with TLS termination at the backend can lead to significant cost increases. This occurs because NLB charges are directly tied to the volume of data processed. When TLS termination happens at the backend rather than at the load balancer, all traffic passes through the NLB in its encrypted form.

The encrypted TLS traffic typically includes overhead from the encryption itself, making the payload larger than the original unencrypted data. This encryption overhead can increase the total bytes processed by approximately 25 percent, directly translating to higher NLB costs since you’re paying for processing this additional encrypted data volume.

This cost impact is particularly relevant for data-intensive applications. Such applications include media streaming, large file transfers, or high-volume API services where the data transfer volume significantly outweighs the request count in the overall cost calculation.
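The effect of the encryption overhead on the bytes dimension can be worked through as follows. This is a rough sketch: the 25 percent overhead is the approximate figure quoted above, while the 1 GB per capacity unit and the hourly price are assumed values, and the traffic volume is hypothetical.

```python
NLCU_GB_PER_HOUR = 1.0        # assumed: processed bytes covered by one capacity unit
NLCU_PRICE_PER_HOUR = 0.006   # assumed price in USD; check current pricing


def processed_gb_with_tls_overhead(plaintext_gb_per_hour, overhead_fraction=0.25):
    """Bytes the NLB processes when encrypted traffic passes through it."""
    return plaintext_gb_per_hour * (1.0 + overhead_fraction)


def extra_hourly_cost(plaintext_gb_per_hour, overhead_fraction=0.25):
    """Additional hourly charge attributable to the encryption overhead alone."""
    encrypted = processed_gb_with_tls_overhead(plaintext_gb_per_hour, overhead_fraction)
    extra_units = (encrypted - plaintext_gb_per_hour) / NLCU_GB_PER_HOUR
    return extra_units * NLCU_PRICE_PER_HOUR


# Hypothetical byte-heavy workload: 400 GB of plaintext payload per hour.
print(processed_gb_with_tls_overhead(400))   # 500.0
print(round(extra_hourly_cost(400), 2))      # 0.6
```

The overhead only matters when processed bytes are the billed dimension; for connection-heavy workloads like the RTB traffic described earlier, it is unlikely to be the dominant cost.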

Conclusion

Sharethrough’s successful migration from ALB to NLB demonstrates the power of the flexible AWS infrastructure and the value of close collaboration between customers and AWS. By leveraging AWS services and implementing innovative architectural solutions, Sharethrough achieved significant cost savings while maintaining high performance and scalability for their global ad exchange platform.

This case study illustrates how AWS empowers customers to optimize their infrastructure continually, adapting to changing business needs and technological advancements. As the digital advertising landscape evolves, Sharethrough is well-positioned to grow and innovate, backed by the robust and flexible foundation of AWS services.

Contact an AWS Representative to find out how we can help accelerate your business.
