AWS for Industries

How Zurich Insurance is becoming an AI-led insurer using Amazon SageMaker AI

This AWS FSI Blog post outlines how Zurich Insurance in the UK (‘Zurich’ in the remainder of this post) is using artificial intelligence and machine learning (AI/ML) with Amazon SageMaker AI to anticipate flood claims, so that it can proactively help protect customers against potentially catastrophic events. Zurich Insurance Group is a leading insurer providing property, casualty, and life insurance solutions globally. In 2022, Zurich began a multi-year program to accelerate its digital transformation and innovation by migrating 1,000 applications to AWS, including core insurance and SAP workloads.

Introduction

The insurance model has been broadly the same for hundreds of years. Customers, who are often businesses, take out a policy, and in the event of a disaster they make a claim and receive assistance. This way of working is successful and will continue well into the future. With AI, however, insurance carriers could augment it with a completely new and complementary offering: a proactive approach that Zurich refers to as “predictive parametrics”. This matters because climate-related events are increasing claims in the UK, as reported by The Guardian.

Zurich’s first use case for proactive insurance is to predict flood claims weeks before they occur. Floods cause billions of pounds of damage to homes and businesses in the UK each year, a problem that is only getting worse due to climate change. Using multiple data sources and SageMaker AI, Zurich is testing its ability to provide early warnings for customers’ properties. If Zurich can predict a week or a month in advance that a flood is likely, they could proactively help customers mitigate the risk.

Key Business Challenges

Despite insurers’ extensive risk expertise and data amassed over hundreds of years, manual assessment methods can’t effectively predict claims at an individual level across millions of customers’ assets – from properties to cyber estates. Machine learning (ML) now offers a solution by enabling automated, continuous risk monitoring at scale.

Data science teams develop and train models, and they require a rigorous path to production so that model deployments and inference results are governed and safe. SageMaker AI can help customers establish a foundation for all data science and machine learning operations (MLOps), providing a consistent and auditable path to production for AI models. With built-in governance controls and monitoring capabilities, SageMaker AI enables Zurich to scale their ML initiatives while maintaining responsible AI practices. Zurich has also published more about its commitment to data and responsible AI.

Scaling with SageMaker AI

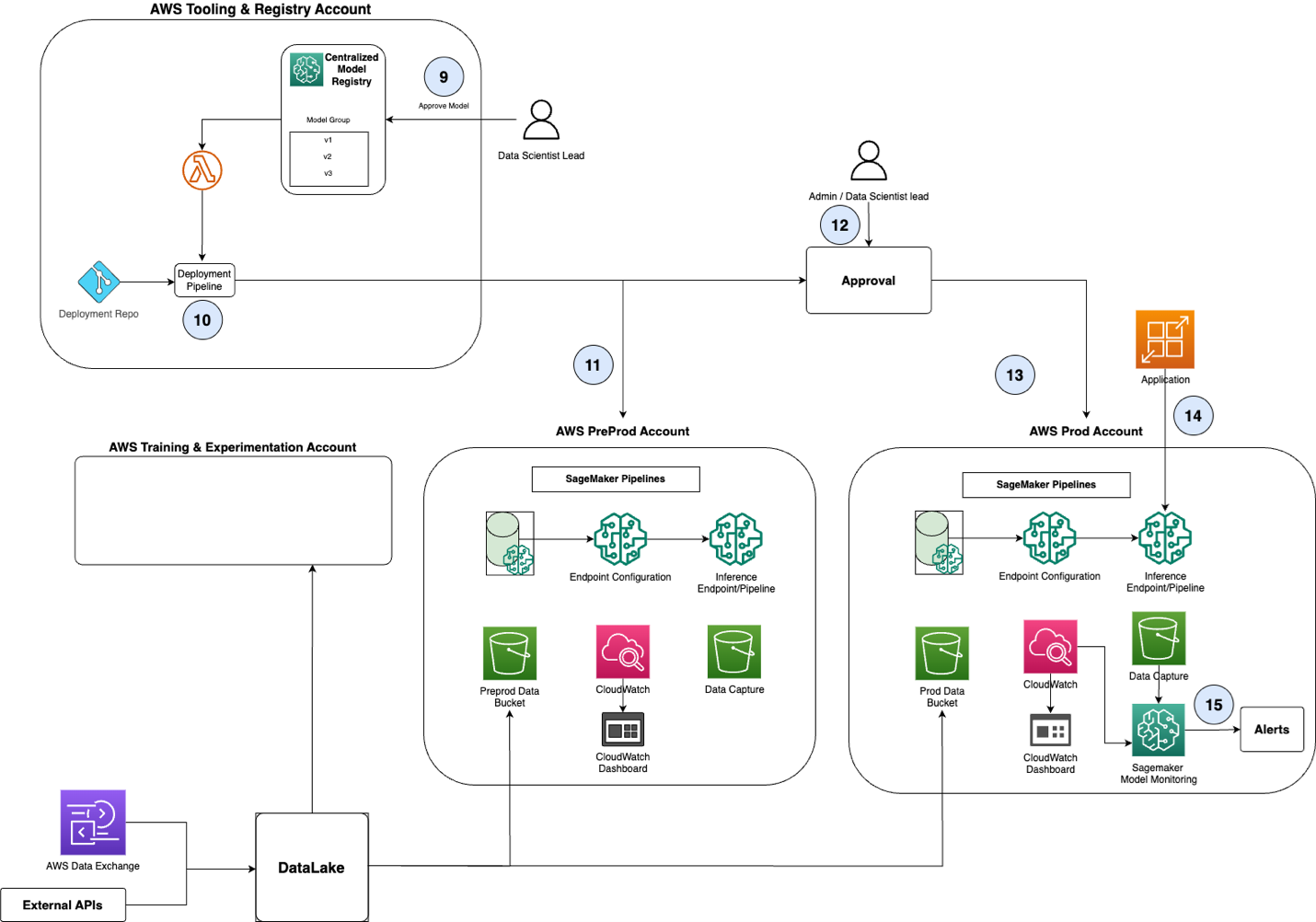

The end-to-end architecture using SageMaker AI for model development, deployment, and inferencing is outlined in Figure 1. For readability, the architecture for model approval and promotion to production is shown separately in Figure 2. Together, these diagrams represent the AWS-recommended MLOps architecture as adopted by Zurich.

Figure 1: High-level end-to-end MLOps architecture

Figure 2: Model approval and promotion

At a high level, the full architecture contains a central Tooling and Registry account and, for each Line of Business (LOB) team, three accounts that mirror its environments:

- A Training and Experimentation account

- A PreProduction account (labeled ‘PreProd’ in the diagrams) for user acceptance testing (UAT) and other testing

- A Production account (labeled ‘Prod’ in the diagrams) for final deployment

The Tooling and Registry account contains a central model registry that tracks the models trained by the various teams, their versions, and their deployment status.

Zurich has developed their strategic data asset using Snowflake, an AWS Partner. This strategic data asset serves as the primary data source for this use case. Here’s how the data is processed:

- Each customer’s address is matched and linked to the UK’s Unique Property Reference Number (UPRN), a standard adopted by the UK government for efficient identification of individual locations.

- Other external sources, such as geographic and weather data, are matched to UPRNs via geospatial algorithms.

- The data sets are anonymized (customer name and address removed) and stored in Amazon S3.

- The anonymized data is then processed by SageMaker AI.
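The data preparation steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming the customer and external data have already been matched to UPRNs and loaded as lists of dictionaries; the function `enrich_and_anonymize` and the column names (`customer_name`, `address`, `uprn`, `floor_area_m2`, `distance_to_floodplain_m`, `rainfall_30d_mm`) are hypothetical, not Zurich's actual schema. It joins external features on the UPRN and then drops the personal identifiers, here including the UPRN itself, which is only needed for the join.

```python
from typing import Dict, List

# Hypothetical identifier columns; these are removed before data reaches S3.
PII_COLUMNS = {"customer_name", "address", "uprn"}


def enrich_and_anonymize(
    policies: List[Dict], external_by_uprn: Dict[str, Dict]
) -> List[Dict]:
    """Join external features on UPRN, then drop personal identifiers."""
    rows = []
    for policy in policies:
        # Geographic and weather features previously matched to this UPRN.
        features = external_by_uprn.get(policy["uprn"], {})
        merged = {**policy, **features}
        # Keep only non-identifying fields, so the UPRN itself is dropped after the join.
        rows.append({k: v for k, v in merged.items() if k not in PII_COLUMNS})
    return rows


# Illustrative records; all values are made up.
policies = [{"uprn": "UPRN-0001", "customer_name": "A. Customer",
             "address": "1 High Street", "floor_area_m2": 120}]
external = {"UPRN-0001": {"distance_to_floodplain_m": 250.0, "rainfall_30d_mm": 88.5}}
print(enrich_and_anonymize(policies, external))
# [{'floor_area_m2': 120, 'distance_to_floodplain_m': 250.0, 'rainfall_30d_mm': 88.5}]
```

Doing the join before anonymization means the geospatial matching can still use the UPRN, while the data set that reaches SageMaker AI carries only property features.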

This data source is depicted as the Data Lake in Figure 1.

The main workflows are described below:

- Steps 1 to 8 are illustrated in Figure 1

- Steps 9 to 15 are shown in Figure 2

Create base infrastructure

First, an administrator, persona ‘admin’ in Figure 1, creates the base infrastructure needed for the data science team to submit their models to the MLOps workflow (Step 1 in Figure 1). Initially, ‘admin’ creates three AWS accounts (Training and Experimentation, PreProduction, and Production) for the data science team and then executes Terraform scripts to create the base AWS cloud infrastructure in these accounts.

The Training and Experimentation account additionally includes a new SageMaker Studio Domain that allows the data scientists to build with their preferred IDEs such as Jupyter or VS Code (Step 2).

Finally, this workflow also creates the project template repositories for model training and model deployment, which can be cloned for each data science project. These templates are registered with SageMaker AI projects as ‘Project Templates’ and will be available for data science leads to clone for their project. For example, a project template might include samples such as an XGBoost classification model, or a deep learning model using PyTorch.

Create new project

For each new ML use case, the data science lead logs in to SageMaker Studio in the Training and Experimentation account, selects the project template that matches their requirements, and clicks “Deploy” (Step 3). Behind the scenes, this triggers an AWS Lambda function that clones the project template repository into two project-specific repositories (one for training code and one for deployment code) and sets up a DevOps pipeline using the chosen CI/CD tooling (Step 4). This workflow also creates an S3 bucket to store the data for training and evaluation.
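The project-creation step can be illustrated with a sketch of what such a Lambda function might compute. The handler below is hypothetical: the event fields (`project_name`, `template_repo`, `account_id`) and the naming scheme are assumptions, and a real function would go on to call the Git provider, CI/CD tooling, and Amazon S3 APIs to create the resources. This sketch only derives a consistent set of names so that every project gets the same layout.

```python
import re
from typing import Any, Dict, Optional


def lambda_handler(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, Any]:
    """Hypothetical sketch: plan the resources for a new ML project.

    A real handler would create these resources; this one only derives their names.
    """
    # Lowercase, hyphen-separated slug (also valid in S3 bucket names).
    slug = re.sub(r"[^a-z0-9-]+", "-", event["project_name"].lower()).strip("-")
    return {
        "clone_from": event["template_repo"],
        # One repository for training code, one for deployment code.
        "repositories": [f"{slug}-model-training", f"{slug}-model-deployment"],
        "pipeline": f"{slug}-cicd",
        # An account ID suffix helps keep the bucket name globally unique.
        "data_bucket": f"{slug}-training-data-{event['account_id']}",
    }


plan = lambda_handler({
    "project_name": "Flood Risk UK",
    "template_repo": "xgboost-classification-template",
    "account_id": "111122223333",
})
print(plan["repositories"])  # ['flood-risk-uk-model-training', 'flood-risk-uk-model-deployment']
```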

Train and evaluate model

The data scientist uses SageMaker Studio within the Training and Experimentation account to access the training data from S3 and experiment with ML training and evaluation (Step 5).

When ready, the data scientist commits the training code to the project’s training code repository. The commit automatically triggers a training pipeline that trains the model on the full dataset using hyperparameter optimisation and other common techniques. This helps produce well-performing models, guards against overfitting, and captures key measures of model performance (Step 6).
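A minimal sketch of one common tuning strategy, random search, shows why hyperparameter optimisation and overfitting control go together: each candidate configuration is scored on held-out validation data, not on the data it was trained on. The `random_search` function, the hyperparameter names, and the toy loss are illustrative assumptions; SageMaker AI's automatic model tuning runs this kind of search as managed training jobs and is configured rather than hand-written.

```python
import random
from typing import Callable, Dict, Optional, Tuple

Space = Dict[str, Tuple[float, float]]


def random_search(
    objective: Callable[[Dict[str, float]], float],
    space: Space,
    n_trials: int = 50,
    seed: int = 0,
) -> Tuple[Optional[Dict[str, float]], float]:
    """Minimize `objective` by sampling each hyperparameter uniformly from its range."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_params: Optional[Dict[str, float]] = None
    best_score = float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        # The objective should train on training data and return validation loss,
        # so configurations that merely memorize the training set tend to score poorly.
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy stand-in for "validation loss as a function of two hyperparameters".
def toy_validation_loss(p: Dict[str, float]) -> float:
    return (p["learning_rate"] - 0.1) ** 2 + (p["subsample"] - 0.8) ** 2


space = {"learning_rate": (0.01, 0.3), "subsample": (0.5, 1.0)}
best, loss = random_search(toy_validation_loss, space, n_trials=100, seed=7)
print(best, loss)
```

Random search is only one option; strategies such as Bayesian optimization use earlier results to choose the next configuration, which usually needs fewer trials.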

SageMaker AI supports several hyperparameter tuning strategies, including grid search, random search, Bayesian optimization, and Hyperband. The training pipeline creates a new model version in the team’s model registry (Step 7). Once satisfied with the metrics, the data scientist can trigger the approval process in their team’s model registry to push the model to the centralized model registry in the Tooling and Registry account (Step 8).

Promote model to PreProd and Prod

Once the model and code have been thoroughly checked, the data science lead approves the model in the centralized model registry in the Tooling and Registry account (Step 9 in Figure 2).
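The approval hand-off between the registry and the deployment pipeline can be modeled as a small state machine. The sketch below is an in-memory stand-in, not the SageMaker AI Model Registry API: the class `CentralRegistry` and its methods are hypothetical, although the status strings mirror the registry's `ModelApprovalStatus` values. In an AWS setup, the equivalent trigger is typically an Amazon EventBridge rule that reacts to model package state changes and starts the deployment pipeline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# Mirrors SageMaker Model Registry's ModelApprovalStatus values.
PENDING, APPROVED, REJECTED = "PendingManualApproval", "Approved", "Rejected"


@dataclass
class CentralRegistry:
    """Hypothetical in-memory stand-in for the central model registry."""
    on_approved: Callable[[str, int], None]  # e.g. starts the deployment pipeline
    statuses: Dict[Tuple[str, int], str] = field(default_factory=dict)

    def register(self, group: str, version: int) -> None:
        # A version pushed from a team registry (Step 8) waits for review.
        self.statuses[(group, version)] = PENDING

    def review(self, group: str, version: int, decision: str) -> None:
        if decision not in (APPROVED, REJECTED):
            raise ValueError(f"invalid decision: {decision}")
        if self.statuses.get((group, version)) != PENDING:
            raise ValueError("only pending versions can be reviewed")
        self.statuses[(group, version)] = decision
        if decision == APPROVED:
            # Step 9 -> Step 10: approval triggers the deployment pipeline.
            self.on_approved(group, version)


triggered = []
registry = CentralRegistry(on_approved=lambda g, v: triggered.append((g, v)))
registry.register("flood-risk", 1)
registry.register("flood-risk", 2)
registry.review("flood-risk", 1, REJECTED)
registry.review("flood-risk", 2, APPROVED)
print(triggered)  # [('flood-risk', 2)]
```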

As a result, the deployment pipeline is automatically triggered (Step 10), which promotes the model artifacts to the PreProd environment of SageMaker AI (Step 11). Here the model is tested against hold-out datasets (stored in the PreProd data bucket on Amazon S3) and compared with the current production model, if one exists (Step 12).
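The PreProd checks can be expressed as a promotion gate. This is a hypothetical sketch, assuming each model's hold-out metrics are available as a dictionary where higher is better; the function `passes_preprod_gate` and the metric names `auc` and `recall` are illustrative. The candidate must clear absolute thresholds and, if a production model exists, must match or exceed it on every gated metric, optionally by a minimum margin.

```python
from typing import Dict, Optional


def passes_preprod_gate(
    candidate: Dict[str, float],
    production: Optional[Dict[str, float]],
    thresholds: Dict[str, float],
    min_improvement: float = 0.0,
) -> bool:
    """Hypothetical promotion gate on hold-out metrics (higher is better)."""
    for metric, floor in thresholds.items():
        # Absolute quality bar, independent of any existing model.
        if candidate.get(metric, float("-inf")) < floor:
            return False
    if production is None:
        # First deployment: nothing to compare against.
        return True
    # Champion/challenger: the candidate must match or beat production on every gated metric.
    return all(
        candidate[m] >= production.get(m, float("-inf")) + min_improvement
        for m in thresholds
    )


thresholds = {"auc": 0.80, "recall": 0.60}
print(passes_preprod_gate({"auc": 0.86, "recall": 0.72}, {"auc": 0.84, "recall": 0.70}, thresholds))  # True
print(passes_preprod_gate({"auc": 0.83, "recall": 0.72}, {"auc": 0.84, "recall": 0.70}, thresholds))  # False
```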

Upon passing the required checks, the data science lead can approve the deployment of the model artifacts to production (Step 13). Different deployment strategies such as all-at-once, canary, or linear deployment are supported out of the box with SageMaker AI.
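The three strategies differ only in how quickly traffic moves to the new model version. The sketch below models that as a schedule of percentages plus a rollout loop that sends all traffic back to the old version if an alarm fires; the function names and default step sizes are assumptions. In SageMaker AI itself, these strategies are selected through the endpoint's blue/green deployment configuration, with automatic rollback driven by Amazon CloudWatch alarms, rather than implemented by hand.

```python
from typing import Callable, List


def traffic_schedule(strategy: str, canary_percent: int = 10, linear_step_percent: int = 25) -> List[int]:
    """Share of traffic (%) on the new model version after each shift."""
    if strategy == "all_at_once":
        return [100]
    if strategy == "canary":
        # A small slice first; the remainder moves only if the canary looks healthy.
        return [canary_percent, 100]
    if strategy == "linear":
        if not 0 < linear_step_percent <= 100:
            raise ValueError("linear_step_percent must be in (0, 100]")
        # Equal-sized steps, finishing at 100%.
        return list(range(linear_step_percent, 100, linear_step_percent)) + [100]
    raise ValueError(f"unknown strategy: {strategy}")


def roll_out(schedule: List[int], alarm_fired: Callable[[int], bool]) -> int:
    """Walk the schedule; on any alarm, send all traffic back to the old version."""
    for percent in schedule:
        if alarm_fired(percent):
            return 0  # rolled back: 0% on the new version
    return 100


print(traffic_schedule("linear"))  # [25, 50, 75, 100]
print(roll_out(traffic_schedule("canary"), alarm_fired=lambda pct: pct >= 10))  # 0
```

Canary and linear deployments trade rollout speed for a smaller blast radius: a faulty model only ever serves a fraction of requests before the alarm triggers a rollback.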

Monitor risk

In production, the model runs against customer data assets (stored in the production data bucket on Amazon S3), continuously checking the flood-damage risk levels of customer properties (Step 14 in Figure 2). This automation is how Zurich scales their ability to monitor their customer base as frequently as needed. Previously this would not have been possible, because risk monitoring was largely manual and did not scale to the thousands of customer assets underwritten by Zurich. SageMaker AI also monitors the model for key risks such as model drift and bias (Step 15).
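Drift detection comes down to comparing the distribution of recent inputs or predictions with a baseline captured at training time. As an illustration, the sketch below computes the Population Stability Index (PSI), a drift metric widely used in insurance and credit risk. It is a swapped-in technique, not the statistic SageMaker AI computes internally, and the bin count and thresholds are assumptions; a common rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], recent: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `recent` relative to `baseline`."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins from the baseline range; outliers go to the end bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    p, q = proportions(baseline), proportions(recent)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


baseline = [i / 100 for i in range(100)]       # e.g. a feature at training time
shifted = [0.5 + i / 200 for i in range(100)]  # the same feature after a shift
score = psi(baseline, shifted)
print("significant drift" if score > 0.25 else "stable or moderate")  # significant drift
```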

This process is repeatable for new models, with each new model stored in a central registry, providing a consistent and auditable path to production for Zurich’s AI models.

Responsible AI by design

The MLOps capabilities of SageMaker AI enable Zurich to implement responsible AI through robust monitoring and transparency. Zurich integrates their AI assurance framework directly into the model development process via SageMaker AI, ensuring compliance by design.

To reduce bias in model training, Zurich takes a privacy-first approach that removes personal information (customer names and addresses, including UPRNs) from the training data set before it is transferred to SageMaker AI. The model uses only relevant property features, such as size and proximity to flood plains, to make its predictions.

Continuous monitoring allows Zurich to detect both sudden performance changes and gradual drift in their AI models. SageMaker AI’s fully auditable MLOps process documents each development step, which makes models easier to explain and to roll back when needed.
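Auditability and rollback both rest on keeping an append-only record of what was deployed, when, and on whose approval. The `DeploymentHistory` class below is a hypothetical sketch of that idea, not a SageMaker AI API; in practice, the model registry and pipeline execution history serve this role. Rolling back means redeploying the most recent version that differs from the one currently live, and recording that as a new event rather than deleting history.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class DeploymentHistory:
    """Hypothetical append-only audit log of production deployments for one model."""
    events: List[dict] = field(default_factory=list)

    def record(self, version: int, approved_by: str, reason: str) -> None:
        # Every deployment, including rollbacks, is appended; nothing is overwritten.
        self.events.append({
            "version": version,
            "approved_by": approved_by,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def live_version(self) -> Optional[int]:
        return self.events[-1]["version"] if self.events else None

    def rollback(self, approved_by: str, reason: str) -> int:
        """Redeploy the most recent version that differs from the live one."""
        live = self.live_version()
        for event in reversed(self.events):
            if event["version"] != live:
                self.record(event["version"], approved_by, f"rollback: {reason}")
                return event["version"]
        raise RuntimeError("no earlier version to roll back to")


history = DeploymentHistory()
history.record(1, "data-science-lead", "initial release")
history.record(2, "data-science-lead", "retrained on new data")
print(history.rollback("data-science-lead", "drift alert"))  # 1
```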

Scalability and Reusability

Zurich, as a global organization with distributed AI teams, required a platform for productionizing models and sharing internal AI assets across the company. These teams work on a variety of projects: not just risk models, but also generative AI tools that drive efficiency, tools that simplify customer interactions, and more.

Zurich standardized their MLOps using SageMaker AI, allowing teams worldwide to deploy models through traceable processes. AWS Regions are selected based on data residency requirements. The shared model registry allows teams to publish and access models company-wide, complete with statistics and documentation. In this way, Zurich is developing modular AI components to speed up deployment of risk models while maximizing reuse.

Jonathan Davis, Data Science Lead at Zurich Insurance UK, highlights the impact of this approach:

“Having the ability to re-use patterns for deployment through Amazon SageMaker AI has substantially accelerated our speed to deploy, from around 26 weeks without re-usable patterns to around 8 weeks with them, which other teams across the organisation have benefitted from as well.”

Delivery Approach

Zurich has established SageMaker AI as a key tool in their AI and ML toolkit, with built-in MLOps capability so they can quickly build and deploy models with the checks needed to keep them reliable, auditable, and safe. In the UK in particular, the data scientists have been working directly with AWS teams to build this capability alongside the migration of their data warehouse assets to Snowflake on AWS. The result is an end-to-end platform where AI and ML models can be trained on anonymized data from existing risk experience and then deployed to monitor changing risks in the customer base.

To accelerate the delivery of the MLOps platform, Zurich’s data science team partnered with the AWS FSI Prototyping and Cloud Engineering (PACE) team. The FSI PACE team accelerates financial services customers’ cloud journeys through a unique combination of hands-on prototype development and domain expertise. Through focused 4- to 8-week development cycles, the team delivers working solutions in AWS-owned accounts that demonstrate tangible business value, while enabling knowledge transfer through Experience Based Acceleration (EBA) workshops that help customers successfully deploy and adopt these solutions.

Starting with a whiteboarding session to agree architecture and scope, the PACE team built the prototype within 8 weeks and deployed the first version of code to Zurich’s AWS environment using an EBA workshop where PACE worked together with the Zurich team.

Conclusion

This Amazon SageMaker AI platform implementation marks Zurich’s first step in the use of AI to prevent flood damage through accurate and timely predictions, which is especially vital as these events become more frequent due to climate change. With SageMaker AI, Zurich can develop AI/ML models at scale, with a rigorous MLOps framework to ensure models can be deployed rapidly and re-used across Zurich. Zurich hopes to contribute to the insurance industry’s ability to use AI to ensure customers always receive the best protection, and the aim is that this work with SageMaker AI is only the beginning of a proactive revolution in the insurance model.