AWS Database Blog

Restore self-managed Db2 Linux databases in Amazon RDS for Db2

As more organizations migrate their self-managed Db2 Linux-based workloads to Amazon Relational Database Service (Amazon RDS) for Db2, migration teams are learning that preparation is key to avoiding project delays. Common roadblocks include outdated database versions, invalid objects, and improper storage configurations that surface during the migration process.

In this post, we introduce a Db2 Migration Prerequisites Validation Tool that catches these issues before they impact your timeline. This tool performs thorough pre-migration validation and guides you through the necessary preparations for migrating self-managed Db2 on Linux to Amazon RDS for Db2

Solution overview

The Db2 Migration Prerequisites Validation Tool performs comprehensive pre-migration assessments across various validation categories to ascertain migration readiness. Upon identifying any discrepancies, the tool offers specific, actionable recommendations for remediation. These detailed insights facilitate database administrators and migration teams in systematically addressing potential issues. The identified problems must be resolved prior to creating the final on-premises Db2 backup, which will be used for restoration in Amazon RDS for Db2.

This proactive approach reduces failures and provides a smooth transition to Amazon RDS for Db2. For step-by-step guidance on migrating your self-managed Db2 databases from Linux to Amazon RDS for Db2, see Migrating from Linux to Linux for Amazon RDS for Db2.

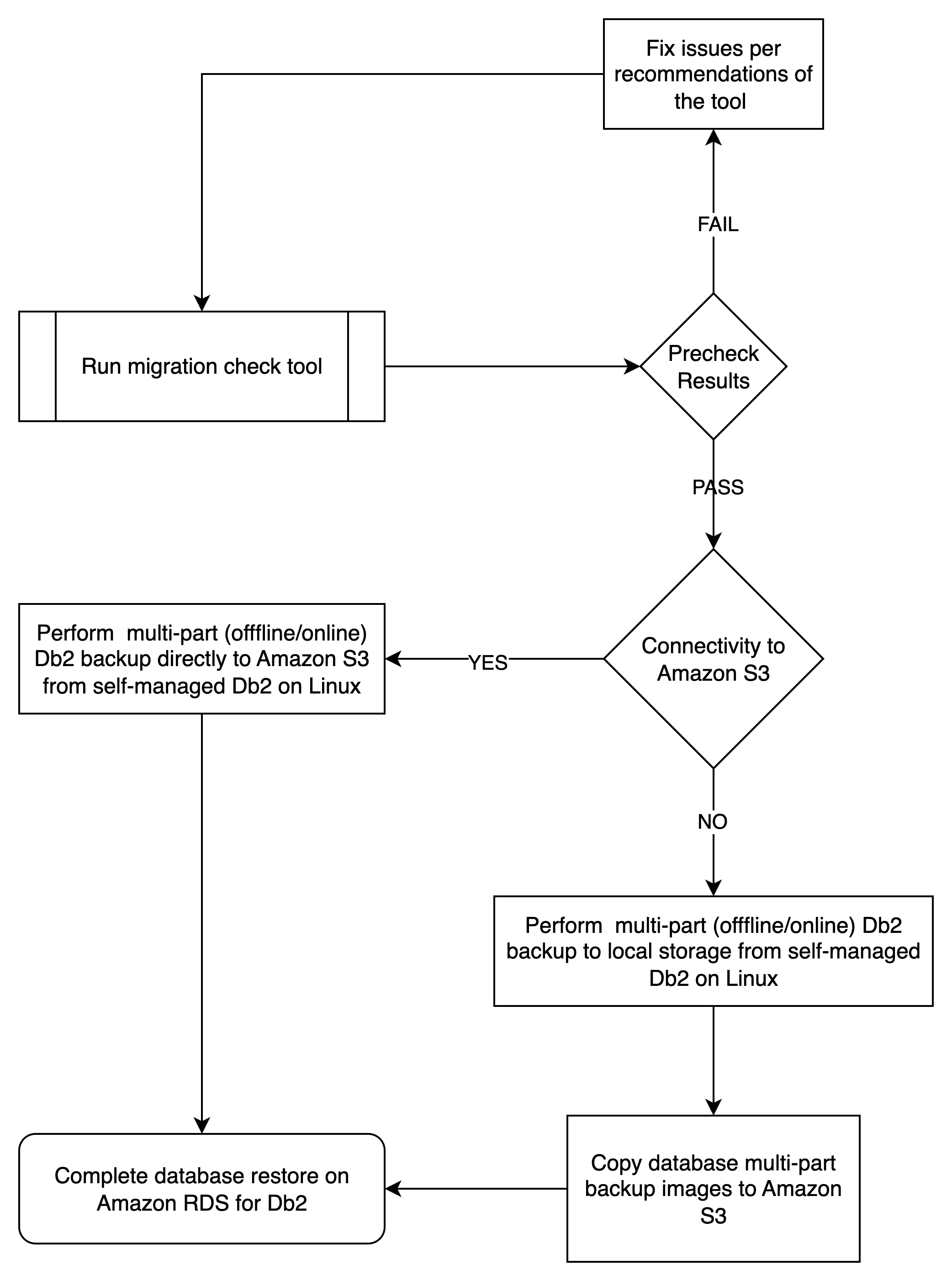

The following diagram illustrates the general process flow to facilitate successful restoration from an on-premises, self-managed Db2 database on Linux to Amazon RDS for Db2.

The tool offers the following key features:

- Cross-platform support:

- Linux on x86, Linux on POWER

- Compatible with older bash versions

- No external dependencies beyond standard Unix tools

- For non-Linux platforms or other migration options, consider using Db2 Migration Tooling (Db2MT)

- For more details about migration, refer to Migrate from self-managed Db2 to Amazon RDS for Db2 using AWS DMS and Data migration strategies to Amazon RDS for Db2

- Multiple operation modes:

- Interactive mode – Guided experience with user prompts

- Non-interactive mode – Automated execution for scripting

- Remote Mode – Connect to remote Db2 databases for validation

- Comprehensive reporting:

- Color-coded console output

- Detailed log files with timestamps

- PASS/FAIL/WARNING status for each check

- Actionable recommendations for failures

- Inventory analysis:

- Complete database object inventory

- Sanity checks for migration readiness

- Object count summaries by type

You can use the tool in the following scenarios:

- Pre-migration planning – To identify potential issues early and allocate time for remediation.

- Migration readiness assessment – To perform a final validation before initiating the migration process to Amazon RDS for Db2.

- After fixpack upgrades – Validate databases after applying Db2 fixpacks to confirm proper update completion.

- Before taking final Db2 backups – To confirm readiness before restoring to Amazon RDS for Db2, a clean output can safeguard against restore. We provide general guidance on using the database backup command later in this post.

How to use the tool

Prerequisites

Before getting started, note the following prerequisites and limitations:

Local Mode

- The Db2 instance must be running and accessible

- Run the tool in the Db2 server using SYSADM authority

- The Db2 environment must be properly sourced (

. ~/sqllib/db2profile) - Run as the Db2 instance user (for example, db2inst1)

Remote Mode

- Db2 client must be installed and configured

- Network connectivity to remote Db2 server

- Valid Db2 user credentials with DBADM or SYSMAINT privileges

- Database must be cataloged or DSN entries available in

db2dsdriver.cfgfile

In an interactive flow, the tool completes the following steps:

- Display Db2 version information.

- List available instances.

- Identify current instance for validation.

- Discover local databases in current instance.

- Validate remote databases when you cannot run the script on the Db2 server.

- Allow database selection for validation.

- Execute comprehensive validation checks.

- Generate a detailed report.

The following commands lists the relevant code for interactive execution.

Direct execute of the tool

Download and execute

Remote mode usage – direct execute

Remote mode usage – download and execute

Note: The DBNAME environment variable used for remote connection must be either locally catalogued remote database name or name of the DSN entry used in the db2dsdriver.cfg file.

The following commands lists the code for non-interactive mode when the tool is used locally on the Db2 server.

Validate specific instance

With custom report location

NOTE: Reports prefixed with “db2_inventory” will be generated in the directory where script was launched.

Verbose output for debugging

For a multi-instance execution, you can run this tool separately in each instance. See the following sample code:

Understanding tool’s output

The tool’s behavior is slightly different if you run the tool locally or through a Db2 client.

Local run

When you run the following command, it will download the script db2_migration_prereq_check.sh and run it immediately.

When executing the tool locally in a Db2 instance, it will identify all local databases and perform the validation against them.

The output will show Db2 version, number of local databases discovered, validation results, size of the database and performs an inventory analysis on the database.

Remote run

For a remote run, you must export three environment variables such as DB2USER, DB2PASSWORD and DBNAME before running the script. The validation will occur only one database at a time.

Understanding validation checks

The following table lists the various category checks. The tool will also complete a database inventory analysis and save the output locally in db2_inventory_yyyymmdd_hhmmss.json.

| Category | Checks, Recommendations, and Remediations |

| db2updv115 |

|

| InDoubt Transaction |

|

| Invalid Objects |

|

| Tablespace Check |

|

| Non-Fenced Routines |

|

| Automatic Storage |

|

| Database Config |

|

| Database Config |

|

| Database Sizing |

|

| Federation |

|

| Java stored procedures |

|

Reading and acting on reports

The following table summarizes the reports generated.

| Report Name | Details |

db2_migration_prereq_report_yyyymmdd_hhmmss.log |

This report has checks, recommendations, and remediations for all the databases scanned. |

db2_inventory_detail_ yyyymmdd_hhmmss.txt |

For each database scanned, this report contains inventory details like number of tablespaces, tables, view, indexes, and more. |

db2_inventory_summary_ yyyymmdd_hhmmss.txt |

This report summarizes inventory information for all the databases scanned. |

db2_inventory_ yyyymmdd_hhmmss.json |

For each database scanned, this report contains inventory details like number of tablespaces, tables, view, indexes, and so on in JSON format. |

Navigate to the section DATABASE SUMMARY to check DATABASE READINESS status:

The migration readiness levels are as follows:

- READY FOR MIGRATION:

- All checks passed (PASS status)

- No critical issues found

- Database ready for backup and to restore in Amazon RDS for Db2

- REVIEW REQUIRED:

- Some warnings found

- Manual review of recommendations needed

- Migration possible with considerations

- NOT READY FOR MIGRATION:

- Critical failures found

- Must address failed checks before migration

- Migration should not proceed

Best practices

Consider the following best practices:

- Run early and often:

- Execute during migration planning phase

- Rerun after any database changes

- Validate immediately before backup creation

- Address issues systematically:

- Fix FAIL items first (blocking issues)

- Review WARNING items for potential risks

- Document INFO items for reference

- Automate for multiple databases:

- Use non-interactive mode for automation

- Create scripts for multi-instance environments

- Schedule regular validation runs

- Maintain documentation:

- Keep validation reports for audit trails

- Document remediation actions taken

- Track validation history over time

Advanced usage scenarios

The following code illustrates a continuous integration scenario:

The following code illustrates a scheduled monitoring scenario:

Troubleshooting common issues

The following table presents common issues and troubleshooting tips.

| Issue | Troubleshooting |

| db2 command not found | |

| No Db2 instances found | |

| Unable to connect to database | |

| Taking too long to connect | |

| Permission error |

General guidance on using database backup command

When using the database backup command, consider the following best practices:

- Amazon RDS for Db2 uses Amazon Simple Storage Service (Amazon S3) streaming capabilities to restore the database using parallel streams. Amazon S3 streaming is most effective when dealing with multi-part files of the database backup image. For instance, the command

db2 backup database <dbname> to /backupwill generate a single image of the database, which might not be optimal from a performance standpoint. Instead, use multiple locations in the command, such asdb2 backup database to /backup, /backup, /backup, /backup, /backup. This example demonstrates that the database backup operation will be executed in parallel, resulting in the database image being divided into five parts, each labeled as.001,.002,.003,.004, and.005. - Consider employing multi-part backup even for smaller databases. Determine the number of database locations based on your Linux machine’s CPU and memory capabilities. In cases of uncertainty, it is advisable to use 20 locations.

- Consider using db2 backup directly to Amazon S3 if you have connectivity from self-managed db2 to the AWS Region’s network. To copy database multi-part images to Amazon S3, you must create a bucket in your account with the necessary privileges. Use this bucket to create a db2 storage access alias.

Consideartions for creating storage alias:

- If using Db2 on EC2, grant proper IAM role to access the Amazon S3 bucket and then do not specify

USER,PASSWORDorTOKENin the create storage alias command.

Example command - If using self-manage Db2, you can get the AWS CLI credentials and create the storage alias.

- Using log-term credentials:

- Using short-term credentials:

- Use the storage alias in the backup command to directly write database backup images to Amazon S3. For instance, if the storage alias created is

db2S3, use the command:This command will facilitate splitting database multi-part images into five parts in your S3 bucket.

General guidance on using database restore command

When using the database restore command, consider the following best practices:

- Make sure that you have enabled Amazon S3 integration for RDS for Db2 instances with the proper AWS Identity and Access Management (IAM) role for access to an S3 bucket with database backup multi-part copies.

- Use the stored procedure rdsadmin.set_configuration to set performance parameters such as

RESTORE_DATABASE_NUM_BUFFERS,RESTORE_DATABASE_PARALLELISM, andRESTORE_DATABASE_NUM_MULTI_PATHS. - Set the parameter

USE_STREAMING_RESTOREtoTRUEto enable Amazon S3 streaming direct from Amazon S3 to Amazon RDS for Db2. - Amazon RDS for Db2 will create a Db2 storage alias automatically for restoring the database multi-part database backup images using the rdsadmin.restore_database stored procedure.

- Pay attention to the

s3_prefixvariable used in therdsadmin.restore_databasestored procedure. This prefix is the common part of multi-part backup images excluding extensions such as .001, .002, and so on so that matching files are restored to the RDS for Db2 database. - If using online backup image, you must keep copying Db2 archive log files to Amazon S3 and run the stored procedure rdsadmin.rollforward_database to apply Db2 archive log files. Repeat the process until you have applied all log files. You should use a different prefix for each operation.

- After applying all log files, run the stored procedure rdsadmin.complete_rollforward to bring the RDS for Db2 database in a connectible state.

- Consider using the Db2MT tool for automation for using online restore instead of manual steps.

Enhancements to the tool

The source code of this tool is available in the following GitHub repository. Open an issue to submit your enhancements request or submit a pull request with your suggested changes.

Additional Resources

To learn more about Amazon RDS for Db2 and migration strategies, refer to the following resources:

- Amazon RDS for Db2 User Guide

- Data migration strategies to Amazon RDS for Db2

- Near zero-downtime migrations from self-managed Db2 on AIX or Windows to Amazon RDS for Db2 using IBM Q Replication

- Performance optimization of full load and ongoing replication tasks from self-managed Db2 to Amazon RDS for Db2

- Migrating tables from IBM Db2 for z/OS to Amazon RDS for Db2

Conclusion

The Db2 Migration Prerequisites Validation Tool significantly reduces migration failures by identifying and addressing common issues before they impact your migration timeline. By incorporating this tool into your migration workflow, you can achieve the following:

- Reduce migration risk – Identify issues early in the process

- Save time – Avoid failed restore operations and troubleshooting

- Improve success rate – Make sure databases are properly prepared

- Maintain documentation – Keep detailed validation records

Regular use of this tool as part of your Db2 maintenance and migration processes can help facilitate smooth, successful migrations to Amazon RDS for Db2.

Have you tried this approach in your setup? Let us know how it works for you or if you want to see enhancements in the tool.