AWS Database Blog

Inside Booking.com’s ultra-low latency feature platform with Amazon ElastiCache

This is a guest post by Klaus Schaefers, Senior Software Engineer at Booking.com and Basak Eskili, Machine Learning Engineer at Booking.com, in partnership with AWS.

As a global leader in the online travel industry, Booking.com continuously works to improve the travel experience for its users. Latency is a key factor in achieving this—nobody likes waiting for their search results to be returned.

Booking.com generates several million real-time predictions per minute, supporting a variety of algorithms, including ranking and fraud detection. Common to most algorithms is the need for ultra-low end-to-end latencies, often less than 100 milliseconds. Higher latencies not only result in a worse user experience, but also ultimately mean a loss in conversion rate. To meet these demands, Booking.com developed an ultra-low latency feature platform, capable of serving ML features with a p99.9 latency below 25 milliseconds at a scale of 200,000 requests per second.

In this post, we share how Booking.com designed a well-architected Amazon ElastiCache-based feature platform, achieving ultra-low latency and high throughput, to ensure the best possible user experience.

Amazon ElastiCache has long been a reliable technology within Booking.com, proving its value across a variety of use cases. Given its combination of low latency, high throughput, and operational simplicity, Booking.com chose to build their feature store with ElastiCache at its core. The managed nature of ElastiCache means teams can focus on development rather than infrastructure, and its support for complex data types and competitive pricing provides flexibility and cost-effectiveness at scale.

Requirements

To meet the scale and performance expectations at Booking.com, the feature store needed to deliver on several key requirements:

- Ultra-low latency – Latency is a critical aspect because it directly impacts Booking.com’s user experience. We required the platform to respond with a p99.9 client-side latency of less than 25 ms.

- High throughput – Workloads scale during peak hours, when ML systems serve several million requests per minute. The feature store needed to handle approximately 10 million key lookups per second while maintaining ultra-low latency.

- Self-service – The feature store is used by many teams within Booking.com. To keep the operational burden for the core team to a minimum, the internal clients had to be able to manage the feature store by themselves through a central git repository.

- Large data volumes – The size of the datasets can reach up to several TB of feature data. The solution had to be able to scale cost-efficiently to specific use cases, without sacrificing performance.

- Isolation of workloads – The feature store had to provide strong isolation for each use case where needed, both to meet Service Level Objectives (SLOs) and to increase cost transparency.
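To make the latency requirement concrete, the following is a minimal Python sketch of how a p99.9 check over client-side latency samples could be computed. The nearest-rank method, the function names, and the synthetic latency data are assumptions for illustration and do not describe Booking.com's actual monitoring setup.

```python
import random


def percentile_nearest_rank(samples, numerator, denominator):
    """Return the numerator/denominator percentile using the nearest-rank method.

    The percentile is passed as an integer ratio (999/1000 for p99.9) so the
    rank computation stays exact and avoids floating-point rounding.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples)
    # Ceiling division: the smallest rank that covers the requested share.
    rank = -(-len(ordered) * numerator // denominator)
    return ordered[max(rank, 1) - 1]


def meets_latency_slo(latencies_ms, threshold_ms=25.0):
    """Check a 'p99.9 below threshold' target against latency samples."""
    return percentile_nearest_rank(latencies_ms, 999, 1000) < threshold_ms


# Synthetic data (assumption): 9,995 fast requests and 5 slow outliers.
rng = random.Random(42)
latencies = [rng.uniform(2.0, 8.0) for _ in range(9_995)] + [40.0] * 5
p999 = percentile_nearest_rank(latencies, 999, 1000)
print(f"p99.9 = {p999:.2f} ms, SLO met: {meets_latency_slo(latencies)}")
```

A p99.9 target tolerates at most one request in a thousand above the threshold, so in this sketch the five outliers in 10,000 requests do not break the target, while a larger share of slow requests would.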

Solution overview

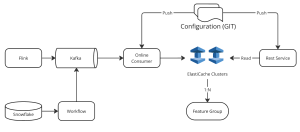

The architecture addresses scalability, latency, and flexibility. The main components of the system are an ingestion component and a serving component, which both interact with ElastiCache clusters. The following diagram depicts the main components.

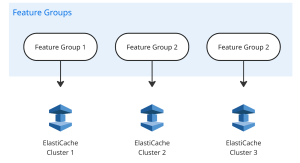

A major design decision was to delegate feature computation to external systems and focus the feature store on speed and scale. In practice, this means that systems such as Snowflake, Apache Flink, or Apache Spark are used to compute the features. Snowflake is mostly used when data recency is not a major concern or when features seldom change. When data recency is key, streaming-based solutions such as Flink or Spark are used. Once a feature is computed, it is published to Apache Kafka, from where it is consumed and ingested into ElastiCache by a dedicated Java service.

Another important characteristic of the solution is that it spins up a cluster per use case to provide isolation. Each cluster is registered under an alias in the system. Each feature group is assigned to an ElastiCache cluster, so users can separate workloads and tailor resources to specific use cases. This setup is managed through a centralized configuration, which maps feature groups directly to their corresponding cluster aliases. The configuration is managed in a central Git repository, and changes are pushed through continuous integration and continuous delivery (CI/CD) tasks into the production systems. The following diagram illustrates this setup.

For feature groups that have higher storage requirements but don’t require ultra-low latency, ElastiCache with Data Tiering is a great option. Here, ElastiCache offloads some of its data to local SSD to provide ample capacity while still maintaining reasonable performance.
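The mapping from feature groups to cluster aliases described above can be sketched as a small lookup table plus a consistency check that a CI task might run. This is a hypothetical illustration: the alias names, feature group names, endpoints, and the `ClusterConfig` type are all assumptions, and the real configuration format is not shown in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterConfig:
    endpoint: str       # hypothetical endpoint, for illustration only
    data_tiering: bool  # True for SSD-backed clusters with Data Tiering


# Hypothetical cluster registry: alias -> cluster settings.
CLUSTERS = {
    "ranking-inmem": ClusterConfig("ranking.example.internal:6379", False),
    "history-tiered": ClusterConfig("history.example.internal:6379", True),
}

# Hypothetical central configuration: feature group -> cluster alias.
FEATURE_GROUP_TO_ALIAS = {
    "ranking_user_features": "ranking-inmem",
    "booking_history_features": "history-tiered",
}


def resolve_cluster(feature_group):
    """Return (alias, cluster config) for a feature group."""
    if feature_group not in FEATURE_GROUP_TO_ALIAS:
        raise KeyError(f"feature group {feature_group!r} has no cluster alias")
    alias = FEATURE_GROUP_TO_ALIAS[feature_group]
    return alias, CLUSTERS[alias]


def unmapped_feature_groups():
    """CI-style check: list feature groups that point to an unknown alias."""
    return sorted(
        group
        for group, alias in FEATURE_GROUP_TO_ALIAS.items()
        if alias not in CLUSTERS
    )


alias, cluster = resolve_cluster("booking_history_features")
print(alias, cluster.data_tiering)
```

Keeping the routing in one table means a latency-sensitive group and a storage-heavy group can live on differently sized clusters without any change to the callers.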

ElastiCache replication helps maintain high availability and provide data resiliency. This approach helps safeguard against node failures by replicating data across multiple instances. Additionally, read replicas handle high query volumes, so the solution can distribute the load and consistently deliver low-latency responses even during periods of heavy traffic.

In the following sections, we discuss the key components of the solution in more detail.

Schema support

In the feature store, data is organized into feature groups. Each feature group contains several features, which are defined by a schema. Records are stored in a feature group and contain the actual typed feature values. One feature must be the so-called record_id, which specifies the key under which the record is stored.

A feature group is specified by the following attributes:

- The name field defines the unique identifier for the feature group.

- The description field provides a human-readable summary of the feature group’s purpose. This data is mirrored in Booking.com’s Central Machine Learning Registry so ML practitioners can reuse existing feature groups.

- The storage_engine field specifies the backend storage, and storage_layout defines the data format.

- The schema section defines the features and is strongly inspired by JSON schema. The properties value lists the features, each with its name, data type, and optional metadata (for example, descriptions and examples).

- The record_id field is marked with is_record_identifier: true, identifying it as the primary key for each record.

- Additional features are described with their data types and purpose.

- The kafka section specifies the source of data ingestion, including the Kafka topic and federation responsible for publishing updates.

The schema-driven design provides the following benefits:

- Data integrity – Data adheres to a predefined structure, preventing errors caused by missing or unexpected fields

- Schema evolution – New features can be added to the schema without requiring existing data to be backfilled

- Developer clarity – The solution provides a clear contract between data producers and consumers, making it straightforward to onboard new teams or extend existing workflows
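As a rough illustration of the attributes and benefits listed above, the following Python sketch models a feature group definition as a dictionary and validates records against it. The field names (name, description, storage_engine, storage_layout, schema, properties, is_record_identifier, kafka) come from the description above; every value, the feature names, and the validation rules are assumptions. In particular, the sketch treats only the record identifier as mandatory so that older records remain valid after a new feature is added.

```python
# Hypothetical feature group definition; all values are placeholders.
FEATURE_GROUP = {
    "name": "user_travel_profile",
    "description": "Aggregated travel behaviour per user",
    "storage_engine": "elasticache",
    "storage_layout": "key_json",
    "schema": {
        "properties": {
            "record_id": {"type": "string", "is_record_identifier": True},
            "bookings_last_90d": {"type": "integer"},
            "avg_review_score": {"type": "number"},
        }
    },
    "kafka": {"topic": "example-topic", "federation": "example-federation"},
}

# JSON-schema-like type names mapped to Python checks (bool is excluded
# from the numeric types because bool is a subclass of int in Python).
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
}


def record_id_field(group):
    """Return the name of the single feature marked as record identifier."""
    props = group["schema"]["properties"]
    ids = [n for n, spec in props.items() if spec.get("is_record_identifier")]
    if len(ids) != 1:
        raise ValueError("exactly one feature must be the record identifier")
    return ids[0]


def validate_record(group, record):
    """Return a list of validation errors; an empty list means valid."""
    props = group["schema"]["properties"]
    errors = []
    if record_id_field(group) not in record:
        errors.append("missing record identifier")
    for name, value in record.items():
        if name not in props:
            errors.append(f"unexpected field: {name}")
        elif not TYPE_CHECKS[props[name]["type"]](value):
            errors.append(f"{name}: expected {props[name]['type']}")
    return errors


print(validate_record(FEATURE_GROUP, {"record_id": "u1", "bookings_last_90d": 3}))
print(validate_record(FEATURE_GROUP, {"record_id": "u1", "color": "red"}))
```

A check like this can run both in the ingestion path and in CI, so that a schema change and the data produced against it are validated by the same rules.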

Storage layout

ElastiCache is, in principle, a key-value store. The Booking.com team built storage layout components as adapters to connect ElastiCache with the schema model. Each storage layout implements different strategies to serialize the records into singular values (byte arrays). Two primary layouts have been selected for the feature store: Key-JSON and Key-Kryo serialization:

- Key-JSON layout – Data is stored as JSON objects, with all features of a record encapsulated in a single key. Each key is constructed by combining the feature group and record ID, making it straightforward to retrieve or update complete records.

- Pros – Supports nested structures, enables partial updates, and integrates well with schema-on-read approaches.

- Cons – Higher latency due to the overhead of JSON serialization and deserialization. JSON payloads are larger compared to serialized formats.

The following represents a sample key and its associated JSON value:

group_1.record_id_1 = {"feature1": 1, "feature2": 2, "feature3": "abc"}

- Key-Kryo serialization layout – Data is stored as serialized object arrays using Kryo, a compact and efficient serialization framework for the JVM. The position of each object in the array corresponds to a particular feature. This layout is optimized for performance-critical use cases requiring minimal latency.

- Pros – Compact data representation significantly reduces memory usage and network transfer times. It offers excellent performance for batch queries.

- Cons – No support for partial updates directly; the entire record must be read, updated, and written back. Debugging is more challenging due to binary storage.

The following represents a sample key and its associated value, which is stored as a Kryo-serialized blob:

group_1.record_id_1 = [1, 2, "abc"]
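The difference between the two layouts can be sketched in Python as follows. Kryo is a JVM library, so this sketch substitutes a compact JSON array for the Kryo blob purely to show the positional idea; it is not the binary format used in production. The function names, the fixed feature order, and the in-memory dictionary standing in for ElastiCache are all assumptions.

```python
import json

# Assumed feature order for the positional layout, taken from the schema.
FEATURE_ORDER = ["feature1", "feature2", "feature3"]


def make_key(feature_group, record_id):
    """Combine feature group and record ID into a single key."""
    return f"{feature_group}.{record_id}"


def encode_key_json(record):
    """Key-JSON layout: feature names travel with every value."""
    return json.dumps(record, separators=(",", ":")).encode("utf-8")


def encode_positional(record):
    """Positional layout (stand-in for Kryo): values only, in schema order."""
    values = [record.get(name) for name in FEATURE_ORDER]
    return json.dumps(values, separators=(",", ":")).encode("utf-8")


def decode_positional(blob):
    """Rebuild the record by pairing values with the schema's feature order."""
    return dict(zip(FEATURE_ORDER, json.loads(blob)))


def update_feature(store, key, name, value):
    """No partial updates in the positional layout: read, modify, write back."""
    record = decode_positional(store[key])
    record[name] = value
    store[key] = encode_positional(record)


store = {}  # in-memory stand-in for an ElastiCache cluster
record = {"feature1": 1, "feature2": 2, "feature3": "abc"}
key = make_key("group_1", "record_id_1")
store[key] = encode_positional(record)

print(len(encode_key_json(record)), len(store[key]))  # JSON object vs. array size
update_feature(store, key, "feature2", 5)
print(decode_positional(store[key]))
```

Because the positional payload omits feature names, readers and writers must agree on the feature order, which is why this layout depends on the schema and is harder to inspect by hand.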

Self-service

The feature store architecture at Booking.com has been intentionally designed to facilitate self-service operations among a diverse set of internal teams, thereby minimizing the operational demands placed on the core development group. The configuration is managed through a shared Git repository, where schema changes can be submitted through merge requests. After a merge request is reviewed and approved by the team, a dedicated CI pipeline pushes the updates into the system. The accompanying diagram provides a schematic representation of this end-to-end workflow, highlighting the interaction between contributors, the review process, and automated deployment mechanisms.

API service

Access to the feature store is provided through a secure REST API, which is implemented as a dedicated service, so users can retrieve features. The API service also ensures authorization and enforces role-based access policies. This service was designed, developed, and deployed on Amazon Elastic Kubernetes Service (Amazon EKS) to provide high availability and scalability.

The API exposes RESTful endpoints for retrieving, streaming, and deleting features.

Ingestion pipeline and offline store

Ingestion is not handled through direct API calls to ElastiCache. The ingestion is Kafka-based to provide scalability, reliability, and flexibility. The process is designed to handle high-throughput requirements while maintaining data integrity.

Each feature group has a corresponding Kafka topic. The Kafka consumers are dynamically initialized using the feature group configuration, which is pushed from a Git repository to each consumer pod, allowing them to map Avro-encoded messages directly to the corresponding feature group columns. After the data is mapped, the consumers write the data to ElastiCache. One of the key reasons for choosing Kafka is its comprehensive logging and message retention capabilities, which greatly enhance the ability to trace and debug data ingestion workflows. This level of transparency helps identify and resolve issues quickly, contributing to the operational resilience of the systems. In addition, Kafka makes it very easy for us to scale the ingestion to our needs. By choosing the right partitioning scheme for our messages, we can easily ingest 50,000 records per second into a single feature group in ElastiCache.

Booking.com has a deep integration between Kafka and Snowflake, which enables them to materialize topics into offline datasets. In the context of the feature store, these materializations are the offline store. The offline store is used by data scientists and engineers to query timestamped features for analytics and model training and run time travel queries, where they can reconstruct the system’s state at a given moment in time.

By using Kafka as the central streaming backbone, data workflows are both transparent and highly adaptable, supporting a wide variety of use cases across the organization.

Booking.com picked ElastiCache mainly due to the following capabilities:

Latency

Our initial tests showed that ElastiCache can deliver single-digit millisecond server-side latencies. This is important when handling millions of real-time predictions per minute, as it gives us enough room to apply data transformations and account for network access. For use cases that require ultra-low latency, in-memory clusters ensure sub-millisecond responses, while SSD-backed clusters are available for workloads that need more storage capacity but can tolerate slightly higher latencies.

Scale

ElastiCache clusters can support up to 500 million requests per second, easily meeting Booking.com’s requirements for managing 200,000 requests per second, even while ingesting at a rate of 50,000 per second. Its high throughput capacity and ability to distribute reads across multiple replicas help maintain consistent performance during peak traffic. Replication across nodes also adds resiliency and high availability.

Manageability

The managed nature of ElastiCache reduces operational overhead. Through Terraform, we can manage many clusters centrally and right-size them based on each use case’s demand profile. The system also allows workload isolation by spinning up separate clusters per use case, each registered under an alias, ensuring teams can scale independently without interference.

Cost

In contrast to fully managed solutions billed per request, ElastiCache is billed based on Amazon Elastic Compute Cloud (Amazon EC2) instance usage. This makes it an ideal and cost-effective solution for high throughput use cases like ours. Combined with its flexibility in data types and competitive pricing, ElastiCache allows us to optimize performance without overpaying for infrastructure.

Business outcomes

Over 22 teams across Booking.com have already integrated the feature store into their real-time ML pipelines, bringing meaningful business value. The platform supports a variety of use cases, from improving fraud detection accuracy and reducing operational costs to enhancing user-facing predictions. Its low-latency API, self-service schema management, and high-throughput ingestion pipelines help teams innovate faster, without worrying about infrastructure complexity or performance bottlenecks.

One of the most impactful migrations was in our ranking systems, where moving to the ElastiCache-backed feature platform resulted in 82% lower infrastructure cost, delivering over $3M in annual savings, all while maintaining ultra-low latency and meeting stricter performance requirements than before.

Conclusion

In online commerce, latency is a feature: if it feels slow, people bounce and business opportunities are lost. With AI applications, the challenge gets tougher, as the complex algorithms and models consume a considerable chunk of the latency budget. That’s why it is important to deliver the feature data as fast as possible to the models.

At Booking.com, we built a custom feature store using Amazon ElastiCache to achieve p99.9 under 25 ms at roughly 200,000 RPS. ElastiCache serves as the performance foundation, and we extended it with additional functionality to ease integration into Booking.com’s system landscape, standardize development efforts, isolate workloads, and optimize cost attribution.

This work was made possible by our amazing team: Fan Song, Nithin Kamath, Offer Sharabi, Oleg Taykalo, Rafael Ribeiro, Sagiv Avraham, Sandra Gergawi, and Tolga Eren, alongside us.