AWS Database Blog

How to optimize Amazon RDS and Amazon Aurora database costs/performance with AWS Compute Optimizer

In this post, we dive deeper into database optimization for your Amazon Relational Database Service (Amazon RDS) instances, exploring how you can use AWS Compute Optimizer recommendations to make cost-aware resource configuration decisions for your MySQL and PostgreSQL databases. In our previous post, AWS tools to optimize your Amazon RDS costs, we discussed various optimization tools including AWS Trusted Advisor, Instance Scheduler on AWS, AWS Backup, Cloud Intelligence Dashboards in Amazon QuickSight, and Amazon CloudWatch metrics. Building on that foundation, we now focus specifically on evaluating your current instance performance against other available options, including the latest generation instances and AWS Graviton processors, for your Amazon RDS for MySQL, Amazon RDS for PostgreSQL, Amazon Aurora MySQL-Compatible Edition, and Amazon Aurora PostgreSQL-Compatible Edition databases. These recommendations help you right size database (DB) instances, upgrade to the latest generation hardware, and evaluate AWS Graviton instance classes for improved price-performance.

The challenge of database resource optimization

Identifying the optimal DB instance configuration is one of the most challenging aspects of managing cloud resources. Database administrators face several key challenges: ensuring sufficient resources for performance, controlling costs by avoiding excess capacity, exploring newer technologies like AWS Graviton, and adapting to changing workload demands.

Many organizations face these challenges in practice. Database teams often spend hours manually analyzing CloudWatch metrics to determine whether their Amazon RDS instances are properly sized. Without clear performance benchmarks, they frequently over-provision instances to protect application stability, resulting in wasted spend.

Compute Optimizer for database right sizing

AWS Compute Optimizer now delivers instance recommendations specifically for your RDS for MySQL, RDS for PostgreSQL, Aurora MySQL, and Aurora PostgreSQL databases, helping you improve both performance and cost-efficiency. These are currently the only RDS and Aurora database engines that Compute Optimizer supports. Compute Optimizer takes a performance-first approach: because database performance is highly dependent on available memory, among other factors, the service optimizes your DB instances without reducing their memory capacity.

How Compute Optimizer evaluates database resources

Compute Optimizer analyzes your databases using multiple data sources, including CloudWatch metrics capturing CPU utilization, network throughput, and database connections. When enabled, CloudWatch Database Insights provides deeper analysis, including DBLoad and swap utilization. Database Insights also examines historical usage patterns to identify trends and cyclical workload patterns.

For memory analysis specifically, when CloudWatch Database Insights is enabled, Compute Optimizer examines metrics such as os.swap.in and os.swap.out, and for Aurora databases, os.memory.outOfMemoryKillCount. While it does not recommend memory reductions, it does provide “Memory under-provisioned” recommendations when your DB instance’s memory configuration doesn’t meet the workload’s performance requirements. For RDS open-source databases, this is identified through swap metrics analysis, while Aurora instances are evaluated using out-of-memory kill counts and, for Aurora MySQL specifically, additional memory health state metrics. Together, these metrics show memory pressure and help identify when current memory resources are insufficient for your workload.

Finding right sizing opportunities

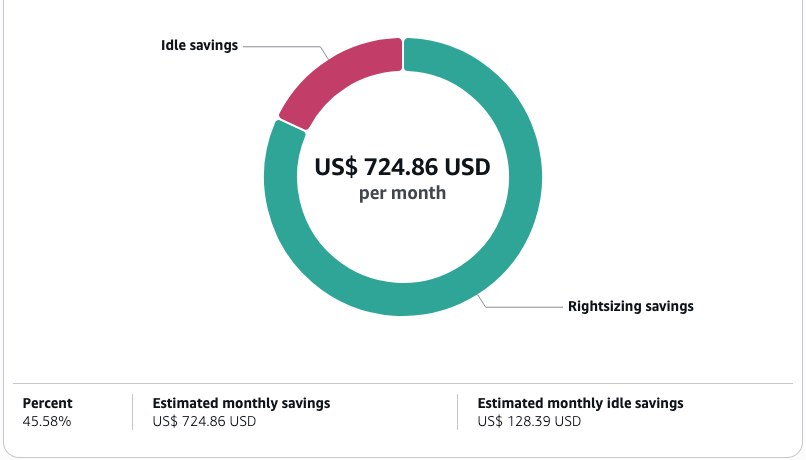

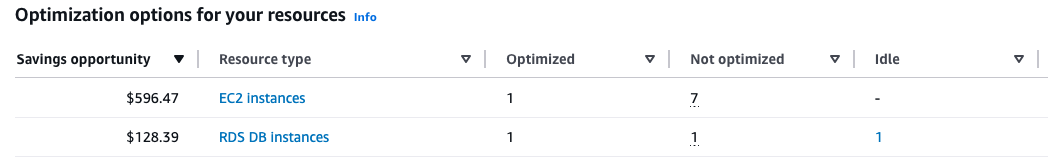

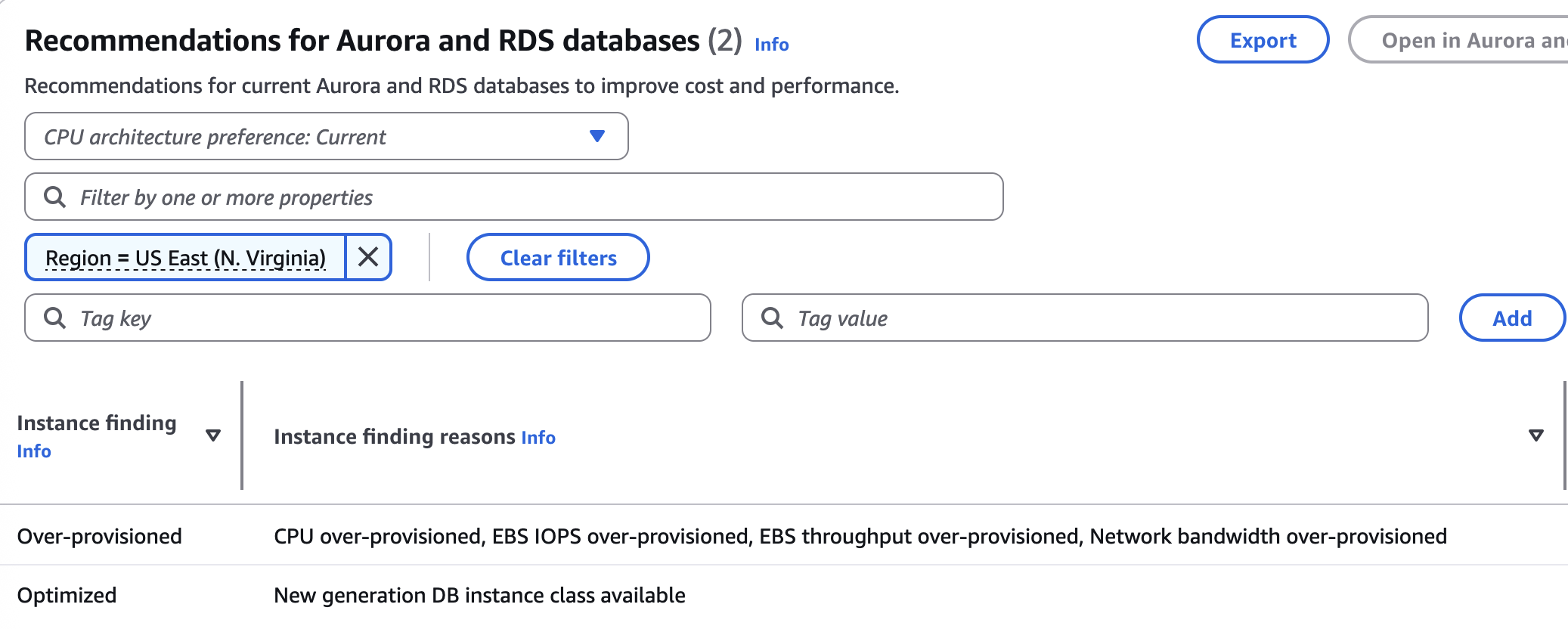

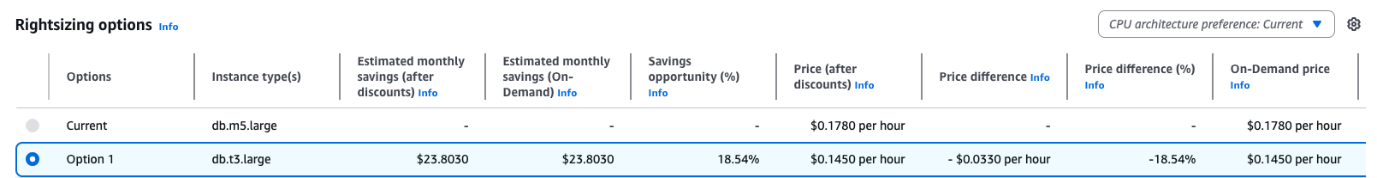

The recommendations dashboard presents a comprehensive view of your Amazon RDS open-source or Aurora environment with clear classifications, as shown in the accompanying screenshots. Optimized instances are appropriately sized for their workloads. Over-provisioned instances have excess capacity that could be reduced (though never by reducing memory). Under-provisioned instances lack sufficient resources for optimal performance. Each database includes specific finding reasons like “CPU over-provisioned” or “Network bandwidth under-provisioned,” taking the guesswork out of optimization decisions. For each instance, you can see recommended alternatives with estimated monthly savings and performance risk assessments, helping you make informed decisions about right sizing your databases.

Image 1 shows the Compute Optimizer dashboard with a pie chart highlighting potential savings opportunities. Image 2 displays a resource summary table categorizing your database resources as “Optimized,” “Not optimized,” or “Idle.” You can click on any number in this table to access detailed information for resources in that category. Image 3 shows the instance findings with specific optimization reasons, and Image 4 presents the recommended options for improving your configuration.
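The same findings can also be pulled programmatically. The following is a minimal sketch, assuming a boto3 `compute-optimizer` client is passed in and that the response uses the field names `rdsDBRecommendations`, `instanceFinding`, `instanceFindingReasonCodes`, and `resourceArn`, as we read the GetRDSDatabaseRecommendations API; verify these against the current API reference before relying on them. The helper function names are our own.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List


def fetch_rds_recommendations(client: Any) -> List[Dict[str, Any]]:
    """Collect every page of RDS/Aurora recommendations.

    `client` is assumed to be boto3.client("compute-optimizer").
    """
    results: List[Dict[str, Any]] = []
    kwargs: Dict[str, Any] = {}
    while True:
        page = client.get_rds_database_recommendations(**kwargs)
        results.extend(page.get("rdsDBRecommendations", []))
        token = page.get("nextToken")
        if not token:
            return results
        kwargs["nextToken"] = token  # request the next page


def summarize_findings(recs: Iterable[Dict[str, Any]]) -> Dict[str, int]:
    """Count recommendations per instance finding (for example, Optimized)."""
    return dict(Counter(r.get("instanceFinding", "Unknown") for r in recs))


def reasons_by_resource(recs: Iterable[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Map each resource ARN to its list of finding reason codes."""
    return {r["resourceArn"]: r.get("instanceFindingReasonCodes", []) for r in recs}
```

With boto3 installed and credentials configured, `summarize_findings(fetch_rds_recommendations(boto3.client("compute-optimizer")))` would give a per-finding count comparable to the console's resource summary.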

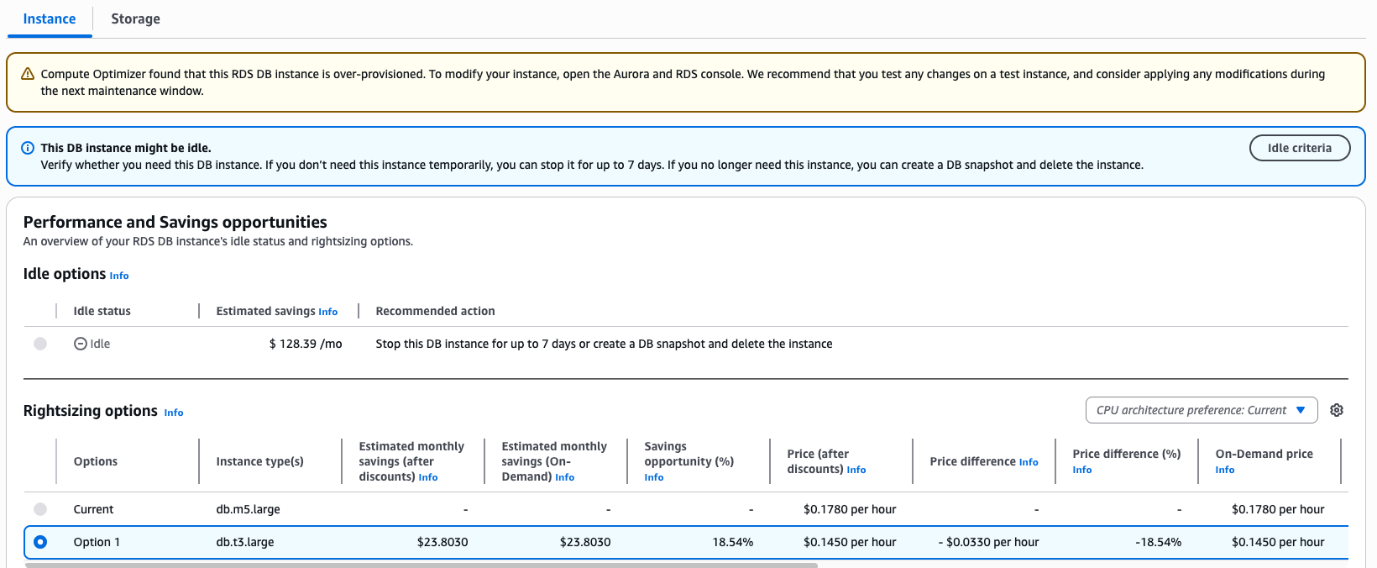

How Compute Optimizer identifies idle databases

For RDS open-source databases, Compute Optimizer evaluates multiple operational metrics to determine whether a resource is truly idle. A database is flagged as idle when it isn’t being used as a read replica and has no database connections over the configured lookback period. The lookback period is 14 days by default and can be extended to 32 days to capture monthly cycles or 93 days for longer trend analysis. The 14-day and 32-day lookback periods are included at no additional cost.
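Before acting on an idle finding for an RDS instance, you might cross-check the connection criterion yourself. The sketch below is not Compute Optimizer's internal logic; it only queries the CloudWatch `DatabaseConnections` metric for the lookback window and applies the two criteria stated above. It assumes a boto3 `cloudwatch` client is passed in, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Optional


def peak_connections(
    cloudwatch: Any,
    db_instance_id: str,
    lookback_days: int = 14,
    now: Optional[datetime] = None,
) -> float:
    """Return the highest DatabaseConnections value seen in the lookback window.

    `cloudwatch` is assumed to be boto3.client("cloudwatch").
    Returns 0.0 when CloudWatch has no datapoints for the window.
    """
    end = now or datetime.now(timezone.utc)
    resp = cloudwatch.get_metric_statistics(
        Namespace="AWS/RDS",
        MetricName="DatabaseConnections",
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": db_instance_id}],
        StartTime=end - timedelta(days=lookback_days),
        EndTime=end,
        Period=86400,  # one datapoint per day, so 93 days stays a small response
        Statistics=["Maximum"],
    )
    return max((p["Maximum"] for p in resp.get("Datapoints", [])), default=0.0)


def looks_idle(is_read_replica: bool, peak: float) -> bool:
    """Apply the two criteria from the text: not a replica, no connections."""
    return (not is_read_replica) and peak == 0
```

Whether an instance is a read replica can be read from the `describe_db_instances` output; treat a missing-datapoints result with care, because it can also mean the metric was never published.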

Similarly, Aurora databases are considered idle when they aren’t part of a secondary cluster in an Aurora global database, show no database connections, and maintain low CPU usage with minimal read/write activity. The following screenshot shows an example of an idle database recommendation, including the criteria and suggested actions.

Taking action on idle database recommendations

Compute Optimizer identifies truly idle resources and provides specific recommendations for them. When it detects an idle instance, the tool displays the notification: “Verify whether you need this DB instance. If you don’t need this instance temporarily, you can stop it for up to 7 days. If you no longer need this instance, you can create a DB snapshot and delete the instance.” Following this guidance helps you decide between temporarily stopping a database and removing it entirely.

To stop an idle RDS instance, complete the following steps:

- On the Amazon RDS console, in the navigation pane, choose Databases.

- Select the idle DB instance you want to stop.

- Choose Actions, and then choose Stop.

- In the confirmation window, choose Yes, Stop Now.
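The console steps above can also be scripted. The following is a minimal sketch, assuming a boto3 `rds` client is passed in; `stop_if_available` is a hypothetical helper name, and it only issues the stop when the instance reports the `available` status.

```python
from typing import Any


def stop_if_available(rds: Any, db_instance_id: str) -> bool:
    """Stop the DB instance if it is currently available.

    `rds` is assumed to be boto3.client("rds").
    Returns True when a stop request was sent, False otherwise.
    """
    desc = rds.describe_db_instances(DBInstanceIdentifier=db_instance_id)
    status = desc["DBInstances"][0]["DBInstanceStatus"]
    if status != "available":
        # Already stopped, stopping, or mid-modification: leave it alone.
        return False
    rds.stop_db_instance(DBInstanceIdentifier=db_instance_id)
    return True
```

As the notification quoted earlier says, a stopped instance stays stopped for up to 7 days, so a longer pause needs a schedule or a snapshot-and-delete approach.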

For idle Aurora databases, Compute Optimizer recommends converting to Amazon Aurora Serverless v2 instance classes, which can scale down to 0 Aurora capacity units (ACUs) during periods of inactivity. At 0 ACUs, compute charges pause while storage is still billed. This capability enables true scale-to-zero functionality, with the database automatically resuming within seconds when connections are requested.
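As an illustration of the scale-to-zero setting, the sketch below builds a Serverless v2 scaling configuration with a minimum of 0 ACUs and applies it with `modify_db_cluster`. It assumes a boto3 `rds` client, a cluster on an engine version that supports automatic pause, and instances already using the `db.serverless` class. The validation ranges reflect our understanding of the documented limits and should be checked; the function names are hypothetical.

```python
from typing import Any, Dict


def serverless_v2_scaling(max_acu: float, auto_pause_seconds: int = 300) -> Dict[str, Any]:
    """Build a scaling configuration that lets the cluster pause at 0 ACUs."""
    if max_acu < 1:
        raise ValueError("max_acu must be at least 1")
    # Assumed valid range for the idle timeout: 300 to 86,400 seconds.
    if not 300 <= auto_pause_seconds <= 86400:
        raise ValueError("auto_pause_seconds must be between 300 and 86400")
    return {
        "MinCapacity": 0,  # 0 ACUs enables automatic pause
        "MaxCapacity": max_acu,
        "SecondsUntilAutoPause": auto_pause_seconds,
    }


def enable_auto_pause(rds: Any, cluster_id: str, max_acu: float,
                      auto_pause_seconds: int = 300) -> None:
    """Apply the configuration; `rds` is assumed to be boto3.client("rds")."""
    rds.modify_db_cluster(
        DBClusterIdentifier=cluster_id,
        ServerlessV2ScalingConfiguration=serverless_v2_scaling(max_acu, auto_pause_seconds),
        ApplyImmediately=True,
    )
```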

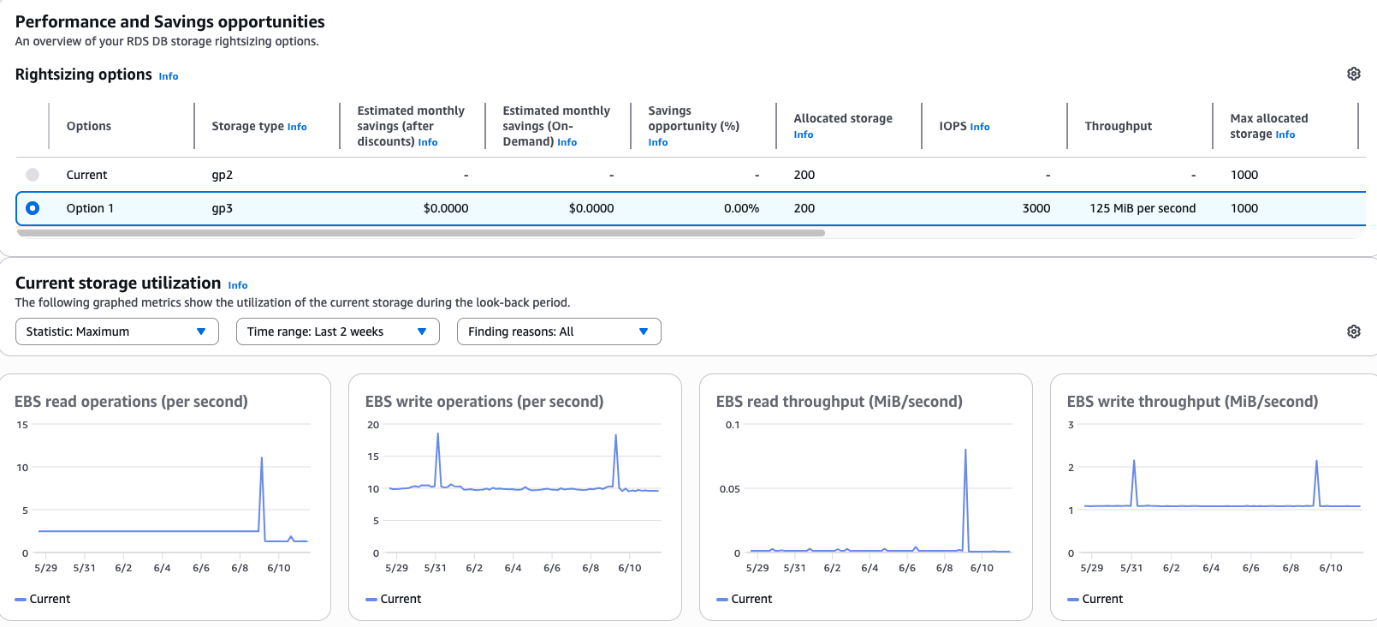

Storage optimization recommendations

Compute Optimizer goes beyond instance sizing to provide detailed storage recommendations, helping you optimize both performance and cost.

Storage type analysis and recommendations

The service analyzes your database storage patterns across multiple dimensions, including I/O utilization for reads and writes per second, throughput measured in MBps transferred, storage growth tracking the rate of storage utilization change, and for gp2 volumes, burst credit utilization patterns.

Based on these metrics, Compute Optimizer provides recommendations for storage type changes such as migrating from gp2 to gp3, right sizing provisioned IOPS for io1/io2 volumes, and optimizing throughput settings for gp3 storage.

The following screenshot shows an example of storage recommendation details, including metrics and projected savings.

A common recommendation is migrating from gp2 to gp3 storage. Compute Optimizer helps you understand when this makes sense by analyzing baseline performance needs, because gp3 provides higher baseline performance of 3,000 IOPS and 125 MiBps. The service also examines cost structure considerations, because gp3 allows independent scaling of IOPS and throughput, potentially providing better economics. It also identifies burst patterns to determine if your workload relies on gp2’s burst capability or would benefit from gp3’s consistent performance.
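To make the baseline comparison concrete, here is a small worked calculation. It assumes the commonly documented gp2 rule of 3 IOPS per GiB with a floor of 100 IOPS, and uses the 3,000 IOPS gp3 baseline cited above. Upper limits, burst behavior, and the higher gp3 baselines that can apply to larger volumes are deliberately left out, so treat it as a rough guide only.

```python
GP3_BASELINE_IOPS = 3000  # gp3 baseline cited in the text


def gp2_baseline_iops(allocated_gib: int) -> int:
    """gp2 baseline: 3 IOPS per GiB, never below 100 (upper caps omitted)."""
    return max(100, 3 * allocated_gib)


def gp3_baseline_gain(allocated_gib: int) -> int:
    """Extra baseline IOPS gp3 would provide over gp2 at this size.

    A value of zero or less means gp2's size-based baseline already
    matches or exceeds the 3,000 IOPS gp3 baseline.
    """
    return GP3_BASELINE_IOPS - gp2_baseline_iops(allocated_gib)


# A 200 GiB gp2 volume has a 600 IOPS baseline, so gp3 adds 2,400 IOPS.
print(gp2_baseline_iops(200), gp3_baseline_gain(200))
```

Under these assumptions, volumes smaller than 1,000 GiB gain baseline IOPS from gp3 and depend on burst credits on gp2, which is why the burst-pattern analysis matters most for small volumes.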

For Aurora databases, Compute Optimizer provides recommendations for switching between Aurora Standard and Aurora I/O-Optimized storage configurations. For I/O-intensive workloads, Aurora I/O-Optimized can provide cost savings and better price predictability because you pay hourly for DB instances and storage usage rather than per I/O operation. When Compute Optimizer identifies potential savings opportunities, it marks clusters as “Not Optimized” and provides a breakdown of potential cost differences, helping you make informed decisions about your storage configuration.

To modify your database storage, complete the following steps:

- On the Amazon RDS console, in the navigation pane, choose Databases.

- Choose the DB instance that you want to modify, then choose Modify.

- Under Storage, adjust the storage type and allocated storage based on the recommendations.

- Choose Continue to see a summary of changes.

- Choose Apply immediately or Apply during the next scheduled maintenance window, then choose Modify DB instance.
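The same modification can be sketched with the RDS API. This example assumes a boto3 `rds` client and uses the `modify_db_instance` parameters `StorageType`, `AllocatedStorage`, `Iops`, `StorageThroughput`, and `ApplyImmediately`; the helper names are our own, and the values you pass should come from the recommendation rather than from this sketch.

```python
from typing import Any, Dict, Optional


def build_storage_change(
    db_instance_id: str,
    storage_type: str,
    allocated_gib: Optional[int] = None,
    iops: Optional[int] = None,
    throughput_mibps: Optional[int] = None,
    apply_immediately: bool = False,
) -> Dict[str, Any]:
    """Build modify_db_instance arguments, including only the values provided."""
    params: Dict[str, Any] = {
        "DBInstanceIdentifier": db_instance_id,
        "StorageType": storage_type,
        # False defers the change to the next maintenance window.
        "ApplyImmediately": apply_immediately,
    }
    if allocated_gib is not None:
        params["AllocatedStorage"] = allocated_gib
    if iops is not None:
        params["Iops"] = iops
    if throughput_mibps is not None:
        params["StorageThroughput"] = throughput_mibps
    return params


def apply_storage_change(rds: Any, params: Dict[str, Any]) -> None:
    """Send the change; `rds` is assumed to be boto3.client("rds")."""
    rds.modify_db_instance(**params)
```

Separating the argument builder from the API call makes it easy to review or log the intended change before it is sent.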

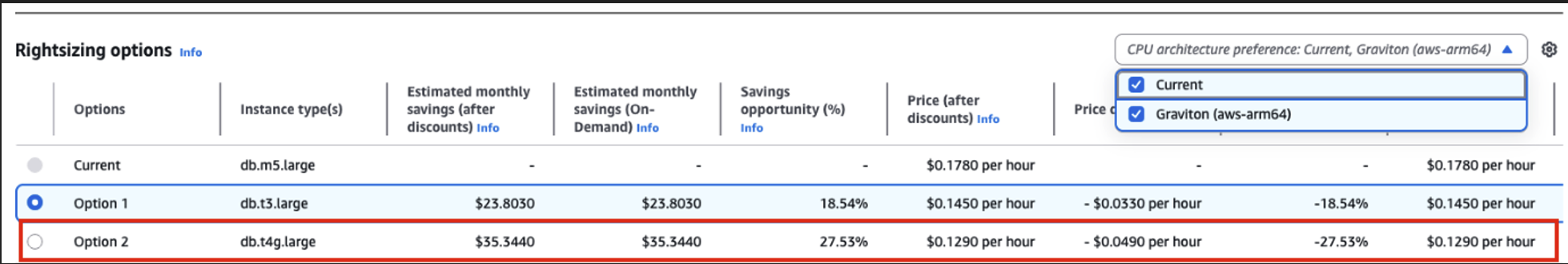

Graviton migration for managed databases

A valuable feature of Compute Optimizer is its ability to identify opportunities to migrate to AWS Graviton based instances, which can offer significant performance and cost benefits.

AWS Graviton performance and cost benefits

Migrating your RDS open-source and Aurora databases to Graviton is relatively simple, requiring only a configuration change that can typically be completed during a standard maintenance window. Since AWS manages the underlying infrastructure complexity as part of the service, your applications continue to connect using the same endpoints and drivers, making the transition seamless with minimal implementation effort.
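The configuration change amounts to modifying the DB instance class. The sketch below assumes a boto3 `rds` client and defers the change to the maintenance window by default. The `is_graviton_class` check is a naming heuristic of ours (a `g` after the generation number, as in `db.r6g` or `db.t4g`), not an official API, and the target class should come from the Compute Optimizer recommendation.

```python
import re
from typing import Any

# Heuristic: family letters, generation digits, then a suffix containing "g".
_GRAVITON_PATTERN = re.compile(r"^db\.[a-z]+\d+[a-z]*g[a-z]*\.[a-z0-9]+$")


def is_graviton_class(instance_class: str) -> bool:
    """Guess from the class name whether it is Graviton based."""
    return bool(_GRAVITON_PATTERN.match(instance_class))


def switch_to_graviton(rds: Any, db_instance_id: str, target_class: str,
                       apply_immediately: bool = False) -> None:
    """Change the instance class; `rds` is assumed to be boto3.client("rds")."""
    if not is_graviton_class(target_class):
        raise ValueError(f"{target_class} does not look like a Graviton class")
    rds.modify_db_instance(
        DBInstanceIdentifier=db_instance_id,
        DBInstanceClass=target_class,
        # False applies the change in the next maintenance window.
        ApplyImmediately=apply_immediately,
    )
```

Check that your engine version supports the target class before applying the change, and test it in a non-production environment first.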

The following screenshot shows an example AWS Graviton recommendation with a performance comparison and projected savings.

AWS Graviton recommendation analysis

When evaluating a potential AWS Graviton migration, Compute Optimizer provides a performance comparison with side-by-side metrics comparing your current instance with Graviton alternatives. You will also see a cost impact analysis with projected savings after migration, and a risk assessment evaluation of potential performance impact.

Recommendations for latest generation instances

Technology advances quickly, and newer instance generations often provide better price-performance ratios than their predecessors. Compute Optimizer identifies opportunities to upgrade your RDS open-source and Aurora instances to newer instance families, offering improved hardware specifications, enhanced networking capabilities, and access to newer features and optimizations.

The detailed analysis page shows comprehensive utilization metrics with comparison graphs that help you understand the potential impact before implementation.

Compute Optimizer’s recommendations account for your existing Reserved Instance (RI) investments when savings estimation mode is turned on, ensuring suggested changes don’t harm your cost efficiency by abandoning active reservations.

For effective planning:

- Use recommendations with savings estimation mode on to respect existing RI commitments

- Turn savings estimation off to identify ideal instance families to target when your RIs expire

This balanced approach helps you develop a modernization strategy that maximizes both current investments and future technological benefits, ensuring a cost-effective evolution of your database infrastructure.

Visualization tools for your organization

AWS Cost Optimization Hub provides a unified view of database cost optimization opportunities across your organization. It supports reservation recommendations for Amazon RDS, Aurora, Amazon ElastiCache, Amazon DynamoDB, and Amazon MemoryDB, enabling you to consolidate and prioritize database-related cost savings while incorporating your specific AWS commercial terms. Its integration with Compute Optimizer ensures consistent savings estimates, allowing holistic decisions about both performance and commitment-based savings.

For cross-account visualization, Cloud Intelligence Dashboards, particularly the Cost Optimization Recommended Actions (CORA) dashboard, extend Compute Optimizer capabilities by providing multi-account and multi-Region visibility without requiring account switching. CORA aggregates recommendations across your AWS organization, presenting prioritized savings opportunities through visual heatmaps and allowing custom filtering by account, Region, or database engine.

Cloud Financial Management

Identifying optimization opportunities is only valuable if you can implement them effectively. The AWS Cloud Financial Management (CFM) Technical Implementation Playbooks (TIPs) provide systematic approaches to optimize your database resources.

The CFM TIPs Databases playbook covers essential optimization strategies, including reducing idle resources with step-by-step processes for safely stopping or deleting unused databases, implementing automated start/stop schedules for non-production databases, right-sizing guidance with best practices for implementing Compute Optimizer recommendations, migration to AWS Graviton with detailed frameworks for transitioning to ARM-based instances, and storage optimizations, including gp2 to gp3 migrations and provisioned IOPS adjustments.

The playbooks recommend a structured approach to optimization:

- Reduce waste through idle resource removal and instance scheduling.

- Right-size the remaining resources based on Compute Optimizer recommendations.

- Evaluate AWS Graviton migrations for compatible workloads.

- Optimize storage configurations for performance and cost.

- Consider Reserved Instances or Savings Plans for predictable workloads.

Taking action on recommendations

With insights from Compute Optimizer, visualization through Cost Optimization Hub and CORA dashboards, and operational guidance from CFM TIPs, you can implement changes with confidence. Prioritize opportunities by focusing on instances with the highest potential savings and lowest risk, test recommendations in non-production environments first, closely monitor performance metrics after implementing changes, and periodically review new recommendations as workload patterns evolve.
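One way to turn the prioritization advice into a repeatable step is a simple ranking over exported recommendations. The sketch below is illustrative only: the `Opportunity` record, its fields, the numeric risk scale, and the `max_risk` cutoff are hypothetical, and you would map them from whatever export you use.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Opportunity:
    resource: str           # hypothetical identifier for the database
    monthly_savings: float  # estimated monthly savings
    risk: int               # hypothetical scale, 0 = lowest performance risk


def prioritize(items: Iterable[Opportunity], max_risk: int = 1) -> List[Opportunity]:
    """Keep low-risk opportunities, ordered by savings (largest first).

    Ties on savings are broken in favor of the lower risk value.
    """
    eligible = [o for o in items if o.risk <= max_risk]
    return sorted(eligible, key=lambda o: (-o.monthly_savings, o.risk))


candidates = [
    Opportunity("a", 120.0, 0),
    Opportunity("b", 300.0, 3),
    Opportunity("c", 120.0, 1),
    Opportunity("d", 50.0, 0),
]
print([o.resource for o in prioritize(candidates)])  # ['a', 'c', 'd']
```

Filtering on risk before sorting keeps high-savings but high-risk changes, such as `b` here, out of the first wave so they can be tested in non-production environments first.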

Conclusion

Database resource optimization is an ongoing process, not a one-time event. By using the tools and methodologies provided by AWS, you can create a culture of continuous improvement, making sure your database resources are optimally configured for performance and cost-efficiency. Take the first step by implementing one optimization recommendation from each category (instance right-sizing, storage configuration, and reservation opportunities) to begin realizing cost savings while maintaining database performance. Visit the AWS Management Console to access these optimization tools and start refining your database cost management strategy.