AWS Database Blog

Better together: Amazon RDS for SQL Server and Amazon SageMaker Lakehouse, a generative AI data integration use case

Generative AI solutions are transforming how businesses operate worldwide, captivating users and customers across industries with their innovative capabilities. It has now become paramount for businesses to integrate generative AI capabilities into their customer-facing services and applications. The challenge they often face is the need to use massive amounts of relational data hosted on SQL Server databases to contextualize these new generative AI solutions.

In this post, we demonstrate how you can address this challenge by combining Amazon Relational Database Service (Amazon RDS) for SQL Server and Amazon SageMaker Lakehouse. These managed services work seamlessly together, enabling you to build integrated solutions that enhance generic foundation models (FMs) through Retrieval Augmented Generation (RAG). By connecting your existing SQL Server data with powerful AI capabilities, you can create more accurate, contextually relevant generative AI features for your services and applications.

Solution overview

Amazon RDS for SQL Server simplifies the process of setting up, operating, and scaling SQL Server deployments in the AWS Cloud. You can deploy multiple editions of SQL Server, including Express, Web, Standard, and Enterprise, in minutes with cost-efficient and resizable compute capacity. Amazon RDS frees you from time-consuming database administration tasks, so you can focus on application development.

SageMaker Lakehouse unifies your data across Amazon Simple Storage Service (Amazon S3) data lakes, Amazon Redshift data warehouses, and other relational data sources (like Amazon RDS for SQL Server), helping you build powerful analytics, machine learning (ML), and generative AI applications on a single copy of data. SageMaker Lakehouse provides integrated access controls and open source Apache Iceberg for data interoperability and collaboration. With SageMaker Lakehouse, you can build an open lakehouse on your existing data investments without changing your data architecture.

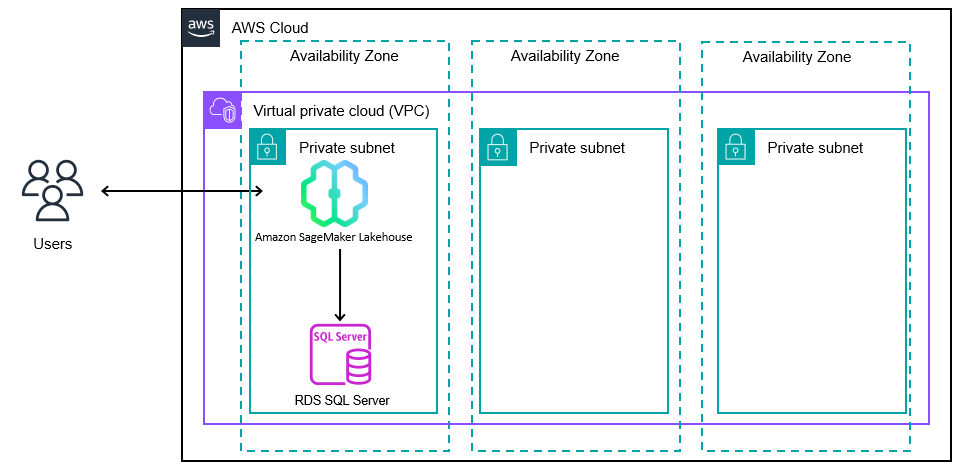

To support the integration between SageMaker Lakehouse and Amazon RDS, you can create a new SQL Server data source from Amazon SageMaker Unified Studio, which in turn is persisted as a federated data source in the lakehouse. The following diagram illustrates the key services involved in the solution.

Prerequisites

We assume you have familiarity with navigating the AWS Management Console. For this post, you also need the following resources and services enabled in your AWS account:

- An RDS for SQL Server instance. We suggest that you deploy a SQL Server 2022 instance after creating the SageMaker domain in the following section, in the same virtual private cloud (VPC) where the SageMaker domain is hosted (SageMakerUnifiedStudio-VPC). Refer to Create and Connect to a Microsoft SQL Server Database with Amazon RDS for detailed instructions.

- A database backup to restore to the RDS for SQL Server instance. For this post, we use the following sample database backup. This is a SQL Server 2022 backup.

- Access to the Stability AI Stable Image Ultra 1.0 FM on Amazon Bedrock.

- Access to the Amazon Nova Pro FM on Amazon Bedrock.

- Access to the SageMaker Unified Studio domains with the appropriate SSO credentials, if needed. AWS Identity and Access Management (IAM) user credentials are supported by default. For more details, see Managing users in Amazon SageMaker Unified Studio.

Refer to Importing and exporting SQL Server databases using native backup and restore for instructions to perform a database restore into an RDS for SQL Server instance.
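As a rough illustration of the restore step, the following Python sketch renders the T-SQL calls that Amazon RDS exposes for native restore (`msdb.dbo.rds_restore_database`) and for checking task progress (`msdb.dbo.rds_task_status`). The helper name, bucket, and file name are hypothetical placeholders, and the sketch assumes the instance already has the native backup and restore option enabled, as described in the referenced documentation.

```python
def build_restore_statements(db_name: str, s3_arn: str) -> dict:
    """Render the T-SQL for an RDS native restore and its status check.

    db_name and s3_arn are supplied by the caller; nothing here is executed
    against a database.
    """
    # Double any single quotes so the values stay valid T-SQL string literals.
    safe_db = db_name.replace("'", "''")
    safe_arn = s3_arn.replace("'", "''")
    restore = (
        "EXEC msdb.dbo.rds_restore_database "
        f"@restore_db_name = '{safe_db}', "
        f"@s3_arn_to_restore_from = '{safe_arn}';"
    )
    status = f"EXEC msdb.dbo.rds_task_status @db_name = '{safe_db}';"
    return {"restore": restore, "status": status}


# Hypothetical bucket and backup file name, for illustration only.
statements = build_restore_statements("wwi", "arn:aws:s3:::example-bucket/wwi.bak")
print(statements["restore"])
print(statements["status"])
```

You would run the rendered statements from a SQL client connected to the RDS for SQL Server instance, then rerun the status statement until the task reports success.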

Create a SageMaker Unified Studio domain

Before starting with the onboarding process, verify that the chosen VPC to onboard the SageMaker domain meets the following requirements:

- The VPC spans across three or more Availability Zones

- The VPC contains at least three private subnets
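If you prefer to check these two requirements programmatically, the following Python sketch shows one possible approach. It assumes subnet records shaped like the entries returned by the Amazon EC2 `describe_subnets` API. Treating a subnet as private when `MapPublicIpOnLaunch` is false is a simplifying assumption; a stricter check would inspect the route tables for an internet gateway route. The function names are hypothetical.

```python
def check_vpc_subnets(subnets, min_azs=3, min_private=3):
    """Summarize whether a list of subnet records meets the two requirements."""
    # Heuristic (assumption): subnets that do not auto-assign public IPs are private.
    private = [s for s in subnets if not s.get("MapPublicIpOnLaunch", False)]
    zones = {s["AvailabilityZone"] for s in subnets}
    return {
        "availability_zones": sorted(zones),
        "private_subnet_ids": [s["SubnetId"] for s in private],
        "meets_requirements": len(zones) >= min_azs and len(private) >= min_private,
    }


def fetch_subnets(vpc_id):
    """Fetch subnet records for a VPC (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the check above works without boto3

    ec2 = boto3.client("ec2")
    response = ec2.describe_subnets(Filters=[{"Name": "vpc-id", "Values": [vpc_id]}])
    return response["Subnets"]


# Example with in-memory records instead of a live API call.
sample = [
    {"SubnetId": "subnet-a", "AvailabilityZone": "us-east-1a", "MapPublicIpOnLaunch": False},
    {"SubnetId": "subnet-b", "AvailabilityZone": "us-east-1b", "MapPublicIpOnLaunch": False},
    {"SubnetId": "subnet-c", "AvailabilityZone": "us-east-1c", "MapPublicIpOnLaunch": False},
]
print(check_vpc_subnets(sample)["meets_requirements"])
```

Against a real account, you would pass `fetch_subnets("<your-vpc-id>")` to `check_vpc_subnets` instead of the sample list.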

When the preceding requirements are met, proceed with the following steps:

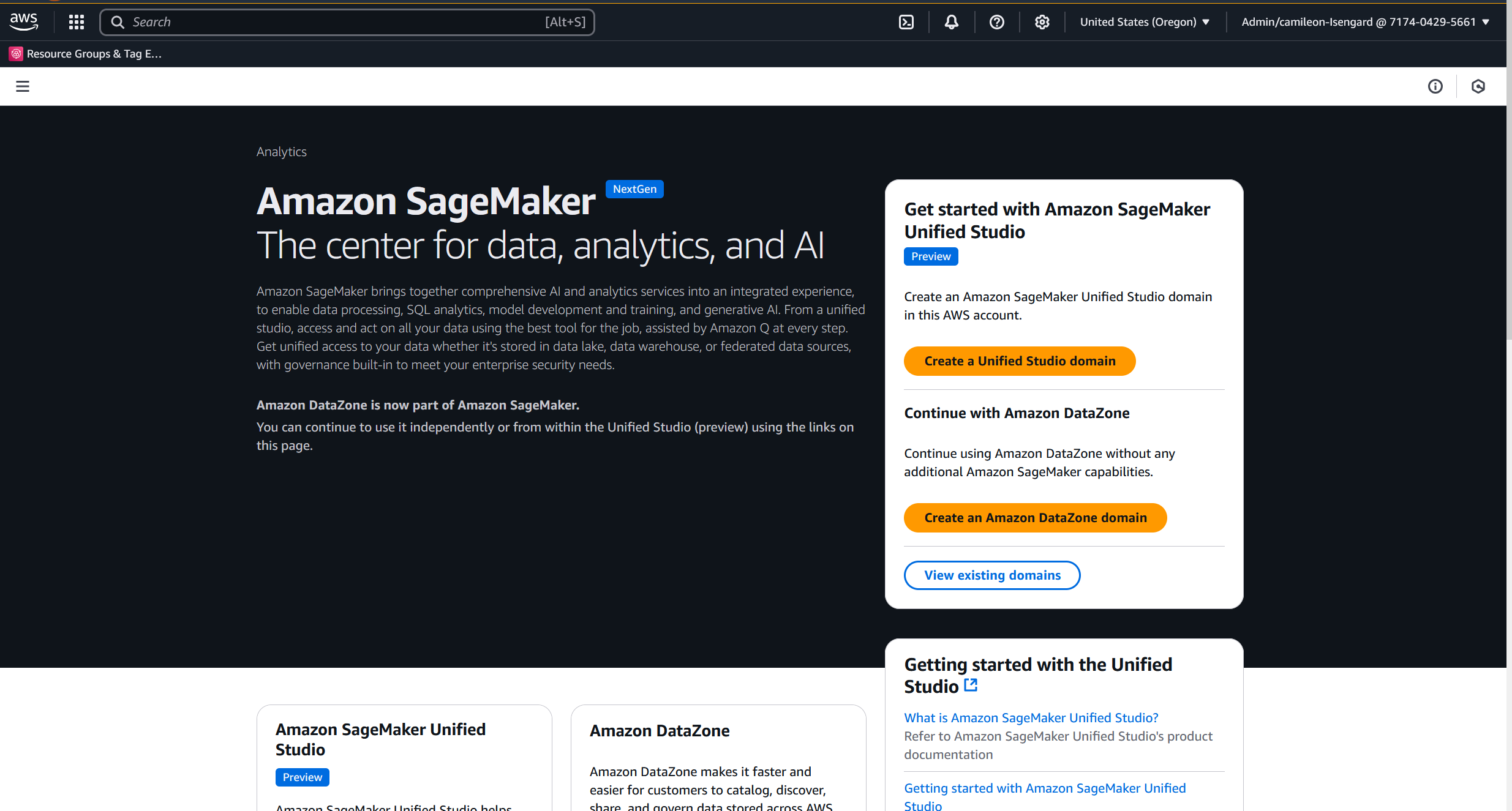

- On the SageMaker console, choose Create a Unified Studio domain.

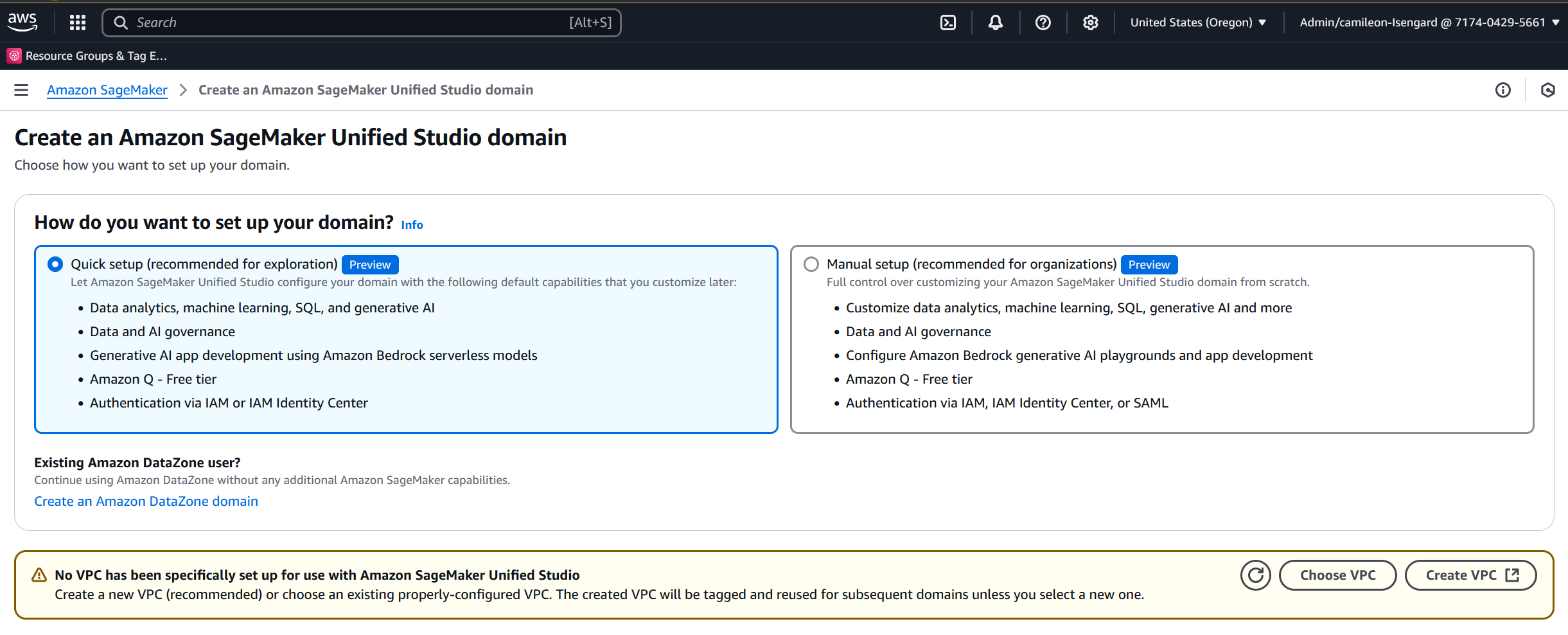

- Select Quick setup (recommended for exploration), then choose an appropriate option for VPC. For this post, we choose Create VPC and create a new VPC. When you choose Create VPC, you are redirected to the AWS CloudFormation console to create a new VPC named SageMakerUnifiedStudio-VPC. Deploying the stack takes a few minutes and incurs additional cost.

Proceed to the following steps after the new VPC named SageMakerUnifiedStudio-VPC is created successfully.

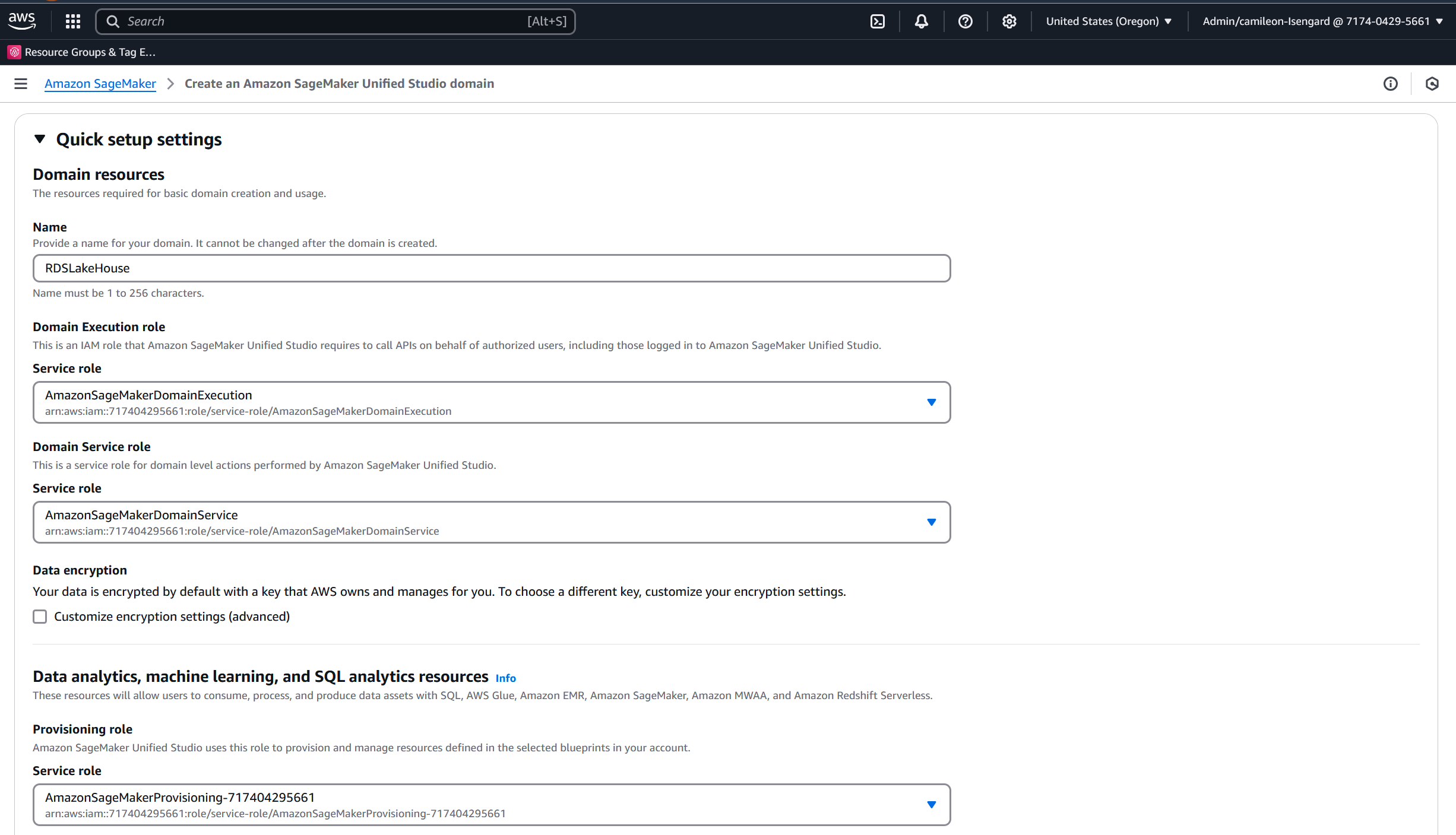

- For Domain name, enter a name (for example, RDSLakeHouse).

- For Domain Execution role, keep the default selection AmazonSageMakerDomainExecution.

- For Domain Service role, keep the default selection AmazonSageMakerDomainService.

- For Data encryption, keep the Customize encryption settings (advanced) check box unselected.

- For Provisioning role, keep the default selection AmazonSageMakerProvisioning-<YourAccountNumber>.

- Keep the default Create and use a new service role option for Manage access role.

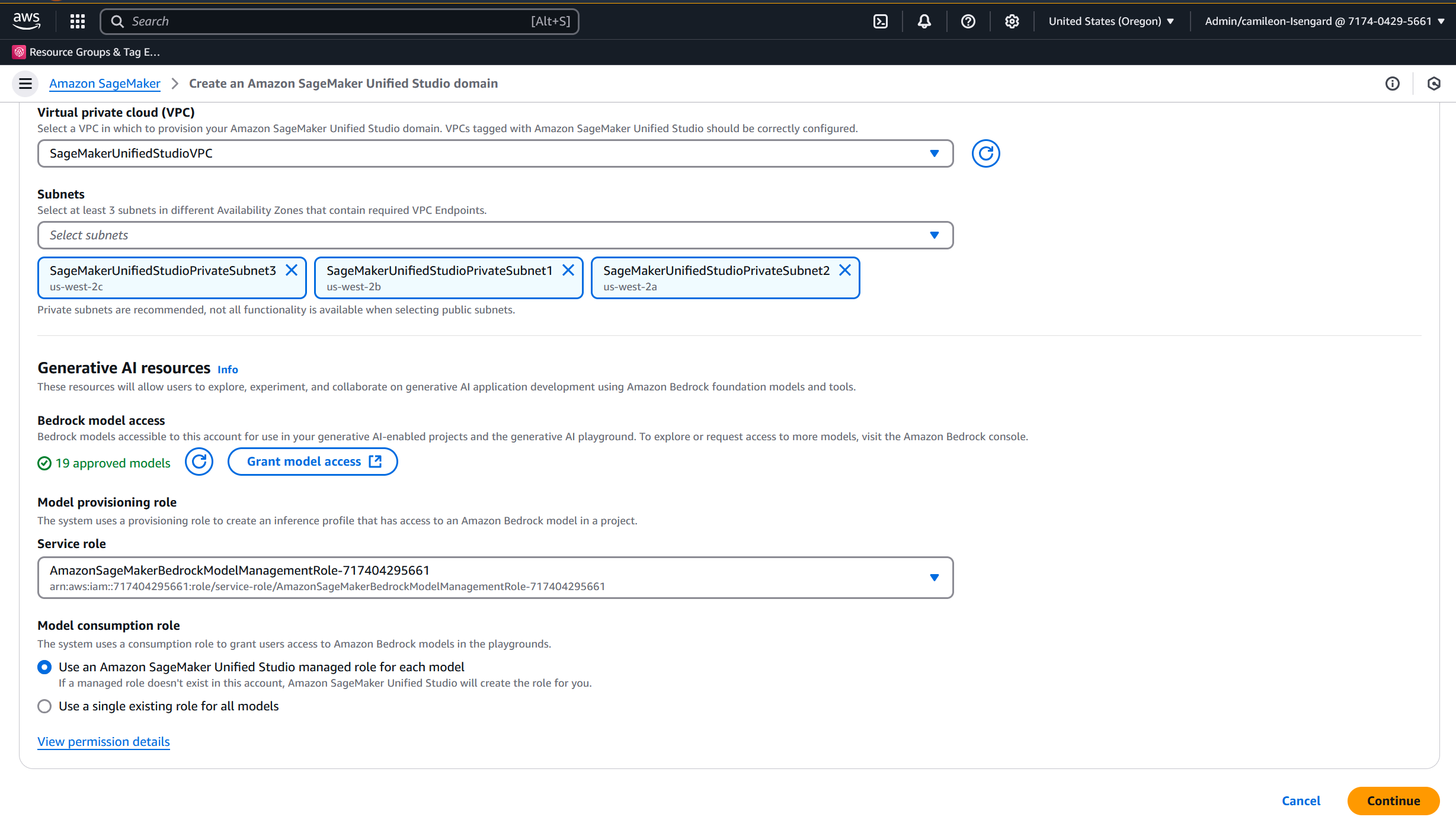

- For Virtual private cloud (VPC), choose the appropriate VPC.

- For Subnets, choose at least three subnets in different Availability Zones.

- In the Generative AI resources section, for Model provisioning role, keep the default selection AmazonSageMakerBedrockModelManagementRole-<YourAccountNumber>.

- For Model consumption role, keep the default option Use an Amazon SageMaker Unified Studio managed role for each model selected.

- Choose Continue.

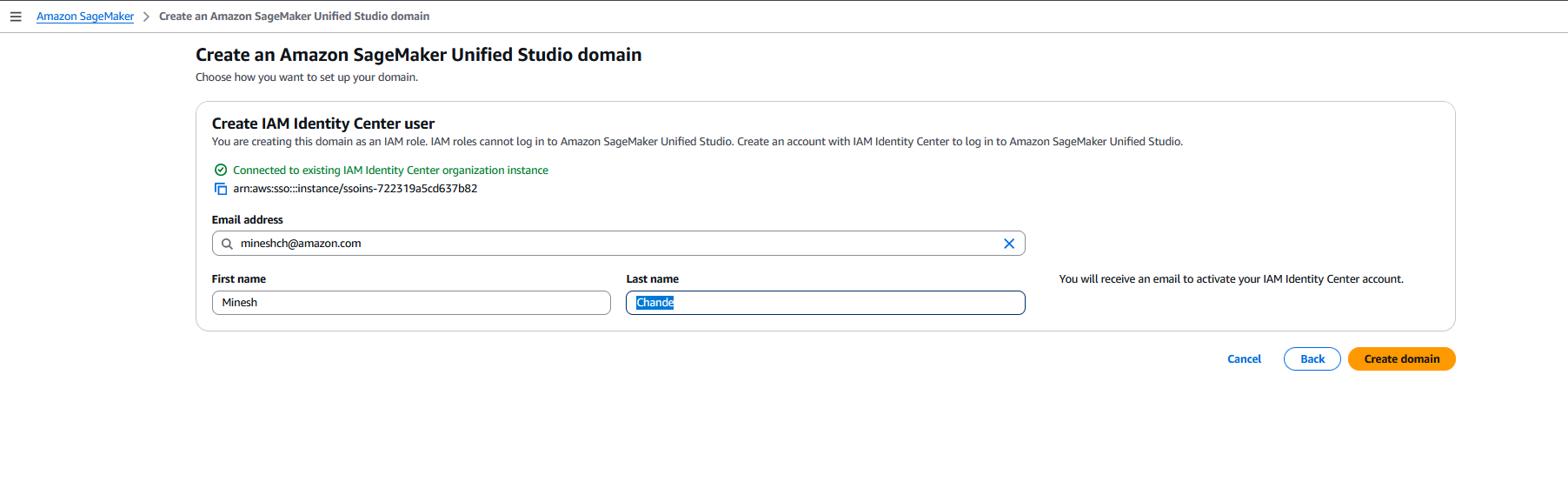

- In the Create IAM Identity Center user section, enter an email address, first name, and last name.

- Choose Create domain.

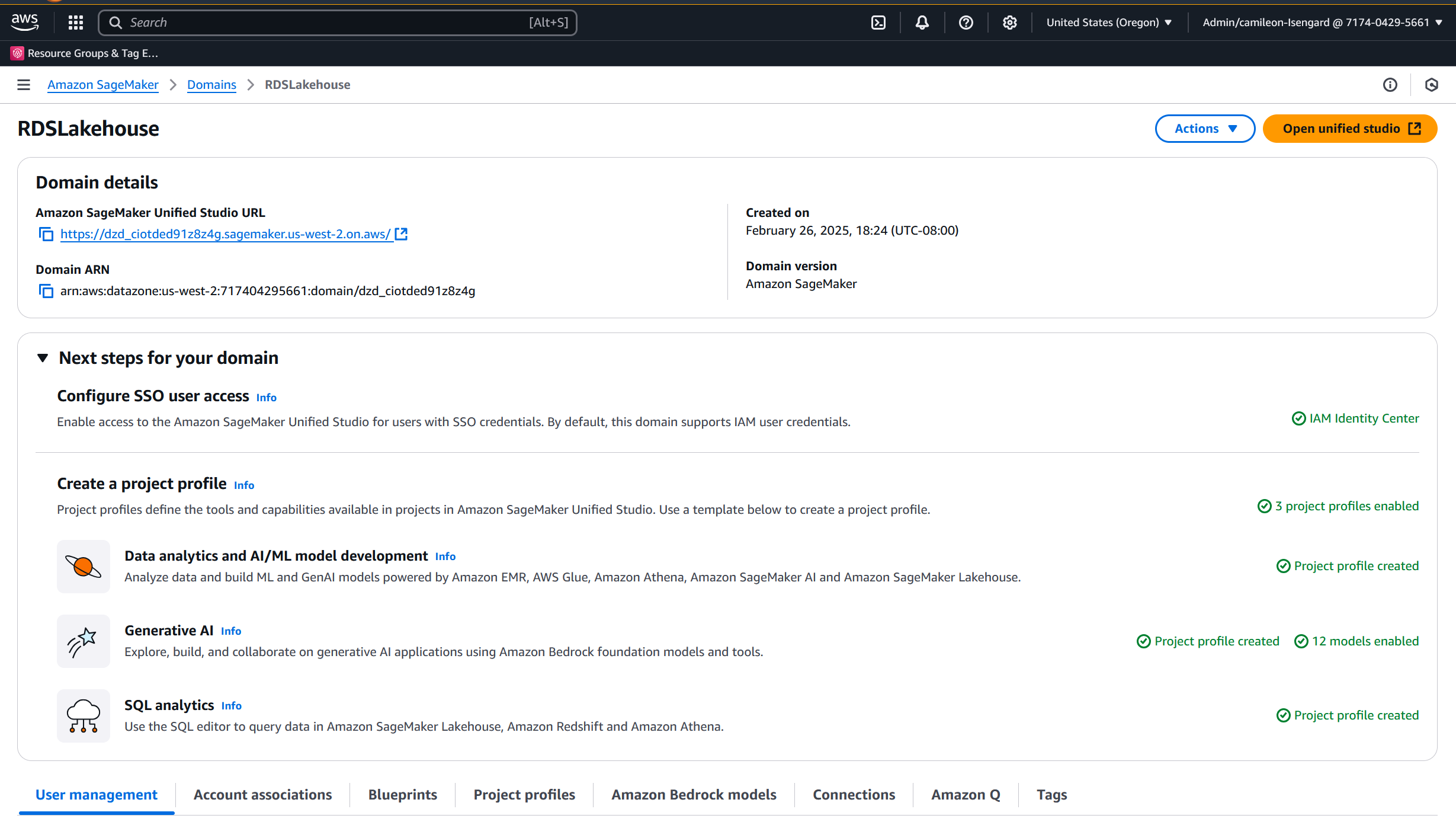

- Choose the newly created SageMaker domain.

- On the domain details page, choose Open unified studio to launch SageMaker Unified Studio (follow the prompts to complete the two-factor authentication process).

Create a new SQL Server data source from SageMaker Lakehouse

After you configure the SageMaker domain in the appropriate VPC, create a new SQL Server data source using the built-in SageMaker Lakehouse features. The data source connects to the sample wwi database hosted on Amazon RDS for SQL Server. Complete the following steps:

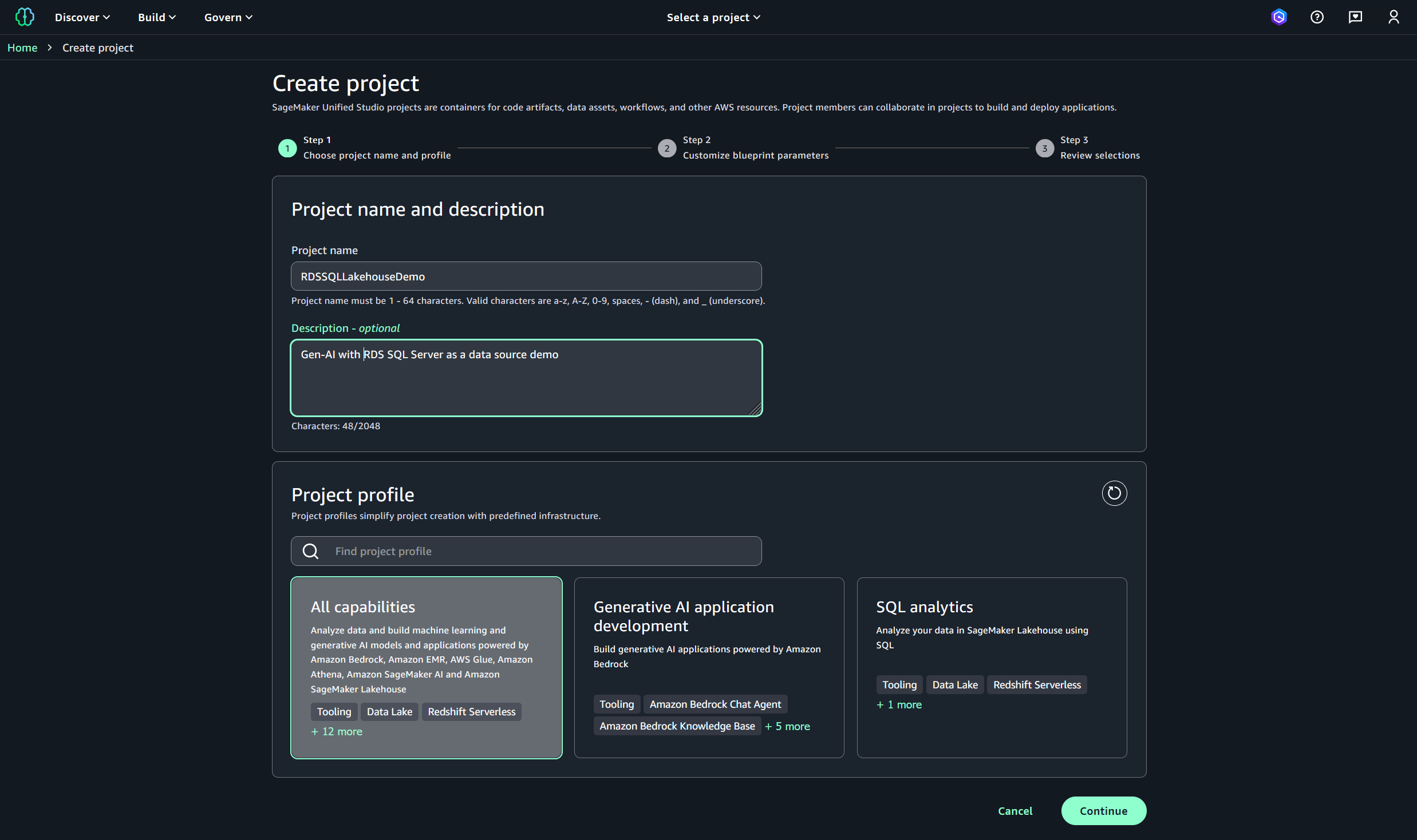

- On the SageMaker Unified Studio landing page, choose Create project.

- Under Create project, provide the following information:

- For Project name, enter a name (for example, RDSSQLLakehouseDemo).

- For Description, enter an optional description (for example, Gen-AI with RDS SQL Server as a data source demo).

- Choose All capabilities for the project profile.

- Choose Continue.

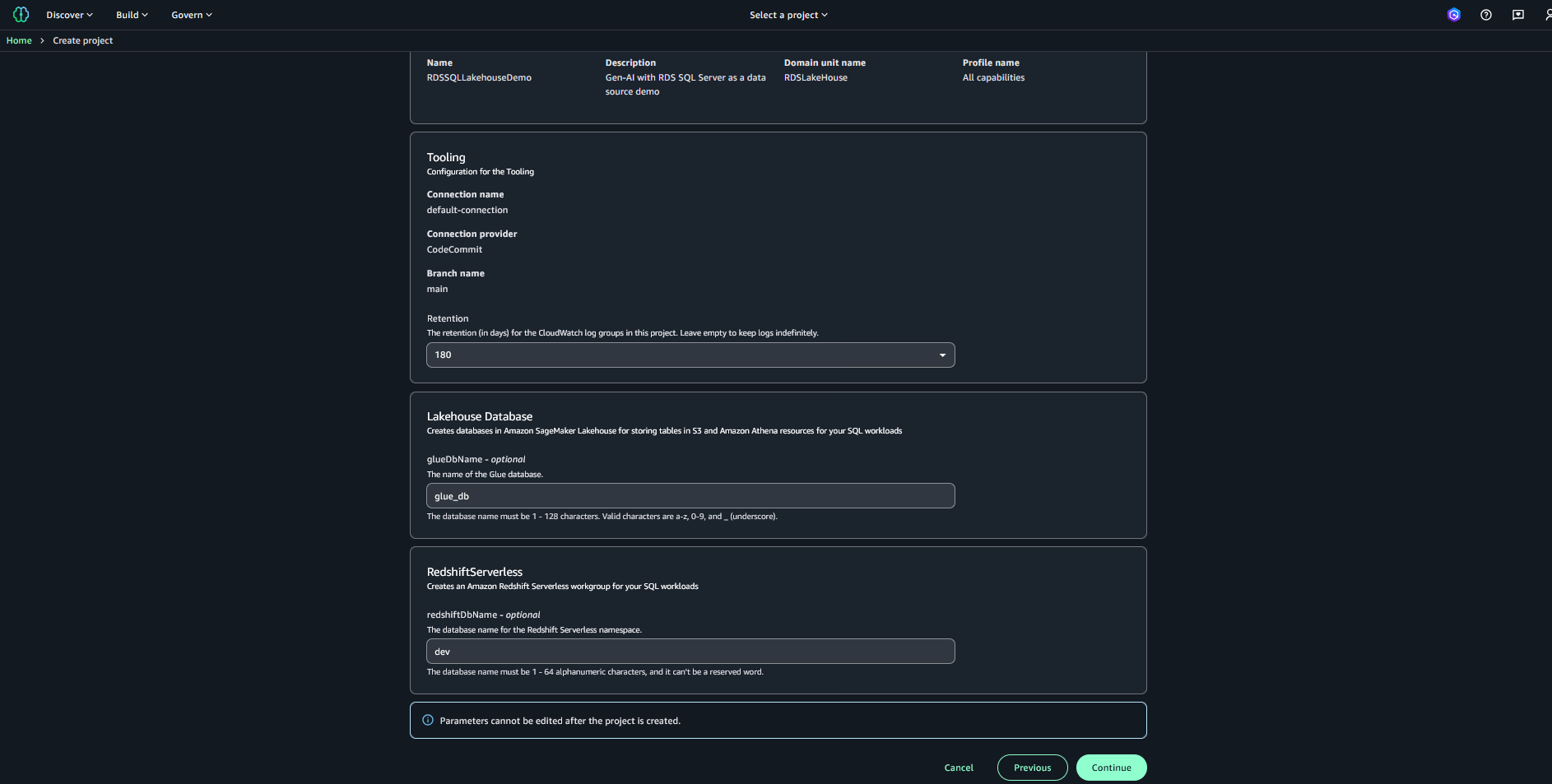

- In the Customize blueprint parameters section, provide the requested information, then choose Continue.

- Retention – Select 180 from the dropdown list

- glueDbName – Enter “glue_db”

- redshiftDbName – Enter “dev”

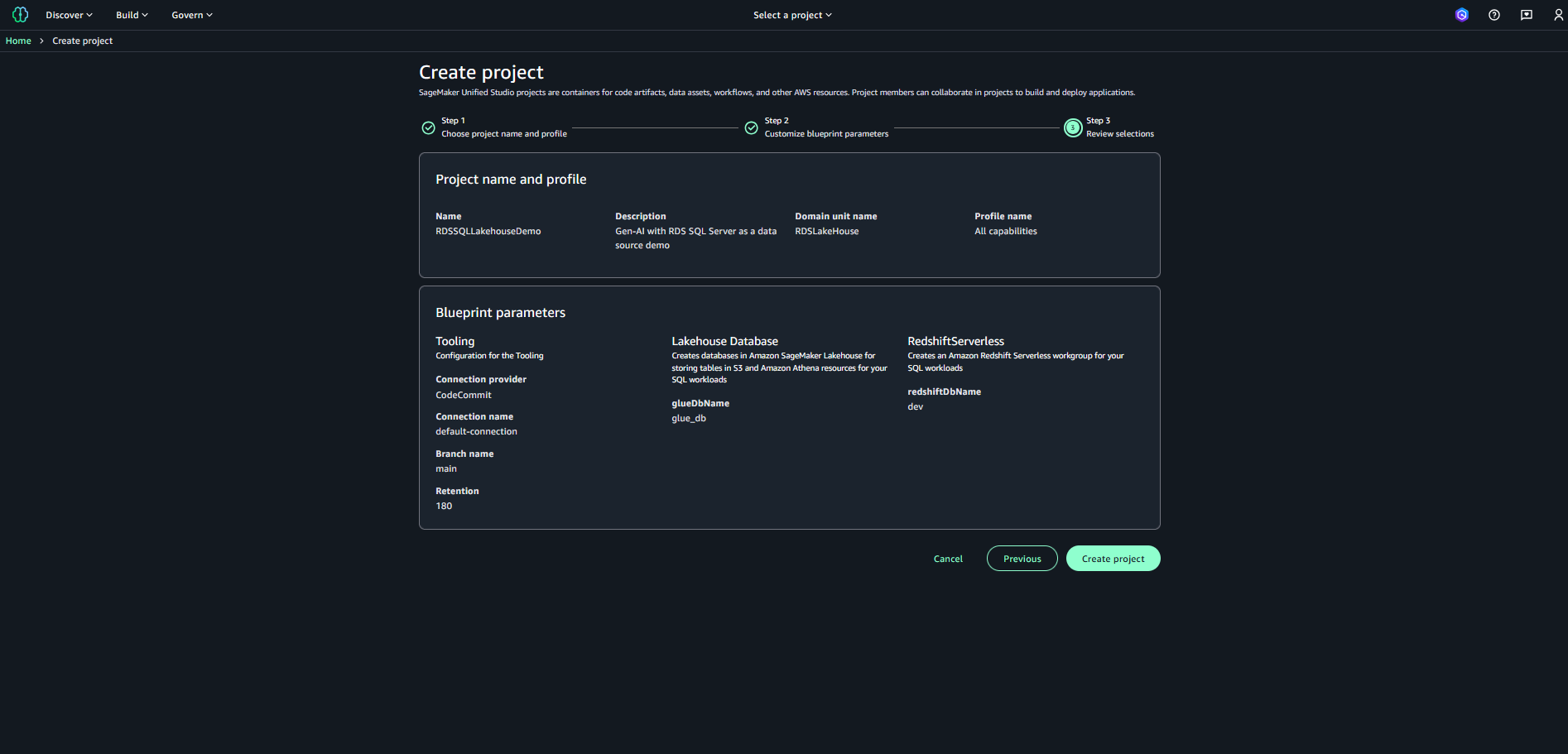

- Review your selections and choose Create project.

It may take a few minutes to create a project in SageMaker Lakehouse. Proceed to the next steps after the project is created successfully.

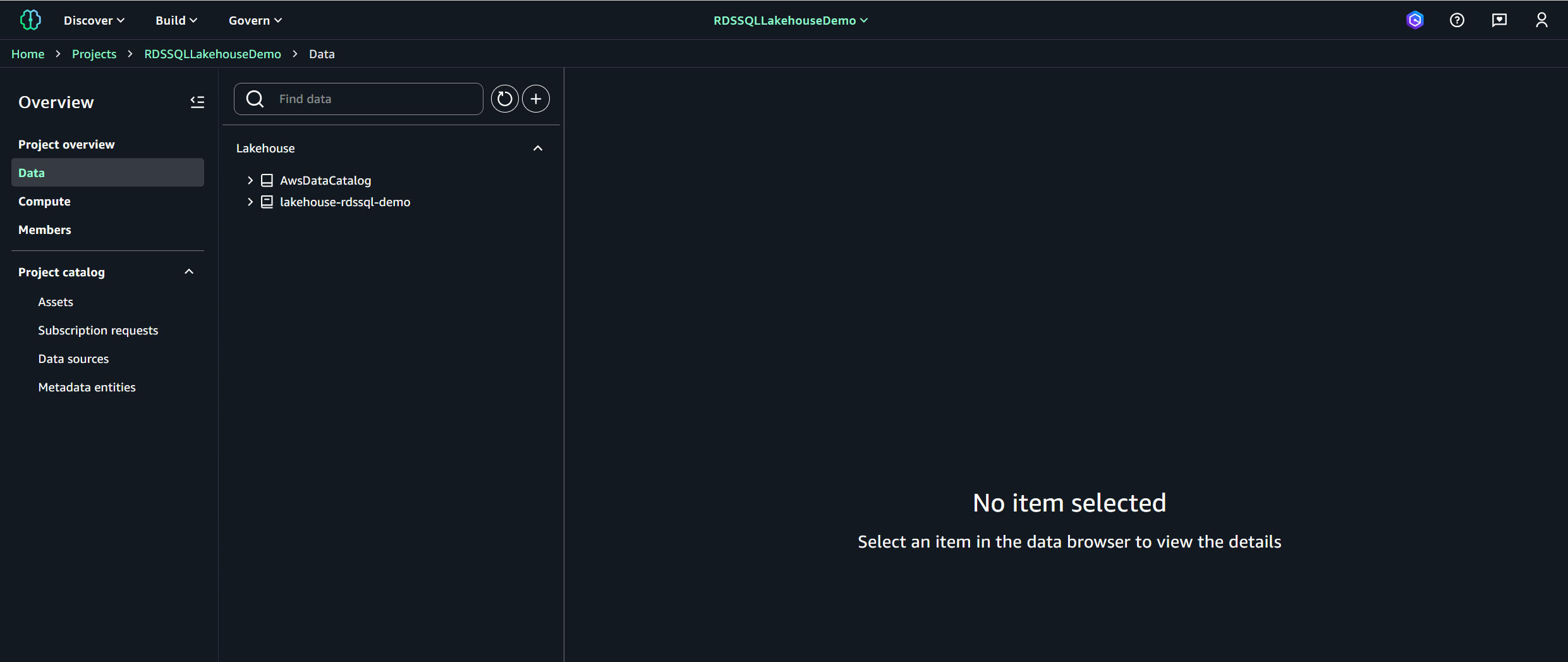

- On the RDSSQLLakehouseDemo project page, choose Data in the navigation pane to open SageMaker Lakehouse.

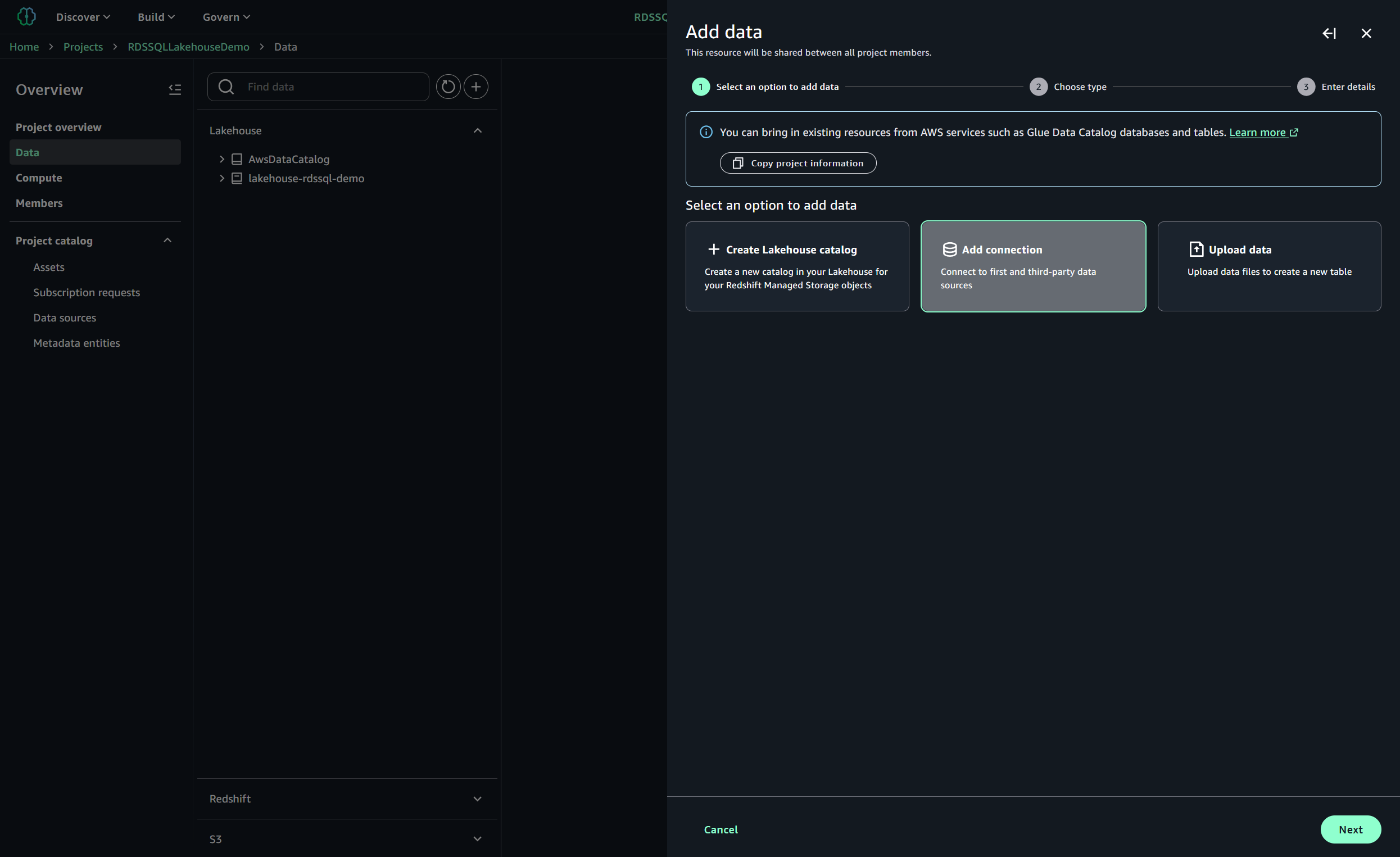

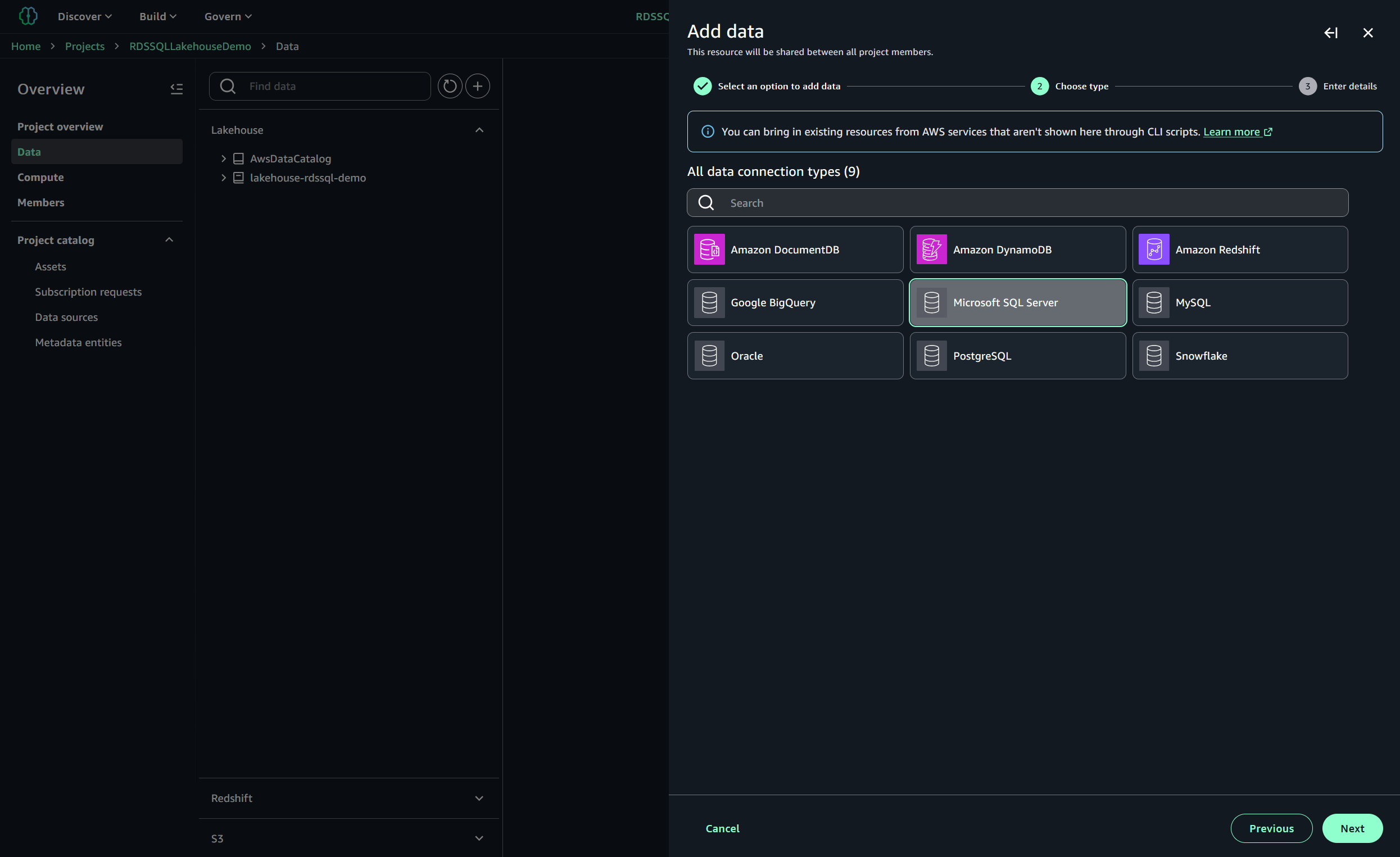

- Choose the plus sign to create a new data source.

- Choose Add connection, then choose Next.

- Choose Microsoft SQL Server as the connection type, then choose Next.

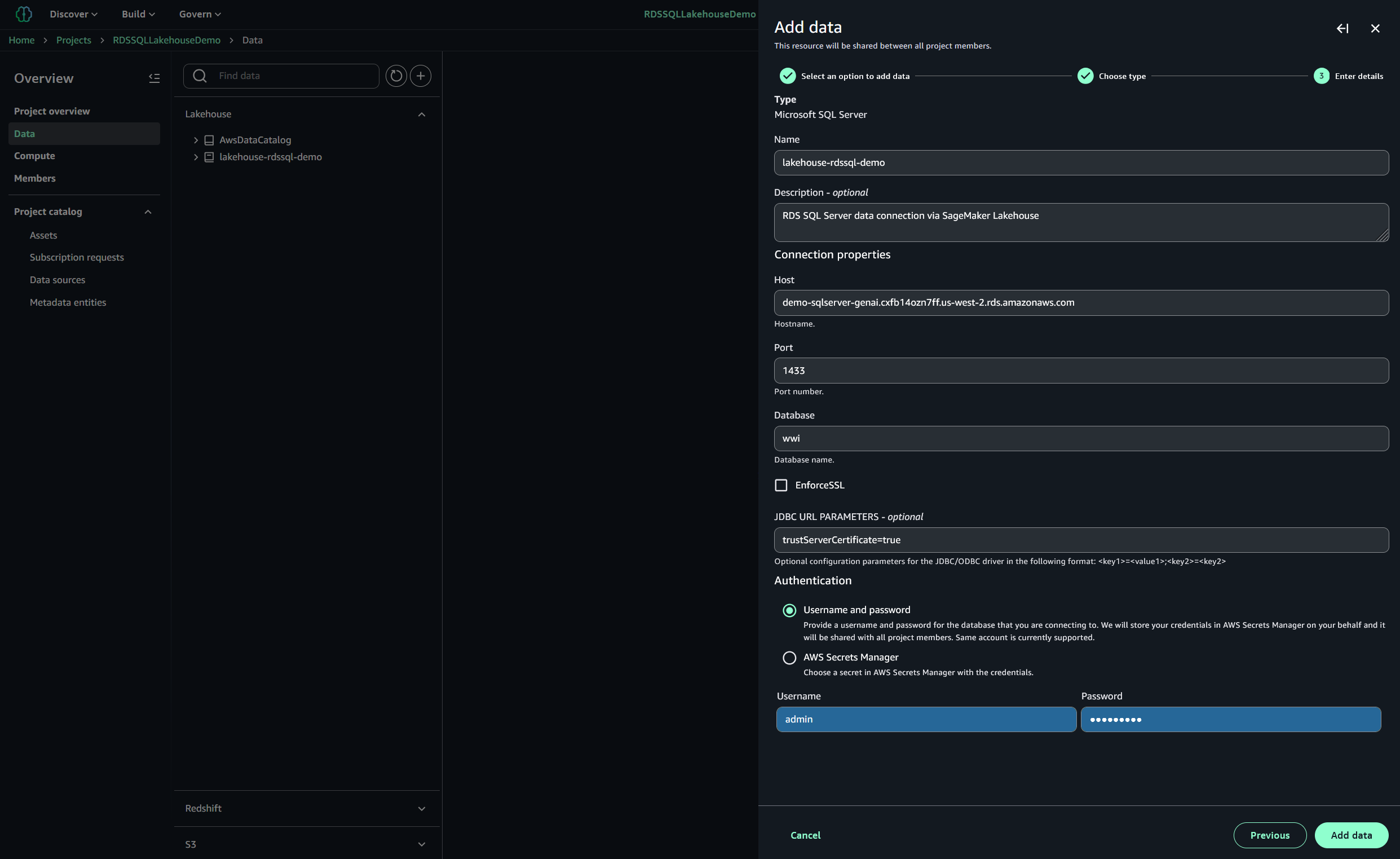

- Under Add data, provide the following information:

- For Name, enter a name (for example, lakehouse-rdssql-demo).

- For Description, enter an optional description (for example, RDS SQL Server data connection via SageMaker Lakehouse).

- For Host, enter your RDS for SQL Server instance endpoint.

- For Port, use 1433 (unless your instance was configured differently).

- For Database, enter wwi.

- For JDBC URL Parameters, enter trustServerCertificate=true.

- Under Authentication, select Username and password, then enter your RDS for SQL Server username and password (as of this writing, there’s a UI issue for passwords with special characters).

- Choose Add data.

The “Add Data” feature connects to and scans your data sources, infers schema or structure, and prepares the data for use in notebooks or pipelines, which can take a few minutes depending on data size, permissions, and environment readiness.

After successful completion of this task, a new entry will show in the Lakehouse pane, under the default AwsDataCatalog data connection.
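To make the connection fields above more concrete, the following Python sketch assembles Host, Port, Database, and JDBC URL Parameters into the standard Microsoft SQL Server JDBC URL format. This post does not describe how SageMaker Unified Studio builds the URL internally, so treat this as an illustration of the standard format only. The function name and the endpoint value are hypothetical.

```python
def build_sqlserver_jdbc_url(host, database, port=1433, params=None):
    """Build a SQL Server JDBC URL from the individual connection fields."""
    # Format: jdbc:sqlserver://host:port;property=value;property=value
    parts = [f"jdbc:sqlserver://{host}:{port}", f"databaseName={database}"]
    for key, value in (params or {}).items():
        parts.append(f"{key}={value}")
    return ";".join(parts)


# Hypothetical endpoint; replace with your RDS for SQL Server instance endpoint.
url = build_sqlserver_jdbc_url(
    "mydb.example.us-east-1.rds.amazonaws.com",
    "wwi",
    params={"trustServerCertificate": "true"},
)
print(url)
```

Note that `trustServerCertificate=true` skips validation of the server's TLS certificate. That is convenient for a demo, but for production workloads you may want to validate the certificate instead.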

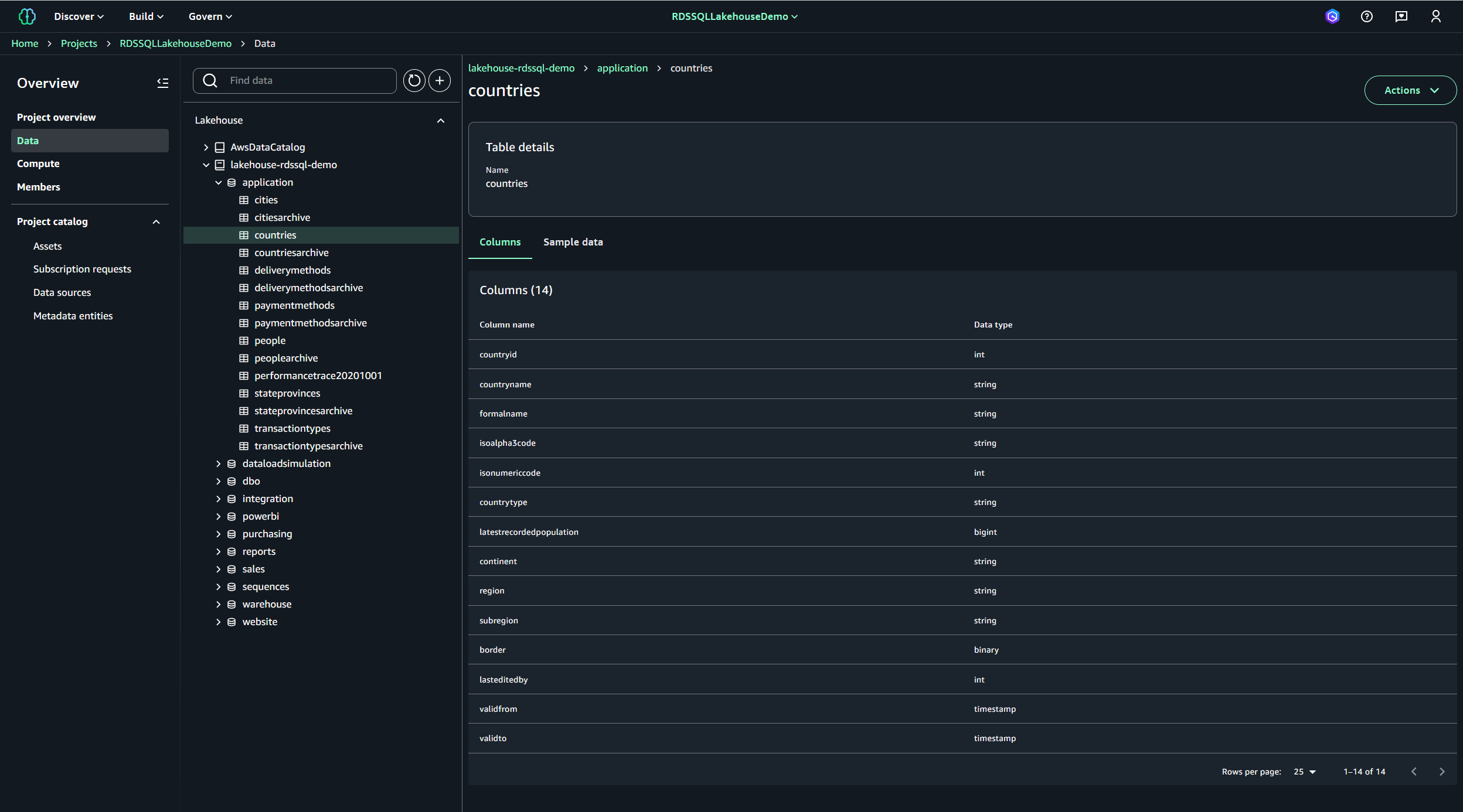

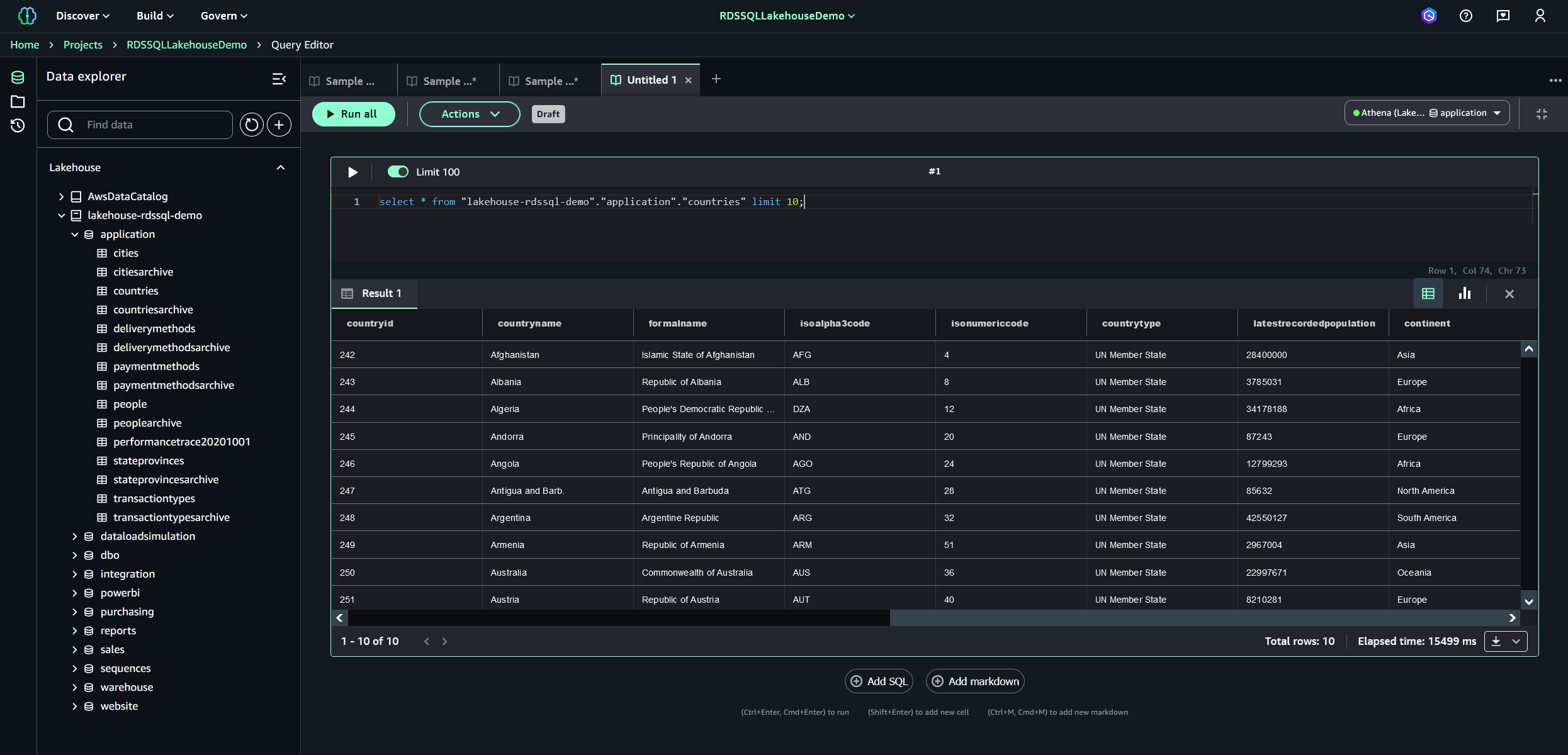

- Expand the lakehouse-rdssql-demo data connection to browse available schemas in the wwi database, expand the application schema, then choose the countries table.

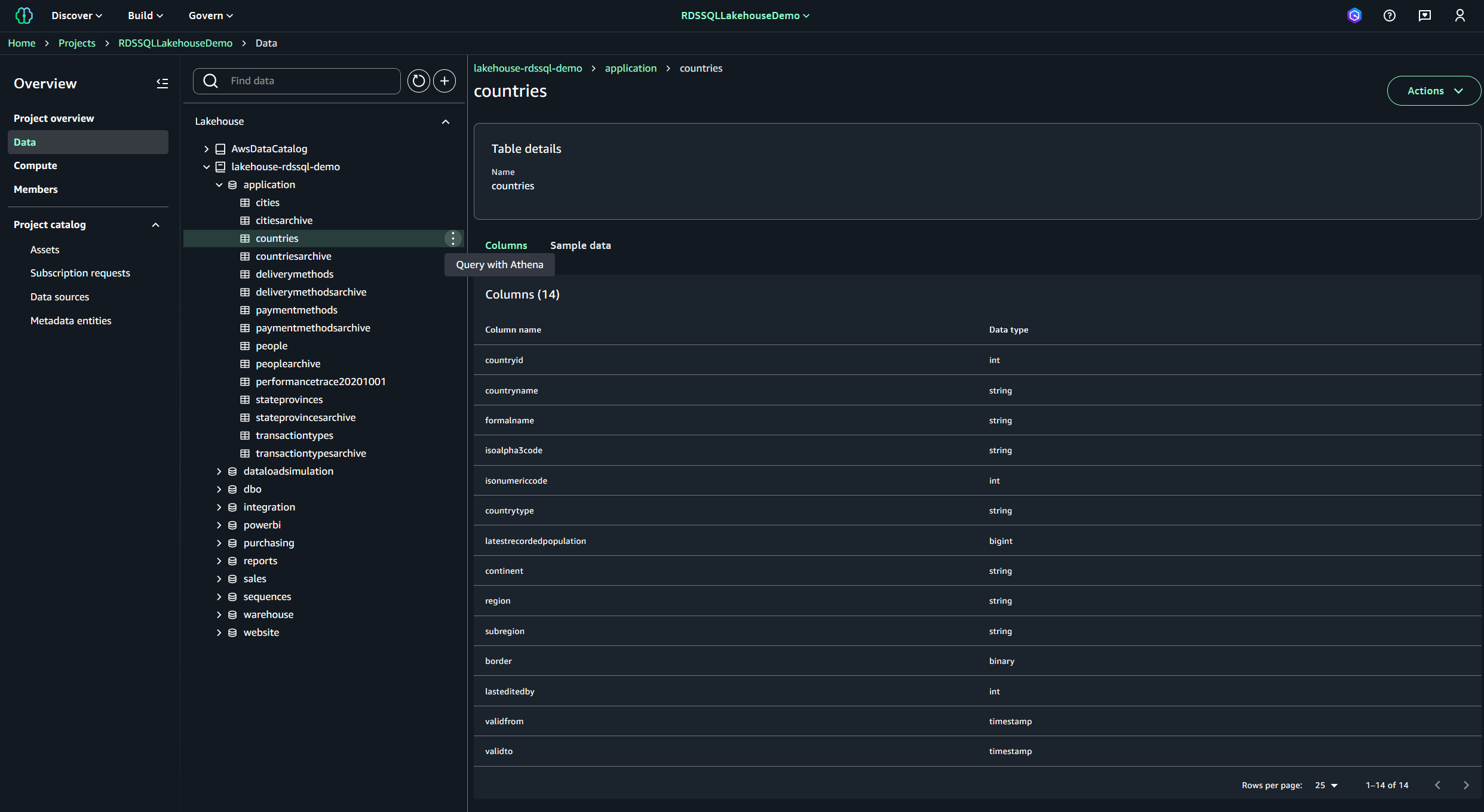

- Choose the options menu (three vertical dots) next to the countries table and choose Query with Athena.

This action launches the query editor with a prepopulated Amazon Athena SQL query, which runs automatically. After a few seconds, a list of 10 countries should be displayed on the page, as shown in the following screenshot. To browse all countries, remove the limit 10 portion of the SQL statement, disable the Limit 100 option, and rerun the query.
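The same kind of preview query can also be submitted outside the console. The following Python sketch uses the Amazon Athena API calls `start_query_execution`, `get_query_execution`, and `get_query_results` through a boto3 client that you pass in. The query shape, the catalog name under which the federated connection is visible to Athena, and the workgroup name are assumptions; check the prepopulated query in the editor for the exact identifiers in your environment.

```python
import time


def build_preview_query(catalog, schema, table, limit=10):
    """Build a simple preview query with double-quoted identifiers."""
    def quote(identifier):
        return '"' + identifier.replace('"', '""') + '"'

    return f"SELECT * FROM {quote(catalog)}.{quote(schema)}.{quote(table)} LIMIT {int(limit)}"


def run_query(athena, sql, workgroup, poll_seconds=1.0):
    """Run a query and return the first page of rows (header row included)."""
    started = athena.start_query_execution(QueryString=sql, WorkGroup=workgroup)
    query_id = started["QueryExecutionId"]
    while True:
        execution = athena.get_query_execution(QueryExecutionId=query_id)
        state = execution["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query ended in state {state}")
    rows = athena.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    return [[column.get("VarCharValue") for column in row["Data"]] for row in rows]


# Catalog name is an assumption based on the connection name used in this post.
sql = build_preview_query("lakehouse-rdssql-demo", "application", "countries")
print(sql)
# With credentials configured, you could then call, for example:
#   import boto3
#   rows = run_query(boto3.client("athena"), sql, workgroup="<your-workgroup>")
```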

Generative AI RAG scenarios using SageMaker Lakehouse

After you have created a SageMaker project with a properly configured SQL Server data source, you’re ready for the generative AI section of this demo. Complete the steps in this section to execute generative AI RAG scenarios using the sample wwi SQL Server database as a source.

Image generation use case

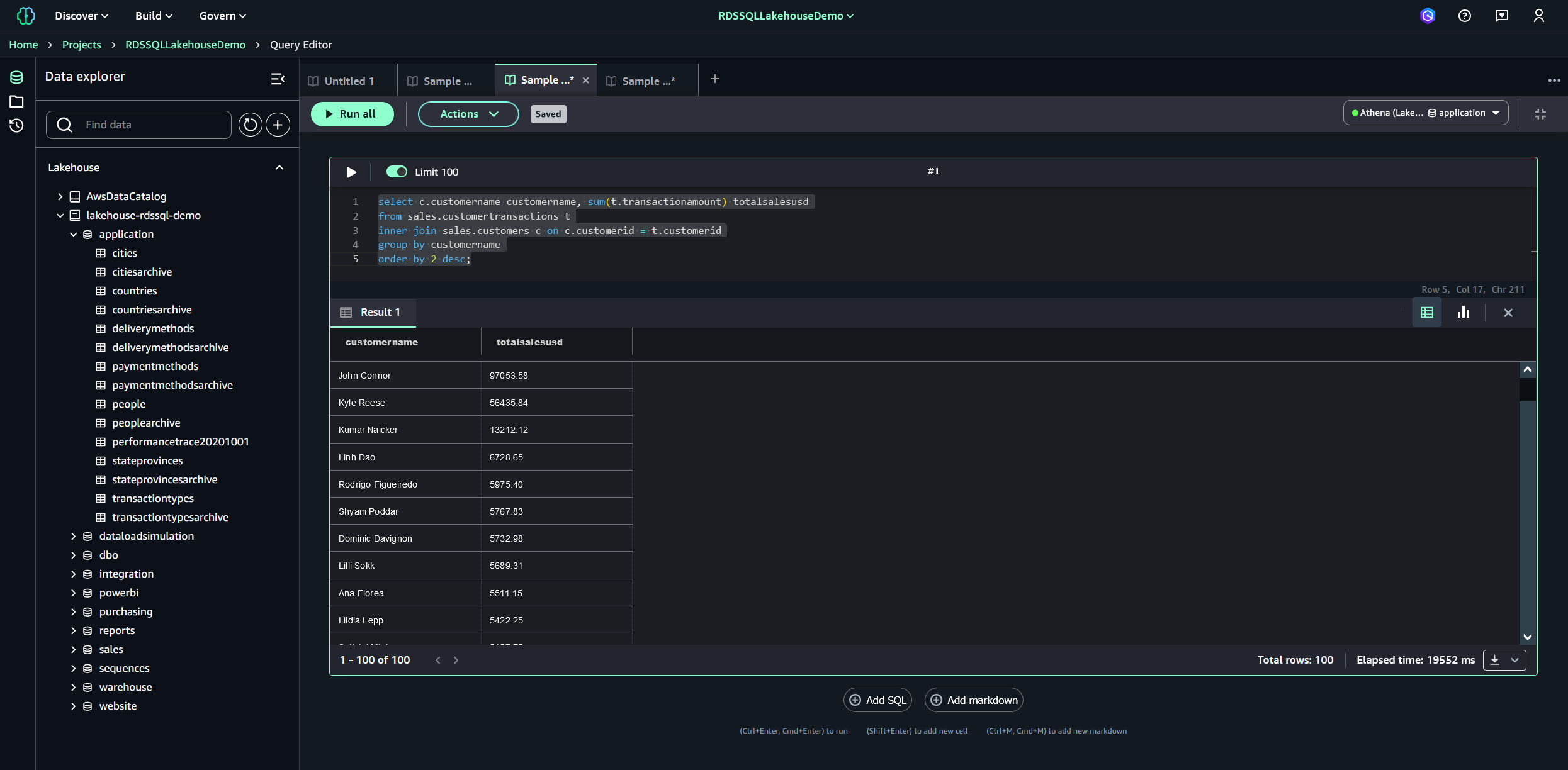

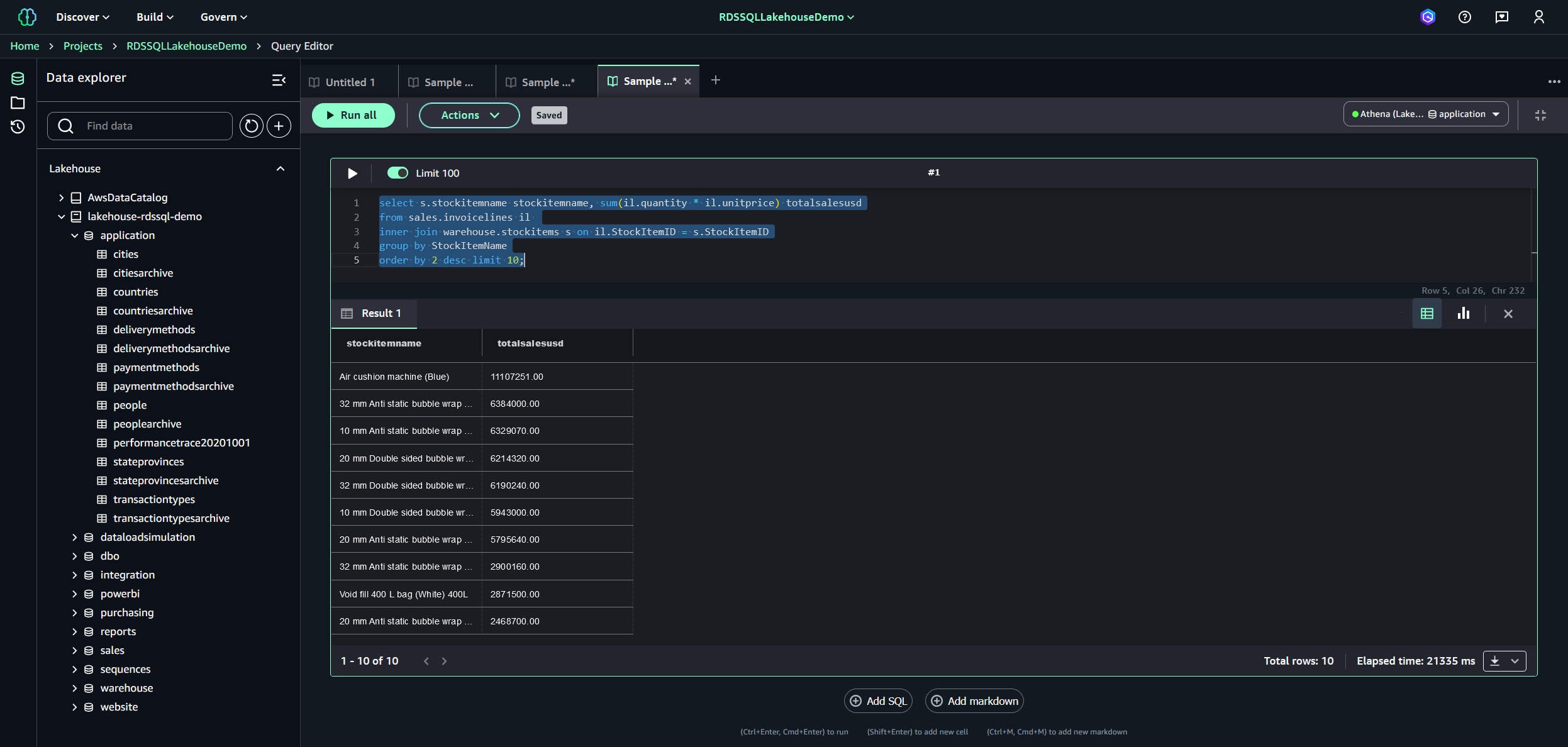

For our first use case, we execute a sample query that will be the source data for an image generation generative AI example using a Stability AI FM. To execute a sample query using the SageMaker Unified Studio query editor, complete the following steps:

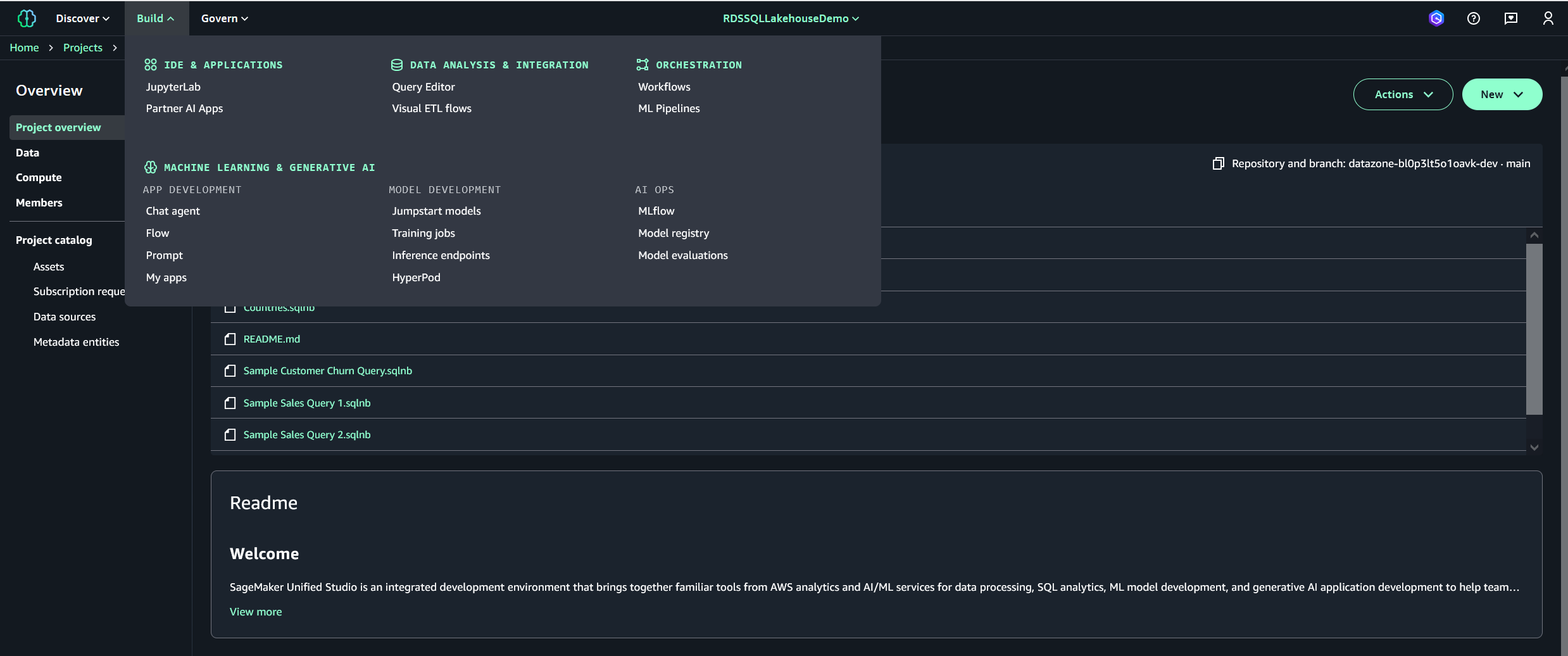

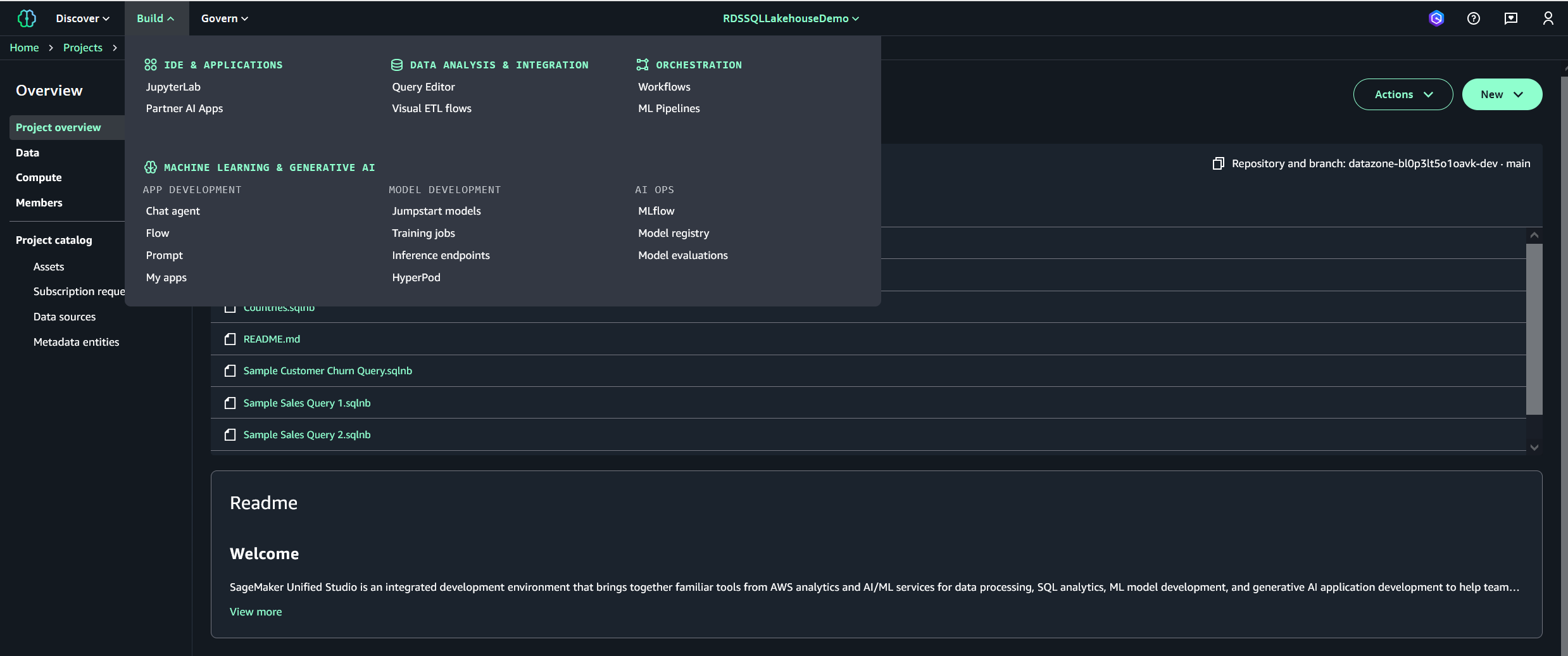

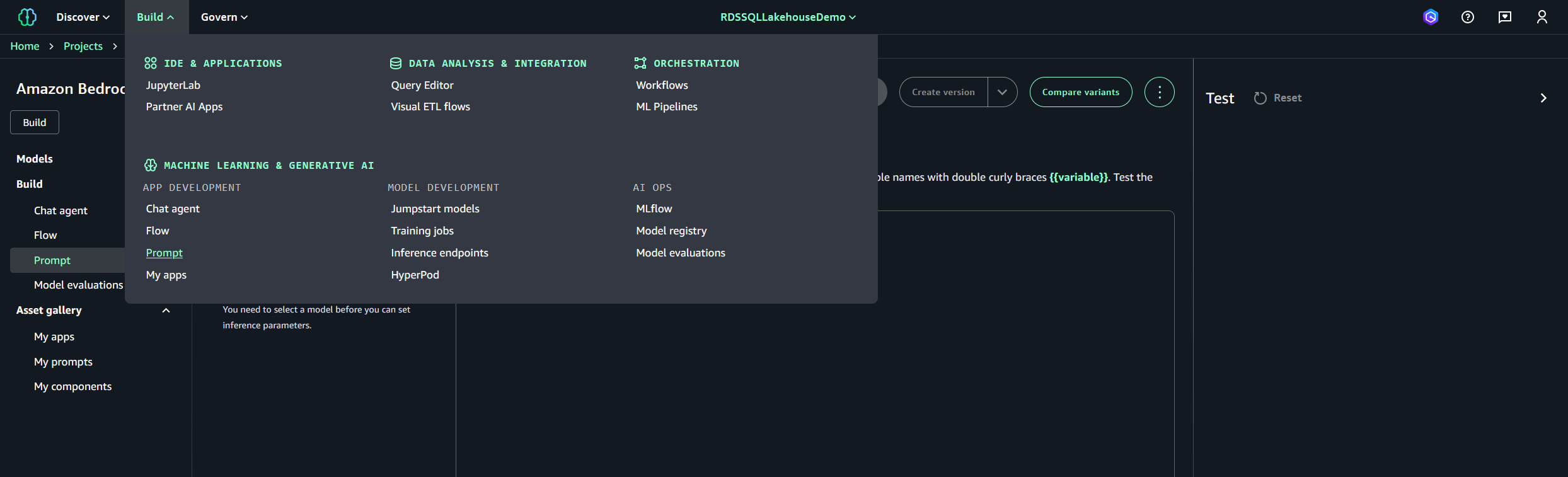

- In SageMaker Unified Studio, choose Build from the menu bar, then choose Query Editor under Data Analysis & Integration.

- In the query editor, enter the following T-SQL command, then choose Run all to run the query:

After a few seconds, the top 10 list of customers will be shown.

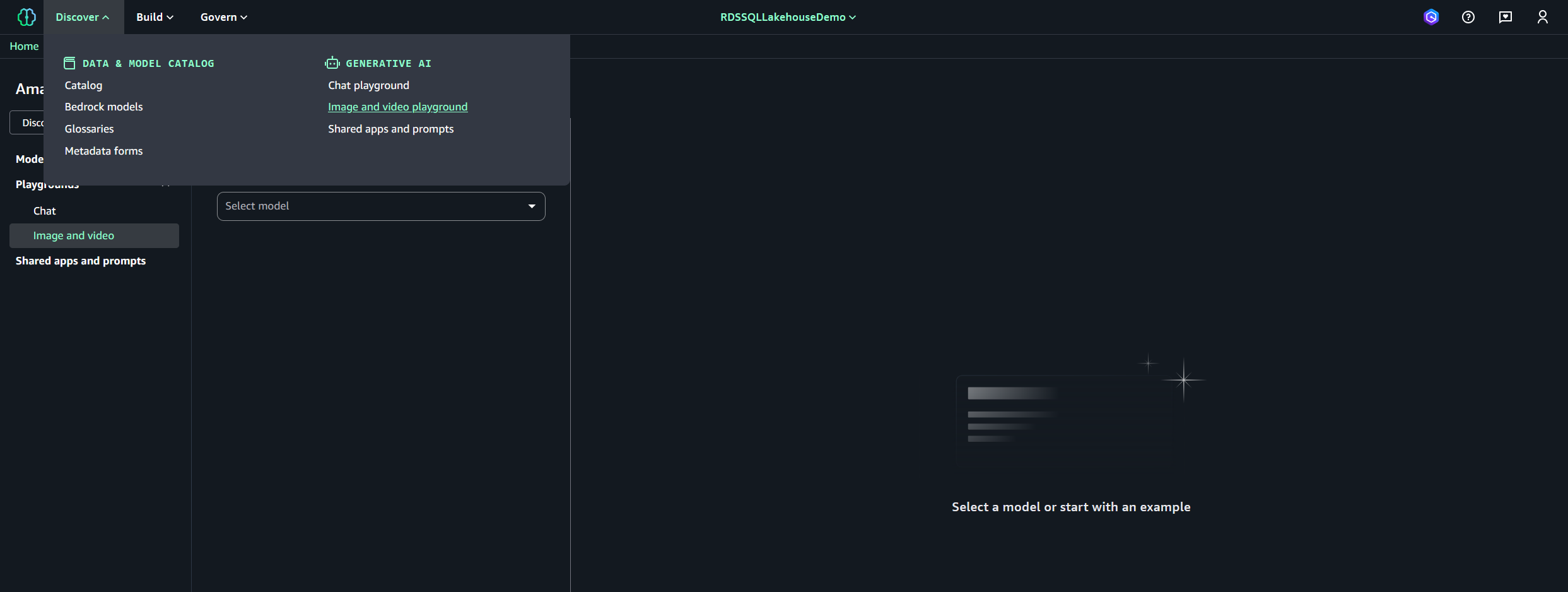

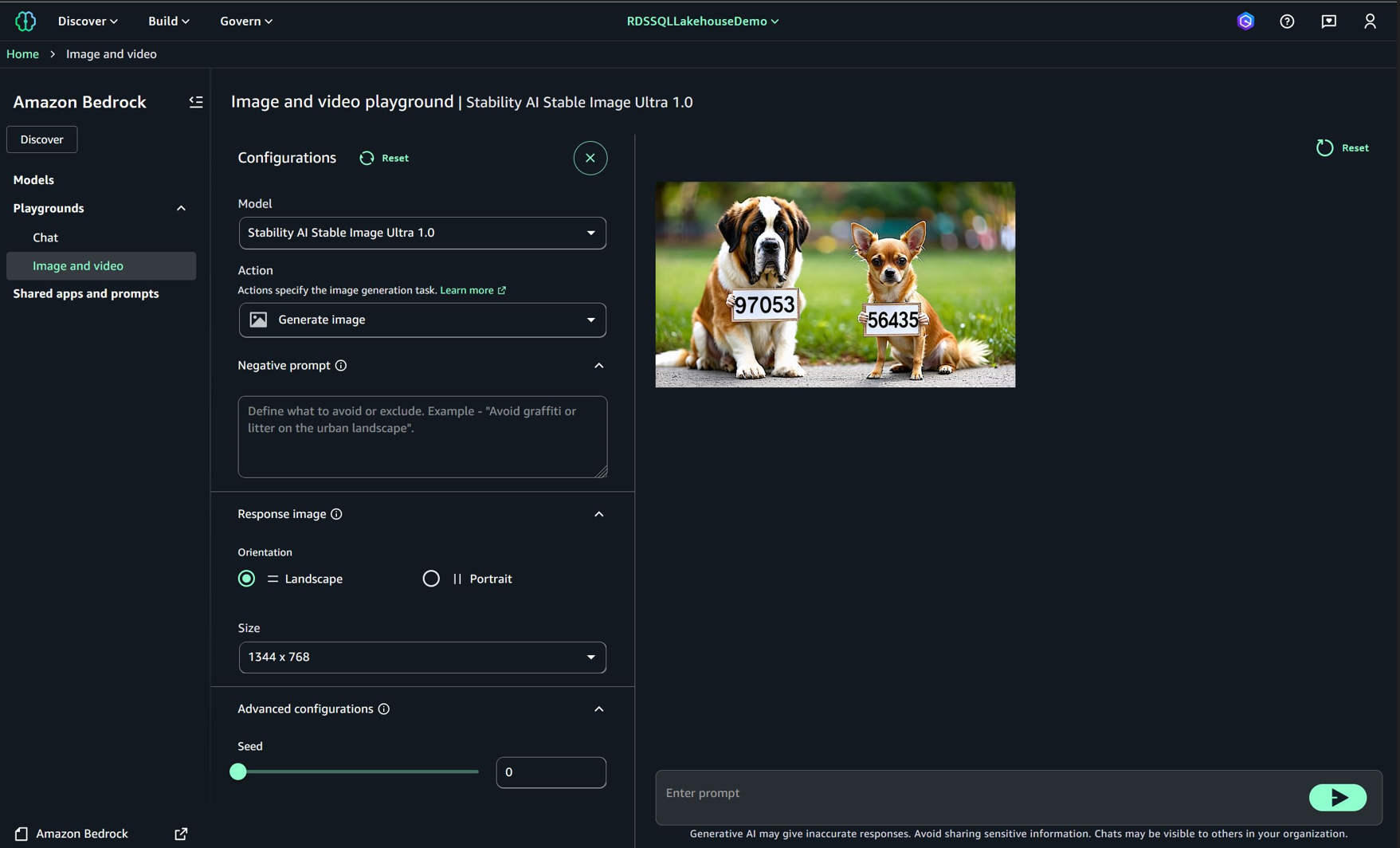

- Choose Discover on the menu bar and choose Image and video playground under Generative AI.

- Under Image and video playground, for Configurations, choose Stability AI Stable Image Ultra 1.0.

- For Size, choose 1344 x 768.

- Keep remaining default values.

- Enter the following prompt and then choose the play icon:

An image similar to the one shown in the following screenshot will appear. Because these FMs are non-deterministic (they are all statistical models), it’s likely that the image generated in your environment will be different. An interesting exercise is retrying the same prompt several times to see what the FM generates in each case. This example is just to showcase SageMaker Unified Studio image generation capabilities using specific data points from a SQL Server source (“97053” and “56435” sales totals) as literal values extracted from the query and embedded in the prompt.
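For readers who want to reproduce this step programmatically, the following Python sketch calls the model through the Amazon Bedrock `invoke_model` API and decodes the base64-encoded image in the response. The model ID default, the `16:9` aspect ratio (assumed to correspond to the 1344 x 768 size), and the example prompt are assumptions. The prompt shown is a hypothetical stand-in, not the prompt used in this post.

```python
import base64
import json


def build_image_request(prompt, aspect_ratio="16:9"):
    """Serialize a text-to-image request body for Stable Image Ultra."""
    return json.dumps({"prompt": prompt, "aspect_ratio": aspect_ratio})


def generate_image(bedrock_runtime, prompt, model_id="stability.stable-image-ultra-v1:0"):
    """Invoke the model and return the generated image as raw bytes.

    bedrock_runtime is a boto3 "bedrock-runtime" client supplied by the caller.
    The default model_id is an assumption; confirm it for your Region.
    """
    response = bedrock_runtime.invoke_model(
        modelId=model_id,
        body=build_image_request(prompt),
    )
    payload = json.loads(response["body"].read())
    # The response carries a list of base64-encoded images; take the first one.
    return base64.b64decode(payload["images"][0])


# Hypothetical prompt that embeds the two sales totals as literal values.
example_prompt = "A bar chart comparing two customers with sales totals of 97053 and 56435"
print(build_image_request(example_prompt))
# With model access and credentials configured, you could then call, for example:
#   import boto3
#   image_bytes = generate_image(boto3.client("bedrock-runtime"), example_prompt)
#   open("sales.png", "wb").write(image_bytes)
```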

Text generation use case

For the second use case, we execute another sample query that will be the source data for a text generation generative AI example using an Amazon Nova FM. Complete the following steps:

- In SageMaker Unified Studio, choose Build from the menu bar, then choose Query Editor under Data Analysis & Integration.

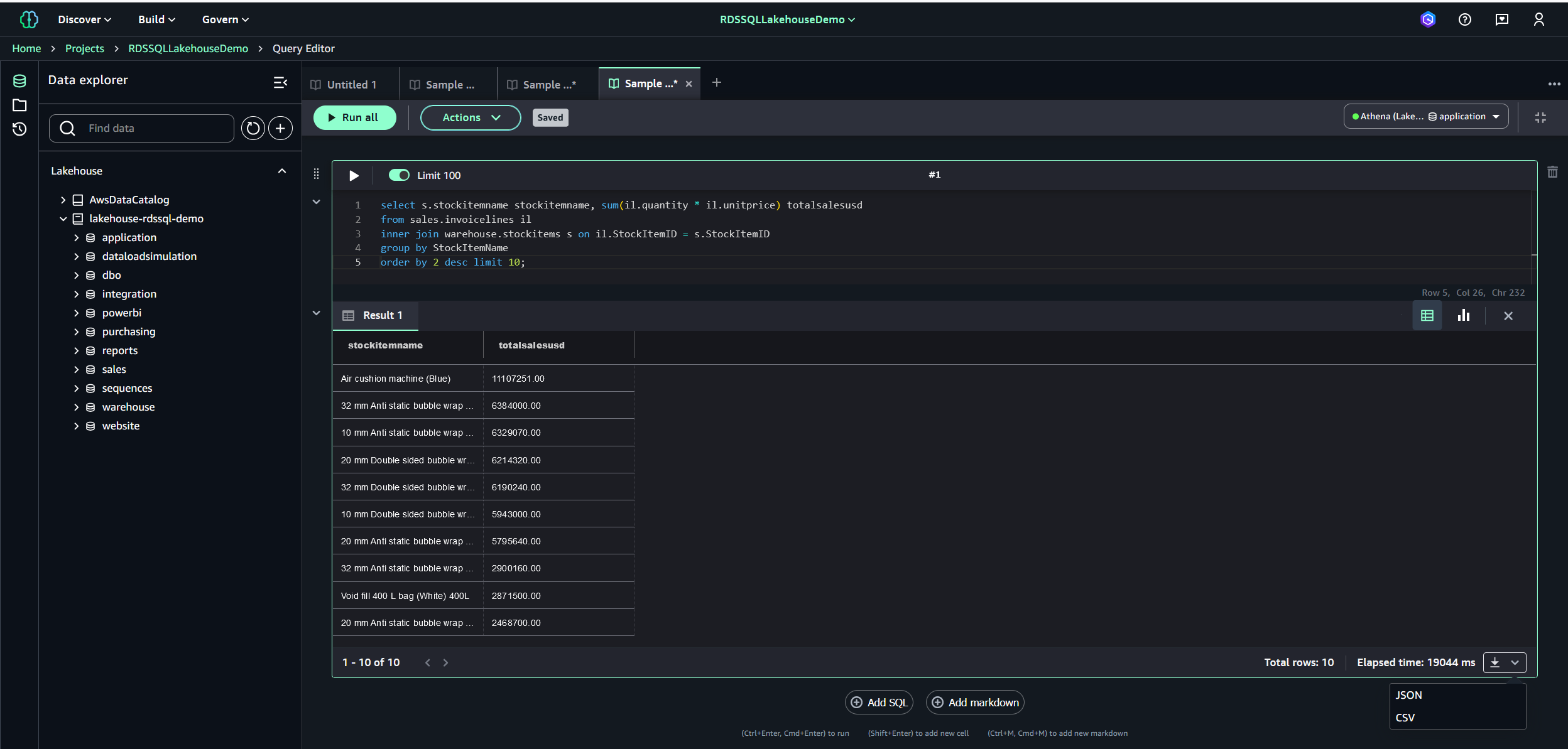

- In the query editor, enter the following T-SQL command, then choose Run all to run the query:

After a few seconds, the top 10 list of customers will be shown.

- Download the result set by choosing the CSV icon.

- Open the CSV file, select the entire result set, and copy it to the clipboard for later use.

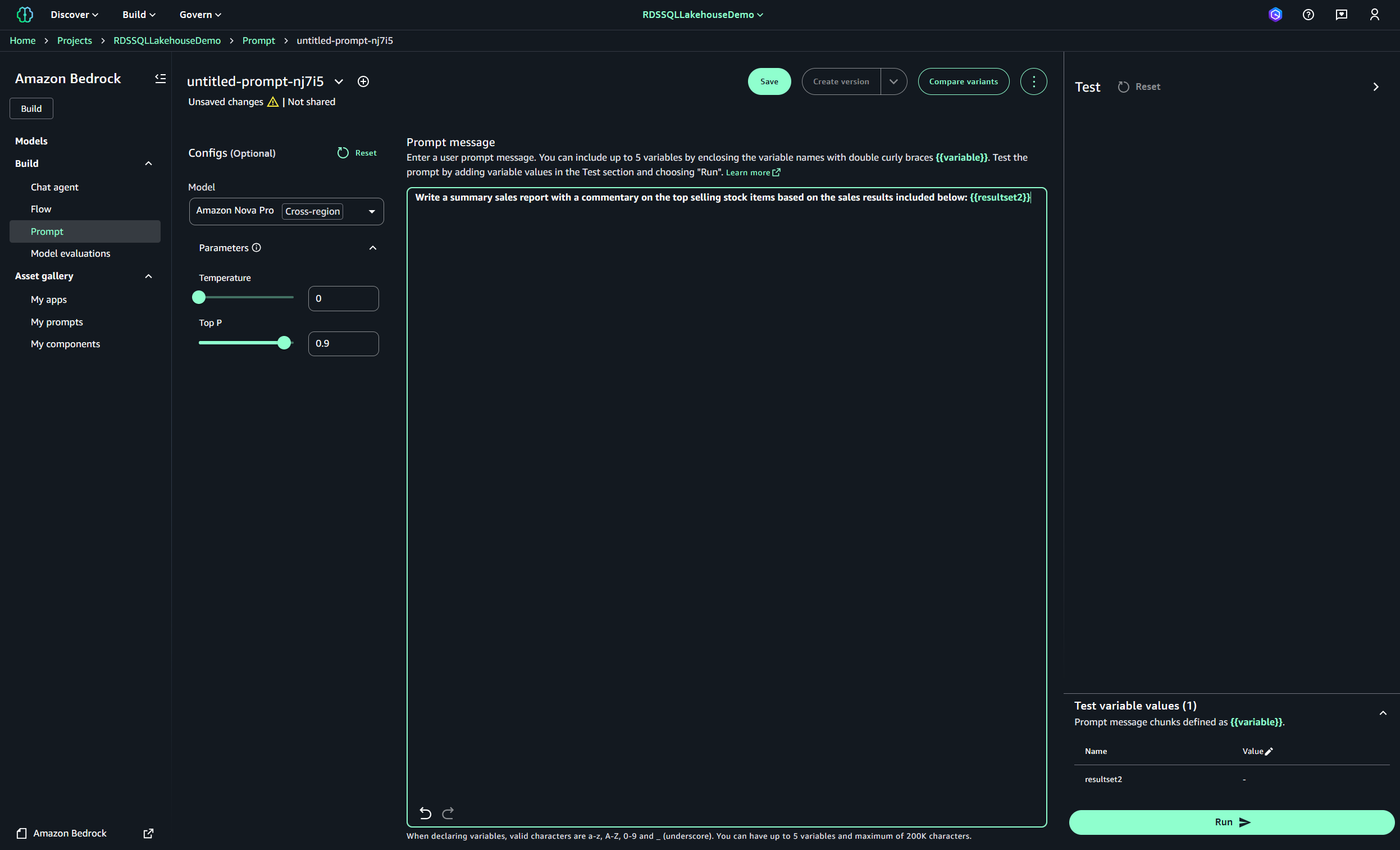

- Choose Build on the menu bar and choose Prompt under Machine Learning & Generative AI.

- Choose the Amazon Nova Pro FM.

- Keep the remaining default values.

- Enter the following prompt: “Write a summary sales report with a commentary on the top selling stock items based on the sales results included below: {{resultset2}}”.

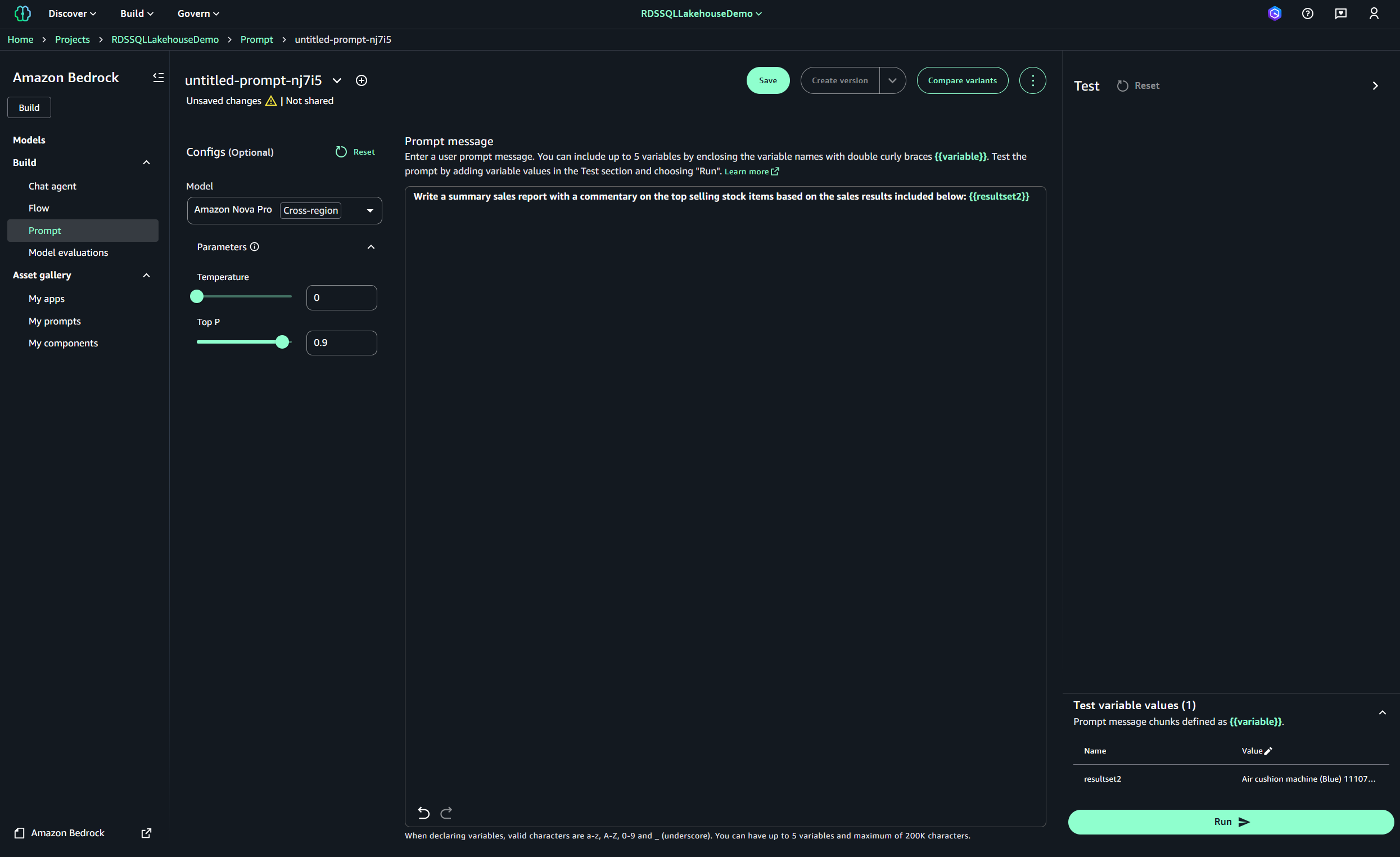

- Edit the resultset2 variable Value field and paste the SQL Server result set you copied in the previous steps.

- Choose Run and wait for a few seconds for the summary sales report to generate.

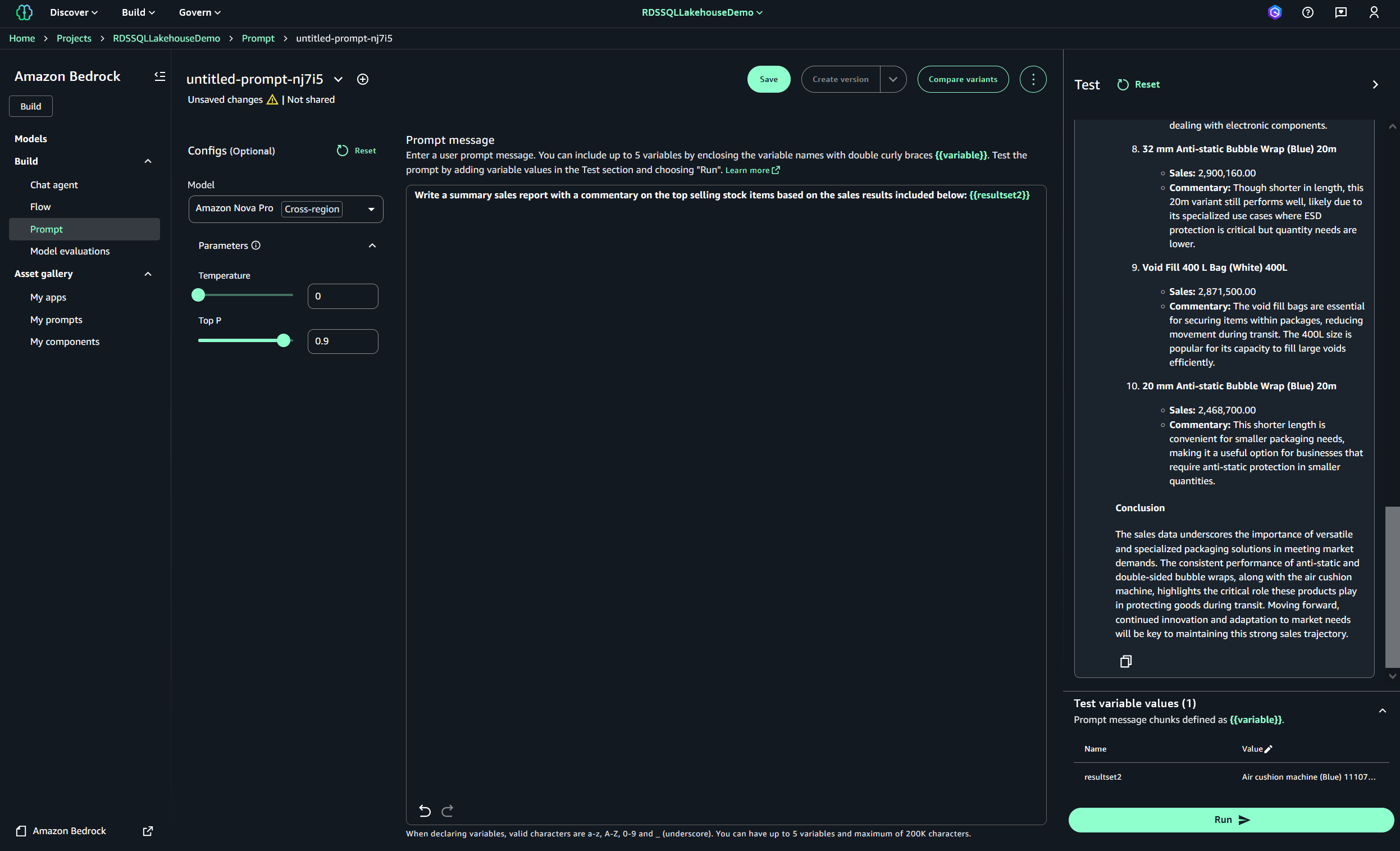

You should see a report similar to the one in the following screenshot.

- Copy the report, enter it into your preferred text editor, and examine its contents.

Because these FMs are non-deterministic (they are all statistical models), the report generated in your environment might be slightly different. An interesting exercise is retrying the same prompt several times to see what the FM generates in each case.
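The prompt-variable step can be sketched in Python as follows: substitute the copied result set into the `{{resultset2}}` placeholder, then send the final prompt to the model with the Amazon Bedrock Converse API. The default model ID is an assumption (some Regions require an inference profile ID instead), the helper names are hypothetical, and the CSV rows are made-up sample values rather than data from the wwi database.

```python
PROMPT_TEMPLATE = (
    "Write a summary sales report with a commentary on the top selling stock "
    "items based on the sales results included below: {{resultset2}}"
)


def fill_prompt(template, variables):
    """Replace each {{name}} placeholder with its value."""
    prompt = template
    for name, value in variables.items():
        prompt = prompt.replace("{{" + name + "}}", value)
    return prompt


def generate_report(bedrock_runtime, prompt, model_id="amazon.nova-pro-v1:0"):
    """Send the prompt through the Converse API and return the reply text.

    bedrock_runtime is a boto3 "bedrock-runtime" client supplied by the caller.
    """
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return response["output"]["message"]["content"][0]["text"]


# Made-up sample rows standing in for the downloaded CSV result set.
sample_csv = "StockItemName,TotalSales\nExample item A,100\nExample item B,50"
print(fill_prompt(PROMPT_TEMPLATE, {"resultset2": sample_csv}))
# With model access and credentials configured, you could then call, for example:
#   import boto3
#   report = generate_report(boto3.client("bedrock-runtime"), prompt)
```

Because the result set is passed as plain text inside the prompt, very large result sets can exceed the model's context window. Limiting the query to the top rows, as this walkthrough does, keeps the prompt small.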

Clean up

To avoid incurring future charges and remove the components you created while testing this use case, complete the following steps:

- On the Amazon RDS console, choose Databases in the navigation pane.

- Select the database you set up, and on the Actions menu, choose Delete.

- On the SageMaker console, choose View existing domains and choose your domain.

- Choose Delete.

- On the AWS CloudFormation console, select the SageMakerUnifiedStudio-VPC stack, then choose Delete to delete the components created by the stack.

- Confirm the deletion request and follow the required prompts.
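Part of this cleanup can be scripted. The following Python sketch deletes the RDS instance, waits for that deletion to finish, and then deletes the CloudFormation stack, using boto3 clients that you pass in. It is a sketch under several assumptions: the instance identifier is hypothetical, no final snapshot is kept, and the SageMaker domain has already been deleted on the console. The stack deletion can fail while resources in the VPC are still in use.

```python
def clean_up(rds, cloudformation, db_instance_id, stack_name="SageMakerUnifiedStudio-VPC"):
    """Delete the demo database instance, then the VPC stack.

    rds and cloudformation are boto3 clients supplied by the caller.
    """
    # Assumption: this is a demo instance, so no final snapshot is retained.
    rds.delete_db_instance(
        DBInstanceIdentifier=db_instance_id,
        SkipFinalSnapshot=True,
        DeleteAutomatedBackups=True,
    )
    # Wait until the instance is gone so it no longer occupies the VPC subnets.
    rds.get_waiter("db_instance_deleted").wait(DBInstanceIdentifier=db_instance_id)
    cloudformation.delete_stack(StackName=stack_name)


# With credentials configured, you could call, for example:
#   import boto3
#   clean_up(boto3.client("rds"), boto3.client("cloudformation"), "<your-db-instance-id>")
```

Passing the clients in as arguments keeps the function free of hard-coded Regions or profiles and makes it straightforward to exercise with stub clients before pointing it at a real account.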

Conclusion

In this post, we demonstrated how to integrate Amazon SageMaker Lakehouse with Amazon RDS for SQL Server to build generative AI applications. Through practical examples, we explored how Retrieval Augmented Generation (RAG) can enhance foundation models, using Stability AI Stable Image Ultra 1.0 for image generation and Amazon Nova Pro for text generation. With minimal configuration, the solution gives you access to the data in your RDS for SQL Server database, so you can build AI-powered solutions on your existing data assets. We encourage you to try this integration in your own environment and explore how it can benefit your specific use cases. If you have any feedback or questions, please leave them in the comments section.