Containers

Testing network resilience of AWS Fargate workloads on Amazon ECS using AWS Fault Injection Service

Container-based applications are increasingly prevalent in modern cloud architectures, with Amazon Web Services (AWS) Fargate providing a serverless compute engine for containers that eliminates server management. To improve resiliency of these containerized applications, organizations need the ability to simulate network disruptions and partitioning scenarios, particularly in multi-Availability Zone (AZ) deployments. This capability is essential for validating the application behavior during network impairment and testing failover mechanisms.

Chaos Engineering is a best practice for testing application resilience. It involves intentionally introducing controlled disruptions to validate how applications handle real-world issues, such as elevated latency from dependencies or service events. AWS Fault Injection Service (AWS FIS) helps create controlled failure conditions so organizations can see how applications and disaster recovery procedures respond to disruptions. This proactive approach helps organizations uncover vulnerabilities before they impact users, improve recovery processes, and build more robust, fault-tolerant systems.

AWS users across industries have expressed specific needs for network chaos experiments. Financial services and media companies highlighted the need to test application resilience under varying network conditions, specifically requesting capabilities to inject latency between Fargate applications and their dependencies. Enterprise users in utilities and transportation sectors need the simulation of specific network faults such as packet loss and port blackholing to validate disaster recovery procedures. These controlled experiments allow teams to build confidence that their systems can withstand unexpected disruptions and maintain service continuity even under challenging network conditions.

Network actions for Fargate

In December 2024, AWS FIS expanded its capabilities for Amazon Elastic Container Service (Amazon ECS), introducing network fault injection experiments for Fargate tasks. This enhancement complements the existing resource-level actions (CPU stress, I/O stress, and kill process) with three new network-focused actions: network latency, network blackhole, and network packet loss.

With a total of six action types for both Amazon Elastic Compute Cloud (Amazon EC2) and Fargate launch types, AWS FIS enables more comprehensive chaos engineering experiments at the container level. AWS FIS simulates various network conditions and failures without needing code changes or more infrastructure, thereby empowering you to conduct thorough resilience testing of Amazon ECS on Fargate applications against a wider range of potential network issues.

This post demonstrates how these actions work and provides practical examples for implementing network resilience experiments in Amazon ECS on Fargate applications and their dependencies. The sample application demonstrated here helps you follow along and perform the experiments in your environment. It also shows how you can use AWS FIS to proactively identify and address potential weaknesses in Fargate applications, ultimately improving their resilience. The code for this demo is available at sample-network-faults-on-ECS-Fargate-with-FIS GitHub repository.

Sample application: demonstrating network resilience testing

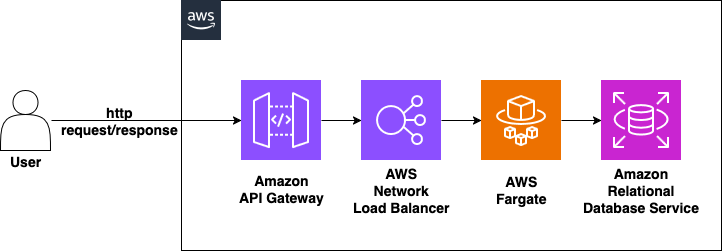

To demonstrate these new network fault injection capabilities, the demo uses an Amazon ECS Fargate application with a dependency on Amazon Relational Database Service (Amazon RDS). The application has a three-tier architecture that uses Amazon API Gateway serving as the entry point for all client requests, which are then routed through an internal Network Load Balancer (NLB) to a cluster of containerized applications running on Amazon ECS Fargate. The application tier interacts with an Amazon RDS MySQL database for data, completing the three-tier setup, as shown in the following figure.

Figure 1: Sample application architecture

Prerequisites

To deploy this sample application in your AWS account, you need the following prerequisites:

- Node.js 18 or later

- AWS Command Line Interface (AWS CLI) configured with appropriate credentials

- Docker installed

- AWS CDK CLI installed (

npm install -g aws-cdk)

Deploy the application with AWS CDK

- Clone the repository:

- Verify that you have the AWS CDK installed and bootstrapped:

- Install dependencies:

- Deploy the stack:

This provisions all necessary resources such as the ECS cluster, Fargate tasks, RDS database, API Gateway, and monitoring components.

Experiment planning and execution

To perform the chaos experiment, follow the steps outlined in the Principles of Chaos Engineering:

- Start by defining ‘steady state’ as some measurable output of the system that indicates normal behavior.

- Hypothesize that this steady state continues when failures are injected.

- Run the experiment using AWS FIS and inject failures into the application.

- Review application behavior and validate application exhibited resilience posture in the event of failures.

Walkthrough

In the next sections we walk through each step, starting with defining the application’s steady state behavior and the metrics used to measure it.

Establishing steady state of the application performance

To monitor the experiments effectively, it is imperative to understand the steady state behavior of the application under normal conditions. The sample application installed previously has a comprehensive Amazon CloudWatch dashboard that provides visibility into key metrics across the application stack. The dashboard includes charts tracking end-to-end request latency at the API Gateway and Amazon RDS levels. The metrics showing the response times between Amazon ECS Fargate tasks and the RDS database are particularly important for the experiment, because the network faults are injected.

Before you introduce network faults, you need to understand your application’s normal behavior. The sample environment includes a load generation script that creates synthetic traffic—100 requests per second for a period of ten minutes—simulating real-world usage patterns. Run this script to establish performance baselines before running chaos experiments.

- Install load generation dependencies:

- Set the AWS Region:

- Get the API Endpoint from the application stack and set it as the environment variable:

- Run the load test:

You should have the observability mechanism to monitor the application’s behavior through the perspectives of the users as well as internal infrastructure. Under normal load conditions, the following dashboard shows consistent patterns: API Gateway latency is between 29 and 33 ms and database query latency (time taken by Amazon RDS) between 8 and 11 ms. During the same time window, end-to-end p99 latency as observed by the user is <236 ms, providing a responsive experience for users. Load generator maintains this steady state by sending 100 requests per second, and the application handles this load efficiently with no degradation in performance as seen in the following CloudWatch dashboard.

Figure 2: CloudWatch dashboard—API Gateway steady state latency

Figure 3: CloudWatch dashboard—RDS database query steady state latency

Figure 4: Application user view—steady state average http response time

Defining hypothesis

In the three-tier application, network latency between Amazon ECS Fargate tasks and RDS database is a common scenario that must be handled gracefully.

Hypothesis: When AWS FIS injects a 200 ms network latency between the Fargate tasks and RDS database, the application successfully completes all database transactions without errors, with end-to-end response times less than 1.5 seconds, even under sustained load of 100 requests per second.

Experiment: simulating network latency with AWS FIS

Use the AWS FIS new network latency action aws:ecs:task-network-latency to inject a 200 ms delay specifically in the network path between Fargate tasks and the RDS database. Running this experiment for ten minutes allows you to observe how your application responds to increased database response times and validate its resilience to network degradation.

Open the NetworkLatencyExperiment template by choosing Experiment templates from the left navigation pane in the AWS FIS console, and choose Actions, then Update experiment template. Choose Edit in the Specify actions and target section. In Actions and targets section, choose Edit next to the action.

- Verify the Name is set as

NetworkLatencyas configured in the sample app. - Review the Description and modify if needed.

- Confirm the Action type is ECS with aws:ecs:task-network-latency chosen.

- For Action parameters, verify the following, as shown in the following figure:

- delayMilliseconds, is set as 200 ms.

- Duration, is set to 10 Minutes

- For Sources, confirm that the endpoint name of the RDS cluster is entered and Use ECS fault injection endpoints is toggled “ON“

Figure 5: AWS FIS experiment template—Edit action

In the Targets section, choose Edit to edit aws:ecs:task:

- Verify that Resource type is set to aws:ecs:task.

- Confirm that Target method is Resource IDs.

- Under Resource IDs, review the chosen tasks and modify if needed by using the search function to find specific Amazon ECS tasks for the experiment.

- Confirm that Selection Scope is set to All, which means running the action on all targets, as shown in the following figure.

Figure 6: AWS FIS experiment template—Edit target

In Service Access, review the AWS Identity Access and Management (IAM) role selection. The sample application has created an appropriate IAM role starting with NetworkFaultsExperimentStack-FisRole that follows the least privilege principle, granting AWS FIS only the permissions needed to perform the experiment actions on Amazon ECS tasks. Verify if this role is chosen from the dropdown menu.

When implementing in your own applications, enable the Amazon ECS Fault Injection APIs by setting the enableFaultInjection field in the Amazon ECS task definition. When using the aws:ecs:task-network-blackhole-port,aws:ecs:task-network-latency, or aws:ecs:task-network-packet-loss actions on Fargate tasks, set useEcsFaultInjectionEndpoints to true and pidMode to task.

Run experiment

Navigate to the AWS FIS console and choose the NetworkLatencyExperiment experiment template. Choose Start experiment. Record information about this experiment by adding a tag, Name and a meaningful value such as ECS_task_network_latency_MMDDYYYY-1. When you have started the experiment, confirm that the State changed to Running.

Review results

In this section we can examine the results of the network fault injection experiment, as shown in the following figures. As soon as AWS FIS initiates the experiment, the CloudWatch dashboard provides insights into the application’s behavior under stress. Under normal operations, the application demonstrates swift database interactions, with Amazon RDS queries completing in under 11 ms. The end-to-end request flow, measured at the API Gateway, typically processed in under 28 ms. However, when FIS injects network latency between the Fargate tasks and RDS database, a shift in performance metrics becomes apparent. Database query response times increase to more than 400 ms throughout the duration of the experiment, which comes down when latency ingestion stops. This ripple effect cascades through the architecture, causing the overall request latency at API Gateway to climb to 1044 ms.

Figure 7: CloudWatch dashboard—API Gateway latency after experiment

Figure 8: CloudWatch dashboard—RDS database query latency after experiment

Figure 9: Application user view—average http response time after experiment

Although the latency increased significantly, the database continues to serve requests without interruption, and there’s no spike in error rates. Despite the Amazon RDS query response time jumping from 11 ms to 400 ms, the application maintains its operational integrity. The absence of errors indicates that the system’s timeout settings, connection pooling, and retry mechanisms are well-configured to handle such latency spikes, as shown in the following figure.

Figure 10: CloudWatch dashboard—Errors

The monitoring clearly shows the increased latency isolated specifically to the targeted Fargate-to-RDS communication path, while other system components maintain their normal performance characteristics. This experiment effectively validates several aspects of your application’s resilience. First, it confirms that your monitoring system successfully detects and alerts on increased latency at the specific tier where it occurs. Second, it helps you understand how delays in database communication impact the overall user experience. Third, it provides valuable data for tuning your timeout settings, retry mechanisms, and connection pooling configurations. Most importantly, it allows you to proactively identify and address potential bottlenecks before they affect production users.

The ability to conduct such precise, controlled experiments at the network level represents a significant advancement in chaos engineering capabilities for containerized applications. Whether validating application behavior during cloud migrations, testing disaster recovery procedures, or validating regulatory compliance, these new AWS FIS features provide the tools needed to build more resilient applications.

Other Amazon ECS Fargate network fault experiments

AWS FIS also supports packet loss and network blackhole testing following a similar approach.

Using the same three-tier application setup, you can execute packet loss experiments (aws:ecs:task-network-packet-loss) to simulate degraded network conditions by specifying a percentage of packets to be dropped. This helps validate how your application handles partial network failures and tests retry mechanisms.

Similarly, network blackhole experiments (aws:ecs:task-network-blackhole) can be configured to completely block inbound or outbound traffic to specific ports, simulating more severe network partition scenarios. These experiments use identical prerequisites, monitoring setup, and CloudWatch dashboards as the latency example. The key difference lies in the experiment configuration where you specify either a packet loss percentage (for example, 30% packet loss) or port numbers to blackhole.

Both actions can provide valuable insights into your application’s resilience against different types of network disruptions. We encourage you to adapt this approach to explore these other fault types, adjusting the success criteria and monitoring metrics according to your specific resilience requirements.

Supported destinations and use cases

AWS FIS provides flexible network fault injection capabilities for Amazon ECS tasks, allowing you to test application resilience against various network scenarios. Whether you’re testing communication with specific microservices using IP addresses, validating domain-based dependencies, or simulating disruptions with AWS managed services such as Amazon DynamoDB and Amazon S3, you can precisely target your experiments. This granular control enables you to simulate real-world network issues while maintaining safe execution through automatic exclusion of essential paths like AWS Systems Manager connectivity.

Cleaning up

To avoid unnecessary charges, delete the resources by running the following:

Conclusion

AWS FIS network fault injection actions for Amazon ECS Fargate provide powerful, native capability to validate resilience of containerized applications against various network disruptions. These new features enable comprehensive chaos engineering practices without needing code modifications or complex infrastructure changes. Implementing controlled experiments as demonstrated in this post allows organizations to build truly resilient systems that can withstand unexpected network disruptions. These experiments help teams identify potential failure points before they impact users, validate recovery mechanisms, and develop greater confidence in their application’s ability to maintain service during adverse network conditions.

To deepen your understanding of chaos engineering principles and practice, we encourage you to explore the AWS FIS Workshop. The workshop now includes a dedicated section for the Amazon ECS Fargate network fault experiments, allowing you to gain practical experience in validating the resilience of containerized applications.

About the authors

Syed Masudullah Sadullah is a Principal Technical Account Manager at Amazon Web Services, based in Columbus, Ohio. With two decades in technology consulting, he specializes in helping customers architect secure, scalable and resilient solutions on AWS. A technology enthusiast, he develops innovative solutions to GenAI challenges and combines hands-on technical knowledge to drive transformative outcomes for customers.

Syed Masudullah Sadullah is a Principal Technical Account Manager at Amazon Web Services, based in Columbus, Ohio. With two decades in technology consulting, he specializes in helping customers architect secure, scalable and resilient solutions on AWS. A technology enthusiast, he develops innovative solutions to GenAI challenges and combines hands-on technical knowledge to drive transformative outcomes for customers.

Sunil Govindankutty is a Principal TAM at AWS, where he helps customers operate optimized and secure cloud workloads. With over 20 years of experience in software development and architecture, he helps enterprises build resilient systems and integrate AI-powered assistants into modern development workflows. Outside of work, Sunil enjoys family time, chess, gardening, and solving mysteries both in books and cloud architectures.

Sunil Govindankutty is a Principal TAM at AWS, where he helps customers operate optimized and secure cloud workloads. With over 20 years of experience in software development and architecture, he helps enterprises build resilient systems and integrate AI-powered assistants into modern development workflows. Outside of work, Sunil enjoys family time, chess, gardening, and solving mysteries both in books and cloud architectures.