Containers

Category: Technical How-to

Streamline your containerized CI/CD with GitLab Runners and Amazon EKS Auto Mode

In this post, we demonstrate how running GitLab Runners on Amazon EKS Auto Mode with Amazon Elastic Compute Cloud (Amazon EC2) Spot Instances can deliver enterprise-scale CI/CD while reducing costs by up to 90% compared to traditional deployment models. This approach both lowers operational expenses and provides resilient, scalable pipeline execution.

Amazon EKS introduces Provisioned Control Plane

Amazon EKS introduces Provisioned Control Plane, a new capability that allows you to pre-allocate control plane capacity for predictable, high-performance Kubernetes operations at scale. In this post, we explore how this enhanced option complements the Standard Control Plane by offering multiple scaling tiers (XL, 2XL, 4XL) with well-defined performance characteristics for API request concurrency, pod scheduling rates, and cluster database size—enabling you to handle demanding workloads like ultra-scale AI training, high-performance computing, and mission-critical applications with confidence.

Amazon EKS Blueprints for CDK: Now supporting Amazon EKS Auto Mode

Amazon EKS Blueprints for CDK now supports EKS Auto Mode, enabling developers to deploy fully managed Kubernetes clusters with minimal configuration while AWS automatically handles infrastructure provisioning, compute scaling, and core add-on management. In this post, we explore how this integration combines EKS Blueprints’ declarative infrastructure-as-code approach with EKS Auto Mode’s hands-off cluster operations. It provides three practical deployment patterns, ranging from basic clusters to specialized ARM-based and AI/ML workloads, that let teams focus on application development rather than infrastructure management.

Enhancing and monitoring network performance when running ML Inference on Amazon EKS

In this post, we explore how to enhance and monitor network performance for ML inference workloads running on Amazon EKS using the newly launched Container Network Observability feature. We demonstrate practical use cases through a sample Stable Diffusion image generation workload, showing how platform teams can visualize service communication, analyze traffic patterns, investigate latency issues, and identify network bottlenecks—ultimately improving metrics like inference latency and time to first token.

Introducing the fully managed Amazon EKS MCP Server (preview)

Learn how to manage your Amazon Elastic Kubernetes Service (Amazon EKS) clusters through simple conversations instead of complex kubectl commands. This post shows you how to use the new fully managed EKS Model Context Protocol (MCP) Server, now in preview, to deploy applications, troubleshoot issues, and upgrade clusters using natural language, with no deep Kubernetes expertise required. We’ll walk through real scenarios showing how conversational AI turns multi-step manual tasks into simple natural language requests.

Accelerate container troubleshooting with the fully managed Amazon ECS MCP server (preview)

Amazon ECS today launched a fully managed, remote Model Context Protocol (MCP) server in preview, enabling AI agents to provide deep contextual knowledge of ECS workflows, APIs, and best practices for more accurate guidance throughout your application lifecycle. In this post, we walk through how to streamline your container troubleshooting using the Amazon ECS MCP server, which offers intelligent AI-assisted inspection and diagnostics through natural language queries in CLI tools like Kiro, IDEs like Cline and Cursor, and directly within the Amazon ECS console through Amazon Q.

Streamline container image signatures with Amazon ECR managed signing

Container image security is critical for modern applications with the increasing adoption of containerized workloads. Organizations need reliable ways to verify the authenticity and integrity of their container images. Amazon Elastic Container Registry (Amazon ECR) now offers managed signing as a streamlined approach to automatically sign container images when they are pushed to the Amazon […]

Monitoring network performance on Amazon EKS using AWS Managed Open-Source Services

In this post, we demonstrate how to monitor network performance for Amazon EKS workloads using new advanced network observability features powered by Network Flow Monitor. We explore how to capture Kubernetes-enriched network metrics, export them to AWS Managed Open-Source services like Amazon Managed Service for Prometheus and Amazon Managed Grafana, and visualize critical performance indicators including throughput, packet drops, latency, and connection states across your containerized services.

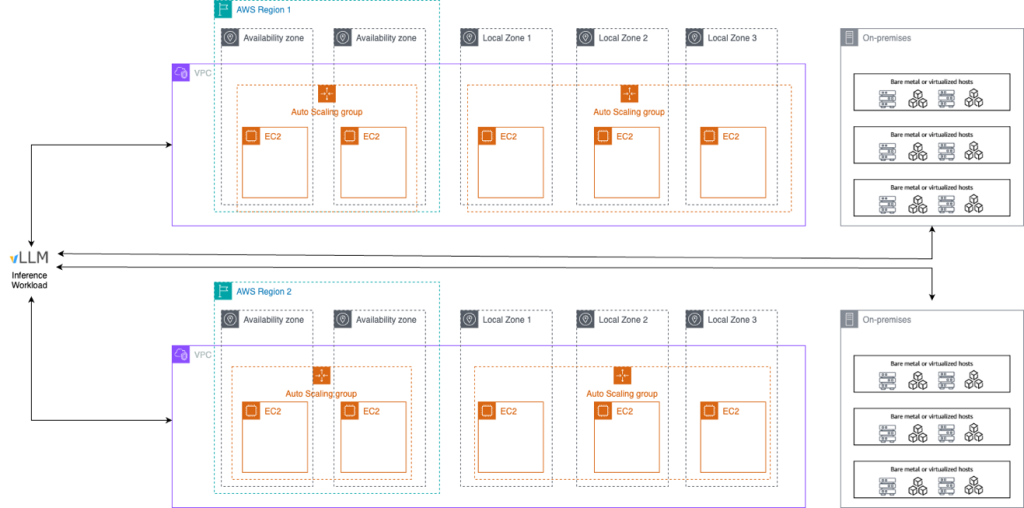

Extending GPU Fractionalization and Orchestration to the edge with NVIDIA Run:ai and Amazon EKS

In this post, we explore how AWS and NVIDIA Run:ai are extending GPU fractionalization and orchestration capabilities beyond traditional cloud regions to edge environments, including AWS Local Zones, Outposts, and EKS Hybrid Nodes. The collaboration addresses the growing demand for distributed AI/ML workloads that require efficient GPU resource management across geographically separated locations while maintaining consistent performance, compliance, and cost optimization.

Kubernetes Gateway API in action

In this post, we explore advanced traffic routing patterns with the Kubernetes Gateway API through a practical Calendar web application example, demonstrating how it streamlines and standardizes application connectivity and service mesh integration in Kubernetes. The post covers three key use cases: exposing applications to external clients through hostname-based routing, implementing canary deployments between microservices using gRPC traffic splitting, and controlling egress traffic to external services with security policies.