AWS Contact Center

Insights and learnings from Amazon Q in Connect web crawler integration

Human agents are critical components of any contact center, and it is essential for organizations to provide them with the right tools for success. By supporting agents effectively, companies not only improve the agent experience but also enhance the end-customer experience. To address this need, Amazon Connect offers Amazon Q in Connect, a generative AI assistant that provides real-time, personalized recommended responses and actions to help agents perform their roles more efficiently and effectively.

Amazon Q in Connect can integrate with knowledge bases through several integration methods. This post focuses on web crawler integration, including key implementation considerations and best practices. We explore how customers can use their website URLs as a knowledge source for Amazon Q in Connect.

Why web crawler integration?

Content fragmentation across websites forces agents to juggle multiple sources while helping customers. This slows service delivery and disrupts conversations, especially as companies expand their digital presence and customers demand faster support.

Amazon Q in Connect, when using a web crawler integration, automatically indexes content from multiple websites and support portals to create a comprehensive knowledge management solution.

Amazon Q in Connect gives agents instant access to product information through web crawling. It maintains links to original online sources for both agents and customers to reference. This integration saves organizations time by eliminating manual content extraction and updates when website content changes.

In this post, we walk through the phases of an Amazon Q in Connect web crawler deployment (planning, staged implementation, optimization, and implementation) and show how you can optimize each one.

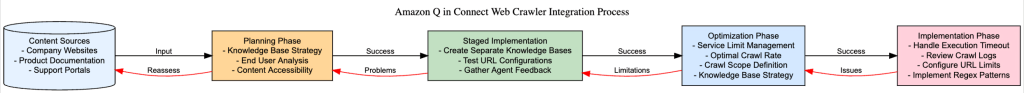

Diagram 1: Different phases to optimize web crawler deployment

Planning phase

You can find instructions for enabling Amazon Q in Connect and review prerequisites for web crawler integration in the Amazon Connect documentation.

Before implementing web crawler integration, consider these fundamental planning questions:

- Knowledge base strategy: Identify the department responsible for content management (e.g., Marketing, IT, or Customer experience team). Establish a clear content update workflow and ensure changes are properly synchronized with the contact center.

- End user analysis: Identify who will use the knowledge base: tier 1 agents handling general inquiries, specialized technical support agents, or a mix of both. The answer determines the scope and depth of the content you need to crawl.

- Content accessibility: Assess whether the knowledge sources are publicly accessible or require authentication, and ensure you have proper authorization to crawl the target websites.

Staged implementation

Start with a focused approach by creating separate knowledge bases for critical content areas. Test different URL configurations independently before combining them into a comprehensive solution. This method allows you to troubleshoot specific content sources in isolation and fine-tune crawl patterns for optimal results. As agents provide feedback on the quality and relevance of responses, gradually expand coverage to include additional content areas. This staged implementation not only accelerates the initial deployment but also helps you optimize each content source before integrating it into the primary knowledge base.

Optimization phase

Here are some practical considerations to optimize the web crawler integration strategy:

- Service limit: Each web crawler ingestion is limited to 25,000 files, so URL selection and content prioritization need a deliberate strategy. Define a content filtering mechanism that prioritizes business-critical documentation, frequently accessed knowledge articles, and high-value customer support content. Consider URL pattern matching, content relevancy scoring, and metadata filtering to make the best use of the file limit. If the content still exceeds the limit, plan the content architecture carefully and segment the knowledge base into multiple crawler configurations.

- Set up an optimal crawl rate: Coordinate with the infrastructure or IT team that owns the website to establish crawl rate thresholds that align with the website’s capacity and load balancing configuration. Calibrate the crawler’s concurrent request limits and crawl frequency so that they do not overwhelm the web servers; aggressive crawling can trigger 504 Gateway Timeout responses and result in incomplete knowledge ingestion. Start with a conservative rate limit, raise it progressively, and monitor web server response times with a web traffic monitoring tool to fine-tune the crawl parameters, so that content is indexed reliably while the website stays stable.

- Crawl scope definition: Rather than attempting to crawl entire websites, define precise starting points (seed URLs) for each content category across every domain that needs to be crawled.

  For example, each seed URL below points to a category index page rather than an individual product page, which lets the crawler discover all related content while minimizing the number of seed URLs required:

  {"url": "https://example.com/products/"},

  {"url": "https://example.com/documentation/"}

- Evaluate single vs. multiple knowledge base strategy: Decide whether to create one unified knowledge base or a separate one for each product line. Use a single knowledge base if customers regularly contact you about multiple products; create separate knowledge bases if customers typically contact you about only one product line. Be aware of the service limit on the number of knowledge bases allowed.
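One way to sanity-check crawl scope against the 25,000-file limit before configuring anything is to count how many known pages fall under each candidate seed URL. The following is a minimal sketch, assuming you already have a list of page URLs (for example, exported from a sitemap). The function names, the sample URLs, and the simplification that one URL equals one file are illustrative assumptions, not part of Amazon Q in Connect.

```python
from collections import Counter

# Per-crawler file limit cited in this post.
FILE_LIMIT = 25_000


def count_urls_per_seed(urls, seed_urls):
    """Count how many known URLs fall under each seed URL prefix.

    A URL is attributed to the longest seed prefix it starts with.
    URLs that match no seed are counted under the key None.
    """
    # Check longer prefixes first so nested seeds win over their parents.
    ordered = sorted(seed_urls, key=len, reverse=True)
    counts = Counter({seed: 0 for seed in seed_urls})
    for url in urls:
        match = next((s for s in ordered if url.startswith(s)), None)
        counts[match] += 1
    return counts


def seeds_over_limit(counts, limit=FILE_LIMIT):
    """Return the seeds whose estimated page count exceeds the limit."""
    return [s for s, n in counts.items() if s is not None and n > limit]


# Hypothetical sample data standing in for a sitemap export.
sample_urls = [
    "https://example.com/products/a",
    "https://example.com/products/b",
    "https://example.com/documentation/setup",
    "https://example.com/blog/news",
]
seeds = [
    "https://example.com/products/",
    "https://example.com/documentation/",
]

counts = count_urls_per_seed(sample_urls, seeds)
for seed, n in counts.items():
    print(seed, n)
```

A seed that shows up in `seeds_over_limit(counts)` is a candidate for narrower seed URLs or for its own crawler configuration. Pages counted under `None` are outside every seed and would not be reached from these starting points.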

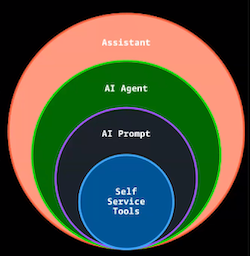

You can dynamically change knowledge bases during runtime by first overriding the knowledge base configuration with AI agents and then using the UpdateSession API to switch between AI agents.

Diagram 2: Components of a Q in Connect Assistant
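The runtime switch between AI agents could look roughly like the sketch below. It only builds the request parameters; the helper name and the placeholder IDs are hypothetical, and the parameter names (`assistantId`, `sessionId`, `aiAgentConfiguration`) reflect our understanding of the UpdateSession request shape, so verify them against the current API reference before relying on them.

```python
def build_update_session_request(assistant_id, session_id, ai_agent_id,
                                 ai_agent_type="ANSWER_RECOMMENDATION"):
    """Build keyword arguments for an UpdateSession call.

    The AI agent referenced by ai_agent_id is assumed to already carry
    the knowledge base override you want the session to use.
    """
    return {
        "assistantId": assistant_id,
        "sessionId": session_id,
        # Map the AI agent type to the agent that should serve this session.
        "aiAgentConfiguration": {
            ai_agent_type: {"aiAgentId": ai_agent_id},
        },
    }


# Placeholder identifiers; replace them with real values from your account.
request = build_update_session_request(
    assistant_id="ASSISTANT_ID_PLACEHOLDER",
    session_id="SESSION_ID_PLACEHOLDER",
    ai_agent_id="AI_AGENT_ID_PLACEHOLDER",
)
print(request)

# With AWS credentials configured, the request could then be sent with
# the AWS SDK for Python, for example:
#   import boto3
#   boto3.client("qconnect").update_session(**request)
```

Keeping the request construction separate from the SDK call makes it easy to unit test the routing logic (which AI agent a given contact should use) without touching a live session.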

Implementation phase

Here are some practical considerations for implementing the web crawler integration:

- Execution timeout: Each crawl session has a one-hour timeout, so crawl strategies need to be efficient: prioritize URLs, optimize crawl patterns, and, where needed, segment content into multiple crawl jobs so that ingestion completes within the window. A crawl job may report as “completed” when it reaches the timeout, so failed Amazon Q in Connect responses during testing can indicate incomplete content ingestion. In that scenario, open an AWS Support ticket to confirm whether the website was crawled successfully. To mitigate this, implement monitoring mechanisms and break large content sites into smaller, manageable crawl jobs that can complete within the one-hour window.

- Scope configuration and URL limits: The Amazon Connect UI allows you to add up to 10 URLs, while the UrlConfiguration API supports up to 100 seed URLs. For organizations with multiple websites or extensive content, this requires careful planning. Instead of listing every page individually, map the content structure first to identify key categories. Create targeted regex patterns for inclusion and exclusion, and use seed URLs that point to main category pages. For example, a pattern like .*domain\.com/support/.*\.pdf can replace dozens of individual PDF document URLs. Exclusion patterns help filter out marketing materials or outdated resources, so that the knowledge base contains only the most valuable content for agents.
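Before committing inclusion and exclusion patterns to a crawler configuration, it can help to try them against a handful of representative URLs. The sketch below uses the inclusion pattern from the example above; the exclusion patterns, the sample URLs, and the `is_in_scope` helper are hypothetical. It applies full-string matching with exclusions taking precedence, which is an assumption about filter behavior rather than a statement of how the crawler evaluates patterns.

```python
import re

# Inclusion pattern from the example above.
INCLUSION_PATTERNS = [r".*domain\.com/support/.*\.pdf"]
# Hypothetical exclusion patterns for marketing and outdated content.
EXCLUSION_PATTERNS = [r".*/marketing/.*", r".*/archive/.*"]


def is_in_scope(url, inclusions=INCLUSION_PATTERNS,
                exclusions=EXCLUSION_PATTERNS):
    """Return True if url matches an inclusion and no exclusion pattern.

    Exclusions are checked first, so they win over inclusions.
    """
    if any(re.fullmatch(p, url) for p in exclusions):
        return False
    return any(re.fullmatch(p, url) for p in inclusions)


# Hypothetical URLs used to exercise the patterns.
sample_urls = [
    "https://www.domain.com/support/guides/setup.pdf",
    "https://www.domain.com/support/marketing/brochure.pdf",
    "https://www.domain.com/support/archive/old.pdf",
    "https://www.domain.com/support/faq.html",
]

for url in sample_urls:
    print(is_in_scope(url), url)
```

In this sample, only the first URL is in scope: the second and third are removed by the exclusion patterns, and the fourth is not a PDF under the support path.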

Conclusion

To summarize, best practices for Amazon Q in Connect web crawler integration follow a systematic workflow through four main phases: planning, staged implementation, optimization, and implementation.

The planning phase focuses on establishing a knowledge base strategy, analyzing end-user needs, and ensuring content accessibility. This leads to staged implementation, where separate knowledge bases are created and tested before full integration. The optimization phase addresses technical considerations such as service limits, crawl rates, and knowledge base strategy decisions. Finally, the implementation phase handles execution timeouts and URL configuration limits.

The process is designed to be iterative, with feedback loops between phases. This structured approach helps organizations implement the Amazon Q in Connect web crawler while maintaining website stability and capturing the knowledge that contact center operations depend on.

For best results when using the web crawler integration option:

- Identify high-priority content and analyze URLs

- Set specific starting points for crawls

- Use regex filters to target relevant URLs

- Track Amazon Q in Connect’s performance via Amazon CloudWatch Logs

By implementing these strategies, organizations can successfully integrate knowledge from multiple websites into Amazon Q in Connect, providing agents with comprehensive access to product information and documentation while maintaining efficient crawl operations within service limits.

About the Authors

Vikas Prasad works as a Specialist Solutions Architect at Amazon Web Services (AWS) in Maryland, USA. He enables customers to achieve business outcomes through Customer Experience solutions and digital transformation. In his leisure time, he enjoys outdoor activities such as traveling, cycling, and trekking.

Ayush Mehta is a Technical Account Manager at Amazon Web Services supporting Automotive Manufacturing customers in Austin, Texas. He partners with customers to optimize their AWS infrastructure and drive digital transformation initiatives. Outside of work, he enjoys traveling, exploring new countries and food with his partner, and spending time with his two dogs.