AWS Compute Blog

Multi-rack and multiple logical AWS Outposts architecture considerations for resiliency

AWS Outposts rack offers the same Amazon Web Services (AWS) infrastructure, AWS services, APIs, and tools to virtually any on-premises data center or colocation space for a truly consistent hybrid experience. A logical Outpost (hereafter referred to as an Outpost) is a deployment of one or more physically connected Outposts racks managed as a single entity under one Amazon Resource Name (ARN). An Outpost provides a pool of AWS compute and storage capacity at one of your sites as a private extension of an Availability Zone (AZ) in an AWS Region. Several AWS services that support Outposts offer deployment options that improve your workload’s fault tolerance. However, certain Outposts configuration requirements must be met before you can use them.

In this post, we explore the architecture considerations that come into play when deciding between a single multi-rack logical Outpost and multiple logical Outposts to support your highly available workloads.

Amazon EC2 on AWS Outposts rack

The following sections cover Amazon Elastic Compute Cloud (Amazon EC2) on Outposts rack.

Multi-rack logical Outposts

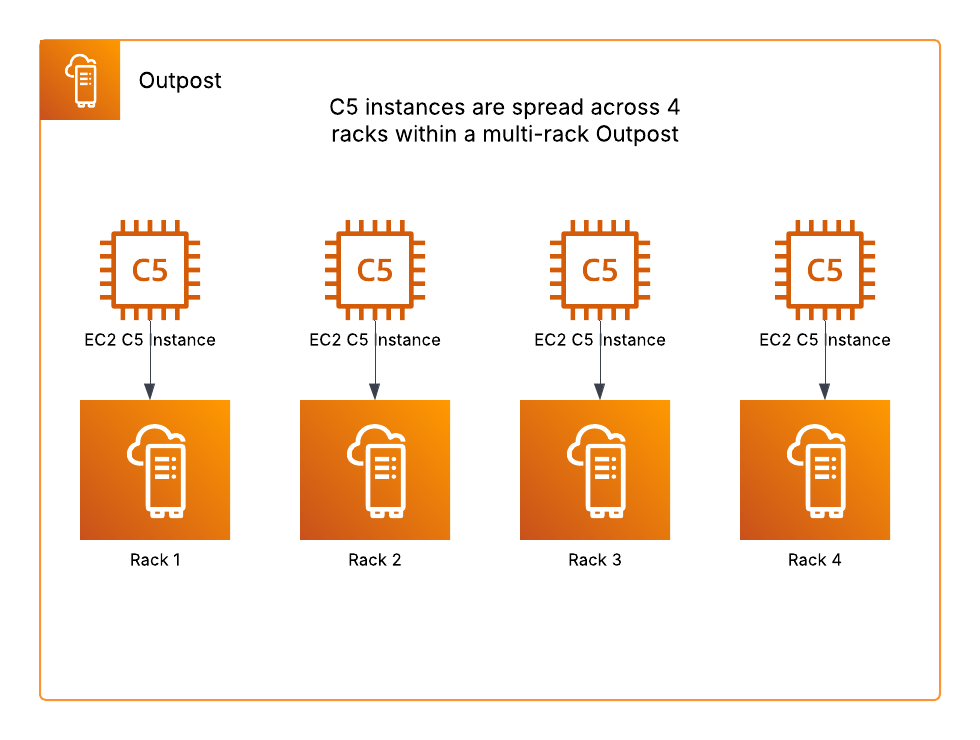

When using a multi-rack logical Outpost, you can use a rack level spread Amazon EC2 placement group. A rack level spread placement group can have as many partitions as you have racks in your Outpost deployment, which allows you to spread out your instances to improve the fault tolerance of your workloads. In the following example, we have C5 instances in an Amazon EC2 Auto Scaling group that uses a launch template specifying a rack level spread placement group. This multi-rack Outpost has four racks, so the instances are spread across the four racks as evenly as possible.
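The setup described above can be sketched in Python. The request parameters use the boto3 EC2 names for `create_placement_group` and for launch template data. The group name, instance size, rack labels, and the `spread_evenly` helper are hypothetical. The helper only models the "as evenly as possible" outcome and ignores capacity and any placement group limits; it is not the actual EC2 placement logic.

```python
from collections import Counter
from itertools import cycle, islice

# Request parameters for a rack level spread placement group, using the
# boto3 EC2 parameter names. The group name is a hypothetical placeholder.
placement_group_params = {
    "GroupName": "outpost-rack-spread",
    "Strategy": "spread",
    "SpreadLevel": "rack",
}

# Launch template data that points instances at the placement group.
# The C5 size is an assumption; the post only says "C5 instances".
launch_template_data = {
    "InstanceType": "c5.large",
    "Placement": {"GroupName": placement_group_params["GroupName"]},
}


def spread_evenly(instance_count, racks):
    """Assign instances to racks round-robin and count instances per rack.

    Illustrative model only: it mimics an even spread and does not
    reproduce the real EC2 placement algorithm.
    """
    assignments = islice(cycle(racks), instance_count)
    counts = Counter(assignments)
    return {rack: counts.get(rack, 0) for rack in racks}


if __name__ == "__main__":
    racks = ["rack-1", "rack-2", "rack-3", "rack-4"]
    # Ten instances over four racks: no rack holds more than one
    # instance above any other rack.
    print(spread_evenly(10, racks))
```

With boto3 installed and credentials configured, the first dictionary could be passed as `boto3.client("ec2").create_placement_group(**placement_group_params)`.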

This placement group strategy can make your workloads more resilient to rack or host failures, but it does not mitigate an AZ failure. EC2 instances on Outposts are statically stable to network disconnects. Therefore, workloads would continue running during an AZ failure, but mutating actions, such as launching new instances, would be unavailable. Read on to see how this strategy can be used with multiple Outposts to create a multi-AZ resilient architecture.

Multiple Outposts racks

If you have more than one logical Outpost in the same Region, we recommend connecting each Outpost to a different AZ. This would allow you to create multi-AZ resilient architectures, and when used in combination with features such as Intra-VPC communication between your Outposts, you can stretch an Amazon EC2 Auto Scaling group across two or more Outposts in the same VPC. If each Outpost is a single rack deployment, then this can be combined with a host level spread placement group specified in your instance launch template. A host level spread placement group can have as many partitions as you have hosts of that instance type in your Outpost, and would improve your workload’s resiliency to host failures.

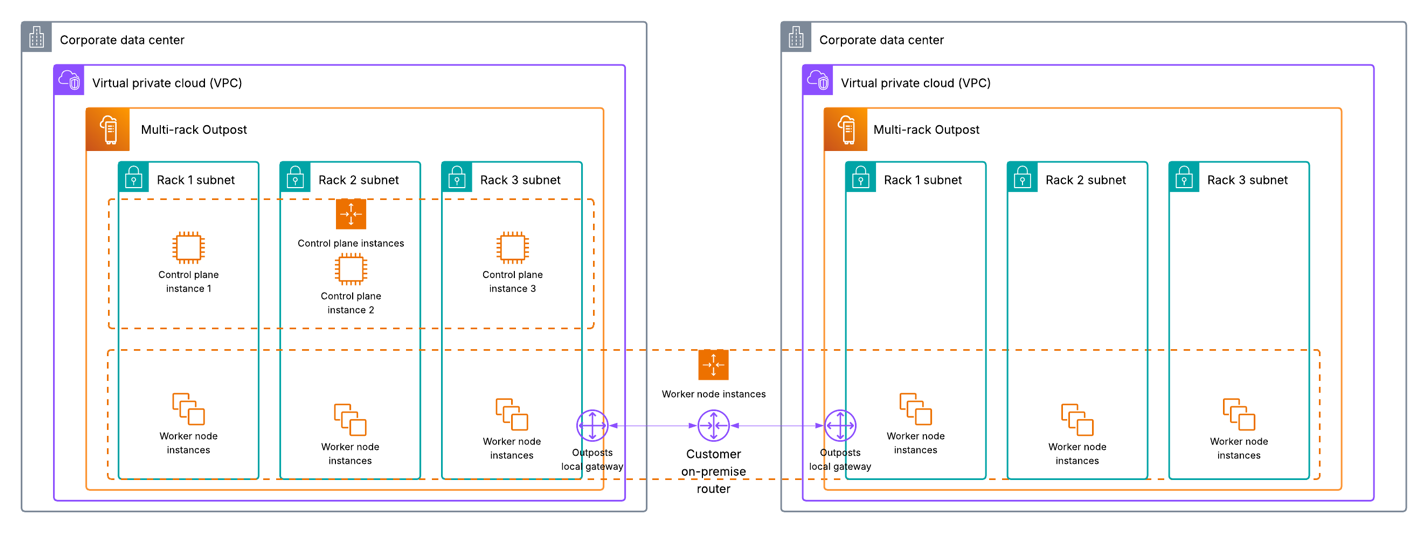

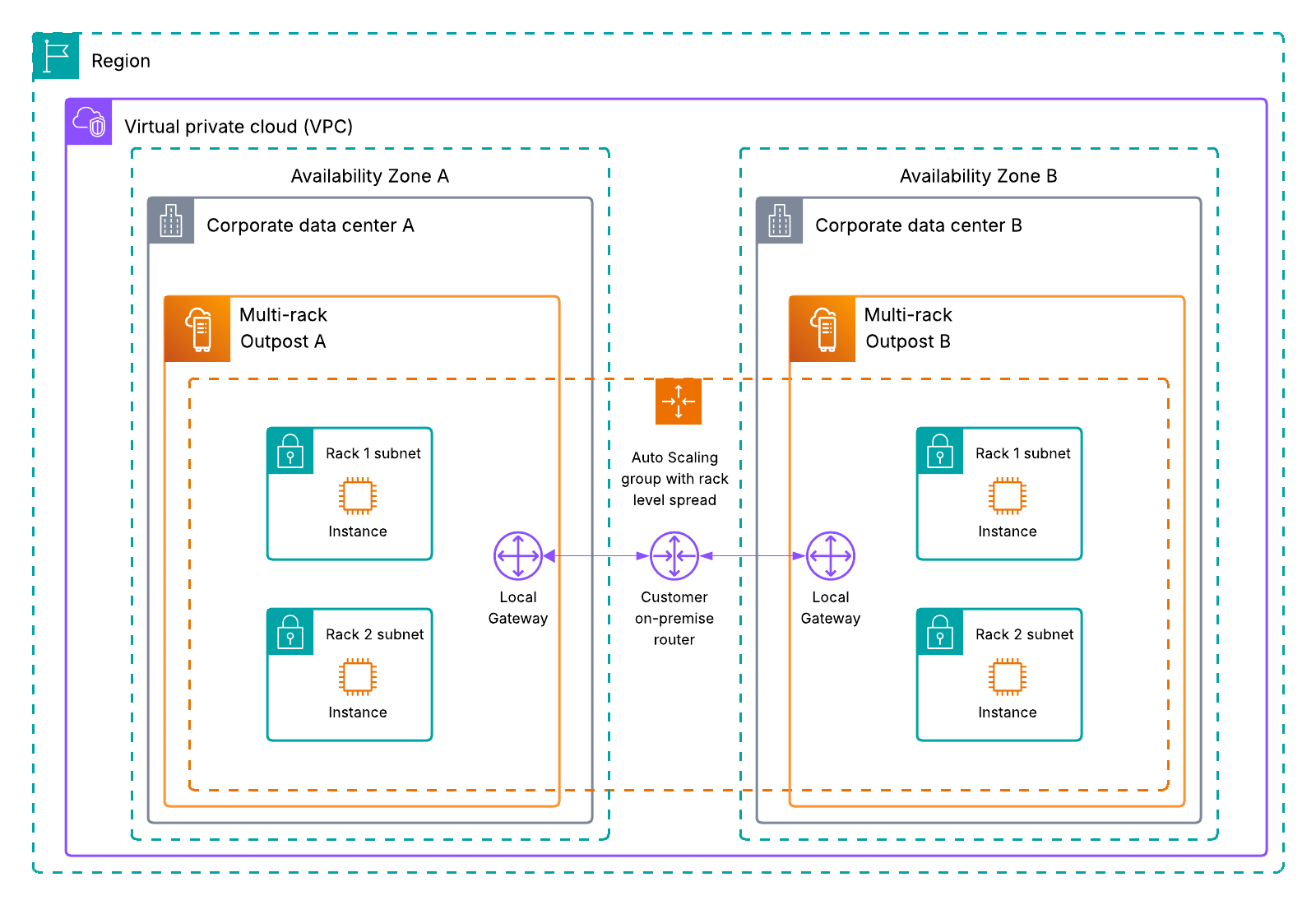

For the highest level of spread and resiliency, consider using multiple multi-rack logical Outposts. This would allow you to use rack level spread placement groups, and intra-VPC communication between Outposts, as shown in the following figure. Having more than one multi-rack Outpost allows you to create application architectures that are resilient toward hardware and AZ level failures by spreading your workload across as many fault domains as possible.

Figure 2: Intra-VPC communication between two multi-rack logical Outposts using an Amazon EC2 Auto Scaling group with rack level spread
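To make the fault-domain reasoning concrete, here is a small Python model of two multi-rack Outposts. The layout (two racks per Outpost, eight instances) and all identifiers are assumptions chosen for illustration, not values from the figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """Where one instance runs. All identifiers are hypothetical."""
    instance_id: str
    outpost: str  # logical Outpost, each connected to a different AZ
    rack: str     # rack within that Outpost


def survivors(placements, failed_outpost=None, failed_rack=None):
    """Return the instance IDs still running after a hardware failure.

    failed_outpost models the loss of a whole Outpost.
    failed_rack is an (outpost, rack) pair for a single rack failure.
    """
    alive = []
    for p in placements:
        if failed_outpost is not None and p.outpost == failed_outpost:
            continue
        if failed_rack is not None and (p.outpost, p.rack) == failed_rack:
            continue
        alive.append(p.instance_id)
    return alive


# Eight instances alternating between two Outposts, two racks each,
# so every (outpost, rack) pair holds exactly two instances.
placements = [
    Placement(f"i-{n}", f"outpost-{'ab'[n % 2]}", f"rack-{(n // 2) % 2 + 1}")
    for n in range(8)
]

if __name__ == "__main__":
    print(len(survivors(placements, failed_rack=("outpost-a", "rack-1"))))
    print(len(survivors(placements, failed_outpost="outpost-b")))
```

In this model a single rack failure removes a quarter of the fleet and the loss of an entire Outpost removes half, which is the benefit of spreading across both racks and Outposts.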

Amazon RDS on AWS Outposts rack

The following sections cover Amazon Relational Database Service (Amazon RDS) on Outposts rack.

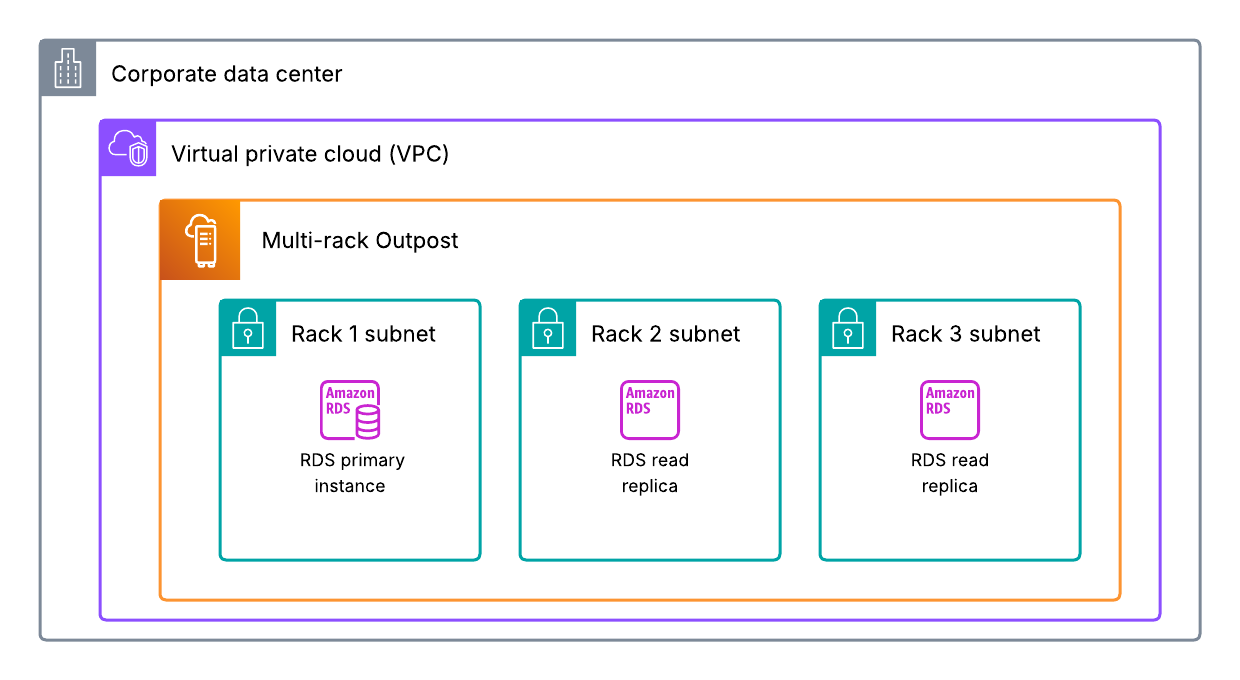

Multi-rack logical Outposts

Amazon RDS on Outposts rack supports read replicas, which use the MySQL and PostgreSQL database engines’ built-in asynchronous replication functionality to create a read replica from a source database instance. Read replicas on Amazon RDS on Outposts can be located on the same Outpost or another Outpost in the same VPC as the source database instance, as shown in the following figure. You can use them to scale out beyond the capacity constraints of a single database instance for read-heavy database workloads. You can also use them to maintain a second copy of your database, which can be promoted in the event of a host failure to improve workload resiliency.

Promoting a read replica to primary must be manually initiated, and your DNS records must be updated to point to the new primary instance. Even so, this is a good option to improve database durability if you only have one logical Outpost. You can create multiple read replicas for a single database instance for added resiliency. On a single rack Outpost, a read replica improves your resiliency to host failures. With a multi-rack Outpost, you can also place your read replica on another rack within your Outpost.
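The manual promotion and DNS update described above could be scripted along these lines. This is a sketch under assumptions: it expects boto3 RDS and Route 53 clients to be passed in, and it assumes that your DNS record is a CNAME in a Route 53 hosted zone, which the post does not state. The function name, identifiers, and TTL are hypothetical.

```python
def promote_and_repoint(rds, route53, replica_id, hosted_zone_id,
                        record_name, ttl=60):
    """Promote a read replica, then point a CNAME record at it.

    rds and route53 are boto3 clients (or test doubles exposing the
    same methods). Returns the endpoint address of the promoted instance.
    """
    # 1. Manually initiate the promotion of the read replica.
    rds.promote_read_replica(DBInstanceIdentifier=replica_id)

    # 2. Wait for the instance to report "available". The instance can
    #    still report "available" for a moment before the promotion
    #    starts, so production code would need a more careful check.
    waiter = rds.get_waiter("db_instance_available")
    waiter.wait(DBInstanceIdentifier=replica_id)

    # 3. Look up the endpoint of the newly promoted instance.
    described = rds.describe_db_instances(DBInstanceIdentifier=replica_id)
    endpoint = described["DBInstances"][0]["Endpoint"]["Address"]

    # 4. Update DNS so that clients reach the new primary instance.
    #    Assumes the application connects through a CNAME record.
    route53.change_resource_record_sets(
        HostedZoneId=hosted_zone_id,
        ChangeBatch={
            "Changes": [{
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "CNAME",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": endpoint}],
                },
            }]
        },
    )
    return endpoint
```

A call might look like `promote_and_repoint(boto3.client("rds"), boto3.client("route53"), "my-replica", "Z0EXAMPLE", "db.example.internal")`, where every argument value is a placeholder.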

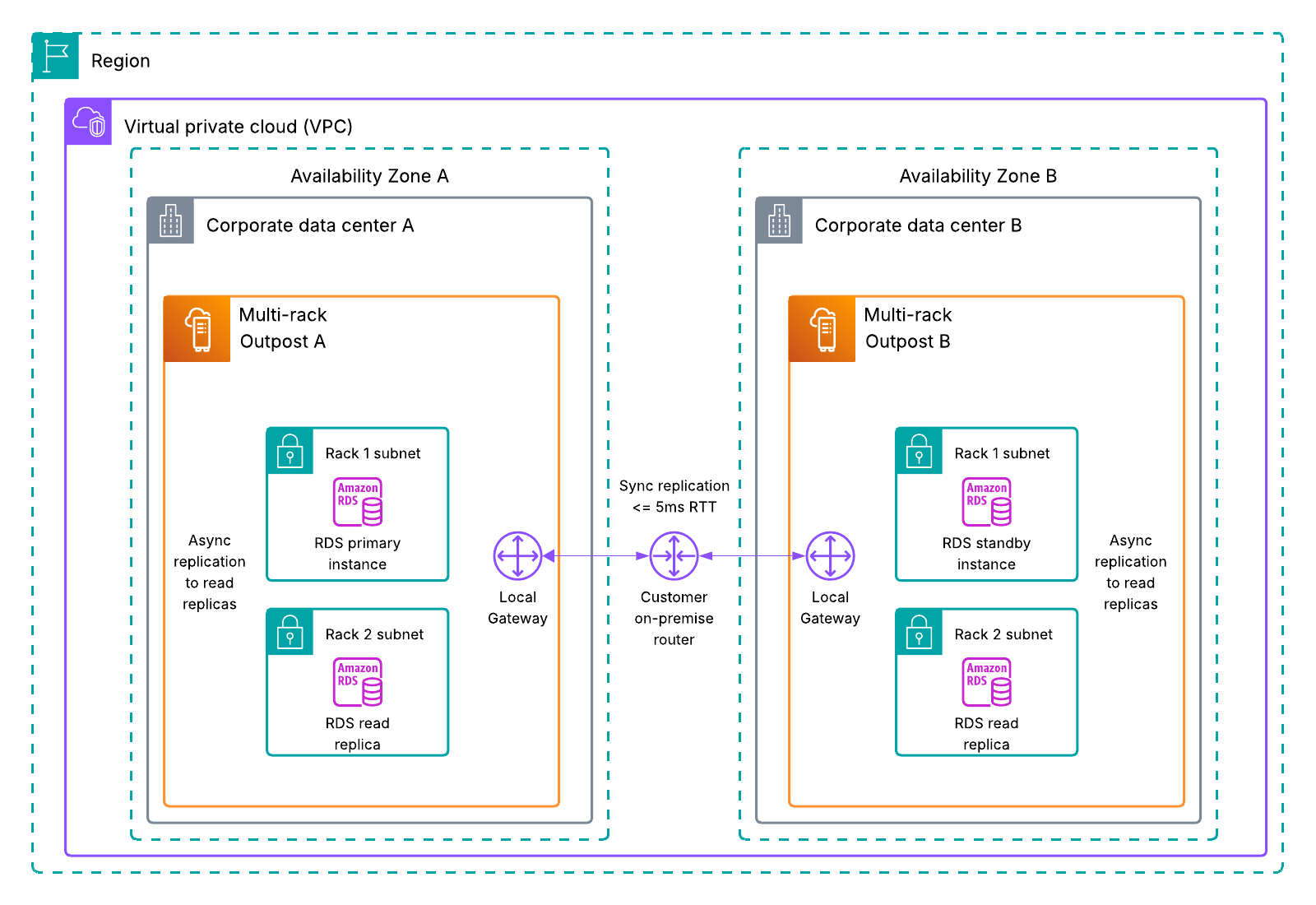

Multiple Outposts racks

Multi-AZ Amazon RDS deployments are supported on Outposts rack for MySQL and PostgreSQL database instances, as shown in the following figure. Using your Outposts Local Gateway and synchronous data replication, Amazon RDS creates a primary database instance on one Outpost, and maintains a standby database instance on a different Outpost. Failover to a multi-AZ Amazon RDS standby instance is automatic, and the DNS records are also automatically updated as part of the failover process. Using this deployment option protects you from AZ, host, and Outpost failures. You can also use multi-AZ Amazon RDS in combination with read replicas spread across different hosts on the same rack, or across multiple racks if using two multi-rack Outposts, to provide more database durability.

Amazon EKS on Outposts rack

The following sections cover Amazon Elastic Kubernetes Service (Amazon EKS) on Outposts rack.

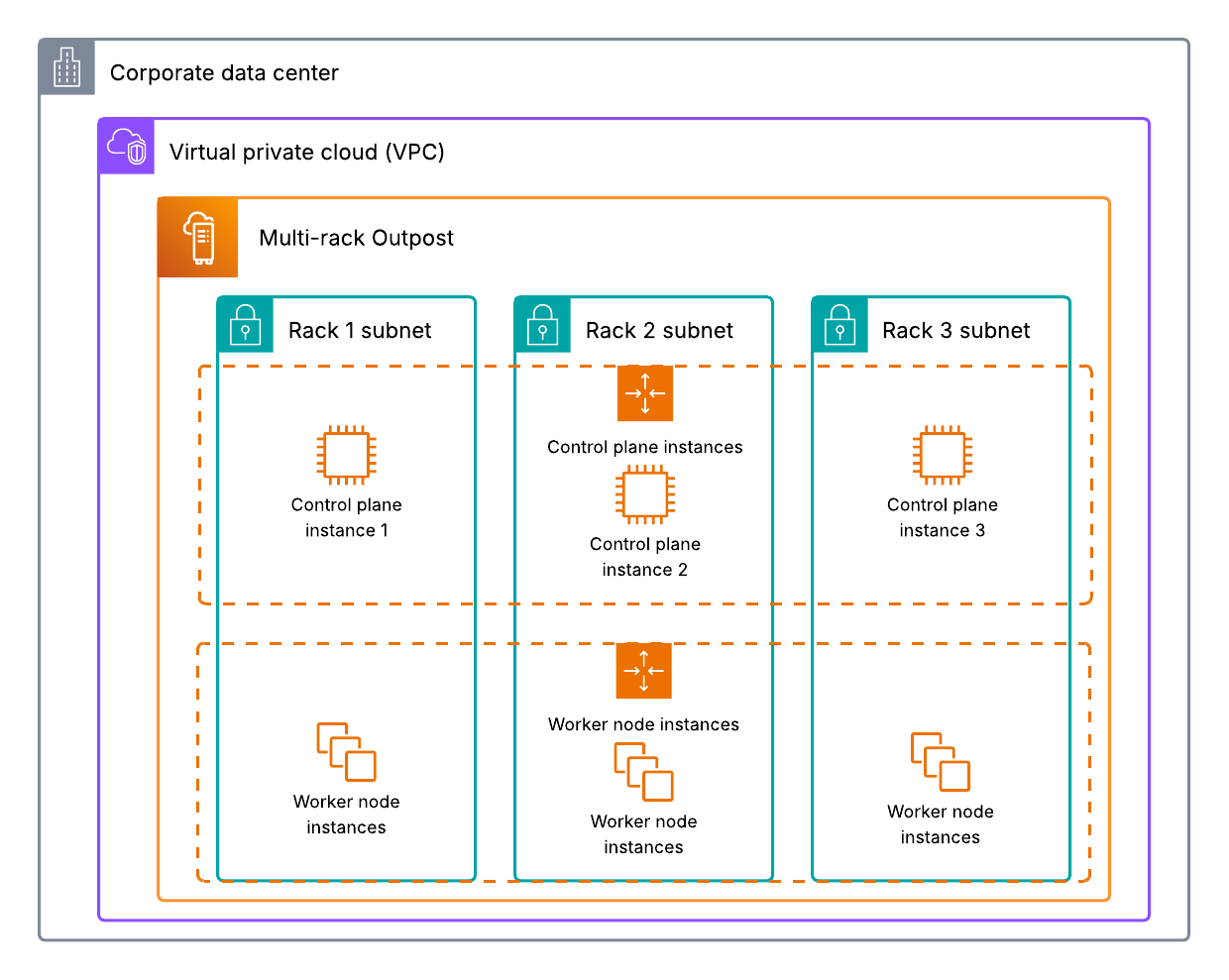

Multi-rack logical Outposts

Outposts rack supports two Amazon EKS deployment methods: EKS extended cluster, and EKS local cluster, as shown in the following figure. Go to our documentation for help deciding which method is right for your workload. Using the rack level placement group strategy discussed earlier in this post allows you to spread your EKS instances (worker and control plane depending on the deployment model used) across multiple racks within your Outpost. Amazon EKS control plane instances are automatically replaced in the event of an instance, host, or rack failure, and self-managed worker node instances are typically placed in an Amazon EC2 Auto Scaling group. Therefore, when they’re used with a rack level spread placement group, you can increase your Amazon EKS resiliency and use automation to handle failures.

Multiple Outposts racks

When using multiple Outposts racks, you’re unable to spread EKS control plane instances across two disparate Outposts. Go to Deploy an Amazon EKS cluster across AWS Outposts with Intra-VPC communication for more information on how to stretch an EKS extended cluster across multiple Outposts racks. If EKS local cluster is a requirement for your workload, you could use an external load balancer and deploy one instance of EKS local cluster on each Outpost in an active/active or active/passive configuration, and use the load balancer to direct incoming traffic to each respective EKS cluster. If your EKS cluster is using persistent storage, then you should consider whether each cluster needs access to the other cluster’s data, and use centralized storage or replication if needed.
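The difference between the active/active and active/passive configurations can be illustrated with a small Python sketch of the decision an external load balancer makes. The `pick_targets` function, the cluster records, and the endpoint URLs are hypothetical and do not correspond to any particular load balancer product.

```python
def pick_targets(clusters, mode="active/active"):
    """Return the cluster endpoints that should receive traffic.

    clusters is a priority-ordered list of dicts with "endpoint" and
    "healthy" keys, one per EKS local cluster (one cluster per Outpost).
    """
    healthy = [c["endpoint"] for c in clusters if c["healthy"]]
    if mode == "active/active":
        # Balance traffic across every healthy cluster.
        return healthy
    if mode == "active/passive":
        # Send traffic only to the highest-priority healthy cluster.
        return healthy[:1]
    raise ValueError(f"unknown mode: {mode!r}")


# Hypothetical endpoints, one EKS local cluster per Outpost.
clusters = [
    {"endpoint": "https://apps.outpost-a.example.internal", "healthy": True},
    {"endpoint": "https://apps.outpost-b.example.internal", "healthy": True},
]

if __name__ == "__main__":
    print(pick_targets(clusters, "active/active"))
    print(pick_targets(clusters, "active/passive"))
```

In the active/passive case, marking the first cluster unhealthy makes the function return the second cluster's endpoint, which models failover to the standby Outpost.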

Alternatively, if you are using EKS local cluster with two single rack Outposts, then you can also choose to only spread your EKS worker node instances across both of your Outposts. Furthermore, you can use host level spread on your primary Outpost to provide host level resiliency for your control plane instances. This would provide some added durability in the event of a host failure, and you could withstand the failure of your secondary Outpost that is only running some of your worker node instances. If you have two multi-rack Outposts, even though you couldn’t spread your control plane instances across Outposts, you can still use a rack level spread placement group to spread them across racks within your primary multi-rack Outpost. This would provide resiliency against instance, host, rack, and AZ level failures, and you could withstand the failure of your secondary multi-rack Outpost that isn’t running your EKS control plane instances as well.

Amazon S3 on Outposts rack

The following sections cover Amazon S3 on Outposts rack.

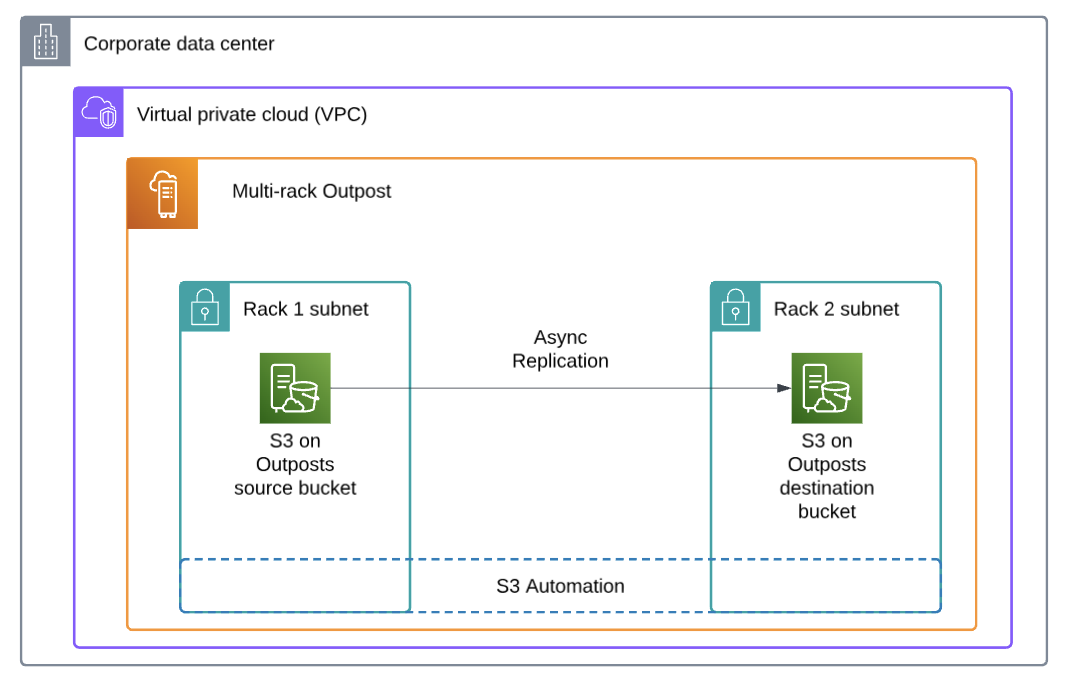

Multi-rack logical Outposts

Amazon S3 on Outposts supports object replication, either across distinct Outposts, or between buckets on the same Outpost to help meet data-residency needs. The Outpost or bucket you’re replicating to can be in the same AWS account, or a different account. If you have a multi-rack Outpost, then you can replicate your S3 objects to another bucket on the same Outpost to create a copy of your data locally for added resiliency.

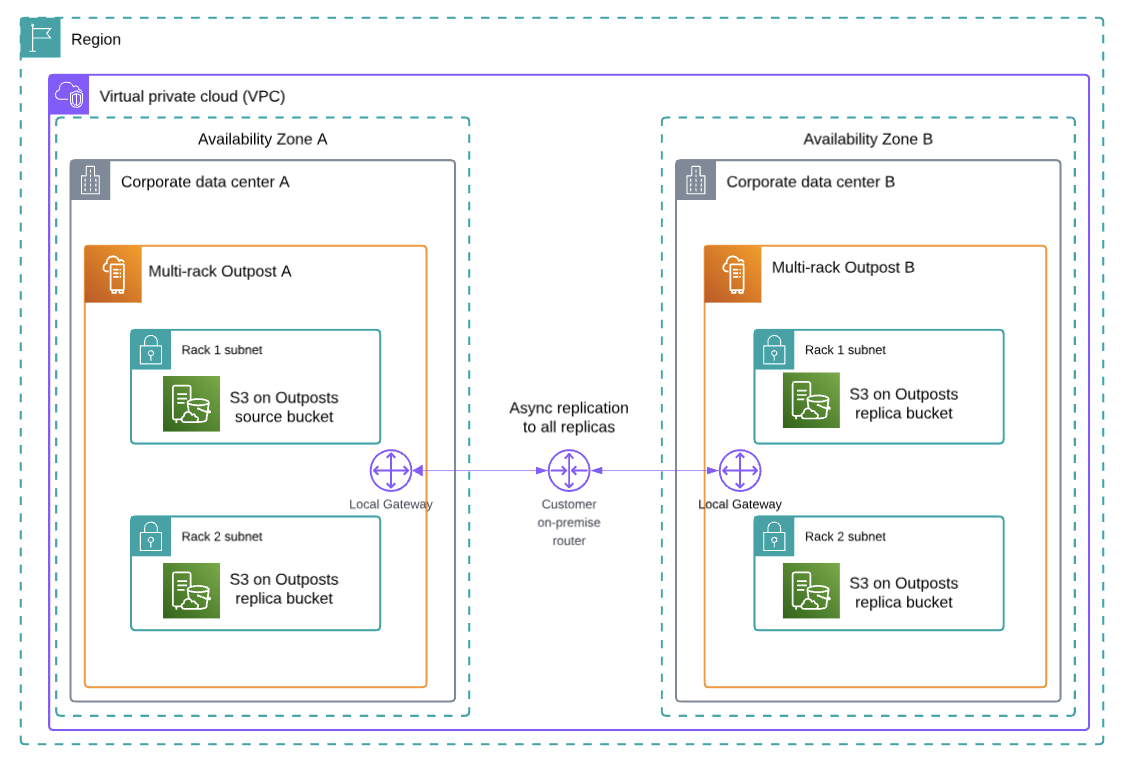

Multiple Outposts racks

If you have multiple Outposts, then you can replicate S3 objects between buckets on each Outpost, as shown in the following figure. Connect each Outpost to a unique AZ to create a multi-AZ resilient architecture, and store a copy of your data on each Outpost. You can combine this with Amazon S3 replication to a bucket on the same Outpost as well, and have multiple replicas managed through Amazon S3 automation for the highest availability. AWS DataSync also supports Amazon S3 on Outposts, and can be used to replicate S3 objects to the Region your Outpost is connected to if you want to store a copy of your data in the cloud, or use Amazon S3 in the Region for data tiering. Refer to Automate data synchronization between AWS Outposts racks and Amazon S3 with AWS DataSync for more information.

Further considerations

- When using multiple Outposts, we recommend connecting each Outpost to a unique Availability Zone (AZ) to use multi-AZ deployment options.

- Outposts are designed to be a connected service, and network outages could cause workflow disruptions. AWS can help you design for continued operations during network outages. We recommend creating a redundant service link connection to support workloads on Outposts with high availability requirements. Go to AWS Direct Connect Resiliency Recommendations for guidance on how to create a highly available service link connection through AWS Direct Connect. Also refer to Satellite Resiliency for AWS Outposts.

- Outposts have a finite amount of compute resources based on the physical configuration chosen, and the logical capacity configuration on your Outpost can be changed at any time using a capacity task. If the Amazon EC2 compute requirements for your workload change over time, then your Outposts capacity configuration can be updated to meet these requirements non-disruptively. Go to Dynamically reconfigure your AWS Outposts capacity using Capacity Tasks for more information.

Conclusion

This post explores the architecture options and considerations for deciding between a multi-rack Outpost, and using multiple Outposts to support your highly available workloads. For more information on how to design highly available architecture patterns for Outposts, go to the AWS Outposts High Availability Design and Architecture Considerations whitepaper. Reach out to your AWS account team, or fill out this form to learn more about Outposts and self-service capacity management.