AWS Business Intelligence Blog

Amazon QuickSight BIOps – Part 3: Assets deployment using APIs


As business intelligence (BI) ecosystems expand across teams, accounts, and environments, maintaining consistency, reliability, and governance becomes a serious challenge. Manual deployment of dashboards and datasets often leads to version mismatches, broken dependencies, and increased operational risk—especially in multi-account setups.

This series of posts focuses on business intelligence operations (BIOps) in Amazon QuickSight. In Part 1, we provided a no-code guide to version control and collaboration. In Part 2, we discussed how to use QuickSight APIs for automated version control and rollback, and continuous integration and delivery (CI/CD) pipelines for BI assets.

This post focuses on API-driven BIOps strategies in QuickSight, with a specific emphasis on the following topics:

- Cross-account and multi-environment asset deployment using APIs

- Conflict detection and resolution during dataset version updates

- Permission and configuration management across dev, QA, and production environments

We show how to use tools like the Assets-as-Bundle APIs, Describe-Definition APIs, and automation scripts to promote assets safely and programmatically, while minimizing manual effort and preventing downstream breakage.

Solution overview

Part 2 in this series covered version control, rollback, and CI/CD workflows using QuickSight APIs. This post builds on that foundation with practical techniques to scale deployment and enforce consistency across environments. In the following sections, we provide an overview of API-based BIOps solutions in QuickSight, highlighting how teams can use programmatic methods to automate the deployment and governance of BI assets. We explain how to deploy BI assets across AWS accounts and AWS Regions in QuickSight, using APIs for consistent, secure, and scalable promotion of dashboards, datasets, and related dependencies.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

- Access to the following AWS services:

  - AWS CloudFormation

  - Amazon QuickSight

  - Amazon Simple Storage Service (Amazon S3)

  - AWS Identity and Access Management (IAM), with access to use the QuickSight Template, Assets-as-Bundle, Create, Update, Delete, and Describe APIs

- Basic knowledge of Python (use Python 3.9 or later)

- Basic knowledge of SQL

- The latest version of the AWS Command Line Interface (AWS CLI) installed

- Boto3 installed

Additionally, we recommend reviewing Part 1 and Part 2 of this series before continuing.

Template APIs for asset deployment

In the post BIOps: Amazon QuickSight object migration and version control, we discussed how to deploy QuickSight assets using the template-based approach. When that post was written, templates were the primary mechanism for promoting and backing up dashboards across environments.

Let’s assume that we have two QuickSight accounts: development and production. Both accounts are configured to connect to valid data sources. The following diagram illustrates our architecture.

If you’re interested in the full implementation, you can refer to the original post. However, we no longer recommend using the template method for asset deployment. With the introduction of newer APIs—such as Assets-as-Bundle and Describe-Definition—BI teams now have more flexible, transparent, and version-control-friendly options for managing QuickSight assets.

Assets-as-Bundle APIs for batch asset deployment

The post Automate and accelerate your Amazon QuickSight asset deployments using the new APIs explains how to use the Assets-as-Bundle APIs to deploy QuickSight assets across AWS accounts or Regions. These APIs are designed for coordinated deployment of dashboards, analyses, datasets, and related dependencies.

The following diagram illustrates a sample workflow based on this approach.

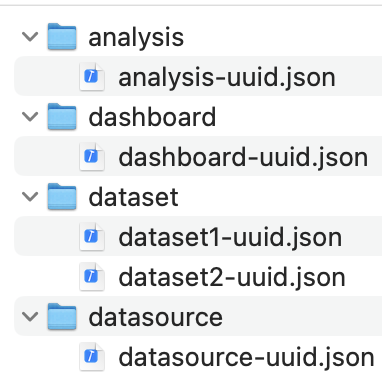

The asset bundle export API (StartAssetBundleExportJob, referred to in this post as ExportAssetsBundle) offers two export formats: QuickSight JSON or CloudFormation template. With the QuickSight JSON option, the assets are exported as a ZIP file that contains structured JSON files for each asset type, such as analyses, dashboards, datasets, and data sources. This ZIP package preserves the relationships between assets and lets teams inspect or modify individual JSON files. That makes it well suited to deployment automation, though less ideal for granular version control.
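Because the QuickSight JSON bundle is an ordinary ZIP archive, you can inspect it with the Python standard library before importing it. The following is a minimal sketch; the function names are hypothetical, and it assumes only that the first path component of each file in the archive identifies the asset type, so verify that layout against your own export.

```python
import io
import json
import zipfile
from collections import defaultdict


def summarize_bundle(bundle_bytes: bytes) -> dict:
    """Group the JSON files in an exported bundle by their top-level folder.

    Assumption: the first path component identifies the asset type.
    """
    groups = defaultdict(list)
    with zipfile.ZipFile(io.BytesIO(bundle_bytes)) as archive:
        for name in archive.namelist():
            if not name.endswith(".json"):
                continue  # skip directory entries and any non-JSON files
            folder = name.split("/", 1)[0] if "/" in name else "(root)"
            groups[folder].append(name)
    return {folder: sorted(files) for folder, files in sorted(groups.items())}


def load_asset(bundle_bytes: bytes, member: str) -> dict:
    """Parse one JSON asset file from the bundle so it can be reviewed or edited."""
    with zipfile.ZipFile(io.BytesIO(bundle_bytes)) as archive:
        return json.loads(archive.read(member))
```

A quick summary like this is often enough to confirm that a bundle contains the datasets and data sources you expect before you promote it.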

The contents follow the hierarchy shown in the following screenshot.

In this sample Jupyter notebook, we provide an end-to-end workflow demonstrating how to use the ExportAssetsBundle and ImportAssetsBundle APIs to deploy QuickSight assets from a source account to a target account using the QuickSight JSON option. This example serves as a foundational use case and can be extended into a full deployment pipeline, incorporating automation steps such as source control integration, version tagging, and environment-specific configuration.
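The core of that workflow can be sketched in a few Boto3 calls: start an export job, poll it until it finishes, download the bundle from the presigned DownloadUrl, then start and poll an import job in the target account. This is a minimal sketch under stated assumptions, not the notebook's code: the helper names, job ID scheme, polling interval, and timeout are hypothetical, and the functions expect already-configured QuickSight clients (for example, boto3.client("quicksight") with credentials for each account).

```python
import time
import urllib.request
import uuid

# Terminal JobStatus values for export and import jobs
EXPORT_DONE = {"SUCCESSFUL", "FAILED"}
IMPORT_DONE = {"SUCCESSFUL", "FAILED", "FAILED_ROLLBACK_COMPLETED", "FAILED_ROLLBACK_ERROR"}


def _wait(describe, terminal, poll_seconds, timeout):
    """Call describe() until the job reaches a terminal status or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        job = describe()
        if job["JobStatus"] in terminal:
            return job
        if time.monotonic() > deadline:
            raise TimeoutError(f"job still {job['JobStatus']} after {timeout} seconds")
        time.sleep(poll_seconds)


def export_bundle(qs_source, account_id, resource_arns, poll_seconds=5, timeout=900):
    """Export the given assets plus all dependencies as a QuickSight JSON bundle."""
    job_id = f"export-{uuid.uuid4()}"  # hypothetical naming scheme
    qs_source.start_asset_bundle_export_job(
        AwsAccountId=account_id,
        AssetBundleExportJobId=job_id,
        ResourceArns=resource_arns,
        IncludeAllDependencies=True,
        ExportFormat="QUICKSIGHT_JSON",
    )
    job = _wait(
        lambda: qs_source.describe_asset_bundle_export_job(
            AwsAccountId=account_id, AssetBundleExportJobId=job_id
        ),
        EXPORT_DONE, poll_seconds, timeout,
    )
    if job["JobStatus"] != "SUCCESSFUL":
        raise RuntimeError(f"export job {job_id} failed: {job.get('Errors')}")
    # DownloadUrl is a presigned URL for the exported bundle file
    with urllib.request.urlopen(job["DownloadUrl"]) as response:
        return response.read()


def import_bundle(qs_target, account_id, bundle_bytes, poll_seconds=5, timeout=900, **overrides):
    """Import a bundle into the target account.

    Extra keyword arguments (for example, OverrideParameters or
    OverridePermissions) are passed through to StartAssetBundleImportJob.
    """
    job_id = f"import-{uuid.uuid4()}"
    qs_target.start_asset_bundle_import_job(
        AwsAccountId=account_id,
        AssetBundleImportJobId=job_id,
        AssetBundleImportSource={"Body": bundle_bytes},
        FailureAction="ROLLBACK",  # undo a partial import if the job fails
        **overrides,
    )
    job = _wait(
        lambda: qs_target.describe_asset_bundle_import_job(
            AwsAccountId=account_id, AssetBundleImportJobId=job_id
        ),
        IMPORT_DONE, poll_seconds, timeout,
    )
    if job["JobStatus"] != "SUCCESSFUL":
        raise RuntimeError(f"import job {job_id} ended as {job['JobStatus']}: {job.get('Errors')}")
    return job
```

Passing the bundle inline through Body keeps the example short; for large bundles, uploading the file to Amazon S3 and passing AssetBundleImportSource={"S3Uri": ...} is the usual alternative.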

Managing permissions in asset deployment

By default, exported QuickSight assets don't retain user- or group-level permissions unless the IncludePermissions option is explicitly enabled for the export job. Even when permissions are included, they might not map correctly across accounts unless the user or group names match in both the source and target environments.

For proper access control, it's a best practice to explicitly update asset permissions after the import job is complete, using the appropriate permissions APIs, such as UpdateDashboardPermissions, UpdateAnalysisPermissions, UpdateDataSetPermissions, and UpdateDataSourcePermissions. This makes sure the correct users or groups in the target environment have access to the imported dashboards, analyses, datasets, or other assets.
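A minimal sketch of that post-import step for a dashboard, using Boto3's update_dashboard_permissions. The viewer action list is the set commonly granted to dashboard readers; the function name and the group ARN in the usage line are hypothetical.

```python
# Actions commonly granted to dashboard viewers (read-only access)
DASHBOARD_VIEWER_ACTIONS = [
    "quicksight:DescribeDashboard",
    "quicksight:ListDashboardVersions",
    "quicksight:QueryDashboard",
]


def grant_dashboard_viewers(qs_client, account_id, dashboard_id, principal_arns):
    """Grant read-only access to an imported dashboard for users or groups in the target account."""
    grants = [
        {"Principal": arn, "Actions": list(DASHBOARD_VIEWER_ACTIONS)}
        for arn in principal_arns
    ]
    return qs_client.update_dashboard_permissions(
        AwsAccountId=account_id,
        DashboardId=dashboard_id,
        GrantPermissions=grants,
    )
```

For example, grant_dashboard_viewers(boto3.client("quicksight"), "222222222222", "sales-dashboard", ["arn:aws:quicksight:us-east-1:222222222222:group/default/BI-Readers"]) would give a hypothetical reader group access after the import job completes. The same pattern applies to analyses, datasets, and data sources with their own permissions APIs and action lists.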

Another option is to assign permissions during import by using the OverridePermissions parameter in the StartAssetBundleImportJob API. You can specify the users or groups in the target account who should receive access to the imported assets. However, you should use this option with caution: when the import job is complete, the specified users or groups will have immediate access to the assets. It’s important to validate that permissions are correctly configured to avoid exposing sensitive dashboards or datasets to unintended users.
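Because OverridePermissions takes effect the moment the import finishes, it can help to build the value in code that rejects risky inputs. The following sketch shows one way to do that for dashboards. The guards, the function name, and the viewer action list are assumptions for illustration; it also assumes the API's "*" wildcard for dashboard IDs, which applies the override to every dashboard in the bundle.

```python
DASHBOARD_VIEWER_ACTIONS = [
    "quicksight:DescribeDashboard",
    "quicksight:ListDashboardVersions",
    "quicksight:QueryDashboard",
]


def build_dashboard_override_permissions(target_account_id, dashboard_ids, principal_arns,
                                         allow_all_dashboards=False):
    """Build an OverridePermissions value that grants viewer access to imported dashboards.

    Guards against two mistakes: granting access to every dashboard in the bundle
    by accident ("*"), and naming principals that are not in the target account.
    """
    if "*" in dashboard_ids and not allow_all_dashboards:
        raise ValueError("'*' grants access to every imported dashboard; "
                         "set allow_all_dashboards=True to confirm")
    for arn in principal_arns:
        parts = arn.split(":")
        # Expected shape: arn:aws:quicksight:<region>:<account>:group/<namespace>/<name>
        if len(parts) != 6 or parts[2] != "quicksight" or parts[4] != target_account_id:
            raise ValueError(f"{arn} is not a QuickSight principal in account {target_account_id}")
    return {
        "Dashboards": [
            {
                "DashboardIds": list(dashboard_ids),
                "Permissions": {
                    "Principals": list(principal_arns),
                    "Actions": list(DASHBOARD_VIEWER_ACTIONS),
                },
            }
        ]
    }
```

The returned dict is intended to be passed as OverridePermissions=... to start_asset_bundle_import_job.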

Managing environment-specific configurations in asset deployment

The StartAssetBundleImportJob API also provides a parameter called OverrideParameters, which BI teams can use to override environment-specific configurations during import. This includes settings such as virtual private cloud (VPC) connections, data source credentials, and other deployment-specific attributes.

Using OverrideParameters helps make sure assets imported into a new account or environment are connected to the correct infrastructure resources, without needing to modify the exported bundle files directly. It’s especially useful when promoting assets across environments (for example, from dev to prod) with differing network or authentication configurations.
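As a sketch of how per-environment settings can feed OverrideParameters, the following function builds the override for a single data source. It assumes a PostgreSQL data source whose host, VPC connection, and credentials secret differ between QA and production; the ENVIRONMENTS values, function name, database name, and port are hypothetical placeholders, and other engines use a different key inside DataSourceParameters.

```python
# Hypothetical per-environment settings; replace with your own resources.
ENVIRONMENTS = {
    "qa": {
        "host": "qa-db.example.internal",
        "vpc_connection_arn": "arn:aws:quicksight:us-east-1:222222222222:vpcConnection/qa-vpc",
        "secret_arn": "arn:aws:secretsmanager:us-east-1:222222222222:secret:qa-bi-reader",
    },
    "prod": {
        "host": "prod-db.example.internal",
        "vpc_connection_arn": "arn:aws:quicksight:us-east-1:333333333333:vpcConnection/prod-vpc",
        "secret_arn": "arn:aws:secretsmanager:us-east-1:333333333333:secret:prod-bi-reader",
    },
}


def build_override_parameters(env, data_source_id, database="sales", port=5432):
    """Return an OverrideParameters value that points one data source at env's resources."""
    cfg = ENVIRONMENTS[env]
    return {
        "DataSources": [
            {
                "DataSourceId": data_source_id,
                # Assumes a PostgreSQL data source
                "DataSourceParameters": {
                    "PostgreSqlParameters": {
                        "Host": cfg["host"],
                        "Port": port,
                        "Database": database,
                    }
                },
                "VpcConnectionProperties": {"VpcConnectionArn": cfg["vpc_connection_arn"]},
                # Credentials come from AWS Secrets Manager rather than the bundle
                "Credentials": {"SecretArn": cfg["secret_arn"]},
            }
        ]
    }
```

Keeping environment differences in one mapping like this means the exported bundle itself stays identical across dev, QA, and production; only the override changes.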

For a more comprehensive architecture of asset deployment across environments and accounts, refer to Guidance for Multi-Account Environments on Amazon QuickSight. This guidance outlines best practices for organizing development, staging, and production accounts, managing QuickSight namespaces and permissions, and implementing automated asset deployment pipelines—all aligned with enterprise-grade governance and scalability requirements.

The following diagram illustrates the solution architecture for this option.

The CloudFormation template option of the Assets-as-Bundle API is functionally similar to the QuickSight JSON option. When selected, the export output is a CloudFormation template that encapsulates the dashboard or analysis along with its dependencies, such as datasets, data sources, themes, and folders. This option is particularly useful for teams already using AWS CloudFormation for infrastructure automation, because it allows QuickSight assets to be deployed and managed alongside other AWS resources using consistent tooling and deployment pipelines.

For a practical example, refer to the following sample Jupyter notebook, which demonstrates an end-to-end workflow using the CloudFormation template option of the Assets-as-Bundle API. This notebook shows how to export and deploy QuickSight assets across accounts, using the CloudFormation template for structured and repeatable asset promotion.
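With the CloudFormation option, the export job is started with ExportFormat="CLOUDFORMATION_JSON" and the downloaded file is a CloudFormation template. The following minimal sketch deploys such a template with Boto3's CloudFormation client and first checks that every template parameter without a default has a value. The helper names and the per-environment values dict are assumptions; the template only declares parameters if you configured them at export time (for example, through the export job's CloudFormationOverridePropertyConfiguration).

```python
import json


def stack_parameters(template_body, values):
    """Build create_stack Parameters from environment values, checking the template's declarations."""
    declared = json.loads(template_body).get("Parameters", {})
    missing = sorted(
        name for name, spec in declared.items()
        if name not in values and "Default" not in spec
    )
    if missing:
        raise ValueError(f"no value supplied for template parameters: {missing}")
    unknown = sorted(set(values) - set(declared))
    if unknown:
        raise ValueError(f"values supplied for undeclared parameters: {unknown}")
    return [{"ParameterKey": k, "ParameterValue": str(v)} for k, v in sorted(values.items())]


def deploy_template(cfn_client, stack_name, template_body, values):
    """Create a stack from an exported template and wait until creation completes."""
    cfn_client.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=stack_parameters(template_body, values),
    )
    cfn_client.get_waiter("stack_create_complete").wait(StackName=stack_name)
```

TemplateBody is limited to 51,200 bytes; larger templates need to be uploaded to Amazon S3 and passed with TemplateURL instead. Subsequent promotions of the same assets would typically use update_stack or a change set rather than create_stack.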

The following table compares the CloudFormation template method vs. using QuickSight JSON (Assets-as-Bundle API).

| Aspect | CloudFormation Template | QuickSight JSON |
|---|---|---|
| Export Format | YAML/JSON CloudFormation template | Structured JSON files in a ZIP archive |
| Purpose | Integration with AWS CloudFormation based infrastructure as code workflows | Simplified asset portability and inspection in native QuickSight format |
| Asset Coverage | Includes dashboard, analysis, and dependencies | Includes dashboard, analysis, and dependencies |
| Modifiability | Requires AWS CloudFormation syntax knowledge; more rigid | Straightforward to read and edit; JSON follows QuickSight internal structure |
| Deployment Method | Deployed using CloudFormation stacks | Imported using the ImportAssetsBundle API |
| Tooling Compatibility | Best for AWS CloudFormation based environments | Best for JSON-based workflows or custom deployment scripts |
| Readability | Less intuitive for BI users; infrastructure-oriented | More intuitive for BI teams; closely aligned with Describe APIs |
| Use Case Fit | Teams integrating BI asset deployment into broader infrastructure as code templates | Teams focused on QuickSight asset migration or code-based workflows |

For a deeper comparison of the QuickSight JSON and CloudFormation template options available in the Assets-as-Bundle APIs, refer to Choosing between the two export options of the Amazon QuickSight asset deployment APIs. This post provides a detailed breakdown of the pros and cons of each option, along with real-world examples to help BI teams decide which format best fits their deployment workflows and automation needs.

The post Automate your Amazon QuickSight assets deployment using the new Amazon EventBridge integration demonstrates how to integrate the Assets-as-Bundle APIs with Amazon EventBridge to automate deployment workflows. By setting up EventBridge rules that respond to QuickSight asset events—such as asset creation, update, or deletion—teams can automatically trigger workflows that export, version, or promote assets across environments. This integration is particularly useful for enabling event-driven CI/CD pipelines for BI assets in QuickSight.
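A minimal sketch of the event-driven pattern: an EventBridge rule that matches QuickSight events, plus a hypothetical Lambda handler that starts a bundle export for any dashboard named in the event. Several details are assumptions: the rule matches only on source "aws.quicksight" (you will likely want to narrow it by detail-type; check the QuickSight EventBridge documentation for the exact values), the handler assumes the dashboard ARN arrives in the event's standard resources field, and the QUICKSIGHT_ACCOUNT_ID environment variable is illustrative.

```python
import json
import os
import uuid


def put_quicksight_rule(events_client, rule_name, target_arn):
    """Route QuickSight events on the default event bus to a target such as a Lambda function."""
    events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps({"source": ["aws.quicksight"]}),
        State="ENABLED",
    )
    events_client.put_targets(Rule=rule_name, Targets=[{"Id": "bundle-export", "Arn": target_arn}])


def export_request_for_event(event, account_id):
    """Turn a QuickSight event into StartAssetBundleExportJob arguments, or None."""
    # Assumption: the standard "resources" field carries the dashboard ARN
    arns = [arn for arn in event.get("resources", []) if ":dashboard/" in arn]
    if not arns:
        return None
    return {
        "AwsAccountId": account_id,
        "AssetBundleExportJobId": f"event-{uuid.uuid4()}",
        "ResourceArns": arns,
        "IncludeAllDependencies": True,
        "ExportFormat": "QUICKSIGHT_JSON",
    }


def handler(event, context):
    """Hypothetical Lambda entry point that starts an export for dashboard events."""
    import boto3  # imported here so the helpers above can run without boto3

    request = export_request_for_event(event, os.environ["QUICKSIGHT_ACCOUNT_ID"])
    if request is None:
        return {"started": None}
    boto3.client("quicksight").start_asset_bundle_export_job(**request)
    return {"started": request["AssetBundleExportJobId"]}
```

For the rule to invoke a Lambda function, the function also needs a resource-based policy that allows events.amazonaws.com to call it. Deletion events should be filtered out, because an export of a deleted dashboard would fail.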

Describe-Definition APIs for incremental asset deployment

In Part 2 of this series, we discussed how to manage version control within a single QuickSight account using the Describe-Definition APIs. You can extend the same principles to a multi-account setup, enabling structured and selective deployment of BI assets across environments.

Using Describe APIs for individual asset deployment is considered a best practice in many scenarios—for example, when executing an emergency deployment to fix a dataset issue in the production account without needing to update the associated dashboards, or when updating a filter definition within a dashboard while leaving its dependent assets untouched. This fine-grained control helps reduce deployment risk, avoids unnecessary overwrites, and aligns well with incremental development workflows in large-scale BI environments.

For the sample code, refer to BIOps: Amazon QuickSight object migration and version control, which outlines two end-to-end automated options for deploying QuickSight assets. Although the post was originally based on the template-based approach, the underlying concepts remain valuable.

You can reuse and adapt the code provided in the associated GitHub repository. By updating the legacy template-related API calls with the newer DescribeDashboardDefinition and other Describe-Definition APIs, you can extend the same automation workflow to align with current best practices for version-controlled asset deployment.
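To illustrate that replacement, the following sketch reads a dashboard with describe_dashboard_definition in the source account, rewrites the dataset ARNs for the target account and Region, and then updates the dashboard (or creates it on first deployment) in the target account. It is a sketch, not the repository's code; it assumes the datasets were already promoted with the same dataset IDs, and the function names are hypothetical.

```python
import copy


def retarget_definition(definition, src_account, src_region, tgt_account, tgt_region):
    """Point dataset references at the target account, assuming datasets keep the same IDs there."""
    new_definition = copy.deepcopy(definition)
    old = f":{src_region}:{src_account}:"
    new = f":{tgt_region}:{tgt_account}:"
    for decl in new_definition.get("DataSetIdentifierDeclarations", []):
        decl["DataSetArn"] = decl["DataSetArn"].replace(old, new, 1)
    return new_definition


def promote_dashboard(src_qs, tgt_qs, dashboard_id, src_account, src_region, tgt_account, tgt_region):
    """Copy one dashboard definition from the source account to the target account."""
    described = src_qs.describe_dashboard_definition(AwsAccountId=src_account, DashboardId=dashboard_id)
    request = {
        "AwsAccountId": tgt_account,
        "DashboardId": dashboard_id,
        "Name": described["Name"],
        "Definition": retarget_definition(
            described["Definition"], src_account, src_region, tgt_account, tgt_region
        ),
    }
    try:
        response = tgt_qs.update_dashboard(**request)
    except tgt_qs.exceptions.ResourceNotFoundException:
        return tgt_qs.create_dashboard(**request)  # first deployment to this account
    # UpdateDashboard creates a new version; publish it so readers see the change
    version = int(response["VersionArn"].rsplit("/", 1)[-1])
    tgt_qs.update_dashboard_published_version(
        AwsAccountId=tgt_account, DashboardId=dashboard_id, VersionNumber=version
    )
    return response
```

Dashboard versions are created asynchronously, so production code would typically poll describe_dashboard until the new version finishes creating before publishing it. A first-time create_dashboard call would usually also pass Permissions, and theme ARNs may need the same retargeting as dataset ARNs.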

Comparing API methods

The following table compares using the Assets-as-Bundle APIs vs. Describe-Definition APIs.

| Aspect | Assets-as-Bundle APIs | Describe-Definition/Describe APIs |
|---|---|---|
| Primary Use Case | Deployment of related assets across environments, accounts, or Regions | Version control, modular development, and CI/CD integration |
| Granularity | Bundles full dashboards and analyses along with dependencies (datasets, sources, themes, folders) | Focused on individual asset definitions |
| Visibility | JSON or CloudFormation template embedded in a ZIP file; inspectable with extra effort | Fully visible, line-by-line JSON definitions directly accessible through an API |
| Version Control Fit | Not ideal; harder to diff, modularize, or review | Excellent; suited for Git-based workflows, review, and rollback |
| Reusability of Components | Limited; bundles are self-contained, might duplicate datasets in multi-bundle scenarios | High; components (calculated fields, filters) can be reused and modularized across assets with well-architected solutions |
| Development Workflow | Bundle created from existing dashboard or analysis in QuickSight | JSON can be authored or modified programmatically, then applied using Update APIs |
| Deployment Workflow | Export using ExportAssetsBundle, then import using ImportAssetsBundle | Use Create or Update APIs to push changes |
| Ideal For | Migrating dashboards and their dependencies as a package between environments | Iterative development, CI/CD, code-driven BI teams |
| Limitations | Bundles are larger, might include redundant assets; harder to isolate or edit individual components | Does not include dependent assets (datasets, sources, themes); these must be handled separately |

Backup, restore, and disaster recovery

This section outlines how to use QuickSight APIs to back up, restore, and recover BI assets, enabling robust disaster recovery and minimizing data loss across environments.

You can use both the Assets-as-Bundle API and the Describe-Definition APIs to support backup, restore, and disaster recovery for QuickSight assets. By regularly exporting assets—either as bundles (ZIP files or CloudFormation templates) or as individual JSON definitions—BI teams can create versioned backups stored in Amazon S3, Git, or other repositories.

With the Assets-as-Bundle APIs, teams can organize backups on a per-dashboard basis, exporting each dashboard along with its dependencies (datasets, data sources, themes, and so on). This approach is convenient and well-suited for dashboard-level recovery scenarios.

In contrast, the Describe-Definition APIs offer greater flexibility but require more effort. BI teams can list and describe all assets individually, store them by type (data sources, datasets, analyses), and maintain a modular backup structure. For reliable restoration, assets should be redeployed in a logical order—starting with data sources, followed by datasets, then themes, and finally dashboards and analyses.
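The restore order described above can be enforced with a simple sort. This sketch assumes a hypothetical backup layout in which each object key looks like "<asset-type>/<asset-id>.json"; adjust the parsing to match however your backups are actually stored.

```python
# Restore rank per asset type: dependencies first, consumers last.
RESTORE_RANK = {"datasource": 0, "dataset": 1, "theme": 2, "analysis": 3, "dashboard": 3}


def restore_plan(backup_keys):
    """Order backup objects so each asset is restored after the assets it depends on.

    Assumes a hypothetical key layout of "<asset-type>/<asset-id>.json".
    """
    def rank(key):
        asset_type = key.split("/", 1)[0]
        if asset_type not in RESTORE_RANK:
            raise ValueError(f"unknown asset type in backup key: {key}")
        return (RESTORE_RANK[asset_type], key)

    return sorted(backup_keys, key=rank)
```

Failing on unknown asset types, rather than silently skipping them, makes gaps in the backup visible before a restore starts.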

As mentioned earlier, the post BIOps: Amazon QuickSight object migration and version control provides a sample implementation for backing up and restoring assets in a QuickSight account using the template-based approach. The associated Jupyter notebook demonstrates how to automate batch export and import of assets across an entire account.

Though originally built around templates, this code can be adapted to use the modern Describe-Definition APIs by replacing the relevant API calls, creating a scalable and maintainable backup and restore workflow aligned with current best practices.

Additionally, teams can use QuickSight folders to organize backups and manage restore operations more intuitively, grouping related assets together as part of a structured recovery strategy. For a folder-based approach to backup and restore, refer to the following sample Jupyter notebook. It demonstrates how to export and re-import QuickSight assets organized by folders, so BI teams can group related assets (dashboards, analyses, datasets) and manage them collectively.
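One way to drive a folder-based backup is to collect the ARNs of a folder's members and pass them as ResourceArns to an asset bundle export job. This minimal sketch uses list_folder_members and follows NextToken pagination; the function name is hypothetical.

```python
def folder_member_arns(qs_client, account_id, folder_id):
    """Collect the ARNs of all assets in a QuickSight folder, following NextToken pagination."""
    arns, token = [], None
    while True:
        kwargs = {"AwsAccountId": account_id, "FolderId": folder_id}
        if token:
            kwargs["NextToken"] = token
        page = qs_client.list_folder_members(**kwargs)
        arns.extend(member["MemberArn"] for member in page.get("FolderMemberList", []))
        token = page.get("NextToken")
        if not token:
            return arns
```

The resulting list can be passed to start_asset_bundle_export_job(..., ResourceArns=arns, IncludeAllDependencies=True, ...) so that each folder becomes one restorable bundle.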

How to resolve merge conflicts between datasets and dashboards

This section explains how to detect and resolve merge conflicts between datasets and dashboards in QuickSight, making sure schema changes don’t break dependent visuals or filters. The following figure illustrates the dataset version control and conflict resolution workflow.

The process begins with an initial dataset (v1) and evaluates whether an update, such as a field rename or data type change, has occurred. If an update is detected, the dataset progresses to version 2 (v2), triggering a check for potential merge conflicts. If a conflict is found, the flow determines whether it can be resolved through field mapping (for example, remapping a renamed field to restore visuals). If the conflict is resolvable, the mapping is applied to recover the impacted assets. If not (for example, due to a data type change), the sample code can automatically detect modified fields and clean up any broken filters or visuals caused by the unresolved changes.
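The field-mapping step can be sketched as a recursive walk over a dashboard or analysis definition (as returned by the Describe-Definition APIs) that rewrites column references. This assumes column references appear as objects with DataSetIdentifier and ColumnName keys; the function name is hypothetical, and calculated-field expressions, which refer to columns inside expression strings, are not rewritten by this sketch.

```python
def remap_columns(node, dataset_identifier, renames):
    """Return a copy of a definition fragment with renamed columns remapped.

    Rewrites every {"DataSetIdentifier": ..., "ColumnName": ...} reference that
    points at dataset_identifier and whose ColumnName is a key in renames.
    """
    if isinstance(node, dict):
        out = {key: remap_columns(value, dataset_identifier, renames) for key, value in node.items()}
        if out.get("DataSetIdentifier") == dataset_identifier and out.get("ColumnName") in renames:
            out["ColumnName"] = renames[out["ColumnName"]]
        return out
    if isinstance(node, list):
        return [remap_columns(item, dataset_identifier, renames) for item in node]
    return node  # strings, numbers, and other leaves are returned unchanged
```

Returning a new structure instead of editing in place keeps the original definition available for comparison or rollback.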

The sample code for detecting and handling dataset merge conflicts is available in the following Jupyter notebook. It demonstrates how to automatically identify modified fields, resolve simple mapping issues (such as renamed fields), and clean up broken filters caused by incompatible changes.
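For the cleanup case, a minimal sketch might drop every filter group in a definition that references a column that can no longer be used. The function names are hypothetical, and the sketch assumes filter groups live under the definition's FilterGroups key with column references shaped as DataSetIdentifier and ColumnName pairs. It reports what it removed so the BI team can review the change.

```python
def _column_refs(node):
    """Yield (DataSetIdentifier, ColumnName) pairs found anywhere in a definition fragment."""
    if isinstance(node, dict):
        if "DataSetIdentifier" in node and "ColumnName" in node:
            yield (node["DataSetIdentifier"], node["ColumnName"])
        for value in node.values():
            yield from _column_refs(value)
    elif isinstance(node, list):
        for item in node:
            yield from _column_refs(item)


def drop_broken_filter_groups(definition, dataset_identifier, broken_columns):
    """Remove filter groups that reference broken columns; return (cleaned, dropped_ids)."""
    broken = {(dataset_identifier, column) for column in broken_columns}
    kept, dropped = [], []
    for group in definition.get("FilterGroups", []):
        if broken & set(_column_refs(group)):
            dropped.append(group.get("FilterGroupId"))
        else:
            kept.append(group)
    cleaned = dict(definition, FilterGroups=kept)  # shallow copy; original list untouched
    return cleaned, dropped
```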

We also provide sample code and a GitHub Actions workflow to automate merge conflict detection during QuickSight dataset updates. The Python script compare_quicksight_datasets.py compares dataset versions to identify data type changes, renamed fields, added fields, and deleted fields.
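The following is not compare_quicksight_datasets.py itself, but a minimal sketch of the same kind of comparison. It assumes both versions' column lists come from describe_data_set(...)["DataSet"]["OutputColumns"], where each column has Name and Type. Renames can't be proven from names alone, so the rename detection here is a simple heuristic chosen for illustration.

```python
def compare_columns(old_columns, new_columns):
    """Diff two OutputColumns lists ({"Name": ..., "Type": ...}) from DescribeDataSet."""
    old = {c["Name"]: c["Type"] for c in old_columns}
    new = {c["Name"]: c["Type"] for c in new_columns}
    added = sorted(new.keys() - old.keys())
    deleted = sorted(old.keys() - new.keys())
    type_changed = {
        name: (old[name], new[name])
        for name in sorted(old.keys() & new.keys())
        if old[name] != new[name]
    }
    # Heuristic only: treat a deleted and an added column as a possible rename
    # when each is the only one of its data type on its side.
    possible_renames = {}
    for col_type in {old[name] for name in deleted}:
        gone = [n for n in deleted if old[n] == col_type]
        came = [n for n in added if new[n] == col_type]
        if len(gone) == 1 and len(came) == 1:
            possible_renames[gone[0]] = came[0]
    return {
        "added": added,
        "deleted": deleted,
        "type_changed": type_changed,
        "possible_renames": possible_renames,
    }
```

Possible renames are reported alongside, not instead of, the added and deleted lists, so a reviewer can confirm them before any field mapping is applied.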

The GitHub Actions workflow compare-quicksight.yml is triggered automatically to run the comparison. It saves the comparison results as a new artifact in the repository, helping BI teams review the changes and take appropriate action—either to automate conflict resolution or guide manual intervention during dataset version promotion.

To set up and run the workflow, complete the following steps:

- Make sure the YAML file is saved in the correct GitHub directory: .github/workflows/compare-quicksight.yml. The comparison script, compare_quicksight_datasets.py, should also be committed to the repository so the workflow can run it.

- Make sure compare-quicksight.yml contains a valid trigger, such as workflow_dispatch. This way, you can manually run the workflow from the Actions tab.

- Commit and push the workflow file to your repository (for example, with git add, git commit, and git push).

- Add GitHub repository secrets:

  - Go to your GitHub repo, and choose Settings, Secrets and variables, Actions.

  - Choose New repository secret.

  - Add secrets, for example: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_REGION, QUICKSIGHT_ACCOUNT_ID, and NEW_DATASET_ID.

- Run the workflow:

  - Go to your GitHub repository.

  - On the Actions tab, choose Compare QuickSight Datasets.

  - Choose Run workflow, and provide any required inputs.
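Inside the workflow, a comparison script typically receives these secrets as environment variables. The following sketch shows one way a script could read them and fail fast when one is missing; it assumes the workflow maps the secrets into the step's environment, and the function name is hypothetical. The AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY values don't need handling here, because Boto3 reads them from the environment automatically.

```python
import os

# Names match the repository secrets listed in the setup steps; the workflow is
# assumed to expose them to the script as environment variables.
REQUIRED_VARS = ("AWS_REGION", "QUICKSIGHT_ACCOUNT_ID", "NEW_DATASET_ID")


def load_config(environ=None):
    """Read the comparison settings from the environment and fail fast if any are missing."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise SystemExit(f"missing required environment variables: {', '.join(missing)}")
    return {
        "region": environ["AWS_REGION"],
        "account_id": environ["QUICKSIGHT_ACCOUNT_ID"],
        "dataset_id": environ["NEW_DATASET_ID"],
    }
```

Exiting with a clear message makes a misconfigured secret show up directly in the workflow run log instead of as an opaque API error later.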

You can use this utility as part of a version control or deployment pipeline. Additionally, the BI team can manually resolve conflicts by reviewing and updating affected visuals or filters directly in the analysis or dashboard.

Clean up

To avoid incurring future charges, delete any resources you created while testing, such as S3 buckets, QuickSight datasets, analyses, dashboards, or other assets used in the sample scripts.

Conclusion

The QuickSight API capabilities unlock powerful automation, governance, and flexibility for managing BI assets at scale. In this post, we discussed how to use the APIs for cross-account and multi-environment deployments, and conflict detection and governance. You can use the guidance in this post to establish robust, conflict-aware deployment practices for QuickSight.

About the authors

Ying Wang is a Senior Specialist Solutions Architect in the Generative AI organization at AWS, specializing in Amazon QuickSight and Amazon Q to support large enterprise and ISV customers. She brings 16 years of experience in data analytics and data science, with a strong background as a data architect and software development engineering manager. As a data architect, Ying helped customers design and scale enterprise data architecture solutions in the cloud. In her role as an engineering manager, she enabled customers to unlock the power of their data through QuickSight by delivering new features and driving product innovation from both engineering and product perspectives.
