AWS Business Intelligence Blog

Amazon QuickSight BIOps – Part 2: Version control using APIs


This post outlines an API-driven business intelligence operations (BIOps) framework to reduce manual workload and improve lifecycle management in Amazon QuickSight.

As business intelligence (BI) environments grow more complex, manual management of dashboards, datasets, and deployments can lead to inconsistent results, version drift, and inefficient collaboration. Automation is crucial to scale insight delivery, enforce governance, and promote reliability across development, quality assurance (QA), and production environments.

DevOps integrates software development and IT operations to accelerate delivery and improve reliability. It emphasizes the following practices to streamline and scale software workflows:

- Continuous integration and continuous delivery (CI/CD)

- Infrastructure as code (IaC) and assets as code (AaC)

- Automation

- Monitoring

- Collaboration tools (such as Git, Jira, or Slack)

BIOps applies similar principles to BI workflows. By automating asset backup, version control, and deployment, BI teams can manage dashboards, datasets, and analyses with greater consistency, improved traceability, and enhanced efficiency.

In this series of posts, we discuss ways to help your team implement BIOps practices in QuickSight, leading to more scalable and reliable BI operations. In Part 1, we provided a no-code guide to version control and collaboration. In this post, we discuss how to use QuickSight APIs for automated version control and rollback, and CI/CD pipelines for BI assets. In Part 3, we cover cross-account and multi-environment deployments, and conflict detection and governance.

Solution overview

This post provides an overview of API-based BIOps solutions in QuickSight, highlighting how teams can use programmatic methods to automate version control, deployment, and governance of BI assets. BI engineers, DevOps leads, and platform admins can follow the code-based practices discussed in this post to scale their BI workflows with confidence.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

- Access to the following AWS services:

- AWS CloudFormation

- Amazon QuickSight

- Amazon Simple Storage Service (Amazon S3)

- AWS Identity and Access Management (IAM), with access to use the QuickSight Template, Assets-as-Bundle, Create, Update, Delete, and Describe APIs

- Basic knowledge of Python (use Python 3.9 or later)

- Basic knowledge of SQL

- The latest version of the AWS Command Line Interface (AWS CLI) installed

- Boto3 installed

Additionally, we recommend reviewing Part 1 of this series before continuing.

Overview of QuickSight asset APIs

QuickSight offers multiple API-based approaches to manage BI artifacts, each with distinct strengths depending on your goals, such as version control, deployment automation, or backup. The three main approaches include:

- The legacy Template APIs

- The newer Assets-as-Bundle APIs

- The Describe-Definition APIs

Although all three approaches facilitate programmatic handling of dashboards, analyses, datasets, and more, they differ significantly in visibility, control, and alignment with modern CI/CD workflows.

Template APIs

The Template-based approach was the original method for promoting QuickSight assets across AWS accounts and AWS Regions, using APIs such as CreateTemplate and CreateDashboard. Historically, templates were used to back up dashboards or deploy assets to other environments. In earlier implementations, template content was opaque to BI teams. With the introduction of the DescribeTemplateDefinition API, teams can now extract the JSON definition of a template for inspection or backup. However, templates remain more rigid than newer alternatives and are not optimized for iterative development, field-level editing, or version control workflows.

Nevertheless, templates can still serve BI teams who prefer to store backups within the QuickSight environment, without needing to set up external version control systems or storage such as Git repositories or S3 buckets. This makes templates a lightweight option for teams focused on in-product asset management.

The full list of Template APIs for QuickSight assets includes:

- CreateTemplate

- DeleteTemplate

- DescribeTemplate

- DescribeTemplateDefinition

- UpdateTemplate

- UpdateTemplatePermissions
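To illustrate the in-product backup pattern, the following is a minimal Python sketch (not code from this post's sample package) that saves an analysis as a template version with Boto3. It assumes `qs` is a Boto3 QuickSight client such as `boto3.client("quicksight")`; the function name, template ID, and placeholder-to-dataset mapping are hypothetical. Each successful `UpdateTemplate` call adds a new template version, which is what lets a template act as a backup history.

```python
def backup_analysis_to_template(qs, account_id, analysis_id, template_id,
                                placeholders, version_note):
    """Save the current state of an analysis as a template version.

    qs: a Boto3 QuickSight client, e.g. boto3.client("quicksight").
    placeholders: hypothetical mapping of placeholder name -> dataset ARN.
    Returns the ARN of the template version that was written.
    """
    # Look up the analysis ARN; the template is built from this source.
    analysis_arn = qs.describe_analysis(
        AwsAccountId=account_id, AnalysisId=analysis_id
    )["Analysis"]["Arn"]
    source_entity = {
        "SourceAnalysis": {
            "Arn": analysis_arn,
            "DataSetReferences": [
                {"DataSetPlaceholder": name, "DataSetArn": arn}
                for name, arn in placeholders.items()
            ],
        }
    }
    try:
        # First backup: create the template (version 1).
        resp = qs.create_template(
            AwsAccountId=account_id,
            TemplateId=template_id,
            Name=template_id,
            SourceEntity=source_entity,
            VersionDescription=version_note,
        )
    except qs.exceptions.ResourceExistsException:
        # Later backups: each update adds a new template version.
        resp = qs.update_template(
            AwsAccountId=account_id,
            TemplateId=template_id,
            Name=template_id,
            SourceEntity=source_entity,
            VersionDescription=version_note,
        )
    return resp["VersionArn"]
```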

Assets-as-Bundle APIs

For teams adopting CI/CD pipelines or practicing IaC, the modern Assets-as-Bundle and Describe-Definition APIs provide greater transparency, flexibility, and control—and are therefore the preferred approach for most enterprise use cases today.

The Assets-as-Bundle APIs offer a more modular and portable deployment option. They export a QuickSight dashboard or analysis and its related assets (datasets, data sources, themes, and folders) into a ZIP archive or AWS CloudFormation template. Although the archive can be unpacked to inspect asset metadata as JSON files, doing so requires extra effort and is not ideal for fine-grained versioning. Moreover, when exporting multiple dashboards, overlapping datasets might appear redundantly across bundles. The QuickSight import process manages these overlaps gracefully, avoiding unintended overwrites, but this method is still best suited for coordinated asset deployment rather than detailed version control.

You can use the following APIs to initiate, track, and describe the export jobs that produce the bundle files from the source account. A bundle file is a ZIP file (with the .qs extension) that contains assets specified by the caller, and optionally all dependencies of the assets.

- StartAssetBundleExportJob – Use this asynchronous API to export an asset bundle file.

- DescribeAssetBundleExportJob – Use this synchronous API to get the status of your export job. When successful, this API call response will have a pre-signed URL to fetch the asset bundle.

- ListAssetBundleExportJobs – Use this synchronous API to list past export jobs. The list will contain both finished and running jobs from the past 15 days.
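The export APIs above can be combined into a start-then-poll loop. The following sketch assumes `qs` is a Boto3 QuickSight client and uses `IncludeAllDependencies=True` with the `QUICKSIGHT_JSON` export format; the helper name, polling interval, and poll limit are illustrative choices rather than service requirements.

```python
import time


def export_asset_bundle(qs, account_id, job_id, resource_arns,
                        poll_seconds=5.0, max_polls=120, sleep=time.sleep):
    """Start an asset bundle export job and wait for its download URL.

    qs: a Boto3 QuickSight client. `sleep` is injectable for testing.
    """
    # Asynchronous call: this only queues the export job.
    qs.start_asset_bundle_export_job(
        AwsAccountId=account_id,
        AssetBundleExportJobId=job_id,
        ResourceArns=resource_arns,
        IncludeAllDependencies=True,  # also export the assets' dependencies
        ExportFormat="QUICKSIGHT_JSON",
    )
    for _ in range(max_polls):
        job = qs.describe_asset_bundle_export_job(
            AwsAccountId=account_id, AssetBundleExportJobId=job_id
        )
        status = job["JobStatus"]
        if status == "SUCCESSFUL":
            return job["DownloadUrl"]  # pre-signed URL for the .qs file
        if status == "FAILED":
            raise RuntimeError(f"Export job {job_id} failed: {job.get('Errors')}")
        sleep(poll_seconds)  # still queued or in progress
    raise TimeoutError(f"Export job {job_id} did not finish in time")
```

After the job succeeds, the `.qs` file can be fetched from the returned pre-signed URL (for example, with `urllib.request.urlopen`) and stored in Amazon S3 or passed to an import job.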

The following APIs initiate, track, and describe the import jobs that take the bundle file as input and create or update assets in the destination account:

- StartAssetBundleImportJob – Use this asynchronous API to start an import of an asset bundle file.

- DescribeAssetBundleImportJob – Use this synchronous API to get the status of your import job.

- ListAssetBundleImportJobs – Use this synchronous API to list past import jobs. The list will contain both finished and running jobs from the past 15 days.
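For the import side, a sketch under similar assumptions (a Boto3 QuickSight client in the destination account) passes the bundle bytes inline and asks QuickSight to roll back partial changes if the import fails. For larger bundles, `AssetBundleImportSource` can reference an S3 URI instead of inline bytes. The helper name and polling limits are hypothetical.

```python
import time

# Import job statuses treated as terminal failures by this sketch.
FAILED_STATES = {"FAILED", "FAILED_ROLLBACK_COMPLETED", "FAILED_ROLLBACK_ERROR"}


def import_asset_bundle(qs, account_id, job_id, bundle_bytes,
                        poll_seconds=5.0, max_polls=120, sleep=time.sleep):
    """Import a .qs bundle and wait until the job succeeds or fails."""
    qs.start_asset_bundle_import_job(
        AwsAccountId=account_id,
        AssetBundleImportJobId=job_id,
        AssetBundleImportSource={"Body": bundle_bytes},
        FailureAction="ROLLBACK",  # undo partial changes if the import fails
    )
    for _ in range(max_polls):
        job = qs.describe_asset_bundle_import_job(
            AwsAccountId=account_id, AssetBundleImportJobId=job_id
        )
        status = job["JobStatus"]
        if status == "SUCCESSFUL":
            return status
        if status in FAILED_STATES:
            raise RuntimeError(
                f"Import job {job_id} ended in {status}: {job.get('Errors')}"
            )
        # Queued, in progress, or rollback still in progress: keep waiting.
        sleep(poll_seconds)
    raise TimeoutError(f"Import job {job_id} did not finish in time")
```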

Describe-Definition APIs

The Describe-Definition APIs expose the internal JSON structure of each artifact in a transparent, field-level format. These definitions can be tracked in Git, reviewed through pull requests, and updated through corresponding Update APIs—making this method ideal for integration with CI/CD pipelines and IaC practices. The main trade-off is that dependencies like datasets and data sources must be handled separately, because they’re not automatically bundled with the dashboard or analysis.
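As an example of tracking definitions in Git, the following sketch calls `DescribeAnalysisDefinition` and writes the result to a JSON file with sorted keys and fixed indentation, so repeated exports of an unchanged analysis tend to produce identical files and Git diffs focus on real edits. It assumes a Boto3 QuickSight client; the `analyses/<id>.json` layout and the function name are hypothetical.

```python
import json
from pathlib import Path


def save_analysis_definition(qs, account_id, analysis_id, repo_dir):
    """Write an analysis definition to <repo_dir>/analyses/<id>.json."""
    resp = qs.describe_analysis_definition(
        AwsAccountId=account_id, AnalysisId=analysis_id
    )
    snapshot = {
        "AnalysisId": resp["AnalysisId"],
        "Name": resp["Name"],
        "ThemeArn": resp.get("ThemeArn"),
        "Definition": resp["Definition"],
    }
    path = Path(repo_dir) / "analyses" / f"{analysis_id}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    # indent + sort_keys keep the key order stable between exports.
    path.write_text(
        json.dumps(snapshot, indent=2, sort_keys=True) + "\n", encoding="utf-8"
    )
    return path
```

The resulting file can then be committed and reviewed like any other source file.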

The Describe-Definition APIs include:

In practice, many BI teams use both approaches in tandem—Assets-as-Bundle for coordinated deployments and Describe-Definition for fine-grained version tracking and iterative development. Understanding when to use each API type enables better governance, improved auditability, and smoother asset promotion across environments.

Comparing methods

The following table summarizes the use cases and storage options for these three API methods.

| Method | Primary Use Case | Ideal For | Storage Location |
| --- | --- | --- | --- |
| Template APIs | In-product backup, legacy deployment | UI-driven teams, simple backups | Stored within QuickSight |
| Describe-Definition APIs | Fine-grained version control and automation | CI/CD, Git integration | Git, Amazon S3, or code repos |
| Assets-as-Bundle APIs | Environment-level deployment with dependencies | Dev to prod rollout, bulk migration | Amazon S3 or local ZIP archive |

For more information about the QuickSight APIs, refer to the QuickSight API reference and Boto3 QuickSight documentation.

Best practices of version control for BI artifacts

In the following sections, we discuss best practices for dashboard version control within a single account. In QuickSight, a dashboard represents the officially released, stable snapshot of an analysis. An analysis is the working asset used by BI teams for development and iteration. In the UI, QuickSight provides version control only for dashboards, because analyses aren’t exposed to end users and are considered to be always in development.

However, when using programmatic methods such as the Describe-Definition APIs, you can treat elements of an analysis (such as filters, calculated fields, visuals, and parameters) as modular code blocks. Teams can manage and version analyses in a structured, code-driven way, so multiple authors can collaborate in parallel. Therefore, we also consider analysis version control as a key practice when using the Describe-Definition APIs.

Version control using Template APIs

In the post BIOps: Amazon QuickSight object migration and version control, we discussed how to implement dashboard version control in QuickSight using the template-based approach. At the time that post was written, templates were the only available mechanism for dashboard version control.

The following diagram illustrates the architecture we used in that solution.

The workflow consists of the following steps:

- The BI developer creates an analysis and saves a template based on it. These are considered the version 1 assets.

- The analysis is published as a dashboard, and the QA team conducts testing on this version 1 dashboard.

- After QA testing, the BI developer continues working on the analysis to build version 2.

- The updated analysis is published as version 2 of the dashboard.

- The QA team tests the version 2 dashboard and takes one of the following actions:

- If the tests pass, the BI admin updates the template to reflect version 2.

- If issues are found, the BI developer attempts to fix them in the analysis:

- If the issues are fixable, development continues on version 2.

- If the issues are not fixable, the BI admin can roll back to version 1 using the backup template.

- QuickSight offers an Undo function with which authors can revert to earlier versions during a session. If undo history has been reset (for example, due to a dataset replacement), or a rollback to a previously confirmed stable state is required, the BI admin can use the version 1 template with the UpdateAnalysis API to restore the analysis.

- The BI developer continues work using the restored version 1 analysis as a base, repeating the development cycle toward the next stable version.
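The rollback in this workflow can be sketched as a single `UpdateAnalysis` call that uses the backup template as its source entity. This minimal sketch assumes a Boto3 QuickSight client and that the template still holds version 1, because in this workflow the template is updated only after version 2 passes QA. The placeholder names must match those used when the template was created; the function name and all IDs are hypothetical.

```python
def restore_analysis_from_template(qs, account_id, analysis_id, analysis_name,
                                   template_arn, placeholders):
    """Overwrite an analysis with the content of a backup template.

    placeholders: hypothetical mapping of template placeholder -> dataset ARN.
    Returns the UpdateStatus reported by QuickSight.
    """
    resp = qs.update_analysis(
        AwsAccountId=account_id,
        AnalysisId=analysis_id,
        Name=analysis_name,
        SourceEntity={
            "SourceTemplate": {
                "Arn": template_arn,
                "DataSetReferences": [
                    {"DataSetPlaceholder": name, "DataSetArn": arn}
                    for name, arn in placeholders.items()
                ],
            }
        },
    )
    # The update is asynchronous; poll describe_analysis to confirm the result.
    return resp["UpdateStatus"]
```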

Version control using Describe-Definition APIs

The following diagram illustrates the architecture for using Describe-Definition and Create or Update APIs to implement version control for QuickSight assets.

The workflow consists of the following steps:

- Users author and store the assets:

- The BI team can begin building QuickSight assets programmatically by studying the response syntax of the Describe-Definition APIs. Dashboards and analyses can be constructed using JSON-based building blocks such as sheets, calculated fields, visuals, filters, and parameters. Similarly, datasets can be authored using JSON structures that define physical and logical table mappings. QuickSight assets follow a structured JSON format, making them suitable for code-driven development.

- To ease the learning curve, the BI team can first create assets using the QuickSight console, verify that the configurations meet their requirements, and then use the Describe-Definition APIs to extract the asset definitions. These JSON definitions can be stored in version-controlled locations such as Git, Amazon S3, or internal code repositories. This helps bridge the transition from UI-based to code-driven development.

- If the team already has assets deployed in a production environment and wants to shift to a programmable workflow, they can extract definitions directly from the production account using Describe-Definition APIs. These definitions can then be saved to the development environment’s source repository (such as Git or Amazon S3) and used as a starting point for future iterations and controlled deployments.


- After the BI team completes development of the JSON definitions, they can use the Create or Update APIs (such as CreateDashboard, UpdateAnalysis, or UpdateDataSet) to deploy the assets and visualize them directly on the QuickSight console. This enables a seamless transition from code to visual output, making sure version-controlled definitions are consistently reflected in the UI.

- After deploying the assets in the development or QA QuickSight environment, the BI team can perform testing to validate that the assets meet functional and visual expectations. Post-verification, the tested definitions can be promoted and deployed to the production account using the same Create or Update APIs, facilitating a controlled and consistent release process. You can use the following sample code to deploy the updated analysis into a folder called QA. Alternatively, you can use the following sample code to promote the updated analysis to a QA account.
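The Create or Update step can be sketched as an upsert: try `UpdateAnalysis` first and fall back to `CreateAnalysis` when the analysis does not exist yet. This is an illustrative sketch, not the sample code referenced above; it assumes a Boto3 QuickSight client and a stored snapshot with `AnalysisId`, `Name`, and `Definition` keys (a hypothetical layout).

```python
def deploy_analysis(qs, account_id, snapshot, permissions=None):
    """Update the analysis if it exists, otherwise create it.

    snapshot: dict with AnalysisId, Name and Definition (e.g. loaded from Git).
    permissions: optional list of {"Principal": ..., "Actions": [...]} grants,
    applied only when the analysis is created.
    Returns "updated" or "created".
    """
    common = {
        "AwsAccountId": account_id,
        "AnalysisId": snapshot["AnalysisId"],
        "Name": snapshot["Name"],
        "Definition": snapshot["Definition"],
    }
    try:
        qs.update_analysis(**common)
        return "updated"
    except qs.exceptions.ResourceNotFoundException:
        # First deployment to this account: create the analysis instead.
        extra = {"Permissions": permissions} if permissions else {}
        qs.create_analysis(**common, **extra)
        return "created"
```

Because `UpdateAnalysis` does not change sharing, permissions on an existing analysis would be managed separately, for example with `UpdateAnalysisPermissions`.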

The following sample code snippet describes the definition of an analysis and copies it in a folder called dev, so the BI team can programmatically develop and manage the asset:
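A minimal sketch of this copy step (not the post's original snippet) reads the source definition with `DescribeAnalysisDefinition`, creates a new analysis from it with `CreateAnalysis`, and adds the copy to a folder with `CreateFolderMembership`. It assumes a Boto3 QuickSight client, an existing folder whose ID is `dev` (hypothetical), and an optional permissions list for the authors who should see the copy.

```python
def copy_analysis_to_folder(qs, account_id, source_id, copy_id, folder_id,
                            permissions=None):
    """Copy an analysis definition into a new analysis inside a folder."""
    src = qs.describe_analysis_definition(
        AwsAccountId=account_id, AnalysisId=source_id
    )
    create_args = {
        "AwsAccountId": account_id,
        "AnalysisId": copy_id,
        "Name": f"{src['Name']} (dev)",
        "Definition": src["Definition"],
    }
    if permissions:
        create_args["Permissions"] = permissions
    if src.get("ThemeArn"):
        create_args["ThemeArn"] = src["ThemeArn"]  # keep the same theme
    qs.create_analysis(**create_args)
    # Creation is asynchronous; depending on timing, you may want to wait for
    # describe_analysis to report CREATION_SUCCESSFUL before this call.
    qs.create_folder_membership(
        AwsAccountId=account_id,
        FolderId=folder_id,
        MemberId=copy_id,
        MemberType="ANALYSIS",
    )
    return copy_id
```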

The following sample code snippet defines an analysis and programmatically adds calculated fields, parameters, and filters. These secondary assets (calculated fields, filters, parameters) are saved in a shared library as reusable code blocks.
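The shared-library idea can be illustrated with plain dictionary manipulation on the `CalculatedFields` list of an analysis definition. In this sketch, the library is a hypothetical mapping of field name to QuickSight expression, and fields are matched on dataset identifier and name so that re-running it replaces rather than duplicates entries. Parameters (`ParameterDeclarations`) and filters (`FilterGroups`) could follow the same upsert pattern, but their JSON shapes are more involved and are not shown.

```python
import copy


def apply_calculated_fields(definition, library, dataset_identifier):
    """Return a copy of an analysis definition with library fields upserted.

    library: hypothetical shared library mapping field name -> expression.
    Fields are matched on (DataSetIdentifier, Name); matches are replaced.
    """
    updated = copy.deepcopy(definition)  # never mutate the caller's definition
    fields = updated.setdefault("CalculatedFields", [])
    position = {(f["DataSetIdentifier"], f["Name"]): i for i, f in enumerate(fields)}
    for name, expression in library.items():
        entry = {
            "DataSetIdentifier": dataset_identifier,
            "Name": name,
            "Expression": expression,
        }
        key = (dataset_identifier, name)
        if key in position:
            fields[position[key]] = entry  # replace the existing version
        else:
            position[key] = len(fields)
            fields.append(entry)  # add a new shared field
    return updated
```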

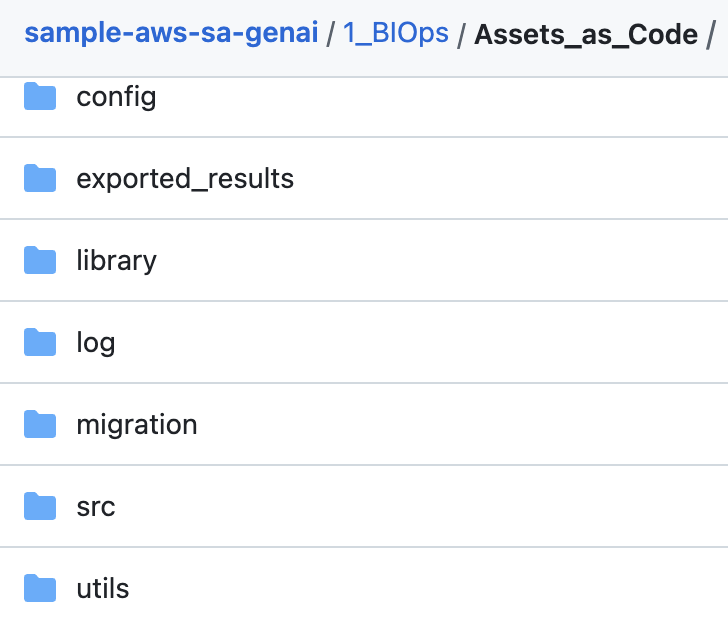

The prebuilt functions are available on GitHub. Additional supplemental code—including libraries, utilities, configuration samples, and logging helpers—is located in the following directory. The following screenshot shows the sample code directory structure for the AaC approach. You can download the entire package and use it as a reference for building your own AaC solution.

In this architecture, version control is handled externally through Git repositories or Amazon S3 versioning. With this approach, the BI team can develop assets in parallel and reuse secondary assets such as calculated fields, filters, and visuals. For example, the team can store the definition of a calculated field as a standalone JSON object with a standardized name, enabling it to be reused across multiple analyses or dashboards. Additional benefits of this method include line-by-line code review through pull requests, straightforward rollback to previous versions, and seamless integration with CI/CD workflows.
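Line-by-line review works best when every definition is serialized the same way. The following sketch (function name and file label are hypothetical) renders two definitions as sorted, indented JSON and produces a unified diff with Python's standard `difflib` module, similar to what a reviewer sees in a pull request.

```python
import difflib
import json


def definition_diff(old, new, name="analysis.json"):
    """Unified diff of two definitions using a stable JSON serialization."""
    def as_lines(obj):
        # 2-space indent and sorted keys; trailing newline keeps lines whole.
        text = json.dumps(obj, indent=2, sort_keys=True) + "\n"
        return text.splitlines(keepends=True)

    return "".join(
        difflib.unified_diff(
            as_lines(old), as_lines(new),
            fromfile=f"a/{name}", tofile=f"b/{name}",
        )
    )
```

An empty string means the two definitions serialize identically.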

In summary, this pattern transforms QuickSight into a fully code-driven platform—treating infrastructure and BI assets as code.

Version control using a hybrid workflow with QuickSight UI and APIs

The BI team can also extend this architecture into a hybrid model: performing development in the QuickSight UI while managing version control through APIs. In this approach, the team continues to build and update assets interactively on the QuickSight console. When development is complete and the assets have passed testing, the team uses the Describe-Definition APIs to extract the updated JSON definitions and save them to a version-controlled repository such as Git or Amazon S3.

This model combines the ease and flexibility of UI-based development with the structure and traceability of code-based versioning—providing the best of both worlds for BI teams transitioning into programmatic workflows.

The following diagram illustrates the architecture for using UI-based development with the structure and traceability of code-based versioning.

The workflow consists of the following steps:

- BI authors develop dashboards, analyses, datasets, data sources, and themes directly on the QuickSight console.

- When development is complete and the assets pass functional and visual tests, the team uses Describe-Definition APIs to export the asset definitions and store them in Git or Amazon S3.

- The validated assets are deployed to the production environment using Create or Update APIs.

- Authors resume development on the same assets within the UI for the next version.

- The version is tested:

- If the new version passes testing, update the versioned JSON definitions in the repository and proceed to the final step.

- If the new version fails, use the previously stored definitions from Git or Amazon S3 to roll back the assets with the Update APIs, so the restored version appears in the QuickSight UI.

- The updated or rolled-back definitions are deployed to the production account to facilitate a stable, version-controlled release.
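Rolling back from Amazon S3 can be sketched as reading an earlier object version and replaying it through `UpdateAnalysis`. This sketch assumes Boto3 QuickSight and S3 clients, that bucket versioning is enabled, that each object stores `AnalysisId`, `Name`, and `Definition`, and that the caller already knows the version ID to restore (for example, from `ListObjectVersions`). Bucket, key, and function names are hypothetical.

```python
import json


def rollback_analysis_from_s3(qs, s3, account_id, bucket, key, version_id):
    """Restore an analysis from an earlier S3 object version of its snapshot."""
    # Fetch the specific (older) version of the JSON snapshot.
    obj = s3.get_object(Bucket=bucket, Key=key, VersionId=version_id)
    snapshot = json.loads(obj["Body"].read())
    # Replay the stored definition over the current analysis.
    resp = qs.update_analysis(
        AwsAccountId=account_id,
        AnalysisId=snapshot["AnalysisId"],
        Name=snapshot["Name"],
        Definition=snapshot["Definition"],
    )
    return resp["UpdateStatus"]
```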

Automating QuickSight version control with Amazon EventBridge

You can use Amazon EventBridge to monitor changes to individual QuickSight assets—such as analyses, dashboards, and VPC connections—or folder structures by capturing asset-level events in near real time. These events include asset creation, updates, deletions, and changes in folder membership. For more information, see Automate your Amazon QuickSight assets deployment using the new Amazon EventBridge integration.
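A rule for this can be created with Boto3's EventBridge client. The sketch below matches events whose `source` is `aws.quicksight` and uses a prefix match on `detail-type`; the `QuickSight Analysis` prefix is an assumption, so check the exact event names in the QuickSight EventBridge documentation. The rule name and Lambda ARN are hypothetical, and the Lambda function would also need a resource-based permission that allows EventBridge to invoke it.

```python
import json


def analysis_event_pattern():
    """EventBridge pattern for QuickSight analysis events (prefix assumed)."""
    return {
        "source": ["aws.quicksight"],
        # Prefix match on detail-type; verify the exact event names first.
        "detail-type": [{"prefix": "QuickSight Analysis"}],
    }


def create_snapshot_rule(events, rule_name, target_lambda_arn):
    """Route matching events to a snapshot Lambda function."""
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(analysis_event_pattern()),
        State="ENABLED",
        Description="Snapshot QuickSight analysis definitions on change",
    )
    events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "quicksight-snapshot", "Arn": target_lambda_arn}],
    )
```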

By integrating QuickSight with EventBridge, BI teams can define rules that automatically trigger downstream workflows (for example, AWS Lambda or AWS Step Functions) whenever specific assets or folders are modified. This facilitates seamless version control processes—such as exporting asset definitions, storing snapshots in Git or Amazon S3, and tagging for audit or rollback—so teams can automate governance and maintain consistency across environments without manual intervention.
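A Lambda target for such a rule might look like the following sketch. It assumes the event's `resources` list carries the QuickSight asset ARN, ignores non-analysis assets, and writes the latest definition to a versioned S3 bucket named by a hypothetical `SNAPSHOT_BUCKET` environment variable. With S3 versioning enabled, every change keeps the previous snapshot as an older object version.

```python
import json
import os


def snapshot_changed_analysis(event, qs, s3, bucket):
    """Save the latest definition of the analysis named in a QuickSight event.

    Returns the S3 key written, or None if there is nothing to snapshot.
    """
    # Assumed ARN format: arn:aws:quicksight:<region>:<account>:analysis/<id>
    arn = event["resources"][0]
    parts = arn.split(":", 5)
    account_id = parts[4]
    kind, _, analysis_id = parts[5].partition("/")
    if kind != "analysis":
        return None  # dashboards, folders, etc. are ignored in this sketch
    try:
        resp = qs.describe_analysis_definition(
            AwsAccountId=account_id, AnalysisId=analysis_id
        )
    except qs.exceptions.ResourceNotFoundException:
        return None  # the analysis no longer exists (for example, deleted)
    body = json.dumps(
        {"AnalysisId": analysis_id, "Name": resp["Name"], "Definition": resp["Definition"]},
        indent=2, sort_keys=True,
    )
    key = f"analyses/{analysis_id}.json"
    s3.put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"),
                  ContentType="application/json")
    return key


def lambda_handler(event, context):
    import boto3  # imported here so the helper above is testable without AWS
    return snapshot_changed_analysis(
        event, boto3.client("quicksight"), boto3.client("s3"),
        os.environ["SNAPSHOT_BUCKET"],
    )
```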

Version control using Assets-as-Bundle APIs

We do not recommend using the Assets-as-Bundle APIs for version control. Their primary use case is for asset deployment, such as promoting dashboards and their dependencies across environments (for example, from dev to QA or prod). Although the bundle files can technically be stored and reused, they are not well-suited for fine-grained tracking, comparison, or modular development. For proper version control, use the Describe-Definition APIs combined with Git or Amazon S3 based storage.

Clean up

To avoid incurring future charges, delete any resources you created while testing, such as S3 buckets, QuickSight datasets, analyses, dashboards, or other assets used in the sample scripts.

Conclusion

The QuickSight API capabilities discussed in this post unlock powerful automation, governance, and flexibility for managing BI assets at scale. These APIs give you full control over asset lifecycles, with the ability to integrate seamlessly into CI/CD pipelines, Git-based workflows, and custom tooling. You can version dashboards, deploy across environments, and resolve schema conflicts programmatically.

Use APIs when you need fine-grained control, auditability, and repeatable deployment across accounts or Regions. Use the QuickSight console when prioritizing speed, ease of use, or lightweight iteration. For many teams, a hybrid approach (developing on the QuickSight console and capturing changes using APIs) offers the best of both worlds.

By adopting programmable BIOps practices, BI teams can scale delivery, reduce risk, and move from one-time development to reliable, governed insight production.

In Part 3, we discuss cross-account and multi-environment deployments, and conflict detection and governance.

About the authors

Ying Wang is a Senior Specialist Solutions Architect in the Generative AI organization at AWS, specializing in Amazon QuickSight and Amazon Q to support large enterprise and ISV customers. She brings 16 years of experience in data analytics and data science, with a strong background as a data architect and software development engineering manager. As a data architect, Ying helped customers design and scale enterprise data architecture solutions in the cloud. In her role as an engineering manager, she enabled customers to unlock the power of their data through QuickSight by delivering new features and driving product innovation from both engineering and product perspectives.
