AWS Big Data Blog

Category: Intermediate (200)

Deliver decompressed Amazon CloudWatch Logs to Amazon S3 and Splunk using Amazon Data Firehose

You can use Amazon Data Firehose to aggregate and deliver log events from your applications and services captured in Amazon CloudWatch Logs to your Amazon Simple Storage Service (Amazon S3) bucket and Splunk destinations, for use cases such as data analytics, security analysis, and application troubleshooting. By default, CloudWatch Logs are delivered as gzip-compressed objects. […]

Nexthink scales to trillions of events per day with Amazon MSK

Real-time data streaming and event processing present scalability and management challenges. AWS offers a broad selection of managed real-time data streaming services to effortlessly run these workloads at any scale. In this post, Nexthink shares how Amazon Managed Streaming for Apache Kafka (Amazon MSK) empowered them to achieve massive scale in event processing. Experiencing business […]

Enhance monitoring and debugging for AWS Glue jobs using new job observability metrics, Part 3: Visualization and trend analysis using Amazon QuickSight

In Part 2 of this series, we discussed how to enable AWS Glue job observability metrics and integrate them with Grafana for real-time monitoring. Grafana provides powerful customizable dashboards to view pipeline health. However, to analyze trends over time, aggregate from different dimensions, and share insights across the organization, a purpose-built business intelligence (BI) tool […]

Successfully conduct a proof of concept in Amazon Redshift

Amazon Redshift is a fast, scalable, and fully managed cloud data warehouse that allows you to process and run your complex SQL analytics workloads on structured and semi-structured data. In this post, we discuss how to successfully conduct a proof of concept in Amazon Redshift by going through the main stages of the process, available tools that accelerate implementation, and common use cases.

Announcing the AWS Well-Architected Data Analytics Lens

We are delighted to announce the latest version of the Data Analytics Lens, an AWS Well-Architected whitepaper. AWS Well-Architected provides a consistent approach to evaluate architectures and implement scalable designs. The AWS Well-Architected Framework is based on six pillars—operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability. With the framework, cloud architects, system architects, engineers, and developers can build secure, high-performance, resilient, and efficient infrastructure for their applications and workloads.

Run Trino queries 2.7 times faster with Amazon EMR 6.15.0

In this post, we compare Amazon EMR 6.15.0 with open source Trino 426 and show that TPC-DS queries ran up to 2.7 times faster on Amazon EMR 6.15.0 Trino 426 than on open source Trino 426. We then explain a few of the AWS-developed performance optimizations that contribute to these results.

Amazon Managed Service for Apache Flink now supports Apache Flink version 1.18

Apache Flink is an open source distributed processing engine, offering powerful programming interfaces for both stream and batch processing, with first-class support for stateful processing and event time semantics. Apache Flink supports multiple programming languages (Java, Python, Scala, and SQL) and multiple APIs with different levels of abstraction, which can be used interchangeably in the same […]

Measure performance of AWS Glue Data Quality for ETL pipelines

In this post, we provide benchmark results of running increasingly complex data quality rulesets over a predefined test dataset. As part of the results, we show how AWS Glue Data Quality provides information about the runtime of extract, transform, and load (ETL) jobs, the resources measured in terms of data processing units (DPUs), and how you can track the cost of running AWS Glue Data Quality for ETL pipelines by defining custom cost reporting in AWS Cost Explorer.

Build a pseudonymization service on AWS to protect sensitive data: Part 2

Part 1 of this two-part series described how to build a pseudonymization service that converts plain text data attributes into a pseudonym or vice versa. A centralized pseudonymization service provides a unique and universally recognized architecture for generating pseudonyms. Consequently, an organization can achieve a standard process to handle sensitive data across all platforms. Additionally, […]

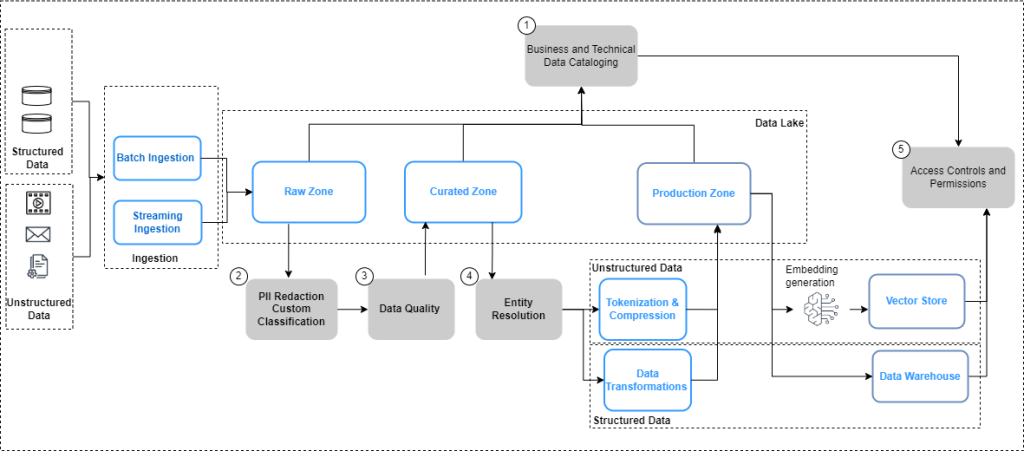

Data governance in the age of generative AI

Data is your generative AI differentiator, and a successful generative AI implementation depends on a robust data strategy incorporating a comprehensive data governance approach. Working with large language models (LLMs) for enterprise use cases requires the implementation of quality and privacy considerations to drive responsible AI. However, enterprise data generated from siloed sources combined with […]