AWS Big Data Blog

Category: Advanced (300)

Using Amazon EMR DeltaStreamer to stream data to multiple Apache Hudi tables

In this post, we show you how to implement real-time data ingestion from multiple Kafka topics to Apache Hudi tables using Amazon EMR. This solution streamlines data ingestion by processing multiple Amazon Managed Streaming for Apache Kafka (Amazon MSK) topics in parallel while providing data quality and scalability through change data capture (CDC) and Apache Hudi.

Access Snowflake Horizon Catalog data using catalog federation in the AWS Glue Data Catalog

AWS has introduced a new catalog federation feature that enables direct access to Snowflake Horizon Catalog data through AWS Glue Data Catalog. This integration allows organizations to discover and query data in Iceberg format while maintaining security through AWS Lake Formation. This post provides a step-by-step guide to establishing this integration, including configuring Snowflake Horizon Catalog, setting up authentication, creating necessary IAM roles, and implementing AWS Lake Formation permissions. Learn how to enable cross-platform analytics while maintaining robust security and governance across your data environment.

Access Databricks Unity Catalog data using catalog federation in the AWS Glue Data Catalog

AWS has launched the catalog federation capability, enabling direct access to Apache Iceberg tables managed in Databricks Unity Catalog through the AWS Glue Data Catalog. With this integration, you can discover and query Unity Catalog data in Iceberg format using an Iceberg REST API endpoint, while maintaining granular access controls through AWS Lake Formation. In this post, we demonstrate how to set up catalog federation between the Glue Data Catalog and Databricks Unity Catalog, enabling data querying using AWS analytics services.

Use Amazon SageMaker custom tags for project resource governance and cost tracking

Amazon SageMaker announced a new feature that you can use to add custom tags to resources created through an Amazon SageMaker Unified Studio project. This helps you enforce tagging standards that conform to your organization’s service control policies (SCPs) and supports cost tracking and reporting for resources created across the organization. In this post, we look at use cases for custom tags and how to use the AWS Command Line Interface (AWS CLI) to add tags to project resources.

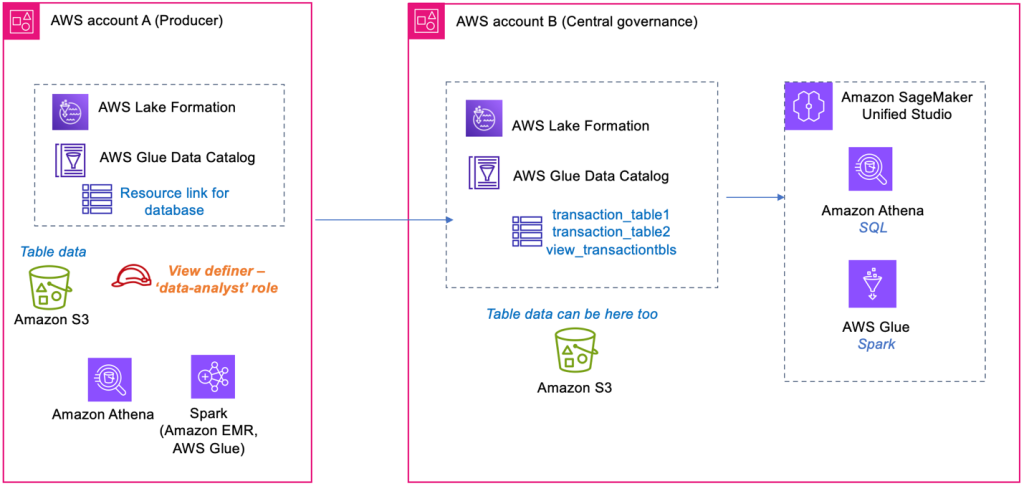

Create AWS Glue Data Catalog views using cross-account definer roles

In this post, we demonstrate how to use cross-account IAM definer roles with AWS Glue Data Catalog views. We show how data owner accounts can create and manage views in a central governance account while maintaining security and control over their data assets.

Simplify multi-warehouse data governance with Amazon Redshift federated permissions

Amazon Redshift federated permissions simplify permissions management across multiple Redshift warehouses. In this post, we show you how to define data permissions one time and automatically enforce them across warehouses in your AWS account, removing the need to re-create security policies in each warehouse.

Unifying governance and metadata across Amazon SageMaker Unified Studio and Atlan

In this post, we show you how to unify governance and metadata across Amazon SageMaker Unified Studio and Atlan through a comprehensive bidirectional integration. You’ll learn how to deploy the necessary AWS infrastructure, configure secure connections, and set up automated synchronization to maintain consistent metadata across both platforms.

Modernize Apache Spark workflows using Spark Connect on Amazon EMR on Amazon EC2

In this post, we demonstrate how to implement Apache Spark Connect on Amazon EMR on Amazon Elastic Compute Cloud (Amazon EC2) to build decoupled data processing applications. We show how to set up and configure Spark Connect securely, so you can develop and test Spark applications locally while executing them on remote Amazon EMR clusters.

Create and update Apache Iceberg tables with partitions in the AWS Glue Data Catalog using the AWS SDK and AWS CloudFormation

In this post, we show how to create and update Iceberg tables with partitions in the Data Catalog using the AWS SDK and AWS CloudFormation.

IPv6 addressing with Amazon Redshift

As the gradual transition from IPv4 to IPv6 continues, AWS is expanding its support for dual-stack networking across its service portfolio. In this post, we show how you can migrate your Amazon Redshift Serverless workgroup from IPv4-only to dual-stack mode, so you can make your data warehouse future-ready.