AWS Partner Network (APN) Blog

Unlock Advanced AI Control with Kong AI Gateway and Amazon Bedrock

By Mohamed Salah, Senior Solutions Architect – AWS

By Amir Tarek, Senior Cloud Operations Architect – AWS

By Anuj Sharma, Principal Solutions Architect – AWS

By Michel Zwarts, Partner Sales Technical Director – Kong


Generative AI is changing how businesses work in amazing ways. Many companies have tested AI and seen great results. Now, they’re ready to use AI in their day-to-day operations. But moving from small pilots to big projects isn’t easy. It comes with new challenges that need smart solutions. Successful pilot projects lead to questions about how to effectively scale, integrate with various large language models (LLMs), and maintain oversight. In particular, teams face challenges with unpredictable token usage that makes cost control difficult, manual integration of multiple LLMs that slows development, and limited observability into model interactions that hampers optimization and governance. These challenges can stall progress just as companies are ready to innovate with AI at scale.

A critical challenge that emerges at enterprise scale is the need for centralized AI governance and AI service consumer management. Organizations require a structured approach that moves away from direct, point-to-point accounts with individual LLM providers toward a unified corporate-level management system. This centralized approach empowers organizations with full control over AI capabilities throughout the enterprise. It helps prevent account sprawl while enabling enterprise Identity Management to effectively manage user and consumer entitlements. The integration of Amazon Bedrock with Kong AI Gateway addresses these challenges through three key areas: cost management and governance, intelligent multi-model orchestration, and comprehensive operational visibility.

Amazon Bedrock – A Secure Foundation for GenAI

Amazon Bedrock provides a secure, fully managed foundation for building generative AI applications. It offers developers access to leading foundation models from multiple AI providers (such as Anthropic Claude, Amazon Nova, and Amazon Titan) through a unified API. With Amazon Bedrock, teams can experiment and deploy using optimized models without needing to manage the underlying model infrastructure. The service delivers enterprise-grade security, reliability, and scalability out of the box, so builders can focus on application logic and creativity rather than provisioning Graphics Processing Units (GPUs) or maintaining model servers. By abstracting away the complexity of hosting and integrating different Foundation Models (FMs), Amazon Bedrock helps organizations accelerate their AI initiatives on a solid system aligned with security, privacy, and responsible AI development.

However, running generative AI applications in production at scale requires more than just access to foundation models. Teams also need to enforce cost controls, intelligently utilize multiple models for different tasks, and gain visibility into how the models are performing. This is where Kong AI Gateway complements Amazon Bedrock to provide full control over multi-LLM applications.

Kong AI Gateway – Extending Control and Governance

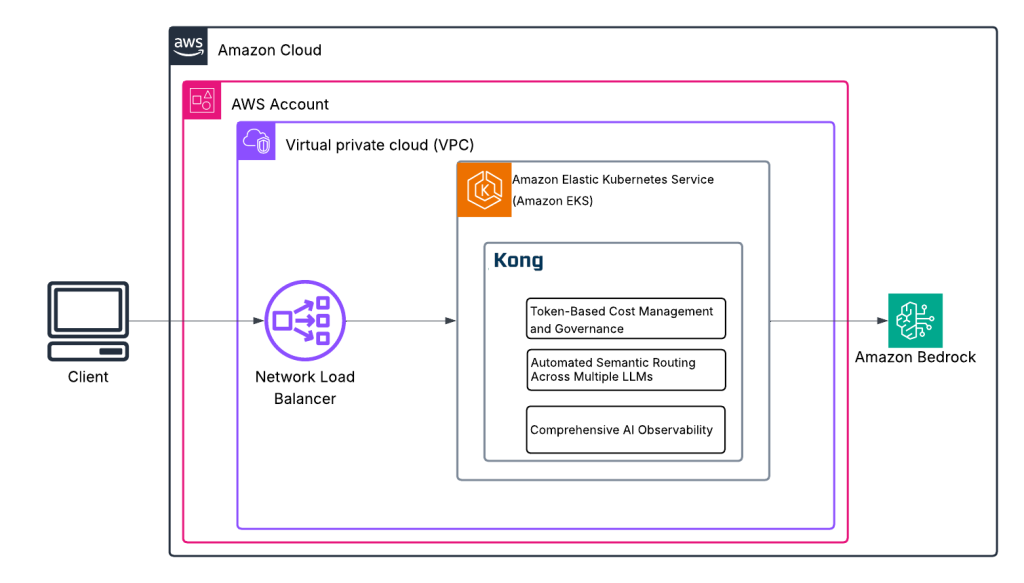

Figure 1: Kong AI Gateway Architecture

Kong AI Gateway is an AI-aware gateway that integrates with Amazon Bedrock to address operational challenges in production AI systems. As shown in Figure 1, it sits between generative AI applications and the foundation models, acting as a high-performance control plane that secures, governs, and optimizes AI traffic. By deploying Kong AI Gateway in front of Amazon Bedrock’s endpoints, organizations gain fine-grained control over how different LLMs are used, without having to modify the application itself. This layered approach lets teams scale up their generative AI use cases while maintaining oversight of costs, performance, and compliance.

Beyond the core features detailed in this article, Kong AI Gateway offers further capabilities that address broader enterprise AI governance and operational requirements, including:

- Semantic caching

- Prompt compression

- AI model failover and retry mechanisms

- Automated RAG injection

- Content safety guardrails

- PII sanitization

- Prompt engineering templates

- Request/response transformations

- MCP traffic gateway support

- Streaming capabilities

- Secrets management

- Advanced analytics

- Audit logging

- Custom plugin extensibility

Key Capabilities

Token-Based Cost Management and Governance

One of the key capabilities Kong AI Gateway introduces is token-based throttling for cost management. Rather than simply limiting requests, Kong AI Gateway can enforce precise usage quotas based on tokens – the fundamental unit of work for LLM APIs. Teams can define limits on prompt tokens, response tokens, or total tokens consumed, tailored per user, application, or time period. This means you can help prevent runaway usage by a single user or feature, avoiding unexpected AI bills. For example, an enterprise could allow a maximum of N tokens per day for a given department’s chatbot integration. If that limit is reached, the gateway will gracefully throttle further calls. This level of control enables predictable costs and makes budgeting for generative AI services much more reliable. In practice, setting token-based policies via an AI gateway helps prevent the cost overruns that might otherwise occur as usage scales up uncontrolled.
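To make the idea of a token quota concrete, here is a minimal Python sketch of a fixed-window token budget per consumer. It is an illustration of the general technique only, not Kong AI Gateway’s implementation or configuration format. The class name `TokenBudget`, the consumer name `support-chatbot`, and the limit of 1,000 tokens per day are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBudget:
    """Fixed-window token quota per consumer (illustrative only)."""

    limit: int            # maximum total tokens allowed per window
    window_seconds: int   # window length, e.g. 86_400 for one day
    # consumer name -> (window index, tokens used in that window)
    _usage: dict = field(default_factory=dict, init=False, repr=False)

    def allow(self, consumer: str, tokens: int, now: float) -> bool:
        """Record the tokens if the budget permits; return False to throttle."""
        window = int(now // self.window_seconds)
        seen_window, used = self._usage.get(consumer, (window, 0))
        if seen_window != window:
            used = 0  # a new window has started, so the counter resets
        if used + tokens > self.limit:
            self._usage[consumer] = (window, used)
            return False
        self._usage[consumer] = (window, used + tokens)
        return True


# Hypothetical department chatbot with a budget of 1,000 tokens per day.
budget = TokenBudget(limit=1000, window_seconds=86_400)
first = budget.allow("support-chatbot", 600, now=0.0)          # within budget
second = budget.allow("support-chatbot", 500, now=10.0)        # would exceed it
next_day = budget.allow("support-chatbot", 500, now=86_400.0)  # counter has reset
```

In this sketch a rejected call does not consume any budget, so a smaller request later in the same window can still succeed. A production gateway would typically also distinguish prompt tokens from response tokens and share counters across gateway instances.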

Automated Semantic Routing Across Multiple LLMs

Another powerful capability is automated semantic routing across multiple LLMs. In many production systems, no single model is best for every task: one model might excel at summarization while another is better at open-ended creative generation. Kong AI Gateway addresses the complexity of multi-LLM integration by intelligently routing each request to the most appropriate model available through Amazon Bedrock. It can dynamically dispatch a prompt to different foundation models based on the prompt’s content or intent, or according to business rules and context. This semantic routing happens in real time without developers needing to hard-code model selection logic. For instance, a short factual query could be directed to a smaller, faster model, while a complex analytical question goes to a more capable model. Kong AI Gateway’s multi-LLM capability uses policies (for example, routing by prompt semantics, model latency, or usage patterns) to load-balance and orchestrate calls to Bedrock’s models. This accelerates development, since developers can call one endpoint and let the gateway choose the model, and it improves performance and user experience by drawing on each model’s strengths automatically.
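The routing idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not how Kong AI Gateway performs semantic routing: it substitutes a bag-of-words cosine similarity for a real embedding model, and the route labels `small-fast-model` and `advanced-model`, along with their descriptions, are hypothetical placeholders rather than Amazon Bedrock model identifiers.

```python
import math
import re
from collections import Counter

# Hypothetical route table: target label -> description of prompts it should get.
ROUTES = {
    "small-fast-model": "short factual lookup question definition date capital",
    "advanced-model": "analyze compare explain reasoning trade-offs strategy report",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def route(prompt: str, default: str = "small-fast-model") -> str:
    """Return the route whose description is most similar to the prompt."""
    query = vectorize(prompt)
    scores = {name: cosine(query, vectorize(desc)) for name, desc in ROUTES.items()}
    best = max(scores, key=scores.get)
    # Fall back to the default route when nothing matches at all.
    return best if scores[best] > 0 else default


factual = route("What is the capital of France?")
analytical = route("Compare the trade-offs of two pricing plans and explain your reasoning")
```

The application calls a single `route` function and never names a model directly, which mirrors the point above about keeping model selection logic out of application code.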

Comprehensive AI Observability

The third key capability is AI observability. Kong AI Gateway provides real-time analytics on prompt traffic and model behavior that are otherwise difficult to obtain from black-box model APIs alone. As it brokers calls to Amazon Bedrock, the gateway gathers detailed metrics on each interaction: how many requests go to each model, latency and error rates, and token counts for prompts and responses. These metrics are invaluable for understanding usage patterns and diagnosing issues. Kong AI Gateway makes it straightforward to monitor them by integrating with industry-standard monitoring solutions. It exposes LLM-specific metrics through OpenTelemetry and Prometheus endpoints, so teams can feed AI workload data into their existing dashboards in systems such as Amazon CloudWatch. With full observability into the generative AI stack, organizations can optimize prompt designs, detect anomalies or drifts in model output, and enforce governance policies (for example, spotting whether certain content triggers the safety filters). This transparency turns what used to be a ‘blind spot’ into a well-instrumented part of the application, enabling continuous improvement of AI outcomes and user experiences.
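As a rough illustration of the kind of per-model counters described above, the following Python sketch accumulates request, error, token, and latency totals and renders them in the Prometheus text exposition format. The metric names (`llm_requests_total` and so on) and the model label `model-a` are assumptions made for this example; they are not the metric names Kong AI Gateway actually exports.

```python
from collections import defaultdict


class LLMMetrics:
    """Accumulates per-model counters (metric names are hypothetical)."""

    def __init__(self) -> None:
        self.requests = defaultdict(int)
        self.errors = defaultdict(int)
        self.tokens = defaultdict(int)         # keyed by (model, token type)
        self.latency_sum = defaultdict(float)  # total seconds per model

    def record(self, model: str, prompt_tokens: int, completion_tokens: int,
               latency_s: float, ok: bool = True) -> None:
        """Record one brokered model call."""
        self.requests[model] += 1
        if not ok:
            self.errors[model] += 1
        self.tokens[(model, "prompt")] += prompt_tokens
        self.tokens[(model, "completion")] += completion_tokens
        self.latency_sum[model] += latency_s

    def render(self) -> str:
        """Render the counters as Prometheus-style text, one sample per line."""
        lines = []
        for model in sorted(self.requests):
            lines.append(f'llm_requests_total{{model="{model}"}} {self.requests[model]}')
            lines.append(f'llm_errors_total{{model="{model}"}} {self.errors[model]}')
            lines.append(f'llm_latency_seconds_sum{{model="{model}"}} {self.latency_sum[model]}')
        for (model, kind), count in sorted(self.tokens.items()):
            lines.append(f'llm_tokens_total{{model="{model}",type="{kind}"}} {count}')
        return "\n".join(lines) + "\n"


metrics = LLMMetrics()
metrics.record("model-a", prompt_tokens=120, completion_tokens=80, latency_s=0.5)
metrics.record("model-a", prompt_tokens=30, completion_tokens=0, latency_s=1.0, ok=False)
exposition = metrics.render()
```

Dividing `llm_latency_seconds_sum` by `llm_requests_total` gives the average latency per model, and the token counters can feed the same cost dashboards that track token-based quotas.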

Integration Benefits and Business Impact

The integration of Amazon Bedrock and Kong AI Gateway enables organizations to develop and deploy production-ready multi-LLM applications with systematic control mechanisms. Amazon Bedrock delivers validated foundation models and managed infrastructure, while Kong AI Gateway implements essential production controls through automated token usage monitoring, intelligent request routing, real-time performance metrics, and compliance enforcement mechanisms.

Developers can implement and test foundation models through standardized APIs, while built-in scaling controls maintain predetermined usage thresholds. The system’s automated model selection operates on defined performance criteria, reducing development overhead through pre-built integration components. Engineering teams benefit from minimized custom integration requirements, while architecture teams gain standardized model management capabilities. Business units receive transparent cost allocation and usage reporting, supported by comprehensive audit trails.

Practical applications span a range of business needs, from customer service systems with contextual routing to content management systems with automated generation capabilities. Analytics tools and document processing systems benefit from distributed workload management. Each implementation draws on the structured control and oversight provided by the integrated solution.

Conclusion

The integration of Amazon Bedrock and Kong AI Gateway provides organizations with a structured approach to scaling generative AI implementations. The solution addresses three key areas: cost management through token-based monitoring and predictable resource allocation; technical integration via standardized APIs and automated model selection; and operational visibility through real-time analytics and compliance reporting.

Organizations can begin implementation by reviewing technical documentation through the AWS Partner Network and initiating a controlled proof-of-concept deployment. This methodical approach facilitates successful implementation while maintaining operational control and cost management. The solution provides the framework to scale AI implementations across business units while maintaining consistent governance and performance standards.


Kong – AWS Partner Spotlight

Kong provides the foundation that enables any company to securely adopt AI and become an API-first company, speeding up time to market, creating new business opportunities, and delivering superior products and services. Kong builds, sells, and supports Kong, the world’s most popular open source API gateway and microservice management platform.